Today we're addressing one of the most frequently asked questions we see about PC gaming: how many frames per second do you need? Should you be running at the same frame rate as your monitor's maximum refresh rate, say 60 FPS on a 60 Hz monitor, or is there a benefit to running games at a much higher frame rate than your monitor can display, like, say, 500 FPS?

To answer this question we have to talk a bit about how a GPU and display work together to send frames into your eyeballs, and how technologies like Vsync function.

But the bottom line is that running games at extremely high frame rates, well above your monitor's refresh rate, will lead to a more responsive game experience with lower perceived input latency. That's the answer to the question for those who don't want to wait until the end. Now let's talk about why.

Editor's Note: This feature was originally published on August 2, 2018. It's just as relevant and current today as it was then, so we've bumped it as part of our #ThrowbackThursday initiative.

Let's assume we have a monitor with a fixed refresh rate of 60 Hz. In other words, the monitor is updating its display every 1/60th of a second, or every 16.7ms. When running a game, there is no guarantee that the GPU is able to render every frame in exactly 16.7 milliseconds. Sometimes it might take 20ms, sometimes it might take 15ms, sometimes it might take 8ms. That's the varying nature of rendering a game on a GPU.
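The refresh interval quoted above is simply the reciprocal of the refresh rate. As a quick sanity check, here is a minimal Python sketch; the function name `refresh_interval_ms` is our own and purely illustrative.

```python
def refresh_interval_ms(refresh_hz):
    """Time between two display refreshes, in milliseconds."""
    return 1000.0 / refresh_hz

# Refresh intervals for a few common refresh rates.
for hz in (60, 144, 240):
    print(f"{hz} Hz -> {refresh_interval_ms(hz):.1f} ms per refresh")
# 60 Hz -> 16.7 ms per refresh
# 144 Hz -> 6.9 ms per refresh
# 240 Hz -> 4.2 ms per refresh
```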

With this varying render rate, there is a choice of how each rendered frame is passed to the monitor. The GPU can pass the new frame to the display as soon as it is completely rendered, commonly known as running the game with "Vsync" or vertical sync off, or it can wait until the display is ready to refresh before sending the new frame, known as "Vsync on".

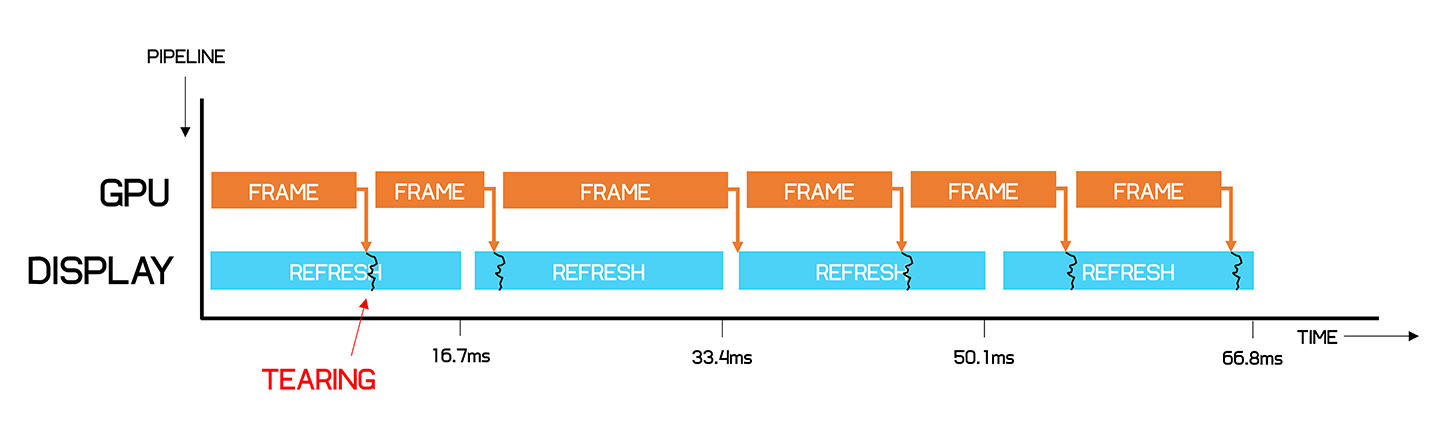

Vsync off

Using the first method, Vsync off, causes tearing. This is because a display cannot update the entire image instantaneously. Instead, it updates line by line, usually from the top of the display to the bottom. During this process, a new frame may become ready from the GPU, and as we're not using Vsync, the frame is sent to the display immediately. The result is that mid-way through a refresh, the monitor receives new data and updates the remainder of the lines on the display with it. You're then left with an image where the top part of the screen is from the previous frame and the bottom part is from the new, freshly available frame.

Tearing

Depending on the content being displayed, this split between new and old frames in the one refresh presents itself as a tear, or visible line between the old and new frames. Usually it's most noticeable in fast moving scenes where there is a large difference between one frame and the next.
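To get a feel for where a tear lands, here is a rough Python sketch. It assumes the display scans out rows evenly over the whole refresh interval (real panels also spend a little time in vertical blanking, which we ignore), and the function name `tear_line` and the 1080-row panel are our own illustrative choices.

```python
def tear_line(frame_ready_ms, refresh_hz=60.0, rows=1080):
    """Approximate row at which scanout switches to the new frame.

    Simplifying assumption: rows are drawn top to bottom at a constant
    rate across the full refresh interval, with no blanking period.
    """
    interval = 1000.0 / refresh_hz
    # Fraction of the current refresh already drawn when the frame arrives.
    progress = (frame_ready_ms % interval) / interval
    return int(progress * rows)

# A frame that arrives 5 ms into a 60 Hz refresh tears roughly 30% of
# the way down the screen; one that arrives later tears further down.
print(tear_line(5.0))
print(tear_line(12.0))
```

Because render times vary from frame to frame, the tear moves up and down the screen rather than sitting in one place.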

While Vsync off does lead to tearing, it has the advantage of sending a frame to the display as soon as it is finished being rendered, for low latency between the GPU and display. Keep that in mind for later.

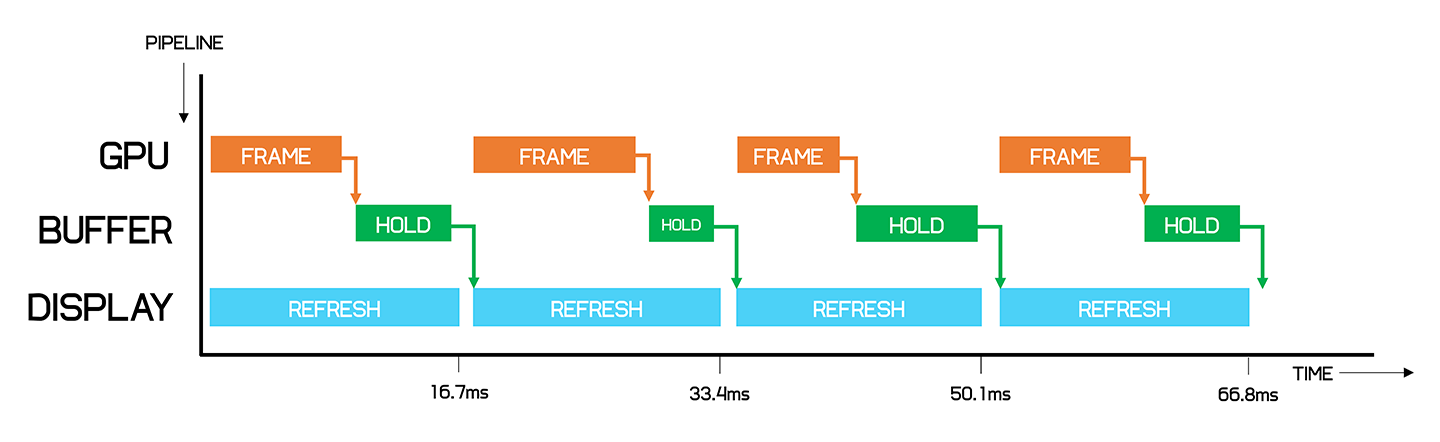

Vsync on

The alternate way to display an image is with Vsync on. Here, instead of the GPU sending the new frame immediately to the display, it shuffles each rendered frame into a buffer. The first buffer is used to store the frame being worked on currently, and the second buffer is used to store the frame the display is currently showing. At no point during the refresh is the second buffer updated, so the display only shows data from one fully rendered frame, and as a result you don't get tearing from an update mid-way through the refresh.

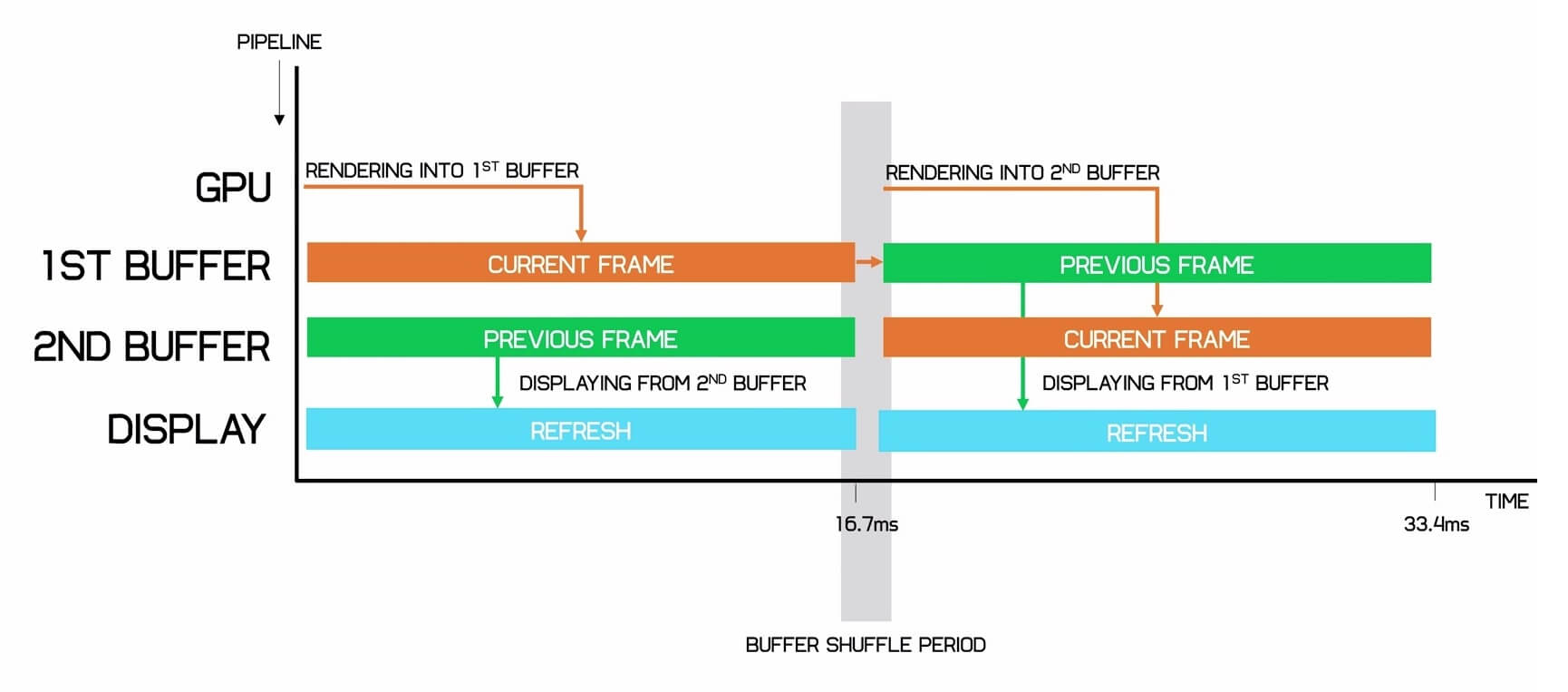

Vsync on, a closer look

The only point at which the second buffer is updated is between the refreshes. To ensure that happens, the GPU waits after it completes rendering a frame, until the display is about to refresh. It then shuffles the buffers, begins rendering a new frame, and the process repeats. Sometimes the process might involve multiple buffers before a frame reaches the display but this is the general gist of how Vsync functions.

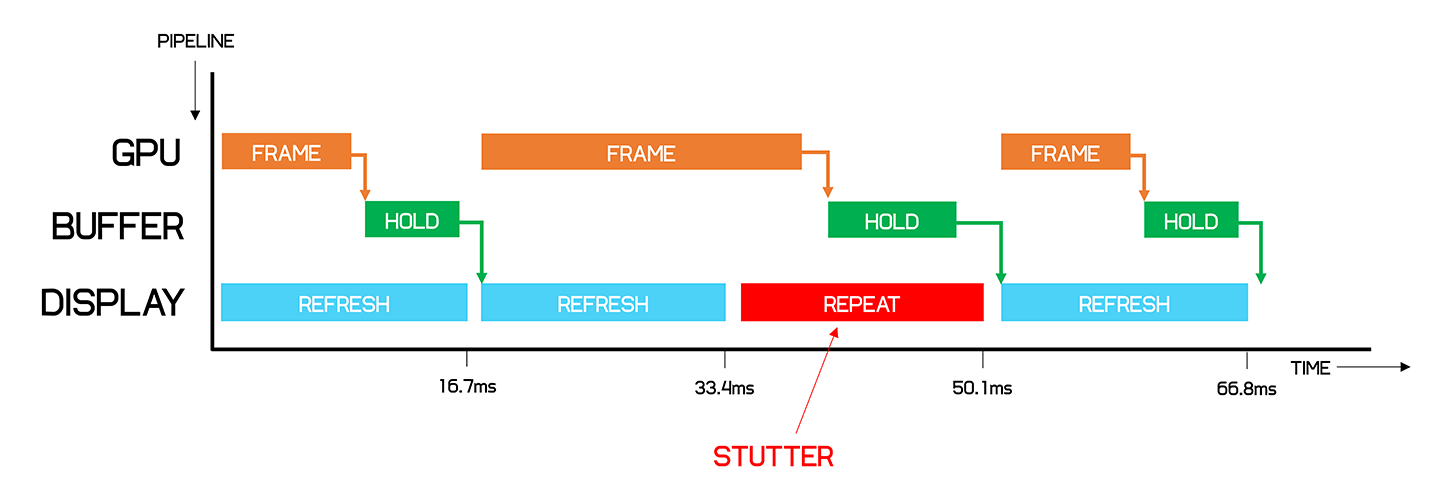

When your GPU is too slow to render a frame... stuttering happens

There are two problems with Vsync. First, if your GPU render rate is too slow to keep up with the display's refresh rate - say it's only capable of rendering at 40 FPS on a 60 Hz display - then the GPU won't render a full frame in time to meet the start of the display's refresh, so a frame is repeated. This causes stuttering as some frames are displayed only once, while others are displayed twice.
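The uneven pacing is easy to see with a small Python sketch. This is a simplified model, not how any particular driver works: we assume the GPU finishes frames at a perfectly steady 40 FPS and that each refresh shows the newest finished frame. The function name `refreshes_per_frame` is our own.

```python
from collections import Counter

def refreshes_per_frame(fps, refresh_hz, num_refreshes):
    """How many consecutive refreshes each rendered frame stays on screen.

    Assumes frame k is ready at k / fps seconds and refresh n happens at
    n / refresh_hz seconds, so refresh n shows frame floor(n * fps / refresh_hz).
    Integer arithmetic avoids floating-point rounding at the boundaries.
    """
    shown = [n * fps // refresh_hz for n in range(num_refreshes)]
    counts = Counter(shown)
    return [counts[frame] for frame in sorted(counts)]

print(refreshes_per_frame(40, 60, 12))
# [2, 1, 2, 1, 2, 1, 2, 1]
```

Frames alternate between staying on screen for two refreshes (33.3ms) and one refresh (16.7ms), which is what we perceive as stutter.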

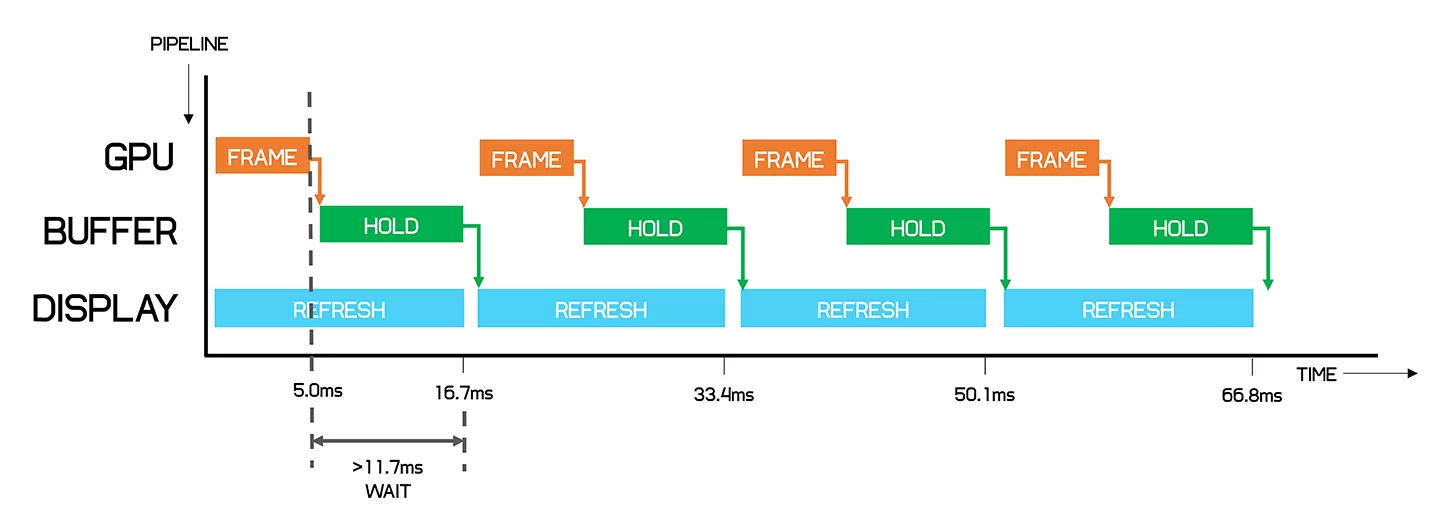

Vsync on: 60Hz display, 200 FPS

The second problem occurs when your GPU is very fast and is easily able to render a frame within the refresh rate interval. Let's say, it can render at 200 FPS, producing a new frame every 5ms, except you're using a 60 Hz display with a 16.7ms refresh window.

With Vsync on, your GPU will complete the next frame to be displayed in 5ms, then it will wait for 11.7 ms before sending the frame to the second buffer to be displayed on the monitor and starting on the next frame. This is why with Vsync on, the highest frame rate you'll get matches the refresh rate of your monitor, as the GPU is essentially 'locked' into rendering no faster than the refresh rate.
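The idle time in this example can be worked out directly. The sketch below follows the simple model described above, where the GPU finishes a frame and then waits for the next refresh; `vsync_wait_ms` is a name we made up for illustration.

```python
def vsync_wait_ms(render_ms, refresh_hz=60.0):
    """Idle time between finishing a frame and the next display refresh.

    Assumes rendering starts exactly at a refresh, as in the simple
    double-buffered model described in the text.
    """
    interval = 1000.0 / refresh_hz
    # Distance from render_ms up to the next multiple of the interval.
    return (-render_ms) % interval

print(f"{vsync_wait_ms(5.0):.1f} ms")   # 11.7 ms: the 200 FPS GPU from the example
print(f"{vsync_wait_ms(25.0):.1f} ms")  # 8.3 ms: a 40 FPS GPU misses one refresh first
```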

Now it's at this point that there's a lot of confusion.

We often hear things like "locking the GPU to my monitor's refresh using Vsync is great, because if it renders faster than the refresh rate, those frames are wasted because the monitor can't show them, and all I get is tearing". A lot of people point to power savings from using Vsync; your GPU doesn't need to work as hard, there's no benefit to running at frame rates higher than the monitor's refresh rate, so run at a locked FPS and save some power.

We can see why people would come to this conclusion and there are some bits of truth there, but it's not accurate in general. And the reason for this is that you're not factoring in the time at which inputs are processed, and how long it takes for those inputs to materialize on the display.

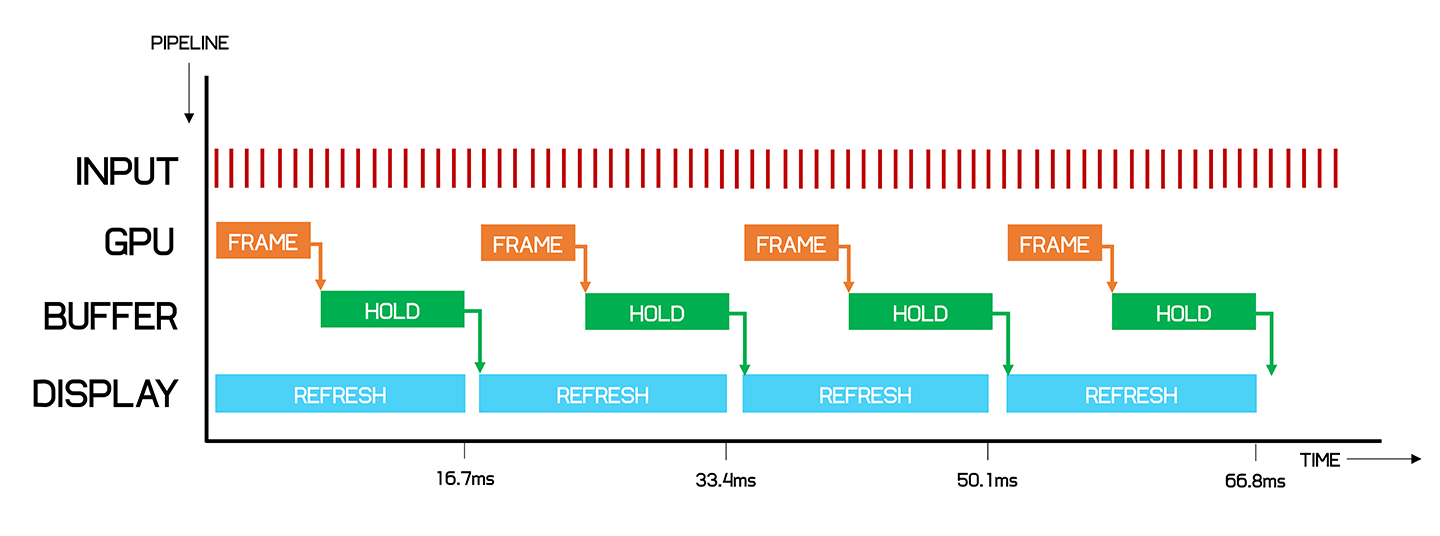

Vsync on including input

To explain why this is the case, let's look at the Vsync on diagram, but overlay the diagram with the input from your mouse and keyboard, which is typically gathered every 1ms. Let's also use the same example where we have a GPU capable of rendering at 200 FPS with a 60 Hz display.

In this simplified explanation, with Vsync and a simple buffer system, the GPU begins rendering a frame corresponding to your mouse input as soon as it receives that input, at time 0. It then takes 5ms to render the frame, and it waits a further 11.7ms before sending it to the display buffer.

The display then takes some time to receive the frame and physically update the display line by line with this information.

Even in the best case scenario, we're looking at a delay of at least 16.7ms between your input and when the display can begin showing the results of that input to you.

When factoring in display input lag, CPU processing time and so forth, the latency between input and display refresh could easily exceed 50ms.

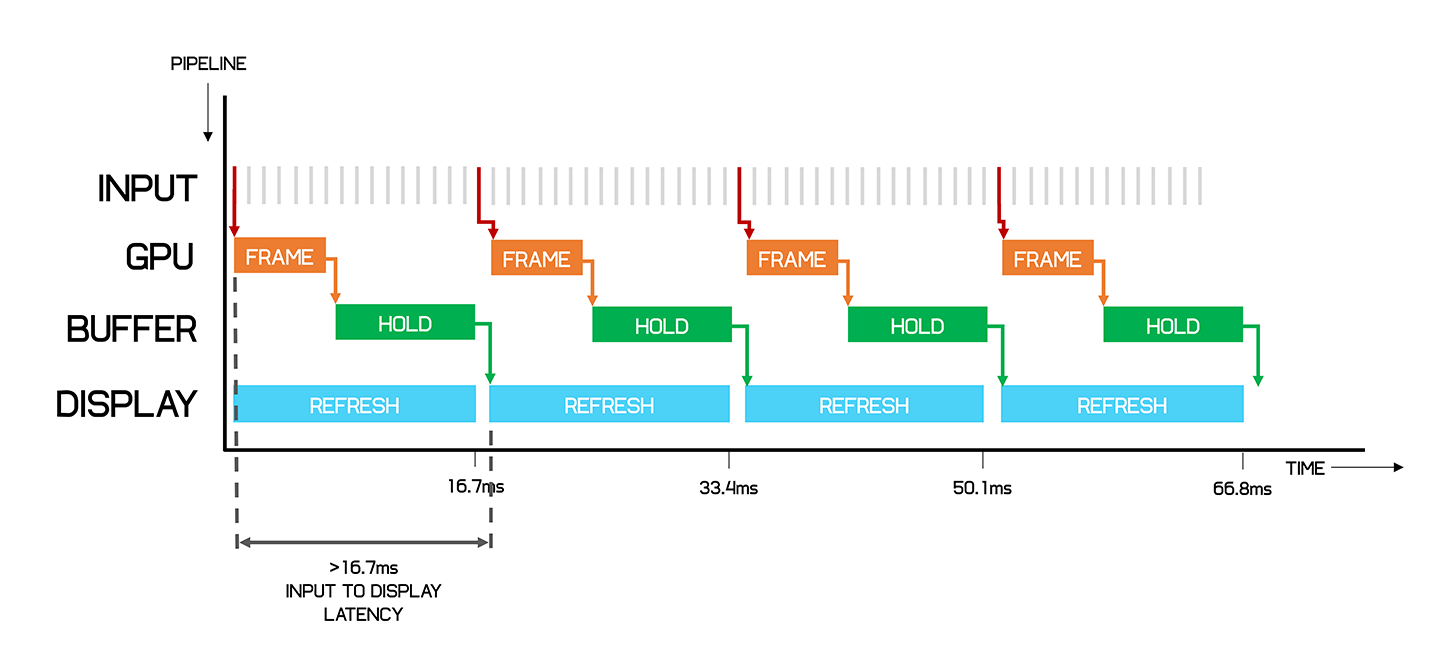

Vsync off including input, 60Hz display, 200 FPS

Now let's look at the Vsync off diagram. The GPU continuously renders regardless of when the display is refreshing, taking 5ms to turn your input into a complete frame. The display can then begin displaying that new frame immediately, although it might show only part of that frame. The result is that the latency between your input to the game and when the display can begin showing the results of that input drops from 16.7ms to just 5ms. And there won't be any additional buffers in real world implementations; it's as fast as that, plus your monitor's input lag.

And that's where you get the advantage. In this example, running at 200 FPS with Vsync off on a 60 Hz monitor reduces input latency to 5ms, whereas with Vsync on, that latency is at least 16.7ms, if not more.
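The comparison can be written down as a short Python sketch. It only captures the best-case figures from the simplified model in this article (render time plus any wait for the next refresh) and ignores CPU time, extra buffering and the monitor's own input lag. The function name `best_case_latency_ms` is ours.

```python
import math

def best_case_latency_ms(fps, refresh_hz, vsync):
    """Best-case delay from input to the display starting to show it."""
    render = 1000.0 / fps
    if not vsync:
        # Vsync off: the frame goes to the display as soon as it is done.
        return render
    # Vsync on: the finished frame is held until the next refresh boundary.
    interval = 1000.0 / refresh_hz
    return math.ceil(render / interval) * interval

for fps, hz in ((200, 60), (200, 144), (400, 60)):
    on = best_case_latency_ms(fps, hz, vsync=True)
    off = best_case_latency_ms(fps, hz, vsync=False)
    print(f"{fps} FPS @ {hz} Hz: Vsync on {on:.1f} ms, Vsync off {off:.1f} ms")
# 200 FPS @ 60 Hz: Vsync on 16.7 ms, Vsync off 5.0 ms
# 200 FPS @ 144 Hz: Vsync on 6.9 ms, Vsync off 5.0 ms
# 400 FPS @ 60 Hz: Vsync on 16.7 ms, Vsync off 2.5 ms
```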

Even though the display is not able to show all 200 frames per second in their entirety, what the display does show every 1/60th of a second is produced from an input much closer in time to that frame.

This phenomenon, of course, also applies with high refresh monitors. At 144 Hz, for example, you will be able to see many more frames each second so you'll get a smoother and more responsive experience overall. But running at 200 FPS with Vsync off rather than 144 FPS with Vsync on will still give you a difference between 5ms and upwards of 7ms of input latency.

Now when we're talking about millisecond differences, you're probably wondering if you can actually notice this difference in games.

Depending on the kind of game you're playing, the difference can be anything from very noticeable to no difference whatsoever. A fast paced game like CS:GO running at 400 FPS on a 60 Hz monitor, with input latency at best around 2.5ms, will feel significantly more responsive to your mouse movements than if you were running the same game at 60 FPS with 16.7ms of latency (or more).

In both cases the display is only showing you a new frame 60 times a second, so it won't feel as smooth as on a 144 Hz or 240 Hz display. But the difference in input latency is enormous; running at 400 FPS allows you to get your inputs to the display nearly 7 times faster, if not more. Try it out for yourself and you're bound to feel the difference in responsiveness.

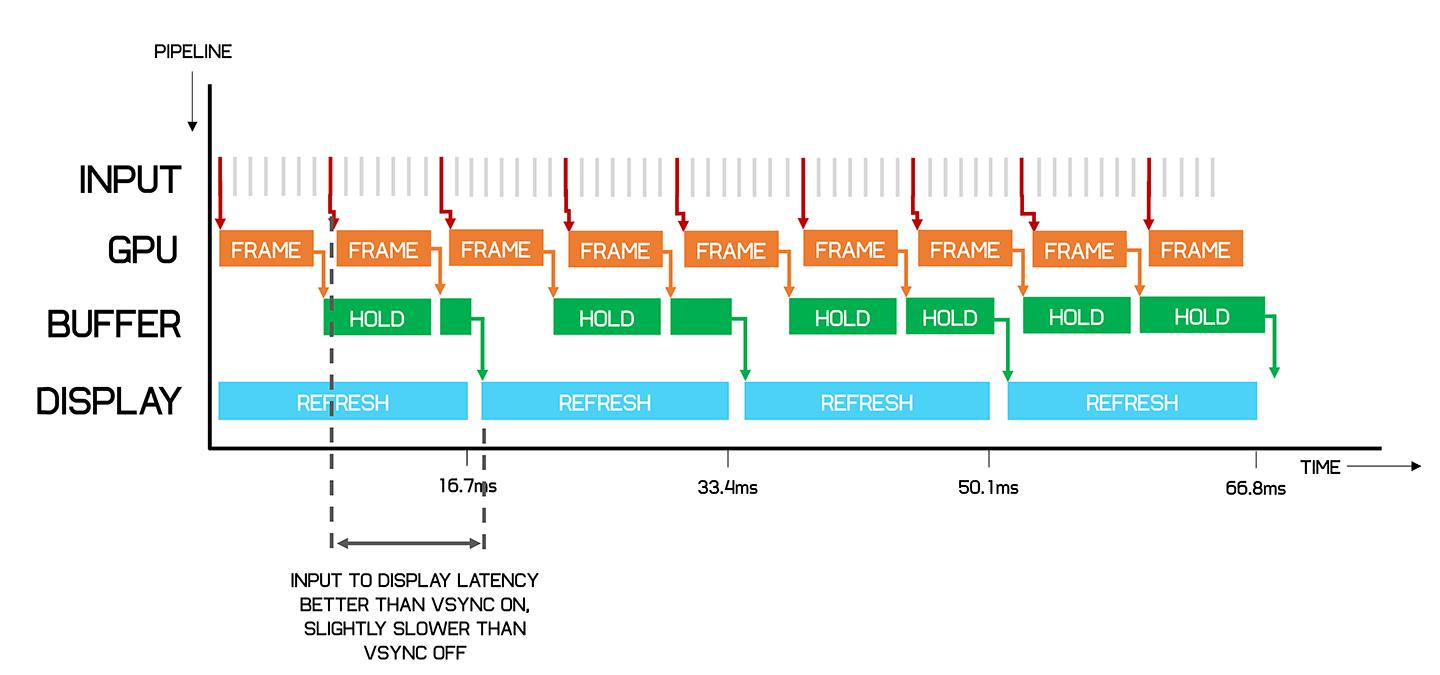

And we haven't just pulled this explanation out of nowhere. Nvidia knows the limitations of Vsync in terms of input latency, which is why it provides an alternative called Fast Sync (AMD's alternative is called Enhanced Sync). This display synchronization technique is like a combination of Vsync on and Vsync off, producing the best of both worlds.

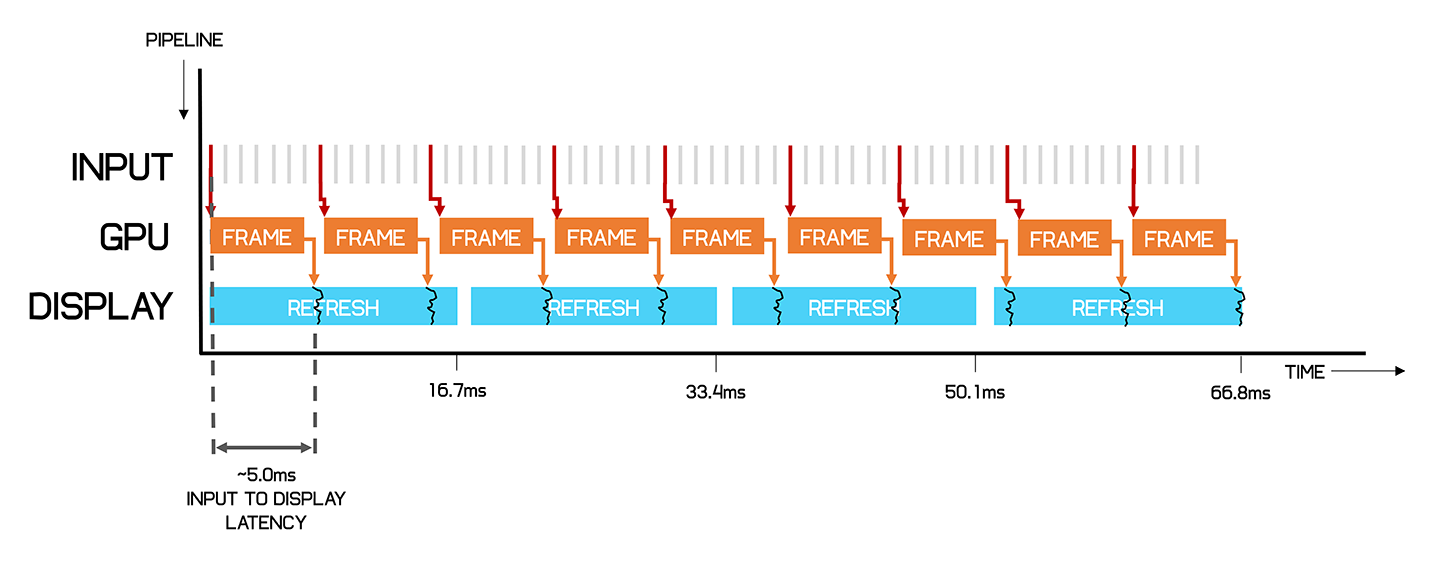

Fast Sync works by introducing an additional buffer into the Vsync on pipeline called the last rendered buffer. This allows the GPU to continue rendering new frames into the back buffer, transitioning into the last rendered buffer when complete. Then on a display refresh, the last rendered buffer is pushed to the front buffer that the display accesses.

Fast Sync / Enhanced Sync

The advantage this creates is that the GPU no longer waits after completing a frame for the display refresh to occur, as is the case with Vsync on. Instead, the GPU keeps rendering frames, so that when the display goes to access a frame at the beginning of the refresh period, that frame has been rendered more closely to the refresh window. This reduces input latency. However, unlike with Vsync off, Fast Sync delivers a completed frame to the display at the beginning of each refresh, rather than simply pushing the frame to the display immediately, and it's this technique that eliminates tearing.
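To put rough numbers on this, here is a Python sketch of the behaviour described above. It is our own simplified model, not Nvidia's or AMD's actual implementation: we assume the GPU renders back to back at a constant rate starting at time 0, that each frame reflects the input sampled when its rendering began, and that every refresh picks up the most recently completed frame. The function name `fast_sync_input_age_ms` is hypothetical.

```python
import math

def fast_sync_input_age_ms(fps, refresh_hz, refresh_index):
    """Age of the input behind the frame picked up at a given refresh.

    Returns None if no frame has finished rendering by that refresh.
    """
    render = 1000.0 / fps
    refresh_time = refresh_index * 1000.0 / refresh_hz
    # Number of frames fully rendered by the time of this refresh.
    completed = math.floor(refresh_time / render)
    if completed == 0:
        return None
    # The last completed frame started one render time before it finished.
    started = (completed - 1) * render
    return refresh_time - started

# 200 FPS on a 60 Hz display, first three refreshes after time 0.
for n in (1, 2, 3):
    print(f"refresh {n}: {fast_sync_input_age_ms(200, 60, n):.1f} ms")
# refresh 1: 6.7 ms
# refresh 2: 8.3 ms
# refresh 3: 5.0 ms
```

In this model the displayed frame is always between one and two render times old (5ms to 10ms here), compared with at least 16.7ms for Vsync on in the same example.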

Fast Sync is only functional when the frame rate is higher than the display's refresh rate, but it does succeed in providing a more responsive game experience without tearing.

Hopefully this explainer will have cleared up some of your questions about why running a game above your monitor's maximum refresh rate does deliver a more responsive game experience, and why the ability to run games at higher frame rates is always an advantage even if it might appear that your monitor can't take advantage of it.

One last note: we haven't discussed adaptive sync technologies like G-Sync and FreeSync here, and that's because we've been mostly talking about running games above the maximum refresh, where adaptive sync does not apply. There are a lot of different syncing methods out there, but adaptive sync is very different from the Vsync and Fast Sync techniques we've been talking about, and at least for this discussion, isn't really relevant.

Masthead credit: Photo by Jakob Owens