By now every self-respecting PC enthusiast and gamer will be aware of Nvidia's new GeForce RTX 20 series graphics cards: the RTX 2080 Ti, RTX 2080 and RTX 2070. It won't be long before we get performance numbers for these cards, too, which is exciting. But before these GPUs hit the desktop, I thought it would be an interesting thought experiment to discuss what the mobile line-up might look like.

It's all but certain that Nvidia will bring at least some of these GPUs to gaming laptops after the desktop launch, and a few tidbits we've heard from industry sources suggest we'll hear about GeForce 20 GPUs for laptops around November. That's about a month after the release of the RTX 2070, which would be in line with the 10 series, when laptop parts were announced about two months after the GTX 1070.

In this article I'll be breaking down the specs of the RTX cards announced so far, and giving my thoughts on how these GPUs might translate into laptop versions, what sort of specs we could see, and what features will be kept or omitted. We do not have any concrete information on these parts, so this isn't a rumor or leak story; we're simply speculating and opening a discussion on how Nvidia's laptop GPUs typically differ from their desktop counterparts.

No 2080 Ti

Let's start at the top end with the RTX 2080 Ti, because this one is pretty simple: it's very unlikely we'll see it in laptops, simply because its 250 watt TDP is too high for most laptop form factors. When creating a laptop GPU, power draw and TDP are the most important considerations, as laptops have much more limited cooling than desktops. This is especially true given that most gaming laptops these days are either slim, portable systems or mid-tier devices, rather than massively chunky beasts. And the slimmer you go, the less cooling capacity you have, which restricts the GPU TDPs a design can support.

With 10 series laptops, even the chunkiest beasts topped out at fully fledged GTX 1080s, as that was the highest-end laptop GPU Nvidia provided. The desktop GTX 1080 had a TDP of 180W, which came down to around 150W for laptops, whereas the GTX 1080 Ti had a 250W TDP and no laptop equivalent. So with the RTX 2080 Ti also packing a 250W TDP, we're almost certainly not going to see it in laptops.

RTX 2080... for chunky ones

The RTX 2080 is an interesting one. As with the GTX 1080 in the previous generation, I expect the RTX 2080 to be the top-end GPU available in a mobile form factor, and aside from Max-Q versions, which I'll talk about later, the fully fledged RTX 2080 will be restricted to larger laptop designs due to its higher TDP.

The RTX 2080's TDP is a bit of an unusual case. Nvidia has listed it as 215W for the desktop, up from 180W for the GTX 1080. But there's been some talk that part of this TDP is allocated to VirtualLink, the new USB-C connector spec designed for VR headsets. Supporting this connector reportedly adds around 30W to the graphics card's TDP requirement, because VirtualLink supplies power directly to the VR headset.

It remains to be seen whether Nvidia will leave VirtualLink enabled for laptop GPUs, but I'd imagine using a VR headset with a laptop is more of a niche use case than VR with a desktop. In any case, I'd assume laptop vendors could choose not to support VirtualLink and therefore not have to worry about the added TDP. In that case, a laptop without VirtualLink could integrate an RTX 2080 with a cooler designed for a TDP of only 185W or so (215W minus roughly 30W).

That's pretty similar to the GTX 1080's TDP of 180W, and then with further laptop optimizations we'll likely see that drop back down to around the 150W mark of the 1080's laptop variant. So even though the RTX 2080 does pack a higher TDP, I fully expect it to make its way to laptops at around a 150W TDP in the end.

Now the question becomes: what sort of specs and performance can we expect from the RTX 2080 in laptops?

Well, with the GTX 1080, the laptop and desktop variants of the GPU were identical: same core configuration, same clock speeds, same memory. Provided the cooling performance was adequate, the laptop 1080 performed on par with the desktop 1080.

Nvidia seems very keen on offering laptop versions of their GPUs that are equivalent to the desktop versions – something we totally support – and I expect that to continue with the RTX 2080. In other words, I fully expect the laptop RTX 2080 to have 2944 CUDA cores with boost clocks in the 1710 MHz range, and use 8 GB of GDDR6 memory.

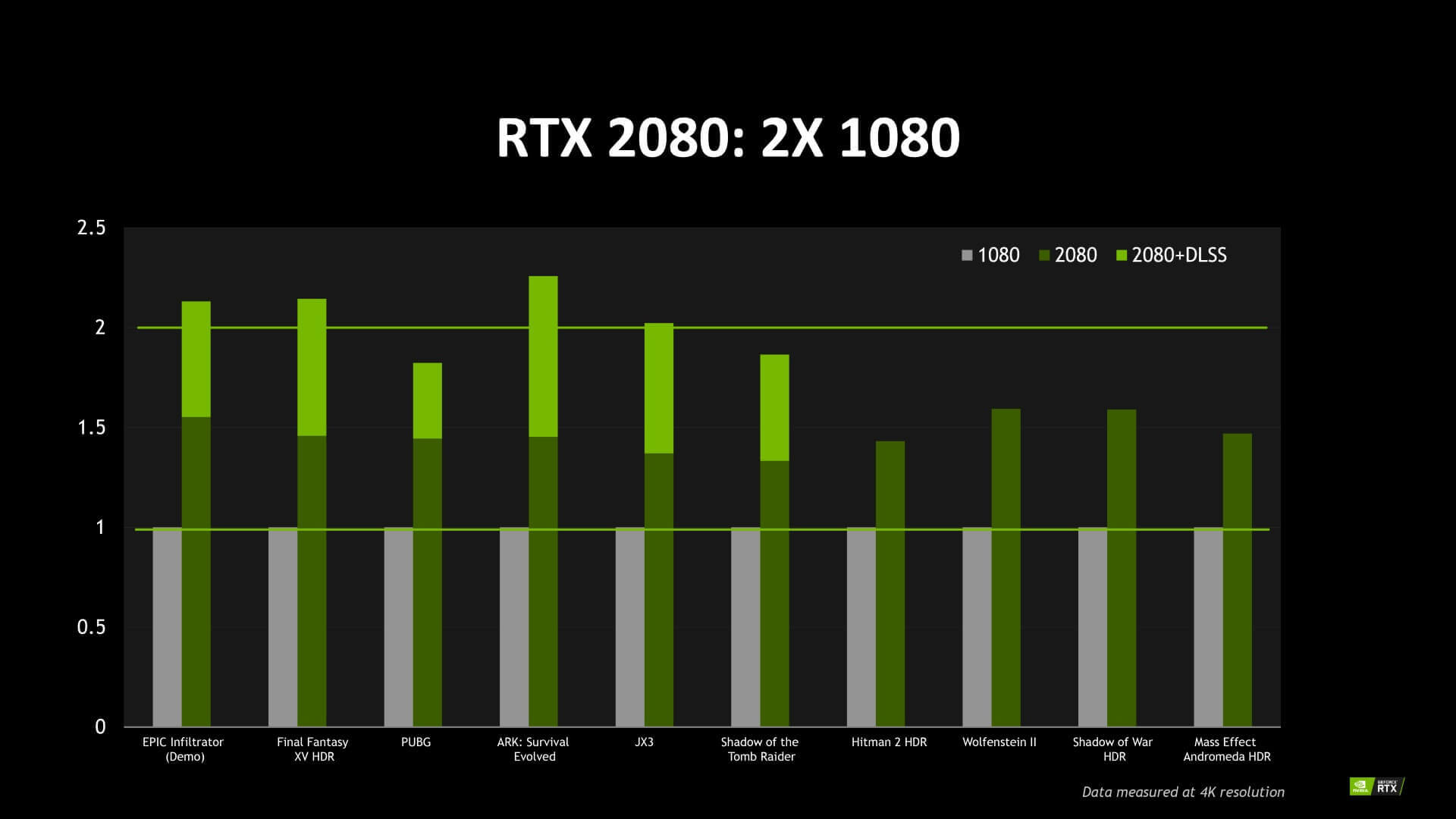

As for real-world performance, we're still not sure how the cards will perform on the desktop, so it's hard to say for certain. But we expect the RTX 2080 to perform around the level of the GTX 1080 Ti, and Nvidia's own charts show a 30 to 40 percent performance improvement over the GTX 1080 in best-case scenarios. So for top-end laptops, getting a 35% performance bump or so in the same form factor is quite a tasty proposition.

How would Nvidia manage to get this performance boost from roughly the same TDP? Well, Turing is built using TSMC's 12nm process, compared to 16nm for Pascal. That's not a huge change, and rather than using 12nm to shrink its dies, Nvidia has built larger, wider GPUs. However, the slight process improvement should bring a more favourable voltage/frequency curve, so running Turing at the same frequencies as Pascal should consume less power, at least in theory. We saw something like this in practice with 2nd gen Ryzen moving from 14nm to 12nm: it could run at lower voltages for the same frequencies.

So when you look at the RTX 2080 compared to the GTX 1080, boost clocks are pretty similar: 1710 MHz for the 2080, and 1733 MHz for the 1080. With Turing on 12nm, it should require less voltage to run at that frequency compared to Pascal. But then the RTX 2080 bumps up the CUDA core count from 2560 to 2944, so it's a wider GPU at the same clocks. On paper, it looks like Nvidia has gained some power headroom by not increasing the clock speed of the RTX 2080, and put that towards making the GPU wider, with the end result being similar power consumption for the 2080 and 1080. And that's good news for laptops.
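To put rough numbers on the "wider GPU at the same clocks" argument, here's a quick Python sketch of on-paper FP32 throughput. It assumes each CUDA core completes one fused multiply-add (two floating-point operations) per clock at the listed boost clock, which is the usual way peak TFLOPS figures are quoted; real game performance depends on far more than this.

```python
# On-paper peak FP32 throughput: RTX 2080 vs GTX 1080.
# Assumption: each CUDA core retires one fused multiply-add (2 FLOPs) per clock
# at the listed boost clock. This ignores memory bandwidth, architectural
# changes and real-world clock behaviour, so treat it as a rough ratio only.


def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Return peak single-precision TFLOPS as cores x clock (Hz) x 2 FLOPs."""
    return cuda_cores * boost_mhz * 1e6 * 2 / 1e12


rtx_2080 = peak_fp32_tflops(2944, 1710)  # Turing: wider GPU, similar clock
gtx_1080 = peak_fp32_tflops(2560, 1733)  # Pascal

uplift = rtx_2080 / gtx_1080 - 1

print(f"RTX 2080: {rtx_2080:.2f} TFLOPS")
print(f"GTX 1080: {gtx_1080:.2f} TFLOPS")
print(f"On-paper uplift: {uplift:.1%}")
```

On these assumptions the RTX 2080 lands at roughly 10.1 TFLOPS versus 8.9 TFLOPS for the GTX 1080, an uplift of about 13 to 14 percent from the wider core alone. So on paper, core count and clocks don't account for all of the 30 to 40 percent Nvidia is showing in its own charts.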

RTX 2070 Predictions

It's a similar story with the RTX 2070. Nvidia lists a desktop TDP of 175W; if VirtualLink accounts for roughly 30W of that, the 2070 pulls back to around 150W, the same TDP as the desktop 1070. And again, the laptop version of the 1070 sat around 115W, so I expect the laptop RTX 2070 to be rated for something similar.
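The TDP reasoning for both the RTX 2080 and RTX 2070 can be written out as a small Python sketch. The 30W VirtualLink allowance is the rumored figure discussed above rather than an official number, and applying Pascal's desktop-to-laptop ratios to Turing is my assumption, not something Nvidia has confirmed.

```python
# Back-of-the-envelope laptop TDP estimates for the RTX 2080 and RTX 2070.
# Assumptions (not Nvidia figures):
#   * VirtualLink accounts for roughly 30 W of the desktop TDP.
#   * Turing laptop parts shrink from desktop by the same ratio Pascal did.

VIRTUALLINK_W = 30  # assumed allowance for the USB-C VR connector

# Pascal desktop TDP -> approximate laptop TDP, in watts
PASCAL_DESKTOP_TO_LAPTOP = {
    "GTX 1080": (180, 150),
    "GTX 1070": (150, 115),
}


def estimate_laptop_tdp(desktop_tdp_w: float, pascal_equivalent: str) -> float:
    """Drop the VirtualLink allowance, then scale by Pascal's desktop-to-laptop ratio."""
    pascal_desktop_w, pascal_laptop_w = PASCAL_DESKTOP_TO_LAPTOP[pascal_equivalent]
    without_virtuallink = desktop_tdp_w - VIRTUALLINK_W
    return without_virtuallink * pascal_laptop_w / pascal_desktop_w


rtx_2080_laptop_w = estimate_laptop_tdp(215, "GTX 1080")  # (215 - 30) * 150 / 180
rtx_2070_laptop_w = estimate_laptop_tdp(175, "GTX 1070")  # (175 - 30) * 115 / 150

print(f"RTX 2080 laptop: ~{rtx_2080_laptop_w:.0f} W")
print(f"RTX 2070 laptop: ~{rtx_2070_laptop_w:.0f} W")
```

This gives roughly 154W for a laptop RTX 2080 and 111W for a laptop RTX 2070, close to the 150W and 115W figures of the GTX 1080 and GTX 1070 laptop parts.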

The laptop version of the 1070 was a bit of an enigma in that it was specced differently from the desktop 1070 but performed around the same. The laptop variant had 2048 CUDA cores and a boost clock of 1645 MHz, compared to 1920 CUDA cores and a boost clock of 1683 MHz in the desktop variant. Nvidia could do something similar with the laptop RTX 2070, but I think the case of the 1070 was fairly unique and I don't expect them to follow that exact path again.

At the same TDP, the RTX 2070 should be able to provide more performance than the GTX 1070, so again, any laptop that could support the 1070's TDP will be able to step up to an RTX 2070 and get more performance in the same form factor. That will be great news for mobile gamers, as a lot of fairly compelling laptops were able to handle the 1070's TDP, and I think this will again be a sweet spot for high-performance gaming laptops.

Nvidia gets this extra performance in basically the same way as with the RTX 2080. The RTX 2070 is clocked at around the same level as the GTX 1070, but it includes 2304 CUDA cores compared to 2048 in the laptop GTX 1070, a wider design made possible in part by the small shift from 16nm to 12nm. On paper, that's roughly a 12 percent increase in shader throughput, so it looks unlikely the 2070 will reach the level of the GTX 1080. That said, any performance increase in the same form factor is very welcome in constrained laptops.
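Running the same kind of on-paper throughput estimate for the RTX 2070 shows why it probably won't reach the GTX 1080. This Python sketch assumes one fused multiply-add (two FLOPs) per CUDA core per clock, and uses 1620 MHz for the RTX 2070, which is Nvidia's reference boost clock rather than a figure from this article (Founders Edition cards run higher); the other clocks are the ones quoted above.

```python
# On-paper peak FP32 throughput: RTX 2070 vs laptop GTX 1070 and GTX 1080.
# Assumptions: one fused multiply-add (2 FLOPs) per CUDA core per clock, and
# 1620 MHz for the RTX 2070 (Nvidia's reference boost; Founders Edition is higher).


def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Return peak single-precision TFLOPS as cores x clock (Hz) x 2 FLOPs."""
    return cuda_cores * boost_mhz * 1e6 * 2 / 1e12


rtx_2070 = peak_fp32_tflops(2304, 1620)
gtx_1070_laptop = peak_fp32_tflops(2048, 1645)
gtx_1080 = peak_fp32_tflops(2560, 1733)

core_gain = 2304 / 2048 - 1                      # 12.5% more CUDA cores
vs_1070_laptop = rtx_2070 / gtx_1070_laptop - 1  # core gain minus a slightly lower clock
vs_1080 = rtx_2070 / gtx_1080 - 1                # negative: still short of the 1080

print(f"CUDA core gain over laptop GTX 1070: {core_gain:.1%}")
print(f"Throughput vs laptop GTX 1070: {vs_1070_laptop:+.1%}")
print(f"Throughput vs GTX 1080: {vs_1080:+.1%}")
```

That works out to roughly 7.5 TFLOPS for the RTX 2070 against 6.7 TFLOPS for the laptop GTX 1070 (about 11 percent more, slightly under the 12.5 percent core-count gain because of the lower reference clock) and 8.9 TFLOPS for the GTX 1080, leaving the 2070 about 16 percent short on paper.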

It's trickier to predict what will happen to the tensor cores and RT cores in the laptop versions of the RTX 2080 and RTX 2070. Considering ray-tracing is the flagship feature of these new GPUs, I fully expect the laptop variants to support ray-tracing in some form, so that will mean these cores will need to be on the die and active.

Whether Nvidia will disable some tensor or RT cores to save power, I'm not sure, though they would be the most obvious candidates for power savings. At the end of the day, though, ray-tracing is computationally intensive, so if Nvidia really wants to promote this feature as being supported by their laptop GPUs, they'll probably have to keep the RT cores fully enabled. It'll be interesting to see how that pans out and what power implications it has.

I also expect Nvidia to continue the trend of offering Max-Q variants of the RTX 2070 and RTX 2080. I know there are plenty of people out there who dislike Max-Q because they see it as an artificial restriction that stops their GPU from reaching its full potential, but in reality it's actually quite a good idea for laptops and other cooling-restricted systems.

The key thing Max-Q does is provide a wider range of GPUs that sit at different TDPs. This allows a manufacturer to choose a GPU that's better suited to their cooling solution. For example, a laptop might have more than enough thermal headroom for an RTX 2070, but not enough for an RTX 2080. Instead of capping that system at RTX 2070 performance, the manufacturer could integrate an RTX 2080 Max-Q with a TDP between the 2070 and 2080, offering better performance than the 2070 but not quite at the same level as the 2080.

With the GeForce 10 series, Max-Q variants of the 1080 and 1070 were clocked around 250 to 300 MHz lower than their fully fledged counterparts, while using the exact same GPU with the same core configuration and memory. The clock speeds were chosen such that the GPU was operating at an optimal frequency on Pascal's voltage/frequency curve, giving the best efficiency.

I expect something very similar with the GeForce 20 series. We'll get an RTX 2070 Max-Q clocked around 250 MHz lower that will sit between the 2070 and the as-yet-unannounced 2060. And the RTX 2080 Max-Q will also be clocked roughly 250 MHz lower to sit between the 2070 and 2080. Typically, Max-Q variants are around 10% faster than the GPU below them and 10% slower than the non-Max-Q model, but we'll wait to see how that plays out.
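Carrying Pascal's roughly 250 MHz Max-Q clock reduction over to Turing is purely my assumption, but it makes for a quick sanity check of where these parts would land. This Python sketch uses the simple two-FLOPs-per-CUDA-core-per-clock estimate of peak throughput, with reference boost clocks of 1710 MHz for the RTX 2080 and 1620 MHz for the RTX 2070 (the latter is Nvidia's reference spec, not a figure from this article).

```python
# Where Max-Q variants might land if Turing repeats Pascal's ~250 MHz clock cut.
# Assumptions: the 250 MHz reduction (carried over from Pascal), reference boost
# clocks of 1710 MHz (RTX 2080) and 1620 MHz (RTX 2070), and the simple
# 2-FLOPs-per-CUDA-core-per-clock model of peak throughput.

MAXQ_CLOCK_CUT_MHZ = 250  # hypothetical, based on Pascal's Max-Q parts


def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Return peak single-precision TFLOPS as cores x clock (Hz) x 2 FLOPs."""
    return cuda_cores * boost_mhz * 1e6 * 2 / 1e12


# name -> (CUDA cores, boost clock in MHz); Max-Q keeps the same core configuration
gpus = {
    "RTX 2070": (2304, 1620),
    "RTX 2080": (2944, 1710),
}
for name, (cores, mhz) in list(gpus.items()):
    gpus[f"{name} Max-Q"] = (cores, mhz - MAXQ_CLOCK_CUT_MHZ)

tflops = {name: peak_fp32_tflops(c, m) for name, (c, m) in gpus.items()}

# Print from slowest to fastest on paper
for name in sorted(tflops, key=tflops.get):
    print(f"{name:<15} {tflops[name]:.2f} TFLOPS")
```

On these assumptions the RTX 2080 Max-Q comes out at about 8.6 TFLOPS, between the RTX 2070 (about 7.5) and the full RTX 2080 (about 10.1), which is the slot I'd expect it to fill. The clock cut alone costs around 15 percent of peak throughput, but games rarely scale perfectly with core clock, especially if memory is left untouched as it was on Pascal's Max-Q parts, so the real-world gap would likely be smaller.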

As for pricing, we know from the desktop cards that the GeForce 20 series is pretty expensive, with prices above their 10 series counterparts, and they're unlikely to offer as good value considering what we know about their performance so far. With the laptop versions, it will probably be a very similar story: better performance in the same form factor, but also considerably higher prices.

An RTX 2070 laptop will likely cost at least $100 more than GTX 1070 laptops, and that margin will be higher for RTX 2080 laptops compared to GTX 1080 laptops.

We are expecting better performance in the same sort of designs, but for some buyers that may not justify the price increase. That said, laptops are sold as entire systems rather than as standalone GPUs, so the value proposition when you compare entire system prices will end up a bit more favourable than on the desktop side comparing standalone cards.

I'm definitely excited to see what Nvidia does with the GeForce 20 series in laptops and how much more performance we can get in the same form factors. I think these GPUs could offer a bit more to laptop buyers than to desktop system builders, considering laptops are constrained in ways desktops are not, and any performance gain that doesn't make laptops chunkier and less portable is always welcome.