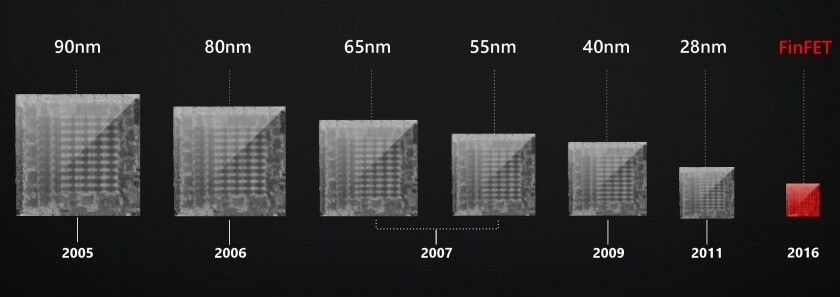

AMD’s next-generation Polaris GPUs are expected to break cover as early as next month. Built on a 14-nanometer FinFET manufacturing process, Polaris 10 will replace existing Radeon R9 390 cards, according to “well-informed” sources cited by Fudzilla.

The publication says its sources are confident that Polaris 10 will match or outperform the Radeon R9 390 and, in certain circumstances, beat the R9 390X by a healthy margin.

AMD’s R9 390X currently starts at around $399, with non-“X” variants selling for just over $300. With Polaris 10, AMD reportedly hopes to bring the price down to $299 at launch. In its marketing, AMD will apparently highlight the card’s low power consumption and performance-per-watt, areas where it believes it can compete strongly against Nvidia.

Polaris 11 is expected to replace the Radeon R7 370 and take on the GeForce GTX 950 in terms of performance. Both Polaris 10 and Polaris 11 could arrive at Computex in June.

Nvidia, meanwhile, is working on its own next-generation graphics architecture, codenamed Pascal. The first card based on Pascal debuted last month, although it isn’t aimed at consumers. The Tesla P100 is a high-performance computing (HPC) card designed for use in some of the world’s fastest supercomputers.

AMD recently launched a new website that details its Polaris architecture, although it reveals nothing we didn’t already know.

https://www.techspot.com/news/64679-amd-polaris-10-performance-reportedly-par-390-390x.html