dividebyzero


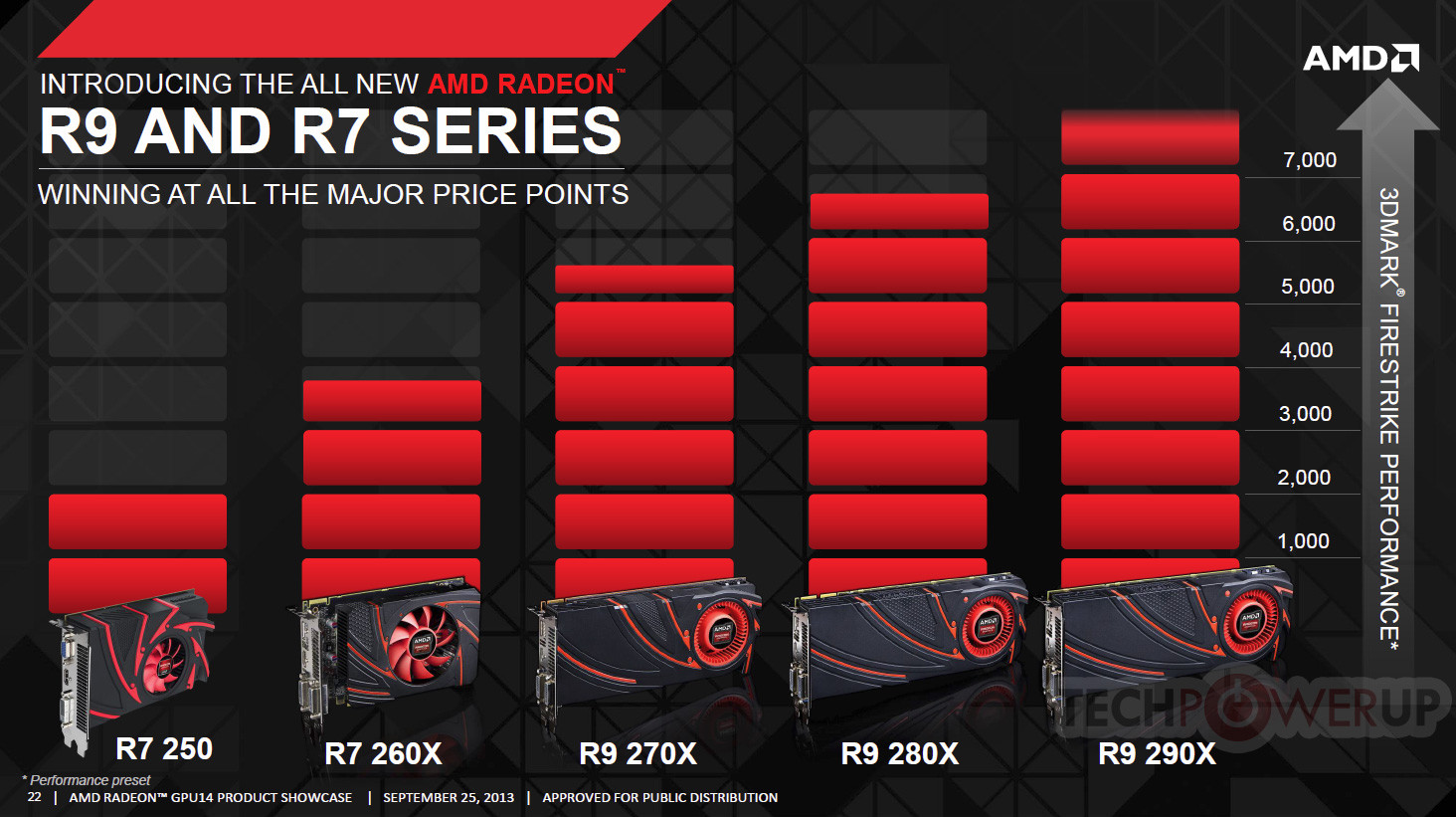

Cherry-picking firestrike

How can it be cherry-picked when it is the only performance slide that AMD have released?

If anyone's cherry-picking Firestrike it is AMD.

Get a grip.

:SMH:


So I read that AMD will not disclose the actual price or the clock speed when you pre-order it... instead you will need to deposit a sum and hope for the best?

What kind of way is that to sell a GPU? :O I'm buying it anyway, but hey, it just seems fishy.

That's news to me. Where are you hearing that? I was under the impression that the NDA expired on the 3rd October - the day pre-orders open.

I'm not sure that offering an unspecified part doesn't fall foul of many countries' fair trading and consumer laws. Maybe the pre-order offer isn't open in those countries?

Would you purchase a card without knowing the price or clock speed?

Short answer, No.

Long answer, Hell no.

My buying parameters are performance (vs what I presently have), price, overclocking headroom, performance gained from overclock... then a bunch of other stuff, headed by vendor and noise.

Taking any company, let alone a tech company, on faith? No. Some people with short memories will no doubt sign up, and AMD will sell the 8000 copies, but I don't see anything at all to be gained by blindly trusting a multi-billion dollar company... especially when Battlefield 4 will likely be part of a regular game bundle once the card hits retail proper.

At the moment, you have a single Firestrike chart (showing an 18% increase over the HD 7970) and this slide telling you that the card is capable of "over 5 TFlops" FP32 performance. Presumably if it were 5.5 or above AMD would have said so, which leaves 5.1 - 5.4 as a probable range, versus the 4.3 of Tahiti (an 18.6% - 25.6% improvement). Not a hell of a lot to go on, is it?
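For anyone who wants to check the range, here is a minimal sketch of the arithmetic. It assumes, as the post does, a 5.1 to 5.4 TFLOPS window for the new card and about 4.3 TFLOPS for Tahiti; the function name is just for illustration.

```python
# Rough FP32 uplift implied by AMD's "over 5 TFLOPS" claim.
# Assumption (from the post): the new card lands between 5.1 and 5.4 TFLOPS,
# compared with roughly 4.3 TFLOPS for Tahiti.
TAHITI_TFLOPS = 4.3


def uplift_pct(new_tflops: float, base_tflops: float = TAHITI_TFLOPS) -> float:
    """Percentage improvement of new_tflops over base_tflops."""
    return (new_tflops / base_tflops - 1.0) * 100.0


for tflops in (5.1, 5.4):
    print(f"{tflops} TFLOPS -> {uplift_pct(tflops):.1f}% over Tahiti")
```

This only compares theoretical peaks; as the rest of the thread points out, peak FLOPS say little about game performance.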

But.. But... it's so shiny.... and if it's not more than $600 it would not be a bad deal... and HEY, you get BF4.. woo...

I want a card better than the R9 280X; which card could you recommend?

That's a big "if". Maybe the BF4 ~~Special~~ Super Edition will come with a mousepad... or a poster... or fake dogtags (my fave!). Of course AMD might only charge you another $50 for all that free stuff - who can say. Somehow I don't see the BF4 edition being cheaper than the regular reference version. If the story is true (and I'm not sold on that), AMD will have no problem scaring up 8000 early adopters in any case. The ultimate test of loyalty.

Any card from a vendor overclocked HD 7970GE and up.

You should start a thread if you're serious. Include the games and GPGPU apps that you use or are planning on using, your native screen resolution, and where you would be buying from (geographic area).

Perhaps the overclocked HD 7970GE could be an idea, but I was advised not to buy something factory overclocked, and to do that myself instead.

That pretty much only applies when the factory overclocked cards are more expensive than the stock clock versions. Generally there isn't much binning (selecting better GPUs) going into the OC'ed cards these days, so if the reference card is cheaper then it is the better deal.



Since you're going with the condescension motif... here, let me do the math for you on how it's going to go down in the real world:

HD 7970GE vs. R9 290X, both overclocked to 1150 MHz GPU on air

Now if you think a card with these specs will only beat the HD 7970GE by 14-17%, you are strongly mistaken

Basic mistake, I think. What I noted were the results from the (sparse) information supplied by AMD themselves. It is a straight extrapolation of the facts... unless AMD are sandbagging, in which case it is a straight extrapolation of the purported facts.

Best case scenario is AMD bite the bullet and opt for a big die to put some pressure on the GTX 780/Titan (six weeks ago)

At this stage it looks like the 780 and 290X would be roughly matched, kind of like the HD 7970 and GTX 680/770 scenario... (a few days ago)

I also noted that AMD drivers are likely at beta stage for the card, so benchmarks should allow for that. Likewise, you can only extrapolate so much from a single reference point.

Memory bandwidth (7000 MHz vs. 6000 MHz) = 14% advantage

The actual answer is -14.3% (that's minus... a deficit).
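The sign and size of a percentage depend on which number you measure against. A small sketch of that arithmetic for the two clocks quoted above (the excerpt does not spell out which card carries which memory clock, so this shows only the math, not the hardware claim):

```python
def pct_change(value: float, reference: float) -> float:
    """Signed percentage difference of value measured against reference."""
    return (value / reference - 1.0) * 100.0


# The same 1000 MHz gap gives different percentages depending on the base.
print(f"6000 vs 7000: {pct_change(6000, 7000):+.1f}%")  # lower clock measured against higher
print(f"7000 vs 6000: {pct_change(7000, 6000):+.1f}%")  # higher clock measured against lower
```

A 6000 MHz part sits about 14.3% below a 7000 MHz one, while the 7000 MHz part sits about 16.7% above the 6000 MHz one; a "14%" figure only corresponds to the first framing.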

Firestrike is a benchmark that is both GPU and CPU sensitive. It's quite easy to get 1000+ point fluctuations depending upon system specification, so any comparison should be done with similar component fit-out.

Is 9832 the overall score (in large orange font)?

I was thinking the same thing; however, they don't state what settings they used. If it's 4K on Extreme settings these are awesome scores, but if it's the default 1080p, these are not very impressive.


The slide says it was on the Performance preset.

And my overclocked $200 7950 scores over 8000.

http://www.3dmark.com/3dm/1299529

Unless they are talking about the overall score, including whatever CPU they happened to use, and not just the graphics score. But that would be crazy, because they would have to use a CPU similar to or slower than my i5 for the R9 to only manage 8000. Surely if they were going to include the CPU score, which would go against usual practice and common sense, they would use their fastest CPU to get the score as high as possible.
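To see why the CPU matters for the overall number, here is a sketch of how a weighted harmonic mean of sub-scores behaves. The weights (graphics 0.75, physics 0.15, combined 0.10) are an assumption recalled from Futuremark's Fire Strike technical guide, not something stated in this thread, and the sub-scores below are made up purely for illustration.

```python
# ASSUMPTION: Fire Strike's overall score is a weighted harmonic mean of its
# sub-scores, with weights recalled from Futuremark's technical guide.
# Verify against the official guide before relying on these numbers.
WEIGHTS = {"graphics": 0.75, "physics": 0.15, "combined": 0.10}


def overall_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted harmonic mean: total weight / sum(weight / sub-score)."""
    total_weight = sum(weights.values())
    return total_weight / sum(weights[k] / scores[k] for k in weights)


# Hypothetical sub-scores: identical graphics score, faster vs slower CPU.
fast_cpu = {"graphics": 9000, "physics": 12000, "combined": 4000}
slow_cpu = {"graphics": 9000, "physics": 7000, "combined": 3500}
print(round(overall_score(fast_cpu)), round(overall_score(slow_cpu)))
```

With the same graphics score, the weaker CPU drags the overall figure down, which is why comparing overall scores across different systems is shaky.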

I really can't figure it out. Yet I've only seen 3 people comment on this anywhere and I'm one of them! (EDIT: until I read a few more posts here, but that's it)

They are also mentioning the theoretical compute power a lot, 5.8 TFLOPS, which basically means nothing, as it's simply the number of stream processors x clock x 2. The GTX 680 only has about 1500 processors compared to the 7970's 2000+, yet it is arguably better with fewer cores; it's the way the cores are used, more efficiently.
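The "stream processors x clock x 2" formula is easy to sketch. The factor of 2 counts a fused multiply-add as two floating-point operations. Plugging in the HD 7970 GHz Edition (2048 stream processors at a 1050 MHz boost clock) gives the roughly 4.3 TFLOPS quoted for Tahiti earlier in the thread:

```python
def peak_fp32_tflops(shaders: int, clock_mhz: float, ops_per_clock: int = 2) -> float:
    """Theoretical peak: shaders x clock x 2 (one fused multiply-add = 2 FLOPs)."""
    return shaders * clock_mhz * 1e6 * ops_per_clock / 1e12


# HD 7970 GHz Edition: 2048 stream processors at a 1050 MHz boost clock.
print(f"{peak_fp32_tflops(2048, 1050):.2f} TFLOPS")
```

Nothing in this number accounts for how well the architecture keeps those units busy, which is exactly the post's objection.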

Let's say they are the same performance for a minute. As the Titan has 2688 processors, using the same ratio of 1500:2000, the R9 290X would need roughly 3,600 processors (2688 x 2000/1500 ≈ 3,584) to match it. Unless they've significantly increased efficiency, which I doubt, as it's AMD.
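The scaling argument above, sketched as code. It rests entirely on the post's rough assumption that 1500 NVIDIA cores do the work of 2000 AMD stream processors; the helper name is hypothetical.

```python
def cores_needed(nvidia_cores: int, amd_per_nvidia: float) -> float:
    """Scale an NVIDIA core count by an assumed AMD:NVIDIA per-core ratio (hypothetical helper)."""
    return nvidia_cores * amd_per_nvidia


# The post's rough GTX 680 : HD 7970 ratio of 1500 : 2000, applied to Titan's 2688 cores.
needed = cores_needed(2688, 2000 / 1500)
print(round(needed))
```

As the replies point out, a straight core-for-core ratio across two different architectures is a crude assumption, so treat the result as a back-of-the-envelope figure.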

Anyway, time will tell. I sincerely hope they are better than my 7950s and a decent price.

You can't compare stream processors and CUDA cores on a core-to-core count; they are entirely different architectures and designs in general, and are used in different ways to provide similar results.



You're probably hopeful that he includes a GTX 780 and/or Titan using the AB NCP4206 relaxed voltage tweak since you're so fired up about the overclock potential,

Ahh, the classic "it's not that fast stock, but if you overclock it" and the "I own cards from both sides" excuses. (On the internet, we call that defeat.) Watching you dish out lessons and seeing the kneejerk replies is truly something to behold. God bless you, dividebyzero; let the truth shine upon those unwilling to accept it.

NDA apparently lifts on 15th October. Save your energy for the review threads.

You can't compare stream processors and CUDA cores on a core-to-core count; they are entirely different architectures and designs in general, and are used in different ways to provide similar results.

I know, that's my point. But AMD's headline figure is the 5 TFLOPS of compute power (greater than Titan's) purely because it has more stream processors than Titan has CUDA cores.

It's as useful as saying an FX-8350 has 8 x 4000 = 32000 compute power but an i5 only has 4 x 3400 = 13600 compute power.
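The same naive "cores x clock" metric, written out to show how little it captures. It reproduces the post's arithmetic and nothing else; per-core throughput (IPC) is deliberately ignored, which is the whole point of the comparison.

```python
def naive_compute(cores: int, clock_mhz: int) -> int:
    """Deliberately naive 'compute power': cores x clock, ignoring per-core work done."""
    return cores * clock_mhz


print(naive_compute(8, 4000), naive_compute(4, 3400))  # FX-8350 vs i5, per the post
```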

It's meaningless, but it's their headline figure, along with the Firestrike score of 8000. I mean seriously, these are the two numbers that their marketing machine has decided to give us to entice us to spend $700 (or $850 in Australia, probably) on the new R9 290X. I'm sure it's not the only benchmark they've run; they will have done all of them and decided that this one will impress us the most and really show off the performance of the product.

Yet most people who bothered to download and run the test will know their mildly overclocked $200 7950 will score 8200, and a 7970 even more, around 9000. A Titan scores 12500 and a $650 GTX 780 scores 11500.

I just can't see why we're supposed to be impressed by a score of 8000.

But AMD's headline figure is the 5 TFLOPS of compute power (greater than Titan's) purely because it has more stream processors than Titan has CUDA cores.

Yep, that's exactly what it is: a headline, bullet point, useful round number to fill out a presentation... and of course, it doesn't mean squat.

It's meaningless, but it's their headline figure, along with the Firestrike score of 8000. I mean seriously, these are the two numbers that their marketing machine has decided to give us to entice us to spend $700 (or $850 in Australia, probably) on the new R9 290X.

Probably because AMD's PR people have little technical prowess. The scores in themselves are meaningless. The only thing you can take out of the slide is the comparative difference between the scores in that slide. If you know what one or more of the actual scores are, then you can extrapolate the others from that information. The 6800 score of the 280X could be equated with this 7341 score, in which case the 290X would score 8442 in comparison.
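The extrapolation described above amounts to scaling a known real-world score by the ratio between two bars on the slide. A minimal sketch; the only inputs are the numbers in the post, and the ratio of about 1.15 is inferred from them (8442 / 7341) rather than read off the slide itself.

```python
def extrapolate(known_score: float, slide_ratio: float) -> float:
    """Scale a known real-world score by the ratio between two slide scores."""
    return known_score * slide_ratio


# Ratio implied by the post's own numbers: the 290X about 15% ahead of the 280X.
implied_ratio = 8442 / 7341
print(f"implied slide ratio: {implied_ratio:.3f}")
print(round(extrapolate(7341, 1.15)))
```

The approach only holds if both slide scores came from the same system and settings, which the slide does not fully document.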

That's a very slim win over the 780. That makes me happy xD

In fact it's not really that impressive; the Titan is still faster and this thing eats more power. I guess if it's priced right (I'll assume it will be), then at least the prices for the 780 and maybe the Titan will come down a notch?

I do wonder what Mantle will do for Battlefield 4 though.

Personally, power has never been much of an issue for me, but it's still an important area, and some people have high electric bills to worry about.