I was genuinely interested in the C2D v C2Q gaming question because I owned both. I didn't cast my net much beyond TechSpot's own CPU benchmarks from way back, but I gathered a fair few.

Their earliest archived benches here pit a Q6600 against an E8500. OK, it's a later C2D, but it fits the comparison in question: a faster, higher-clocked dual core.

It turned out that more than 3 years after the Q6600 launched in January 2007, most games still ran pretty decently on the C2D.

May 2010, 3+ years after Q6600 launch:

E8500 edges it

Again, it's not a massive gap, but the E8500 is nudging 30 FPS when the Q6600 isn't.

June 2010:

A rare result: Q6600 = E8500.

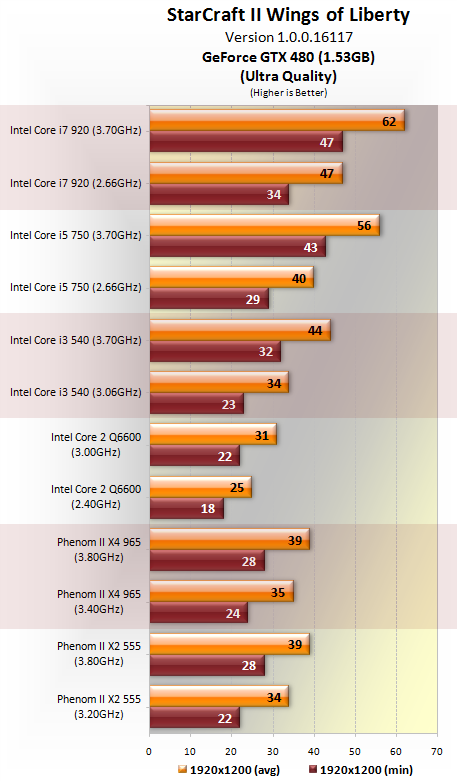

July 2010:

No E8500 was tested, but the article implies it would likely be faster: it states the game only uses 2 cores and shows the Q6600 struggling against the next-gen i3 540 dual core. In short, higher-clocked C2D = faster than C2Q.

October 2010:

Q6600 loses, and it's not looking good in any case: even a budget Phenom is faster.

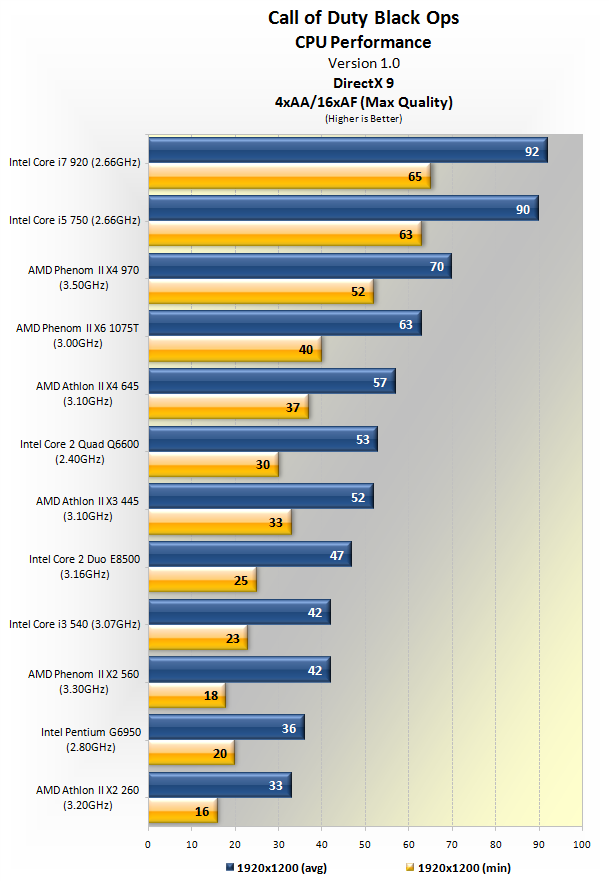

November 2010:

We finally see a good win for the Q6600 against the E8500, approaching 4 years since the Q6600 arrived, but it's not that good. It's only just hitting a 30 FPS minimum here, and it's losing to a budget X4 645 quad, which at this point in history only costs about $100. It's also not even half as fast as the i5 750, which shows its useful life as a gaming chip paired with a good GPU is probably nearing its end.

February 2011, Q6600 is now over 4 years old:

Again, the Q6600 struggles here against the E8500 in an advanced (for the time) Unreal Engine game. This is important because, IIRC, it mirrored the two-core usage of most UE games of this period (re: Singularity above, but also stuff like Mass Effect 2, etc.).

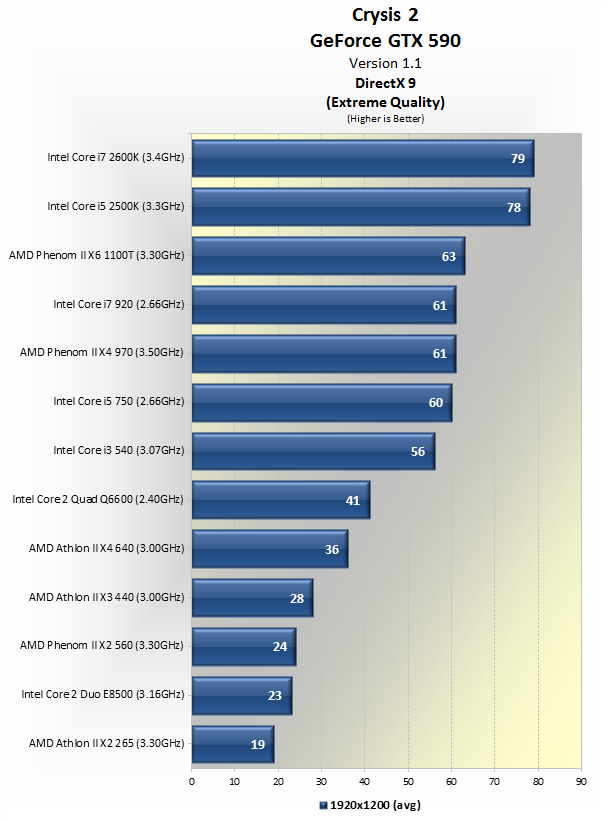

March 2011:

A significant win for the Q6600 here, though it comes over 4 years after it arrived, and on one of the best technical game engines around. That said, it's clear again that there's one hell of a gap now between it and even a budget dual core like an i3 540, which I think is a little over $100 (it's a year old; it was around $140 new).

Q6600 edges it, though the E8500 is still viable in this one.

It seems TechSpot stopped testing Core 2 models after about this point; I'd imagine the reasoning is that they were kinda getting too slow. I may have missed the odd test they did, but I posted the ones I found.

I think the pattern is fairly clear: the Q6600 definitely becomes more competitive as those 4 years pass.

But the problem is twofold, as I hinted at in my first post. It took all that time for it to really start showing its extra muscle in games against the C2D, and by the time it did, it was borderline too slow anyway and/or budget chips of the day were beating it so badly that you may as well have just ditched the platform.

I hope you find it a little interesting.