First of all, AMD has had the fastest graphics card on the market for almost 2 years now. Where have you been? The HD7990 still destroys a Titan or 980Ti. With this, AMD will have the fastest 2 graphics cards on the market.

Thanks to the vagaries of multi-GPU scaling (Crossfire or SLI), dual cards from either vendor come with a heavy caveat. As for the HD 7990, it wouldn't come close to a GTX 980 Ti - especially when the newer and more powerful R9 295X2 barely shades the single-GPU card:

I doubt this will be short lived either. NVidia's Pascal is coming out, but not before Polaris from AMD. And if and when NVidia tries to launch their next Titan, AMD will already have Vega to trump.

Unless you have hard evidence for these "facts", I would suggest you tone down the rhetoric - or is this a drive-by posting blitz, and you don't plan on being around when these parts actually arrive? GP104 and Polaris are due to drop around the same time. AMD's Roy Taylor is already playing down all-out performance in favour of targeting value for money - this should be a pretty obvious indicator.

AMD’s Polaris will be a mainstream GPU, not high-end

You might also note that AMD's Vega has already slipped one quarter on the roadmap. Note, too, that the chip's size, allied with GlobalFoundries' rather fanciful predictions (and outright lies) for its previous process nodes, guarantees very little - either timewise or in the assumption of fully enabled dies in volume.

AMD has been planning this comeback for years and now they're executing and executing well at that. Sorry NVidia fans. It's about time!

Technically, AMD have been planning a comeback since the Evergreen series. The R600 debacle and the hastily reworked R700 bought time to lay the foundation of a more competent graphics strategy - better mGPU scaling, Eyefinity, etc.

Wow, these forums are terrible. You people don't really know much about what's going on. This is not a "useless exercise of power vs efficiency". AMD has already had the fastest GPU on the market for over 2 years now. They created a great GPU with the Fiji chip, which, in terms of price/performance ratios, is a better buy than the GTX980Ti, especially in DX12 titles (which is all titles being made today and into the future), where it outperforms the 980Ti and in some cases the Titan as well.

Firstly, not all games will be DX12 going forward. Developers aren't going to invest more time and resources (read: money) into coding for DX12 unless they have an incentive to do so. Game devs and studios can barely - on occasion - get a AAA title out the door without it requiring patches, fixes and launch target slippage as it is. Good luck shoehorning architecture/GPU specific coding requirements into that morass until game engines are geared primarily for DX12 and parallel coding for other APIs is eliminated.

I might also add that a large quantity of new games will use the UE4 engine, so Vulkan will gain traction.

As for the Fury X vs GTX 980 Ti debate: the Fury X shades the reference 980 Ti, but... 1. very few reference GTX 980 Ti's are actually sold these days, and 2. GM200 (over)clocks fairly well, which is why you see so many high clocked cards selling at reference prices. As you can see from the chart I posted above, raising the minimum guaranteed boost improves the numbers considerably. Whereas you can buy these cards clocked out of the box, a Fury will require user input and a degree of luck/expertise to push a 10% overclock.

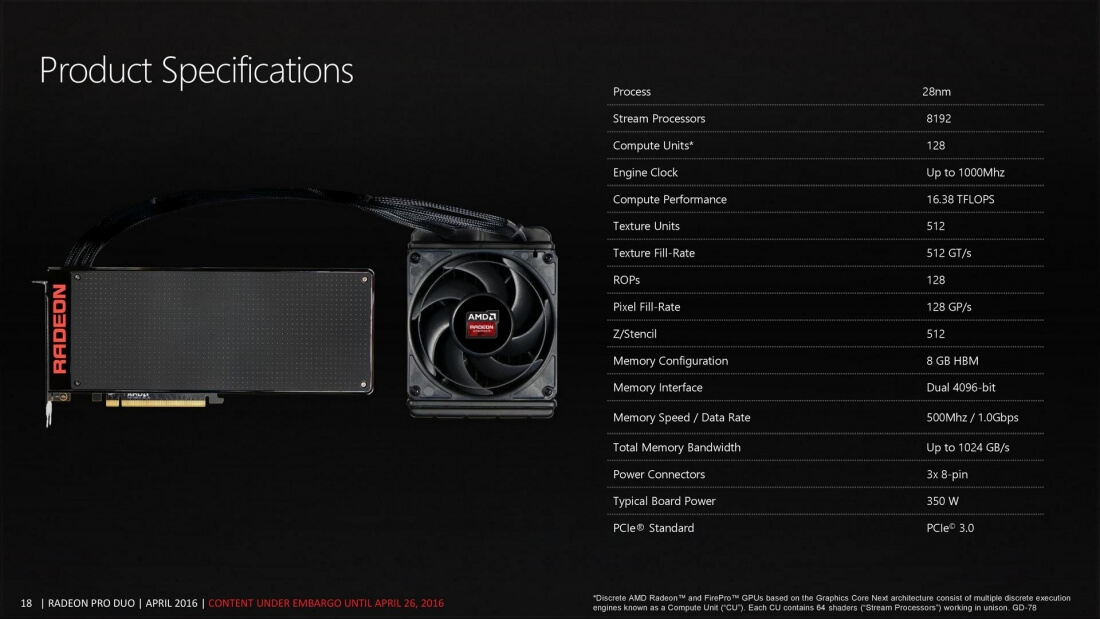

Considering this is the year that VR breaks out with Oculus Rift, Sony's, Microsoft's, Samsung's, and many others VR headsets being released, along with the fact that AMD currently holds 83% of the VR market and is one of VR's biggest supporters and drivers, the release of the ProDuo is nothing less than fitting.

Yes, the Pro Duo is aimed squarely at VR devs, which is why AMD's marketing goes to great lengths to publicize the 24/7 support (devs are seldom tech enthusiasts - they just want everything to work), LiquidVR and FirePro OpenCL drivers etc.

Also consider that an NVidia Titan costs $1500

Nope. Every Titan (GTX Titan, Titan Black, Titan X) has MSRP'd at $999 in reference form, with the exception of the EoL'd Titan Z.

You obviously don't know anything about how GPU's work. a 980Ti needs 6GB of VRAM, because it uses GDDR5. A Fury only needs 4GB, because it uses HBM,

You are incorrect. Game drivers will allocate memory usage and prioritize for vRAM depending upon capacity.

As you can see here, the larger framebuffer of the 980 Ti means that more of the graphics elements are stored in vRAM, whereas the smaller framebuffer of the Fury requires elements to swap in and out of system memory. While it appears that the 980 Ti is using more (v)RAM, that is because it is less reliant upon system memory.

which is 9x faster than GDDR5, thus allowing a Fury with "only" 4GB of RAM to outperform a 980Ti with 6GB of RAM once you get up to the high resolutions like 4K. As HBM manages memory in a different and more efficient way, not as much of it is required to maintain performance.

That isn't what is actually happening. As resolution/texture computation increases, the Fury's greater texture unit (TAU or TMU) count comes into play. Fiji has 256 TMUs (268.8 GTexels/sec), while the 980 Ti has 176 TMUs (176 GTexels/sec @ base clock, 189.4 GTexels/sec @ average boost). Likewise, at lower resolutions the Fury suffers in comparison to the 980 Ti because the TAU/TMUs aren't the limiting factor, the raster back end is - and Fiji is lacking in ROPs (64 compared to GM200's 96, and each of Fiji's ROPs services a greater number of ALUs (cores)).
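For anyone who wants to check the texel figures, here is a rough sketch of the arithmetic: peak texture fill rate is simply TMU count multiplied by core clock. The clock speeds below (1050 MHz for the Fury X, 1000 MHz base and roughly 1076 MHz average boost for the 980 Ti) are my assumption, inferred from the quoted GTexels/sec numbers rather than stated in the post, and the function name is just illustrative.

```python
# Peak texel fill rate = number of TMUs x core clock.
# Clock values are assumptions inferred from the quoted GTexels/sec figures.

def texel_rate_gtexels(tmus: int, clock_mhz: float) -> float:
    """Return the theoretical peak texture fill rate in GTexels/sec."""
    return tmus * clock_mhz / 1000.0


cards = {
    "Fury X (Fiji)": (256, 1050),         # assumed reference clock
    "980 Ti @ base": (176, 1000),         # assumed base clock
    "980 Ti @ average boost": (176, 1076),  # assumed average boost clock
}

for name, (tmus, clock_mhz) in cards.items():
    print(f"{name}: {texel_rate_gtexels(tmus, clock_mhz):.1f} GTexels/sec")
```

These are theoretical peaks only; real texturing throughput depends on the workload, caches and memory behaviour.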

And umm...If a 980Ti performs worse than the FuryX with 50% more RAM, and the Titan with 200% more RAM, then why on earth would you think that 8GB would somehow, miraculously be better or interpret that from what I wrote? Here's a spoiler alert for you..."The 390 DOESN'T have HBM, genius", which I thought a "TS Enthusiast - Programmer - VRAM expert" would know, but apparently, my instincts were correct and you have no idea what you're talking about.

It has nothing to do with memory type or capacity (and please quit the insults and double posting while you are at it). Adding vRAM capacity is only beneficial if the smaller capacity it is being compared to is saturated - i.e. hi-res texture packs could choke a 4GB card, but sit comfortably in an 8GB equipped card. Excepting fairly rare instances like this, very high resolutions, or downsampling/full screen AA, it isn't much of an issue. Your assertion that internal bandwidth is a defining factor is just straight out wrong. Very few modern high end GPUs are bandwidth starved.

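The real reason that the (stock) 980 Ti performs worse than the Fury X at 4K:

The 980 Ti has about 31% fewer TMUs (176 vs 256; put the other way, the Fury X has about 45% more)

The 980 Ti has about 31% fewer cores (2816 vs 4096; again, about 45% more on the Fury X)

The reason that the 980 Ti performs better at lower resolutions:

The 980 Ti has 50% more ROPs (96 vs 64)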

To that you can add a plethora of other variables including how each architecture handles tessellation, compression/decompression algorithms and how each architecture is impacted by uncompressible content.
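Since the percentages in the comparison above depend on which card you treat as the baseline, here is a quick sketch of the arithmetic in both directions. The TMU and ROP counts are the ones quoted earlier in this post; the shader core counts (2816 for the 980 Ti, 4096 for the Fury X) are taken from the published specs and aren't stated elsewhere in the thread, so treat them as my assumption. The helper name is illustrative.

```python
# Relative difference between two unit counts, expressed against a baseline.
# Core counts are assumed from published specs; TMU/ROP counts are from the post.

def pct_diff(value: float, baseline: float) -> float:
    """Percentage by which `value` is above (+) or below (-) `baseline`."""
    return (value - baseline) / baseline * 100.0


# (980 Ti / GM200, Fury X / Fiji)
specs = {
    "TMUs": (176, 256),
    "shader cores": (2816, 4096),
    "ROPs": (96, 64),
}

for unit, (gm200, fiji) in specs.items():
    print(
        f"{unit}: 980 Ti vs Fury X {pct_diff(gm200, fiji):+.1f}%, "
        f"Fury X vs 980 Ti {pct_diff(fiji, gm200):+.1f}%"
    )
```

The same gap reads as roughly 45% more when the 980 Ti is the baseline and roughly 31% fewer when the Fury X is, so it pays to say which way round you mean.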

One further note: If you are planning on being condescending and rude, it might pay to actually have the facts on your side. Your knowledge of vRAM/system RAM allocation and GPU architecture isn't very thorough to say the least. What you are presenting is misinformation and in many cases contradictory misinformation. Technical discussions are good, but plastering the thread with vendor specific marketing and assumptions based on little or no factual evidence does nothing to advance the dialogue.