Nvidia quietly launches Titan Black graphics card, rolls out new GeForce drivers

- Thread starter Shawn Knight

- Start date

dividebyzero

Posts: 4,840 +1,271

"I hope my participation doesn't, cause you much pain."

Luckily, your "Incoherent Rambling" quotient is commendably negligible.

Guest

IMHO, if you've got $1000 to spend on a VGA card (for gaming), add some more cash and pick up two 780s.

As for me, compared with the Quadro 6000, the $1K Titan Black could be a solution for people on a limited budget who need that large memory and can utilize its CUDA cores.

Skidmarksdeluxe

Posts: 8,645 +3,290

"A graphics card being worth more, because it does more, is not an opinion.

"That's just it, the Titan is not a gaming card. DBZ has been trying to tell everyone this every time he posts about the Titan. He has commented several times that the Titan is a computing card. It may not be worth **** with Bitcoins, but that is not the only computing project that needs processing power."

Nevertheless, it'll mainly be used as a gaming card, but that's the purchaser's prerogative. I don't know what all the fuss is about whether it's a computing card or gaming card; it's just too damn expensive to warrant purchasing at any rate.

GhostRyder

Posts: 2,151 +588

"Since my best VGA at the moment, is Intel's HD-4000 IGP in my Core-i3, I wouldn't even be allowed to troll in this thread, would I......?"

Don't worry, as long as you don't share an opinion you should be safe from Mr. Opinion Killer.

"Nevertheless it'll mainly be used as a gaming card but that's the purchasers prerogative. I don't know what all the fuss is about whether it's a computing card or gaming card, it's just too damn expensive to warrant purchasing at any rate."

Thank you!

"Do you have some kind of learning disability?"

Do you? Because clearly sharing an opinion warrants 3 paragraphs and pages of insults on a tech forum, apparently.

"Whose talking about the hardware? I was referring to the user base"

Bad punctuation; by your logic I must have made you distraught. I am so sorry for that!

"Aside from the obvious issue that most people dropping a $1000 on a graphics card probably know full well what they're in for, there is also the fact that many people don't even use the card for gaming. I'd actually dare to say that the average GTX Titan buyer might be somewhat more knowledgeable than most given the professional uses the card is put to."

Did I go onto said forums and tell people they are inferior and foolish for buying such a card? No, I only shared an opinion on it being too expensive to warrant a purchase in my eyes, even with a few different features. Whether or not they want to spend the money is up to them; I won't put a lock on someone's pocketbook. But I do have a problem with things like this because, like I had stated earlier, it's falsely labeling this a "Pure Gaming Card".

"I hope my participation doesn't, cause you much pain."

Oh relax @cliffordcooley, we're all friends here.

dividebyzero

Posts: 4,840 +1,271

"IMHO, if you've got $1000 to spend on a VGA card (for gaming), add some more cash and pick up two 780s.

"As for me, compared with the Quadro 6000, the $1K Titan Black could be a solution for people on a limited budget who need that large memory and can utilize its CUDA cores."

That's pretty much the rationale behind the sales. If you're into gaming then a cheaper 780 is the way to go. If you do some pro work + some gaming then you might buy a Titan (or a midrange card + a Quadro). If your workload is pro orientated then you probably buy more than one Titan (and maybe a Quadro for Viewport). When you consider how many cards go into render rigs, individual sales to benchmarkers and gamers start to look fairly insignificant.

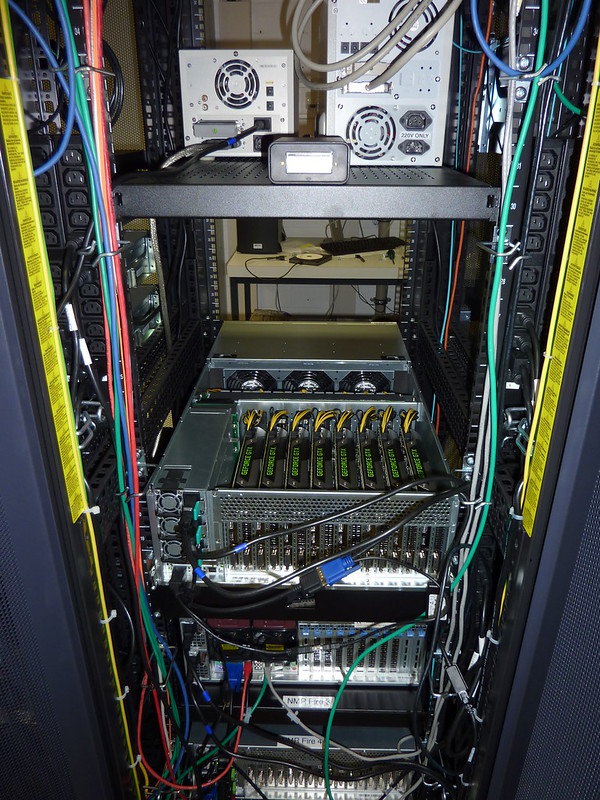

(Tyan FT72B 4U w/ 8 GTX Titans. Workload: Octane).

Eight Titans work out 20% cheaper than two Quadro K6000s.
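For anyone who wants to check that 20% figure, here is a rough back-of-the-envelope sketch. It assumes the $999 Titan MSRP mentioned elsewhere in the thread and simply backs out what each K6000 would have to cost for the claim to hold. The function name is made up for illustration, and real street prices will vary.

```python
def implied_unit_price(cheaper_total, discount, units):
    """Back out the unit price of the pricier option.

    cheaper_total: total cost of the cheaper build (USD)
    discount: fraction by which the cheaper build undercuts the other (0.20 = 20%)
    units: number of cards in the pricier build
    """
    pricier_total = cheaper_total / (1.0 - discount)
    return pricier_total / units


TITAN_MSRP = 999  # USD, assumption taken from the MSRP quoted in the thread
titans_total = 8 * TITAN_MSRP
k6000_each = implied_unit_price(titans_total, 0.20, 2)

print(f"Eight Titans: ${titans_total:,}")
print(f"Implied K6000 price: about ${k6000_each:,.0f} each")
```

On those assumptions the eight Titans come to $7,992, which implies a price of roughly $5,000 per K6000.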

amstech

Posts: 2,661 +1,824

Graham, I am sick of your respectful informative posts that make perfect sense. Stop, or I will start ripping you for being an Nvidia fan boy.

God damnit, I am the only one allowed to be a fanboy on this site and get away with it.

Ahhh hahahaha

dividebyzero

Posts: 4,840 +1,271

"Graham, I am sick of your respectful informative posts that make perfect sense. Stop, or I will start ripping you for being an Nvidia fan boy.

"God damnit, I am the only one allowed to be a fanboy on this site and get away with it.

"Ahhh hahahaha"

I'd never go against the word of "Bob" Dobbs!

theBest11778

Posts: 298 +127

Simply put, Titan is meant for Hobbyists, Enthusiasts, and Small Businesses. Hobbyists that like to dabble in 3D rendering will find $1000 a steal. Enthusiasts don't care about money, they just want the Biggest Fastest thing there is regardless of cost. Small Businesses can take advantage of decent HW at a much cheaper price compared to Quadro line cards. Nvidia's not dumb, nor is Intel with their $1000 Extreme CPUs. There's a market for it. Why not take advantage? If the original Titan didn't sell, this one wouldn't exist. Business 101.

cliffordcooley

Posts: 13,141 +6,441

"Business 101."

Precisely!

I know I'm not gonna spend 1K on the card, but then I don't have a use for it either. I also know I'm not going to belittle those who do have a use, whether it be professional or hobbyist oriented. If someone is willing to spend 100K for a rare Nintendo cartridge, why would I frown upon someone spending 1K for a card they may actually be able to make a living with?

dividebyzero

Posts: 4,840 +1,271

"Simply put, Titan is meant for Hobbiests, Enthusiasts, and Small Businesses. Hobbiests that like to dabble in 3D rendering will find $1000 a steal.."

Sometimes it just comes down to checking all the boxes. Nvidia knows that professional workstation/co-processor cards are already designed around a hardware standard. Existing rackmount units are constrained by power supply limits (2 x 1200-1350W) for full height add-in cards, so the maximum number of GPUs is limited by the 1800-2000W power budget as well as the (up to) eight PCI-E slots afforded by the motherboard. It's no real surprise that the Titan has pretty much the same power draw specification as any top-tier pro card, whether it be Quadro, Tesla, or FirePro.

No need to shell out big bucks for pro cards when some applications (most CG render engines) don't use double precision, and if you're filling server racks with 8, 16, 24, 32+ etc. boards at a time to build a render farm, you're not only saving cash, but getting the maximum workload return whilst staying within the form factor.
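To make the power-and-slots constraint concrete, here is a small sketch of how many cards fit in such a chassis. The 1800-2000W range and the eight slots come from the post; the 250 W per-card figure is my own assumption for a top-tier board, and the function name is purely illustrative. It ignores CPU, drives, and PSU headroom, so treat it as a rough upper bound.

```python
def max_cards(gpu_power_budget_w, pcie_slots, card_power_w):
    """Return how many cards fit, limited by both power and slot count."""
    fit_by_power = gpu_power_budget_w // card_power_w
    return min(pcie_slots, fit_by_power)


CARD_POWER_W = 250  # assumption: nominal per-card board power, not stated in the post
PCIE_SLOTS = 8      # "up to eight PCI-E slots" per the post

for budget_w in (1800, 2000):  # GPU power range quoted in the post
    cards = max_cards(budget_w, PCIE_SLOTS, CARD_POWER_W)
    print(f"{budget_w} W budget -> {cards} cards")
```

With those numbers the low end of the power range caps the rack at seven cards, while the high end lets all eight slots be filled, which is consistent with the eight-Titan chassis shown earlier in the thread.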

GhostRyder

Posts: 2,151 +588

Quoted from Us.Hardware.info

"In addition to gaming benchmarks we also ran various professional GPGPU benchmarks on a single GTX Titan Black and compared the results with the original Titan's, the GeForce GTX 780 Ti and the Radeon R9 290X. Lest we forget: this is the purpose this card was primarily designed for."

GPGPU Applications Benchmarks

GPGPU Benchmarks, Double Precision

Benchmarks look abysmal...

Another Quote

"However, these days the first and only real purpose to buying a Titan is GPGPU, either because you want to run specific, professional Cuda applications or because of a particular need for FP64 precision. In that case, Titans are an affordable alternative to Tesla cards. Speaking of affordable alternative: in regular GPGPU applications, AMD's Radeon R9 290X tends to deliver better performance at a significantly lower price than the Titan. Our findings are confirmed by the popularity of AMD's offering among Litecoin miners and the likes."

Or there's also Tom's Hardware's review of the Titan, since the Black review is not out yet.

"There is a handful of CUDA-optimized titles where the Titan does really well. Blender, 3ds Max, and Octane all show Nvidia's single-GPU flagship with a commanding lead over the GeForce GTX 680 and prior-gen 580. Bottom line: if you're thinking about using a Titan for rendering, check the application you're using first."

It will be interesting to see how much of an improvement there is in these areas, of course.

On a different note, the new GeForce driver is great; I got some nice performance in the updated game specs for Far Cry for whatever reason. Much more stable on my laptop at medium settings, getting around 60FPS.

dividebyzero

Posts: 4,840 +1,271

"Quoted from Us.Hardware.info....[snip]"

Well, I thought it was common knowledge that Nvidia's OpenCL implementation was lacking.

But as Tom's Hardware noted (the link you've attributed to Anandtech):

"Quoted from....[snip]

"Or theres also Anandtechs review of the Titan since the black review is not out yet.

"'There is a handful of CUDA-optimized titles where the Titan does really well. Blender, 3ds Max, and Octane all show Nvidia's single-GPU flagship with a commanding lead over the GeForce GTX 680 and prior-gen 580. Bottom line: if you're thinking about using a Titan for rendering, check the application you're using first.'"

Octane (see previous posts), Blender (see previous posts), V-Ray (see previous posts), and iRay are all optimized for CUDA, and are the pre-eminent CG renderers. AMD obviously doesn't do CUDA, and thus you see the AMD cards not included in the benchmark graph comparison for those render engine benchmarks.

So...

From the Blender FAQ:

"Even though on paper the performance of some of the high end AMD GPU's such as the Radeon HD 7990 match the NVIDIA GTX Titan, software support for OpenCL GPU accelerated rendering is limited. As a result, tests have shown slow performance compared to Nvidia CUDA technology"

and...

"Currently NVidia with CUDA is rendering faster. There is no fundamental reason why this should be so (we don't use any CUDA-specific features), but the compiler appears to be more mature, and can better support big kernels. OpenCL support is still being worked on and has not been optimized as much, because we haven't had the full kernel working yet"

"OpenCL support for AMD/NVidia GPU rendering is currently on hold. Only a small subset of the entire rendering kernel can currently be compiled, which leaves this mostly at prototype. We will need major driver or hardware improvements to get full cycles support on AMD hardware. For NVidia CUDA still works faster"

"AMD - The immediate issue that you run into when trying OpenCL, is that compilation will take a long time, or the compiler will crash running out of memory. We can successfully compile a subset of the rendering kernel (thanks to the work of developers at AMD improving the driver), but not enough to consider this usable in practice beyond a demo."

"NVidia hardware and compilers support true function calls, which is important for complex kernels. It seems that AMD hardware or compilers do not support them, or not to the same extent."

I might also note that the large movie CG design houses tend towards Nvidia for the same reason. Weta Digital (3 hours down the road from me) uses an Nvidia render farm, as does Pixar, amongst others...and of course, being CUDA optimized, supercomputers run Blender pretty well.

In short, while Nvidia's OpenCL performance pretty much sucks, CUDA doesn't, and is faster and more stable than OpenCL whether it's running on Nvidia or AMD hardware.

And lastly, from an independent study (PDF):

"In our tests, CUDA performed better when transferring data to and from the GPU. We did not see any considerable change in OpenCL’s relative data transfer performance as more data were transferred. CUDA’s kernel execution was also consistently faster than OpenCL’s, despite the two implementations running nearly identical code."

"CUDA seems to be a better choice for applications where achieving as high a performance as possible is important. Otherwise the choice between CUDA and OpenCL can be made by considering factors such as prior familiarity with either system, or available development tools for the target GPU hardware."

And....what a difference a few months make...

"AMD's Radeon R9 290X tends to deliver better performance at a significantly lower price than the Titan."

Titan's MSRP is $999, the 290X is $699 for the out of stock cards, and hitting $900 for those in stock.

Looks like the "significant difference" is less significant by the day. Although I guess the silver lining is at least the cards you can't buy are significantly cheaper than those you can buy.

amstech

Posts: 2,661 +1,824

CUDA has turned into a masterpiece, and Borderlands 2 shut the mouths of the PhysX haters/naysayers. Now I just need to sell my U3011 and get a GSync monitor.

You gotta give it to AMD though, their demeanor is vital for the advancement and competition with some of the newer technology. To even say you can battle with Nvidia on certain fronts is nothing to be ashamed about.

GhostRyder

Posts: 2,151 +588

"I might also note that the large movie CG design houses tend towards Nvidia for the same reason. Weta Digital (3 hours down the road from me) uses an Nvidia render farm, as does Pixar, amongst others...and of course, being CUDA optimized, supercomputers run Blender pretty well.

"In short, while Nvidia's OpenCL performance pretty much sucks, CUDA doesn't, and is faster and more stable than OpenCL whether its running on Nvidia or AMD hardware.

"And lastly, from an independent study (PDF)"

It still depends on the application; only a select few applications use Cuda, and those are for the most part all rendering applications.

These are the main 4 I at least hear about:

Lux (OpenCL)

Cycles (CUDA)

Octane (CUDA)

V-Ray RT (OpenCL and CUDA)

Though I have seen these used before:

Redshift (Cuda)

Arion (Cuda)

Thea Presto Engine (Cuda)

FurryBall 4.5 (Cuda)

Brigade (Cuda)

Now as far as Vray is concerned, it's a beta and unstable (openCL mind you); however, the Lux one is not bad from what I have seen and heard, and there's one called ratGPU (openCL), but I've heard that died. But it's a moot point because there is an overabundance of Cuda-based software in the rendering category.

So the point is that for this one main use this card has a decent price to performance ratio, but for everything else it's way overpriced. Unless your sole purpose is rendering, there are many better alternatives out there, which is the problem...

Gaming = 780ti

Compute and OpenCL = 290X

Rendering = Titan Black

Every card can have one task (at least at the top end) that it does well; that does not make it justified on price.

However, that small area is not much to justify the price. The original Titan was priced at 1k. When it was released there was nothing even close except on the dual-GPU spectrum (GTX 690 and later HD 7990), and while it was still expensive, that was because it was all that and a bag of chips (well, except on some of the compute, of course). That was why 1k for a 6GB gaming beast, rendering monster, mediocre compute GPU made more sense: it was one of a kind for a good couple of months. This card has TONS of alternatives in every category except rendering, and most of those people probably already have a Titan and are not going to give it up for a 5-10% performance difference.

"Titan's MSRP is $999, the 290X is $699 for the out of stock cards, and hitting $900 for those instock.

"Looks like the "significant difference" is less significant by the day. Although I guess the silver lining is at least the cards you cant buy are significantly cheaper than those you can buy."

No it's not; the MSRP of the card is $549.99 and that's what it was released at. The sellers (in America mind you, there's an article about it...) are the ones who chose to raise the price because of supply and demand. Amazon has the XFX Double D for as low as $599.99 and some of the 290s are at the $449.99 threshold for MSI (albeit that one is out of stock atm, but orderable, meaning you can lock in that price). That's still up from its launch price, but it's come way down. There are a few retailers trying to squeeze said 900 bucks from people, but there are cheaper alternatives.

dividebyzero

Posts: 4,840 +1,271

"Still depends on the application, only a select few of the Cuda applications which is for the most part all rendering applications."

Some notable additions:

Autodesk 3ds Max (CUDA and OpenCL)

Mental Ray (CUDA) and iRAY (CUDA raytracing)

OptiX and SceniX ( both require professional drivers. Install a cheap Quadro to enable with the Titan)

finalRender (CUDA)

Indigo (CUDA and OpenCL)

Blender (CUDA and OpenCL)

The thing about CUDA is that the plug-ins are compatible across a wide range of applications. Nvidia is first and foremost a software company.

"Now as far as Vray is concerned its a beta and unstable (openCL mind you), however the Lux one is not bad from what I have seen"

V-Ray (CUDA) is stable. SmallLux is the usual choice for the hobbyist using AMD hardware since it works as advertised, but doesn't scale too well from what I've seen.

"Every card can have one task (At least at the top end) that they do well, does not make it justified on price.

"However that small area is not much to justify, Titan (Original) had its price at 1k"

If the only other viable solution is more than $1k then the price is justified. Even if you limit the workload to CG rendering (and dismiss distributed computing and a host of other GPGPU applications and benchmarkers), that still encompasses:

Filmmakers and animators (also includes hobbyists who like to game in their spare time)

3D artists (sculpture, installation, CG)

Architects

Advertisers and Marketing agencies

Design houses (everything from interior, furniture, motorsport, custom fabrication, renovators etc) and pretty much anyone who uses CAD that wants to present a virtualized render of the final design.

Now this may well seem like niche markets- and they are. The GTX Titan isn't anything other than niche.

"This card has TONS of alternatives to every category except rendering and most of those people probably already have Titan and are not going to give it up for a 5-10% performance difference."

You're assuming that the virtualization and other CG market is static. It is not. A quick look at the quantity of CG content in movies, TV, and advertising should provide an inkling that the market is expanding. If you need some numbers to back that viewpoint up, then...

And CG hardware (not to be confused with graphics card sales)

BTW: The Tyan render racks are popular enough for Tyan to build a custom motherboard with 4 PLX 8747 lane extenders to enable full PCI-E 3.0 bandwidth. At $17,000 a rack it is actually very cost effective. Using seven Tesla K20's (+ one cheap Quadro for video out) would triple the cost.

GhostRyder

Posts: 2,151 +588

"V-Ray (CUDA) is stable. SmallLux is the usual choice for the hobbyist using AMD hardware since it works as advertised, but doesn't scale too well from what I've seen."

You didn't read. I said "Now as far as Vray is concerned its a beta and unstable (openCL mind you)". I never said the Cuda portion was unstable; it's the only version used, except for experimentation on OpenCL.

"If the only other viable solution is more than $1k then the price is justified. Even if you limit the workload to CG rendering (and dismiss distributed computing and a host of other GPGPU applications and benchmarkers), that still encompasses:"

Well, this would be just fine if a card nearly half the price did not do most of those needs on equal grounds or better... When we were talking original Titan and there was nothing in that category, then it made much more sense and fit a wider area. This version is only slightly bumped from its predecessor and has become dated in many of the areas that it once dominated, but still stays in the same price bracket.

"Now this may well seem like niche markets- and they are. The GTX Titan isn't anything other than niche."

You're correct, it's a small portion of people that really benefit from the power of the Titan. However, with Titan Black that area has dwindled even further because its value has gone down significantly. Gamers will choose the 780 Ti in more cases over Titan Black, Titan has already sold significant enough numbers (you have even posted pictures of machines with multitudes of those cards inside) that this is nothing that will be bought to replace the current ones, and the compute area is still more closely dominated by AMD for a lower price.

"You're assuming that the virtualization and other CG market is static. It is not. A quick look at the quantity of CG content in movies, TV, and advertising should provide an inkling that the market is expanding. If you need some numbers to back that viewpoint up, then..."

CG has become a fundamental part of T.V. and movies, you are correct in that regard. But the people who already wanted something like this, or wanted an extreme render setup, bought the 5% different Titan over the past 6 months. This one was not hyped up for a reason, and that's pretty apparent when you compare the two and see how the tech has become dated in many of the aspects it once had under its belt. Professional aspects are still apparent, but the group of people who need this, especially given the 1k base asking price, is going to be very small.

I'm just going to stick with this quote from US.Hardware

"However, these days the first and only real purpose to buying a Titan is GPGPU, either because you want to run specific, professional Cuda applications or because of a particular need for FP64 precision. In that case, Titans are an affordable alternative to Tesla cards. Speaking of affordable alternative: in regular GPGPU applications, AMD's Radeon R9 290X tends to deliver better performance at a significantly lower price than the Titan. Our findings are confirmed by the popularity of AMD's offering among Litecoin miners and the likes."

It's got a category it's good at, but that is nowhere near the area the original Titan started in and dominated. That's the last I care to talk about this; I am not the only one who has come to the conclusion that its price is steep for what it is. Unless you want to use it for straight-up Cuda based apps, there are alternatives all around for better prices, from its own side and others.

dividebyzero

Posts: 4,840 +1,271

"You didn't read, I said "Now as far as Vray is concerned its a beta and unstable (openCL mind you). Never said the Cuda portion was unstable, its the only used version except for experimentation on OpenCL."

And I was merely pointing out that the CUDA port was stable to clarify. Please don't nitpick.

"Well this would be just fine if a card nearly half the price did not do most of those needs on equal grounds or better..."

You realize that the Titan (and its Quadro/Tesla brethren) are a success in CG in large part due to the 6GB framebuffer. The larger the framebuffer, the more complex the scene can be, the larger the frame can be, and the easier the scene can be rendered.

Now, can you provide me with the name of the card "nearly half the price" that has a 6GB framebuffer?

Sure, a cheaper card with a smaller framebuffer will do the job...eventually, but cost effectiveness is a product of performance per watt and time to completion of task.

If you believe that framebuffer is not a consideration, then you're mistaken- and as I said earlier, this difference is a fairly crucial market segmentation tool that Nvidia have employed ( AMD has also jumped on this bandwagon with the 12GB S10000 - a board that is basically a HD 7990, yet no 12GB 7990 has been or will ever be made).

"When we were talking original Titan and there was nothing in that category then it made much more sense and fit a wider area. This version is only slighty bumped from its predecessor."

Doesn't matter. There are new systems being built every day. What do you think that these new systems are going to be fitted with: the old Titan (likely EOL in any case) or a faster Titan Black at the same price?

"However with Titan black that area has dwindled even further down because its value has gone down significantly."

No it hasn't. The graphs and all the literature point to a growing market in CG, and whilst framebuffer is king (along with code compatibility for the fastest render) the Titan/Titan Black rules by default.

Don't believe a framebuffer can have that much effect on efficiency for a GPU render...

The Quadro K6000 is clocked at least 12% lower than the GTX 780 (most renders peg the GPU at or near 100%), has 25% more shaders and the same bandwidth/memory frequency, yet the speed up over the 780 is in the order of 300%. The difference? 12GB of framebuffer per GPU versus 3 for the 780.
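The arithmetic behind that comparison can be sketched as follows. It uses only the figures in the post (12% lower clock, 25% more shaders, 12GB versus 3GB) and assumes, naively, that render throughput scales linearly with clock speed and shader count. The function name is illustrative only.

```python
def expected_speedup(clock_change, shader_change):
    """Naive compute-only speedup: throughput ~ clock x shader count."""
    return (1.0 + clock_change) * (1.0 + shader_change)


# Figures from the post: K6000 relative to GTX 780
compute_only = expected_speedup(-0.12, 0.25)
framebuffer_ratio = 12 / 3  # GB per GPU, K6000 vs 780

print(f"Expected from clocks and shaders alone: {compute_only:.2f}x")
print(f"Framebuffer ratio: {framebuffer_ratio:.0f}x")
```

Under that assumption, clocks and shaders alone would predict only about a 1.1x advantage, so nearly all of the reported speed-up has to come from something else; the 4x larger framebuffer is the obvious candidate.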

"Gamers will choose 780ti in more cases over Titan black, Titan has already sold significant enough numbers (You have even posted pictures of machines with multitudes of those cards inside) that this is nothing that will be bought to replace the current ones"

I think the gaming aspect of sales for the Titan Black has already been covered and is not in dispute. As far back as Post #8 I said:

"The card isn't aimed at gamers - at least not 99.99% of them."

"and the compute area is still more closely dominated by AMD for a lower price."

Again, it depends upon workload. You certainly don't see many AMD GPU render farms.

"CG has become a fundamental part of T.V. and movies, you are correct in that regard. But the people who already wanted something like this or to have an extreme render setup bought the 5% different titan around the past 6 months."

So, even though CG hardware is predicted to grow by $US5 billion a year, and the Titan is one of the most cost effective solutions, you don't see anyone else buying it from now on? Well, everyone is entitled to their opinion I guess. Maybe I'll just add new posts whenever a new Titan-based render system goes live. If you're right this thread will die a death; if not, I guess the thread will get a few bumps.

"Professional aspects are still apparent, but the group of people this is needed especially viewing the 1k base asking price is going to be very low."

The unit sales for any $1K card are very low.

GK110's successor (GM200) isn't slated to arrive until early 2015.

If your workload thrives on CUDA and requires a large framebuffer then GK110 is the choice by default. This is a prime example of Nvidia's hand-in-glove approach to market segmentation, hardware/software ecosystem, and strategic marketing. It is also the reason that Nvidia continue to produce revenue, and why they own 80+% of the professional graphics business.

If the GPU was going to be quickly supplanted then they wouldn't have introduced the K6000, K40, and Titan Black. Nvidia need sales of these SKU's to recoup the investment of putting them into the market.

Manufacturers don't tend to replace a new top-tier GPU/board within a year of its introduction, and especially not when the GPU is linked to professional cards. If you think that this is an Nvidia-only trait, I'd ask you where the Hawaii based FirePro are.

Now, as far as I can see, your main problem with the card seems to stem from how it is being marketed. You point out an OEM's advertising:

"But I do have a problem with things like this because like I had stated earlier its falsely labeling this an "Pure Gaming Card"."

Now, I ask you, if you had a product that competes in a number of arenas, why wouldn't you direct ad campaigns at every demographic it could possibly appeal to?

Secondly, I'd query why this is so abhorrent to you, yet you openly support a company whose PR and marketing have just as bad a (or worse) record, including a continuing string of suspect issues stemming from their CPU claims, which began with completely spurious Barcelona marketing and ran through to the guerrilla marketing to pre-sell 900 series chipset boards and Bulldozer.

Personally I don't see much ethically wrong with marketing a product if it does what is claimed, even if it is expensive. I do see something ethically wrong in marketing fictitious products, and deliberately overestimating performance to pre-sell a product.

Guest

@/0

totally agree with your points (no way ill not)

Just a question:

What about the Mac Pro?

Why not nVidia inside atm?

thanx

dividebyzero

Posts: 4,840 +1,271

"@/0 totally agree with your points (no way ill not). Just a question: What about the Mac Pro? Why not nVidia inside atm? thanx"

From my understanding, it seems like price is a major factor: both the price of the end product and AMD's willingness to customize the cards for Apple.

The FirePro D500 is basically a HD 7870XT (Tahiti LE) with the full Tahiti 384-bit bus width. No strict analogue exists in AMD's standard FirePro line, but it is between the W7000 ($800 at Newegg / $720 cheapest price found) and W8000 ($1400 at Newegg / $1214 cheapest found). The D700 upgrade for the Pro is a custom W9000 ($3400 at Newegg / $3241 cheapest price found)

A quick look at the Mac Pro configuration page says that you can upgrade from dual D500's to dual D700's for $600. That would be over a $4000-5000 difference if buying the standard FirePro workstation cards. In effect, AMD are selling professional QA'ed boards to Apple at little more than (and probably less if factoring in Apple's profit margins) consumer graphics prices. The only upside of a contract like this is PR linking the brand to Apple.
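The "$4000-5000" estimate can be reproduced from the prices quoted above. This is only a sketch: it treats the D700 as priced like a W9000 and brackets the D500 between the W7000 and W8000, exactly as the post does, and the helper name is made up for illustration.

```python
# Prices quoted in the post (USD): (Newegg price, cheapest price found)
W7000 = (800, 720)
W8000 = (1400, 1214)
W9000 = (3400, 3241)
APPLE_UPGRADE = 600  # dual D500 -> dual D700 upgrade price, per the post


def dual_card_gap(lower, upper):
    """Price gap for two cards when stepping up from `lower` to `upper`."""
    return 2 * (upper - lower)


gaps = {}
for label, idx in (("Newegg", 0), ("cheapest", 1)):
    # The D500 sits between the W7000 and W8000, so bracket it with both.
    low = dual_card_gap(W8000[idx], W9000[idx])
    high = dual_card_gap(W7000[idx], W9000[idx])
    gaps[label] = (low, high)
    print(f"{label}: retail gap ${low}-${high} vs Apple's ${APPLE_UPGRADE}")
```

Either way the retail gap for two cards lands between roughly $4,000 and $5,200, against the $600 Apple charges for the same step up.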

dividebyzero

Posts: 4,840 +1,271

"I was looking for the main reason - AMD vs nVidia (or FirePro vs Kepler/Tesla)

"you pointed a lot but missed the Apple point of view"

Apple have always maintained the same strategy with their ODM/OEM partners. Get the component as cheap as possible, and don't let one vendor get too comfortable.

Apple have a record of alternating between Nvidia and AMD which is likely a deliberate strategy to keeping them in line.

As to why FirePro over Tesla/Quadro ? Why not ? Apple have their own software ecosystem that doesn't really leverage CUDA to any great extent for the Mac's target audience (Final Cut excepted AFAIK). As far as I'm aware, Adobe are also pushing OpenCL over CUDA these days also. If Apple are leaning toward OpenCL performance then the FirePro makes sense, as does AMD's willingness to cut Apple a sweetheart deal on pricing and semi-custom SKUs.

Well, the pointless VRAM race is on

9th March: Sapphire show a 8GB 290X...

24th March: EVGA leak 6GB 780/780Ti

The vendor specific AIB GDDR5 race!

Well, I didn't expect any AIB was deranged enough to think an 8GB gaming graphics card was a marketable proposition, but I guess once it arrived the response was predictable

Name one company that has a 6GB 780 let alone a 6GB 780 Ti ? There isn't one. There won't be one unless AMD allow vendor 8GB 290X's and Nvidia changes its stance.
