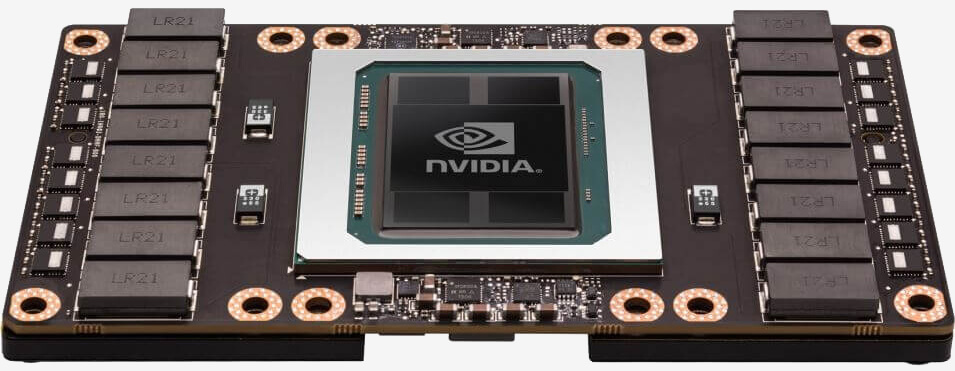

Nvidia's first Pascal-based graphics card isn't a GeForce SKU for consumers; instead it's the Tesla P100, a high-performance compute (HPC) card with a brand new GP100 GPU on-board.

The Tesla P100 is an incredibly powerful card, boasting 10.6 TFLOPs of single-precision performance, 5.3 TFLOPs of double precision, and a whopping 21.2 TFLOPs of half precision. This is a huge performance increase over Nvidia's past single and even dual-GPU compute cards: the Telsa M40, for example, used a fully-unlocked Maxwell GM200 GPU and achieved just 6.8 TFLOPs of single/half precision performance, and just 213 GFLOPs of dual precision.

The GP100 GPU is built on a TSMC 16nm FinFET process, and with 15.3 billion transistors on-die, it has a rated TDP of 300W. The Tesla P100 uses a partially-disabled version of this GPU, featuring just 56 of 60 SMs in a working state, leaving the card with 3,584 CUDA cores. This core is clocked at 1,328 MHz with boost clocks up to 1,480 MHz.

As for memory, Nvidia has loaded this card with 16 GB of HBM2 clocked at 1.4 Gbps, providing a huge 720 GB/s of bandwidth. Unlike with AMD's first-generation HBM graphics cards, Nvidia has been able to include more than 4 GB of memory thanks to HBM2's lack of restrictions in this department.

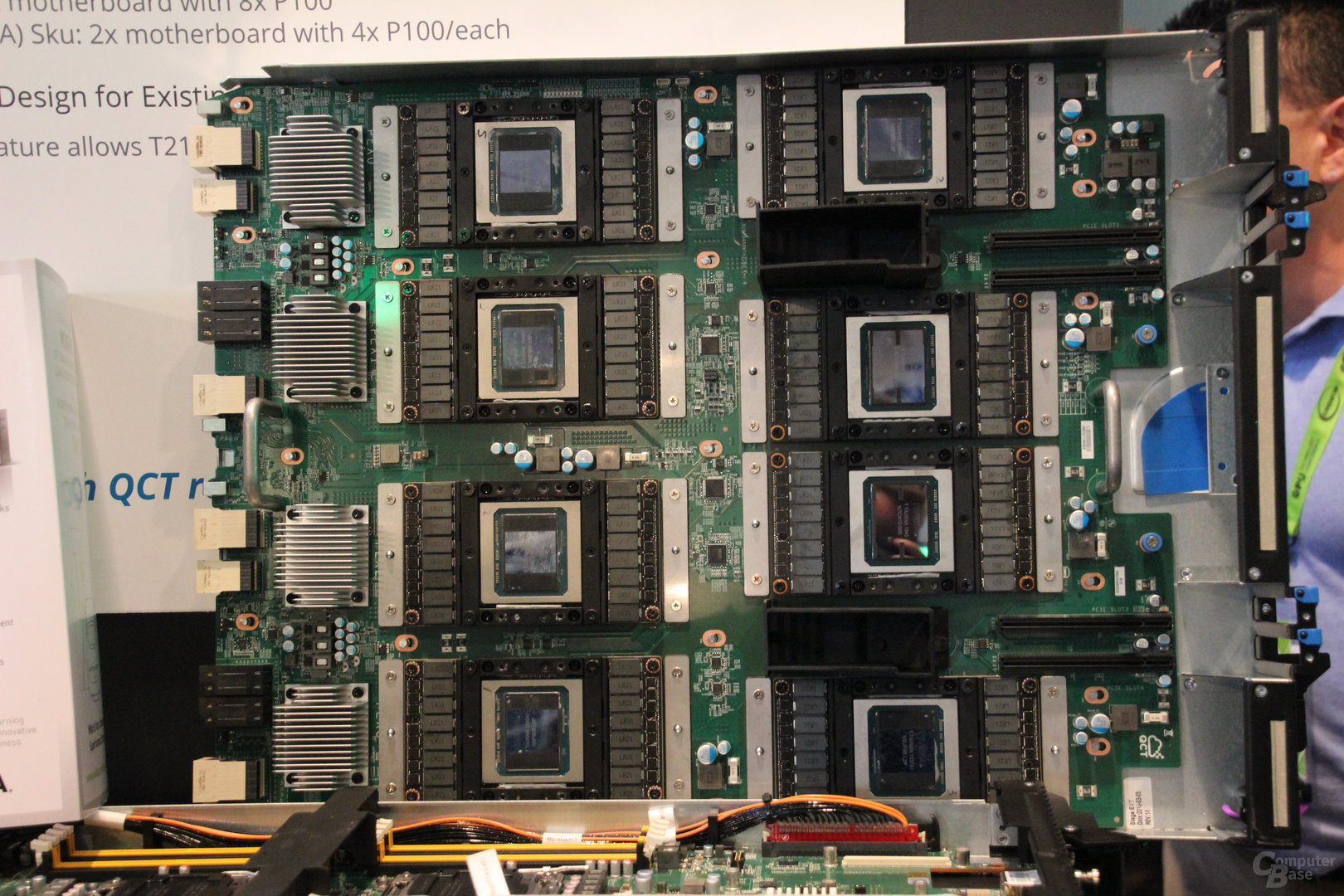

Nvidia is already volume producing the Tesla P100 for use in systems like its very own DGX-1, which will be available in June, as well other systems from IBM, Dell and Cray.

It could be some time before we see the GP100 transition from Telsa cards to GeForce due to cost and production concerns. However we do now know that Pascal can scale to incredibly powerful GPUs, and this could make the next few releases of consumer cards very exciting.

https://www.techspot.com/news/64351-nvidia-first-pascal-gpu-tesla-p100-hpc.html