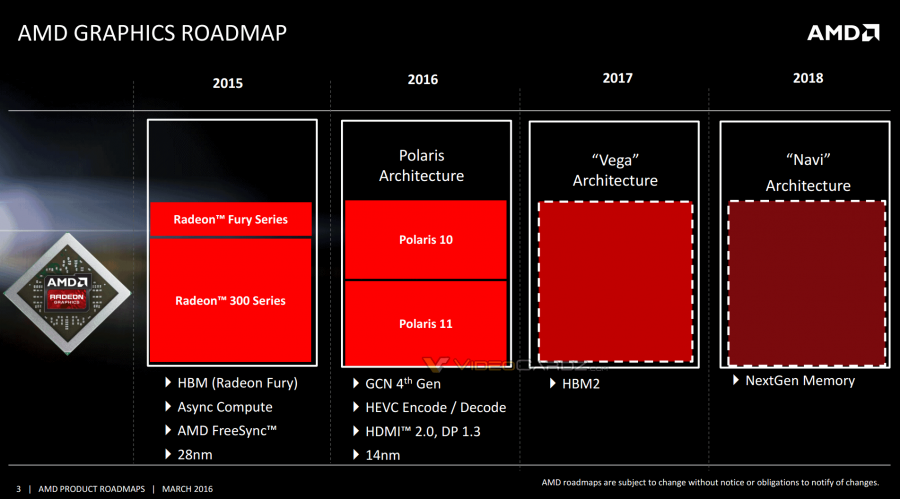

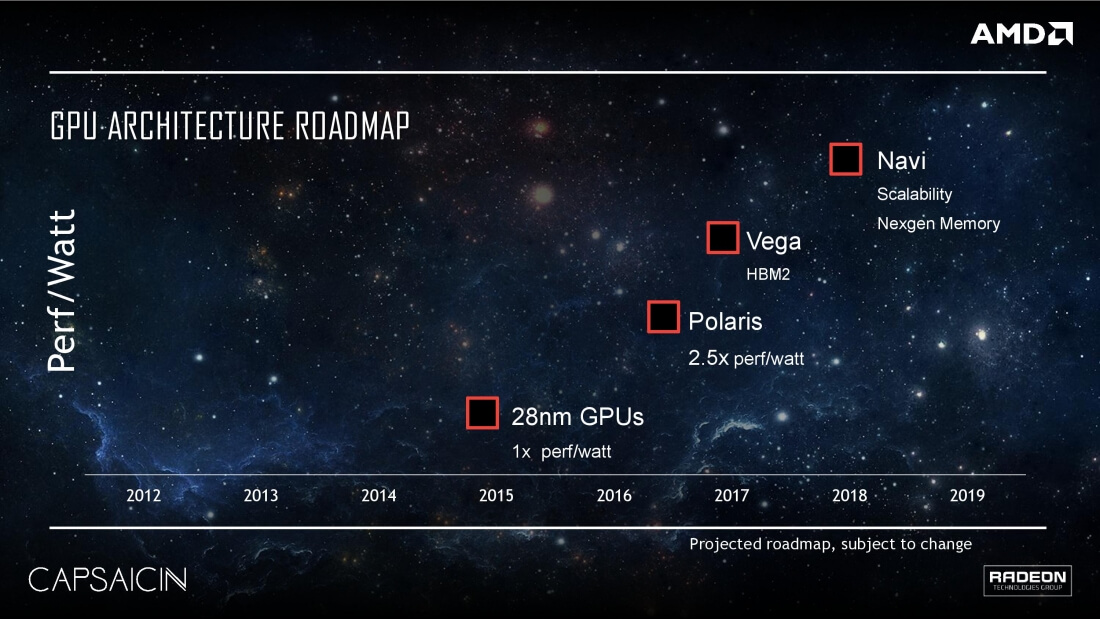

A shaky report from 3DCenter suggests that AMD has pulled the launch of their next high-end GPU, codenamed 'Vega', forward from a scheduled early 2017 release to October of this year.

There are suggestions that this move has been made in response to Nvidia's GeForce GTX 1080 and GTX 1070, which target the high-end segment of the graphics card market. With AMD's upcoming Polaris GPUs expected to target mainstream PC gamers with lower-cost cards, the company may not want to be without competitive high-performance products until next year.

As The Tech Report notes, these reports have been cobbled together from a variety of sources, including a SemiAccurate post that said Vega has already been shown behind closed doors, and an update to AIDA64 that included "preliminary GPU information for AMD Vega 10 (Greenland)."

3DCenter seems to believe that Vega 10 will be a direct competitor to Nvidia's GP104 silicon, debuting with HBM2 and a large array of shader cores. However, the faster version of Vega, named Vega 11 (which is strange considering Polaris 11 is expected to be slower than Polaris 10), is still rumored to launch in early 2017 to compete with Nvidia's fully unlocked Pascal GPU.

Whether or not this rumor proves true, AMD will be revealing details of their Polaris architecture shortly ahead of Computex later this month.

https://www.techspot.com/news/64797-report-suggests-amd-has-pulled-vega-gpu-launch.html