It's hard to believe 15 years have passed since I first tested the Pentium 4 series. I honestly don't remember much about my time with the P4 range, if not because of its age, then because it was a pretty rubbish series. I do, however, have many fond memories of testing the Core 2 Duo series.

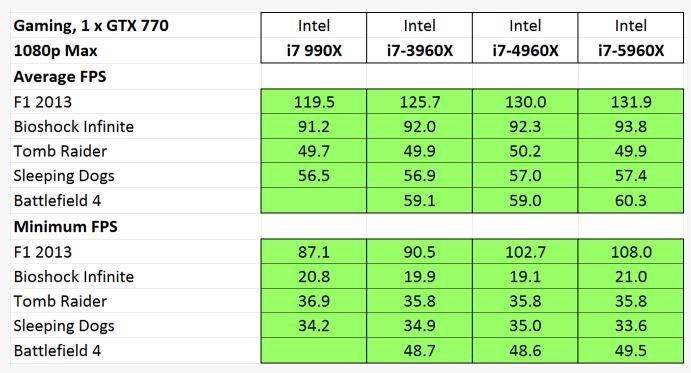

Six long years after the Pentium 4, we reviewed the first-generation Core 2 Duo processors. Today we are going to look back at the Core 2 Duo and Core 2 Quad CPUs and compare them to the Nehalem-based Core i5-760 and Core i7-870, the Sandy Bridge Core i5-2500K and Core i7-2700K, and the current-generation Haswell Celeron, Pentium, Core i3, Core i5 and Core i7 parts.