Once the bugs have been ironed out, the features are released to the stable stream, and every few weeks all the streams are refreshed. You can follow all three streams if you want, but please don't entrust valuable data to anything other than the stable stream.

What's New

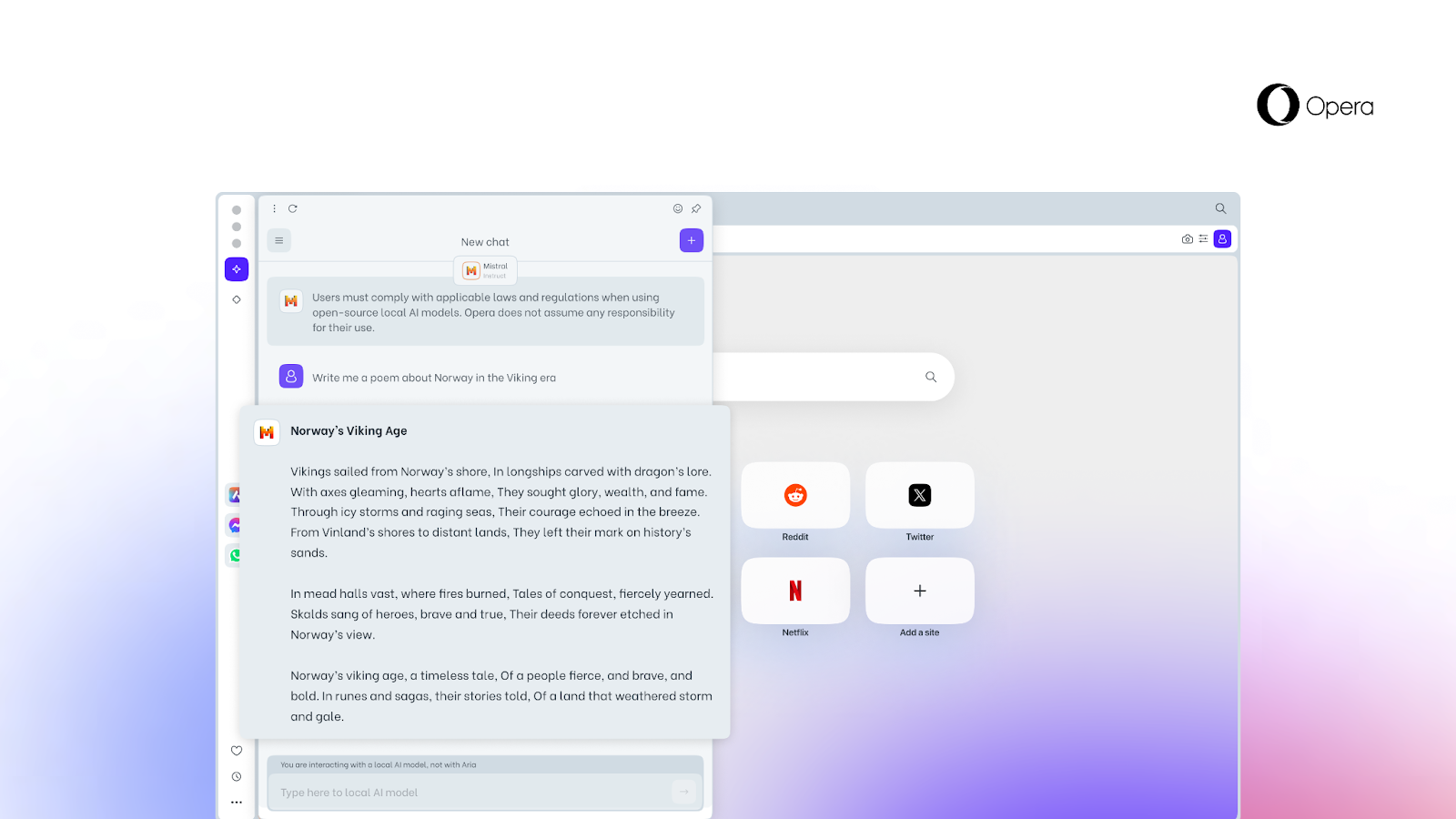

Opera One Developer becomes the first browser with built-in local LLMs – ready for you to test

Using a Large Language Model (LLM) typically requires sending data to a server. Local LLMs are different: they process your prompts directly on your machine, so the data you submit to a local LLM never leaves your computer.

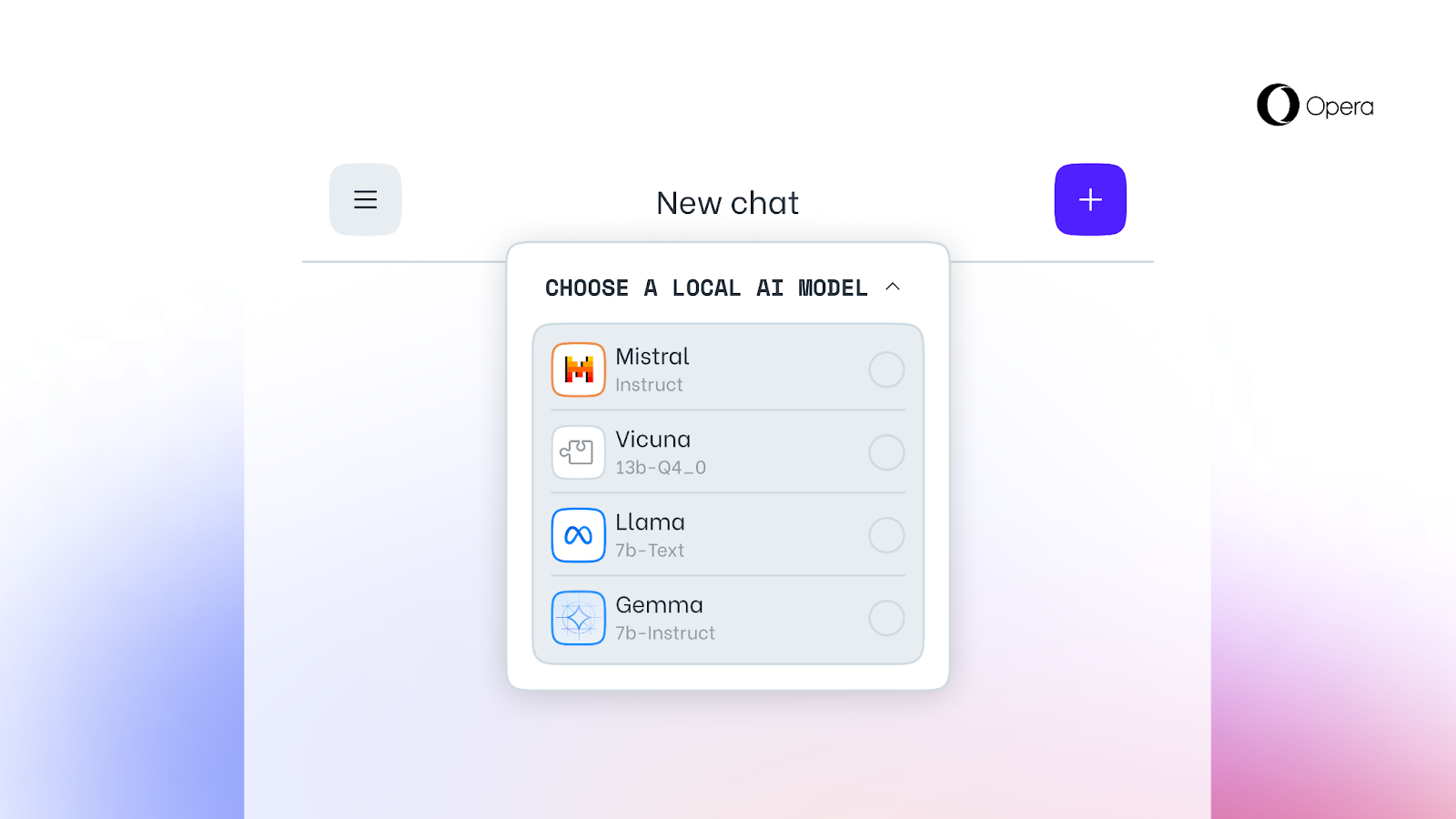

Today, as part of our AI Feature Drops program, we are adding experimental support for roughly 150 local LLM variants from about 50 model families to our browser. This marks the first time local LLMs can be easily accessed and managed from a major browser through a built-in feature. Among them, you will find:

- Llama from Meta

- Vicuna

- Gemma from Google

- Mixtral from Mistral AI

- And many more families

The models are available starting today in the developer stream of Opera One.

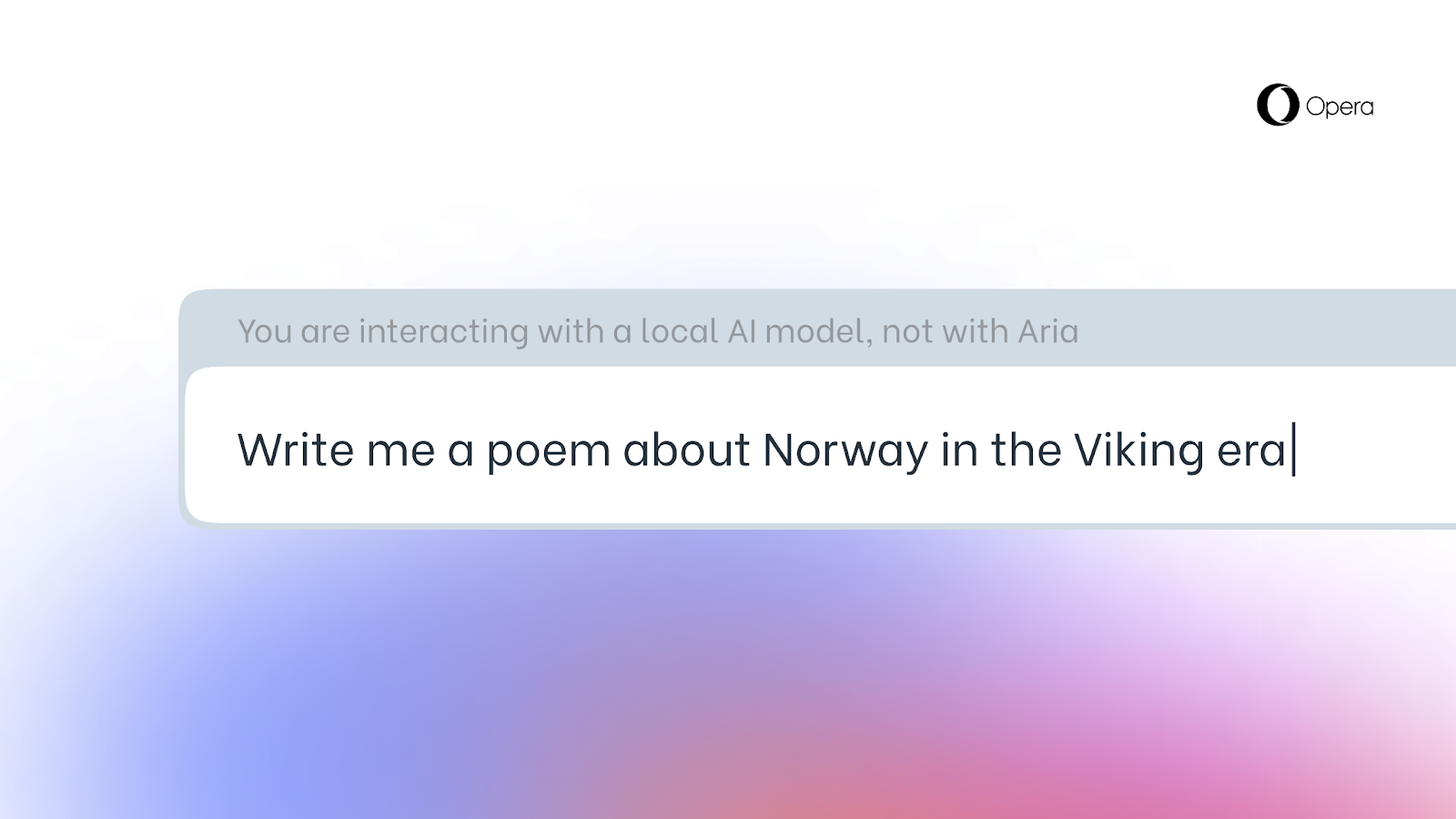

Using local LLMs means users' data stays on their device, so they can use AI without sending information to a server. We are testing this new set of local LLMs in the developer stream of Opera One as part of our AI Feature Drops program, which allows you to test early, often experimental versions of our AI feature set.

Starting today, the Opera One Developer community can choose which model processes their input, which benefits early adopters who may prefer one model over another. This is so bleeding-edge that it might even break. But innovation wouldn't be fun without experimental projects, would it?

Testing local LLMs in Opera Developer

Have we got your attention yet? To test the models, upgrade to the newest version of Opera Developer and do the following:

- Open the Aria Chat side panel (as before)

- At the top of the chat, open the drop-down labeled "Choose local mode"

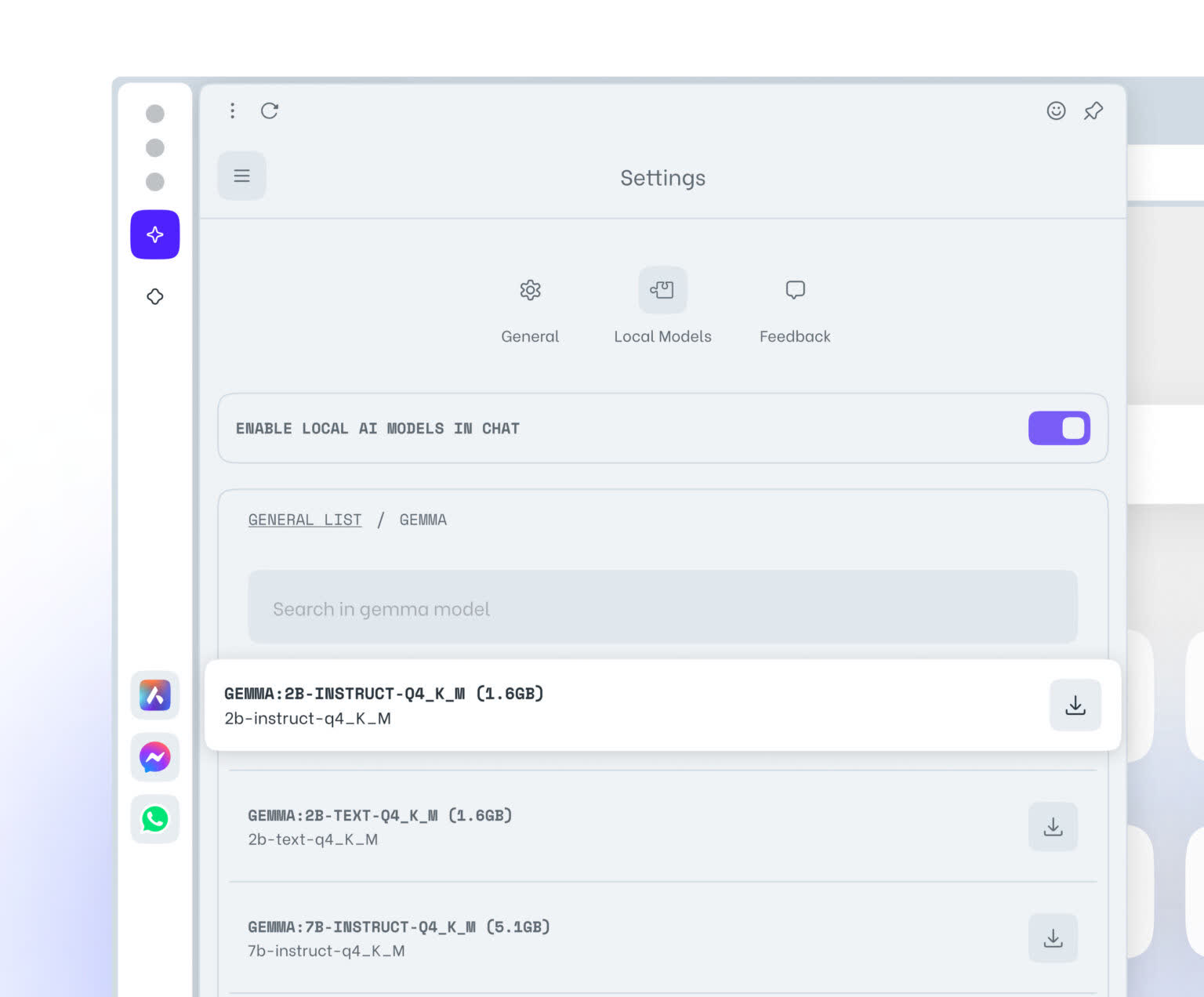

- Click "Go to settings"

- Here you can search and browse the models you want to download. For example, download GEMMA:2B-INSTRUCT-Q4_K_M, one of the smaller and faster models, by clicking the download button on its right

- When the download is complete, click the menu button at the top left and start a new chat

- At the top of the chat, open the "Choose local mode" drop-down again

- Select the model you just downloaded

- Type a prompt in the chat, and the local model will answer

Choosing a local LLM downloads it to your machine. Please be aware that each model requires between 2 and 10 GB of local storage space, and that a local LLM is likely to produce output considerably more slowly than a server-based one, because its speed depends on your hardware's computing capabilities. The local LLM will be used instead of Aria, Opera's native browser AI, until you start a new chat with Aria or simply switch Aria back on.
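As a rough illustration of where those storage figures come from, the download size of a quantized model can be approximated as its parameter count multiplied by the number of bits stored per weight, divided by 8 to get bytes. The sketch below is only a ballpark estimate under stated assumptions: the helper name `estimate_model_size_gb` is hypothetical, it assumes every weight uses the same number of bits (around 4.5 is a loose assumption for 4-bit quantizations such as Q4_K_M; 16 corresponds to unquantized half-precision weights), and it ignores file metadata and tensors kept at higher precision.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough on-disk size of a model in gigabytes (10**9 bytes).

    Hypothetical helper: assumes every weight is stored with the same
    number of bits and ignores metadata and mixed-precision tensors.
    """
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("parameter count and bits per weight must be positive")
    total_bits = params_billions * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9  # bits -> bytes -> gigabytes


if __name__ == "__main__":
    # Assumed ~4.5 bits per weight for a 4-bit quantization (an approximation).
    for name, params in [("7B model", 7.0), ("13B model", 13.0)]:
        print(f"{name}: ~{estimate_model_size_gb(params, 4.5):.1f} GB")
```

Under these assumptions, a 7-billion-parameter model comes out at roughly 3.9 GB and a 13-billion-parameter model at roughly 7.3 GB, within the range quoted above; actual files will differ somewhat.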

With this feature, we are starting to explore some potential future use cases. What if the browser of the future could rely on AI solutions based on your historical input while keeping all of the data on your device? Food for thought. We'll keep you posted about where these explorations take us.

Interesting local LLMs to explore

One interesting local LLM to explore is Code Llama, an extension of Llama aimed at generating and discussing code, with a focus on making developers more efficient. Code Llama is available in three sizes: 7, 13, and 34 billion parameters. It supports many widely used programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash.

Variations:

- instruct – fine-tuned to generate helpful and safe answers in natural language

- python – a specialized variation of Code Llama further fine-tuned on 100B tokens of Python code

- code – base model for code completion

Phi-2, released by Microsoft Research, is a 2.7B-parameter language model with outstanding reasoning and language-understanding capabilities. Phi-2 is best suited for prompts in question-answering, chat, and code formats.

Mixtral is designed to excel at a wide range of natural language processing tasks, including text generation, question answering, and language understanding. Key benefits: performance, versatility, and accessibility.

We will of course keep adding more interesting models as they appear in the AI community.

Release Notes:

- CHR-9215 Update Chromium on master to 112.0.5596.2

- DNA-103304 The search bar calculator cannot calculate multiplying/dividing negative or positive numbers by negative numbers correctly

- DNA-105341 Make it possible to disable opera_feature_* defined in args_default.gni to true

- DNA-105807 Show embedded YouTube video on Start Page

- DNA-105816 Animate tab removing

- DNA-105907 [Tab Islands] Tab island handle clickable hotspot should be expanded

- DNA-105955 Use Wallpapers API for displaying images and videos

- DNA-105956 Place wallpaper iframe on React Start Page

- DNA-105996 [add-site-modal] autocomplete inserts full URL inside current URL when editing inside

- DNA-105998 Crash at opera::BrowserSidebarItemViewViews::ShowTooltip()

- DNA-106003 Show 'new tab in island' button on top of tab island edge

- DNA-106051 [Tab Islands] Crash when creating more than 8 tab islands in a workspace

- DNA-106081 Shuffle button doesn't work for current date wallpaper

- DNA-106103 Mechanism for additional scopes and extensions mapping

- DNA-106104 Update API versioning

- DNA-106128 Verify updated audio hashes in PipelineIntegrationRegressionTest

- DNA-106129 PipelineIntegrationRegressionTest.BasicPlayback/2 fails

- DNA-106168 EasySetup update

- DNA-106194 [Mac] Support for preinstalled extensions in Mac installer

- DNA-106207 Crash on duplicating a pinned tab

- DNA-106232 [Tab strip][Drag&Drop] Do not attempt to drag tab when it's clicked

- DNA-106238 Use goma on stable Windows builds

- DNA-106299 Enable pre-installing extensions + increment protocol version to v5

- DNA-106300 Fix rule for generating archive_browser_sym_files on crossplatform builds

- DNA-106313 [Tab Island] Add tab in tab island is causing tab strip content to shift when hovering over tab island

- DNA-106345 Crash on calling getRichWallpaper

- DNA-106355 Rich hints fail to compile

- DNA-106372 [Tab islands] Empty space between active tab and stacked tabs element

- DNA-106373 Use ARM nodejs binaries

- DNA-106384 Fix path resolving in optimize_webui.py

- DNA-106387 Animate tab removing within tab island

- DNA-106395 [accessibility] Tab island handle button not visible in accessibility tree

- DNA-106405 Fix crash related to getAccessToken

- DNA-106408 [Tab islands] DCHECK invoked on context menu if pinned tabs are present

- DNA-106412 Content of popup not generated for some extensions when using more than one workspace

- DNA-106416 Allow chrome://rich-wallpaper to be embedded in startpage and gx corner

- DNA-106433 Extend Easy Setup API