Ever stop and wonder just how much data flows through the miraculous series of tubes we know and love as the Internet? Three UC San Diego scientists set out to answer that question last year by analyzing the total work capacity of servers around the globe, and their findings were published in a report this week (PDF).

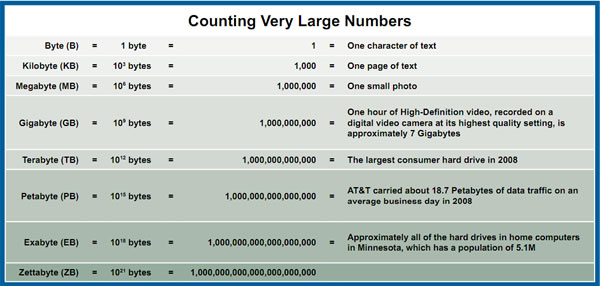

According to their estimate, the world's servers processed 9.57 zettabytes (ZB) of information in 2008. To convey the magnitude of such a number, the report compares 9.57ZB to a stack of copies of Stephen King's longest novel (2.5MB of data each) reaching from Earth to Neptune and back 20 times. Even that figure is likely an underestimate, since it excludes the private servers built by Google, Microsoft and others.
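For a rough sense of scale, the snippet below works out how many copies of a 2.5MB novel fit into 9.57ZB. It is a back-of-the-envelope sketch that assumes decimal (SI) units, where 1ZB = 10^21 bytes and 1MB = 10^6 bytes. The report may have used different conventions. Integers are used so the result is exact.

```python
# Back-of-the-envelope sketch; assumes decimal (SI) units, which the report may not use.
MEGABYTE = 10**6           # bytes in 1 MB (SI assumption)

# 9.57 ZB written as an exact integer: 957 * 10**19 bytes.
server_bytes_2008 = 957 * 10**19
# 2.5 MB written as an exact integer: 25 * 10**5 bytes.
novel_bytes = 25 * MEGABYTE // 10

# Number of whole novels that fit into the 2008 server workload.
copies = server_bytes_2008 // novel_bytes
print(f"{copies:,} copies")
```

Under those assumptions, that works out to roughly 3.8 quadrillion copies of the book.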

No matter how you slice it, it's incredibly difficult to conceptualize. "Most of this information is incredibly transient: it is created, used, and discarded in a few seconds without ever being seen by a person," said co-author Roger Bohn. "It's the underwater base of the iceberg that runs the world that we see."

Server sales totaled $53.3 billion in 2008, on par with the previous four years. Over the same period, server performance increased five- to eightfold. Entry-level servers costing less than $25,000 processed about 65% of the world's information in 2008, mid-range servers crunched about 30%, and high-end servers costing more than $500,000 handled the remaining 5%.