I want to warn you, there is some thick background information here first. But don't worry. I'll get to the meat of the topic and that's this: Ultimately, I think that PCIe cards will evolve to more external, rack-level, pooled flash solutions, without sacrificing all their great attributes today. This is just my opinion, but other leaders in flash are going down this path too...

I've been working on enterprise flash storage since 2007 - mulling over how to make it work. Endurance, capacity, cost and performance have all been concerns we've had to grapple with. Of course the flash is changing too as the nodes change: 60nm, 50nm, 35nm, 24nm, 20nm... and single level cell (SLC) to multi level cell (MLC) to triple level cell (TLC) and all the variants of these "trimmed" for specific use cases. The spec "endurance" has gone from 1 million program/erase (PE) cycles to 3,000, and in some cases 500.

It's worth pointing out that almost all the "magic" that has been developed around flash was already scoped out in 2007. It just takes a while for a whole new industry to mature. Individual die capacity increased, meaning fewer die are needed for a solution - and that means less parallel bandwidth for data transfer... And the "requirement" for state-of-the-art single operation write latency has fallen well below the write latency of the flash itself. (What the ?? Yeah - I'll talk about that later in some other blog. But flash has ~1500µs write latency, where state-of-the-art flash cards are at ~50µs.) When I describe the state of technology it sounds pretty pessimistic. I'm not. We've overcome a lot.

We built our first PCIe card solution at LSI in 2009. It wasn't perfect, but it was better than anything else out there in many ways. We've learned a lot in the years since - both from making them, and from dealing with customers and users - both of our own solutions and our competitors'. We're lucky to be an important player in storage, so in general the big OEMs, large enterprises and the mega datacenters all want to talk with us - not just about what we have or can sell, but what we could have and what we could do. They're generous enough to share what works and what doesn't, what the values of solutions are and what the pitfalls are too. Honestly? It's the mega datacenters in the lead both practically and in vision.

If you haven't nodded off to sleep yet, that's a long-winded way of saying - things have changed fast, and, boy, we've learned a lot in just a few years.

Most important thing we've learned...

Most importantly, we've learned it's latency that matters. No one is pushing the IOPS limits of flash, and no one is pushing the bandwidth limits of flash. But they sure are pushing the latency limits.

PCIe cards are great, but...

We've gotten lots of feedback, and one of the biggest things we've learned is - PCIe flash cards are awesome. They radically change the performance profiles of most applications, especially databases, allowing servers to run efficiently and the actual work done by each server to multiply 4x to 10x (and in a few extreme cases 100x). So the feedback we get from large users is "PCIe cards are fantastic. We're so thankful they came along. But..." There's always a "but," right??

It tends to be a pretty long list of frustrations, and they differ depending on the type of datacenter using them. We're not the only ones hearing it. To be clear, none of these are stopping people from deploying PCIe flash... the attraction is just too compelling. But the problems are real, and they have real implications, and the market is asking for real solutions.

- Stranded capacity & IOPS

  - Some "leftover" space is always needed on a PCIe card. Databases don't do well when they run out of storage! But you still pay for that unused capacity.

  - All the IOPS and bandwidth are rarely used - sure, latency is met, but there is capability left on the table.

  - Not enough capacity on a card - it's hard to figure out how much flash a server/application will need, and there is no flexibility. If my working set goes one byte over the card capacity, well, that's a problem.
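To put rough numbers on the stranded capacity point, here's a minimal sketch. Everything in it is an assumption for illustration: the card sizes, the per-server working sets, and the helper names `smallest_card_that_fits` and `stranded_capacity_gb` are made up, not taken from any real product or deployment.

```python
# Illustrative only: the card sizes and working sets below are made-up numbers.
CARD_SIZES_GB = [800, 1600, 3200]  # hypothetical card capacities on offer


def smallest_card_that_fits(working_set_gb):
    """Return the smallest hypothetical card that holds the working set."""
    for size in sorted(CARD_SIZES_GB):
        if working_set_gb <= size:
            return size
    raise ValueError("working set is larger than the biggest card")


def stranded_capacity_gb(working_sets_gb):
    """Flash bought but unused when every server gets its own card."""
    bought = sum(smallest_card_that_fits(ws) for ws in working_sets_gb)
    return bought - sum(working_sets_gb)


working_sets = [500, 900, 1700, 300]  # GB of hot data per server (assumed)
bought = sum(smallest_card_that_fits(ws) for ws in working_sets)
print(f"per-server cards bought: {bought} GB")
print(f"hot data actually held:  {sum(working_sets)} GB")
print(f"stranded on the cards:   {stranded_capacity_gb(working_sets)} GB")
```

In this toy model a working set that grows from 800 GB to 801 GB forces a jump to the next card size, which is the "one byte over" problem. Shared storage would still need headroom, but it would be one shared margin rather than a separate margin locked inside every server.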

- Stranded data on server failure

  - If a server fails, all that valuable hot data is unavailable. Worse - it all needs to be reconstructed when the server does come back online, because it will be stale. It takes quite a while to rebuild 2TB of interesting data. Hours to days.

- PCIe flash storage is a separate storage domain vs. disks and boot.

  - You have to explicitly manage LUNs and move data to make it hot.

  - You often have to manage it via different APIs and management portals.

  - Applications may even have to be rewritten to use different APIs, depending on the vendor.

- Depending on the vendor, performance doesn't scale.

  - One card gives awesome performance improvement. Two cards don't give quite the same improvement.

  - Three or four cards don't give any improvement at all. Performance maxes out somewhere below 2 cards. It turns out drivers and server onloaded code create resource bottlenecks, but this is more a competitor's problem than ours.

- Depending on the vendor, performance sags over time.

  - More and more computation (latency) is needed in the server as flash wears and needs more error correction.

  - This is more a competitor's problem than ours.

-

It's hard to get cards in servers.

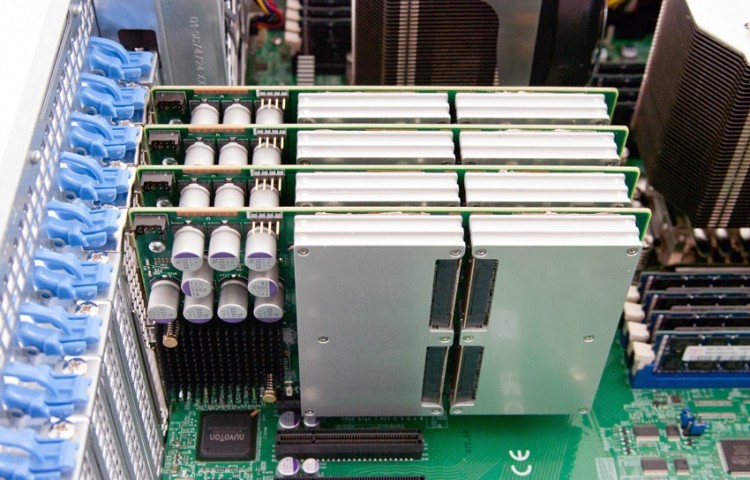

- A PCIe card is a card - right? Not really. Getting a high capacity card in a half height, half length PCIe form factor is tough, but doable. However, running that card has problems.

- It may need more than 25W of power to run at full performance - the slot may or may not provide it. Flash burns power proportionately to activity, and writes/erases are especially intense on power. It's really hard to remove more than 25W air cooling in a slot.

- The air is preheated, or the slot doesn't get good airflow. It ends up being a server by server/slot by slot qualification process. (yes, slot by slot...) As trivial as this sounds, it's actually one of the biggest problems

Of course, everyone wants these fixed without affecting single operation latency, or increasing cost, etc. That's what we're here for though - right? Solve the impossible?

A quick summary is in order. It's not looking good. For a given solution, flash is getting less reliable, there is less bandwidth available at capacity because there are fewer die, we're driving latency way below the actual write latency of flash, and we're not satisfied with the best solutions we have for all the reasons above.

The implications

If you think these through enough, you start to consider one basic path. It also turns out we're not the only ones realizing this. Where will PCIe flash solutions evolve over the next 2, 3, 4 years? The basic goals are:

- Unified storage infrastructure for boot, flash, and HDDs

- Pooling of storage so that resources can be allocated/shared

- Low latency, high performance as if those resources were DAS attached, or PCIe card flash

- Bonus points for file store with a global name space

One easy answer would be - that's a flash SAN or NAS. But that's not the answer. Not many customers want a flash SAN or NAS - not for their new infrastructure - but more importantly, all the data is at the wrong end of the straw. The poor server is left sucking hard. Remember - this is flash, and people use flash for latency. Today these SAN-type flash devices have 4x-10x worse latency than PCIe cards. Ouch. You have to suck the data through a relatively low bandwidth interconnect, after passing through both the storage and network stacks. And there is interaction between the I/O threads of various servers and applications - you have to wait in line for that resource. It's true there is a lot of startup energy in this space. It seems to make sense if you're a startup, because SAN/NAS is what people use today, and there's lots of money spent in that market today. However, it's not what the market is asking for.

Another easy answer is NVMe SSDs. Right? Everyone wants them - right? Well, OEMs at least. Front bay PCIe SSDs (HDD form factor or NVMe - lots of names) that crowd out your disk drive bays. But they don't fix the problems. The extra mechanicals and form factor are more expensive, and just make replacing the cards every 5 years a few minutes faster. Wow. With NVMe SSDs, you can fit fewer HDDs - not good. They also provide uniformly bad cooling, and hard-limit power to 9W or 25W per device. And to protect the storage in these devices, you need to have enough of them that you can RAID or otherwise protect them. Once you have enough of those for protection, they give you awesome capacity, IOPS and bandwidth - too much, in fact - but that's not what applications need. They need low latency for the working set of data.

What do I think the PCIe replacement solutions in the near future will look like? You need to pool the flash across servers (to optimize bandwidth and resource usage, and allocate appropriate capacity). You need to protect against failures/errors and limit the span of failure, commit writes at very low latency (lower than native flash) and maintain low latency, bottleneck-free physical links to each server... To me that implies:

- Small enclosure per rack handling ~32 or more servers

- Enclosure manages temperature and cooling optimally for performance/endurance

- Remote configuration/management of the resources allocated to each server

- Ability to re-assign resources from one server to another in the event of server/VM blue-screen

- Low-latency/high-bandwidth physical cable or backplane from each server to the enclosure

- Replaceable inexpensive flash modules in case of failure

- Protection across all modules (erasure coding) to allow continuous operation at very high bandwidth

- NV memory to commit writes with extremely low latency

- Ultimately - integrated with the whole storage architecture at the rack, the same APIs, drivers, etc.

That means the performance looks exactly as if each server had multiple PCIe cards. But the capacity and bandwidth resources are shared, and systems can remain resilient. So ultimately, I think that PCIe cards will evolve to more external, rack level, pooled flash solutions, without sacrificing all their great attributes today. This is just my opinion, but as I say - other leaders in flash are going down this path too...
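On the "protection across all modules (erasure coding)" item in the list above, here's a minimal sketch of the idea using the simplest possible erasure code: a single XOR parity block, RAID-5 style. This is my illustration, not a description of any shipping design. A real enclosure would more likely use a stronger code that survives more than one module failure, and the function names here are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def add_parity(data_blocks):
    """Return the stripe: one data block per module plus one parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Recover the block on a failed module from all the surviving blocks."""
    survivors = [blk for i, blk in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


# Three data modules plus one parity module (contents are arbitrary).
stripe = add_parity([b"hot ", b"data", b"here"])
recovered = rebuild(stripe, lost_index=1)  # pretend module 1 has failed
print(recovered == stripe[1])
```

Because any one block is the XOR of all the others, reads can keep being served while a failed module is swapped out, which is what makes cheap, replaceable modules practical.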

What's your opinion?

Rob Ober drives LSI into new technologies, businesses and products as an LSI fellow in Corporate Strategy. Prior to joining LSI, he was a fellow in the Office of the CTO at AMD, responsible for mobile platforms, embedded platforms and wireless strategy. He was a founding board member of OLPC ($100 laptop.org) and OpenSPARC.

Republished with permission.