Why it matters: It's not exactly a well-kept secret that self-driving technologies have a few issues. Tesla's semi-autonomous "Autopilot" driving tool has made headlines on more than one occasion after allegedly making decisions that led to a driver's injury or death. Unfortunately for Tesla, researchers have now found a reliable way to trick Autopilot into acting against its drivers' best interests.

You may think they did this by hacking into the car's software or drastically altering the way Autopilot functions, but the reality is much simpler.

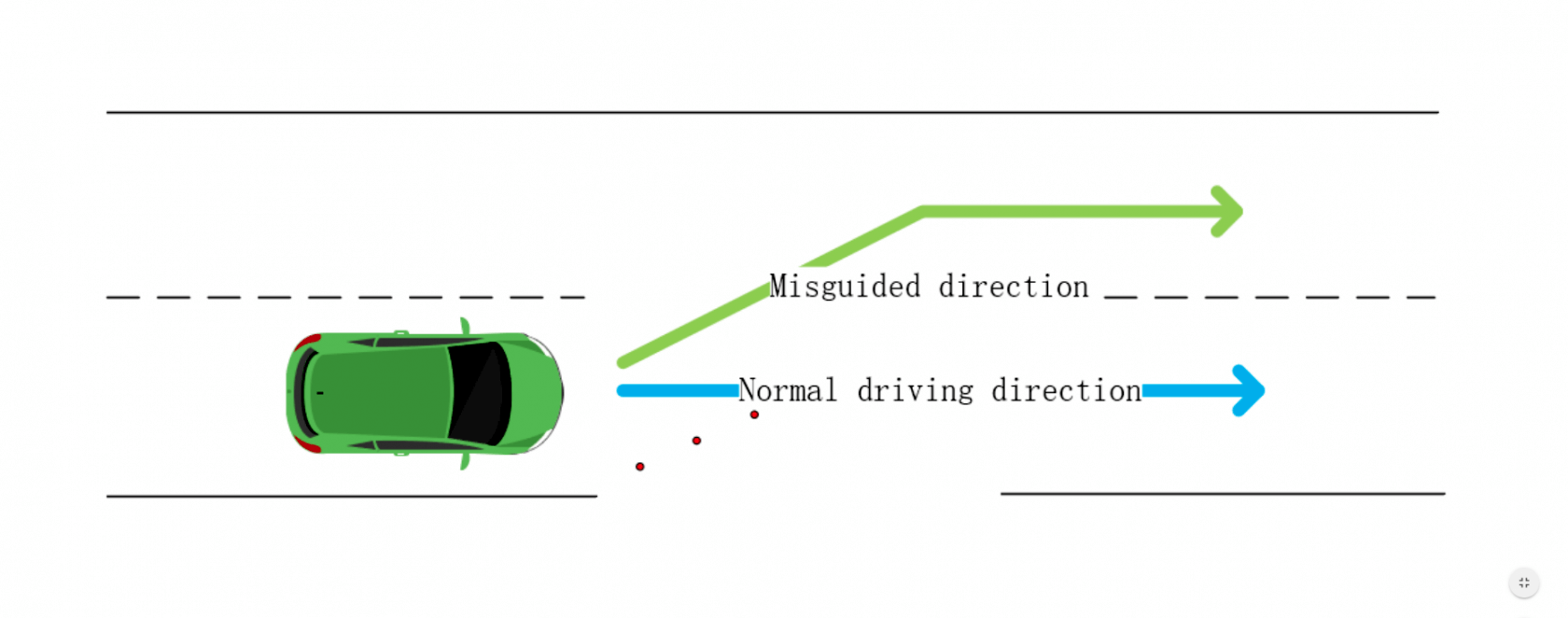

All the researchers had to do to fool Autopilot was place three small stickers on the road in a diagonal formation. Once the stickers were down, Autopilot appeared to read them as some sort of lane-merge or turn indicator, and the test vehicle steered into the lane that would normally carry oncoming traffic.

Researchers note that this exploit is "simple to deploy" and only requires materials that are "easy to obtain." In theory, just about anybody could put down these near-invisible stickers and watch the potential carnage.

For better or worse, in a statement emailed to Ars Technica, Tesla made it clear that it doesn't feel this vulnerability is anything to be worried about. The company says that since this exploit requires someone to "alter" the environment around an Autopilot-equipped vehicle, it is "not a realistic concern."

Tesla goes on to place the burden of responsibility on drivers, noting that they can and should "always be prepared" to take control of the steering wheel or brakes if Autopilot makes a bad call.