Async Compute & Closing Thoughts

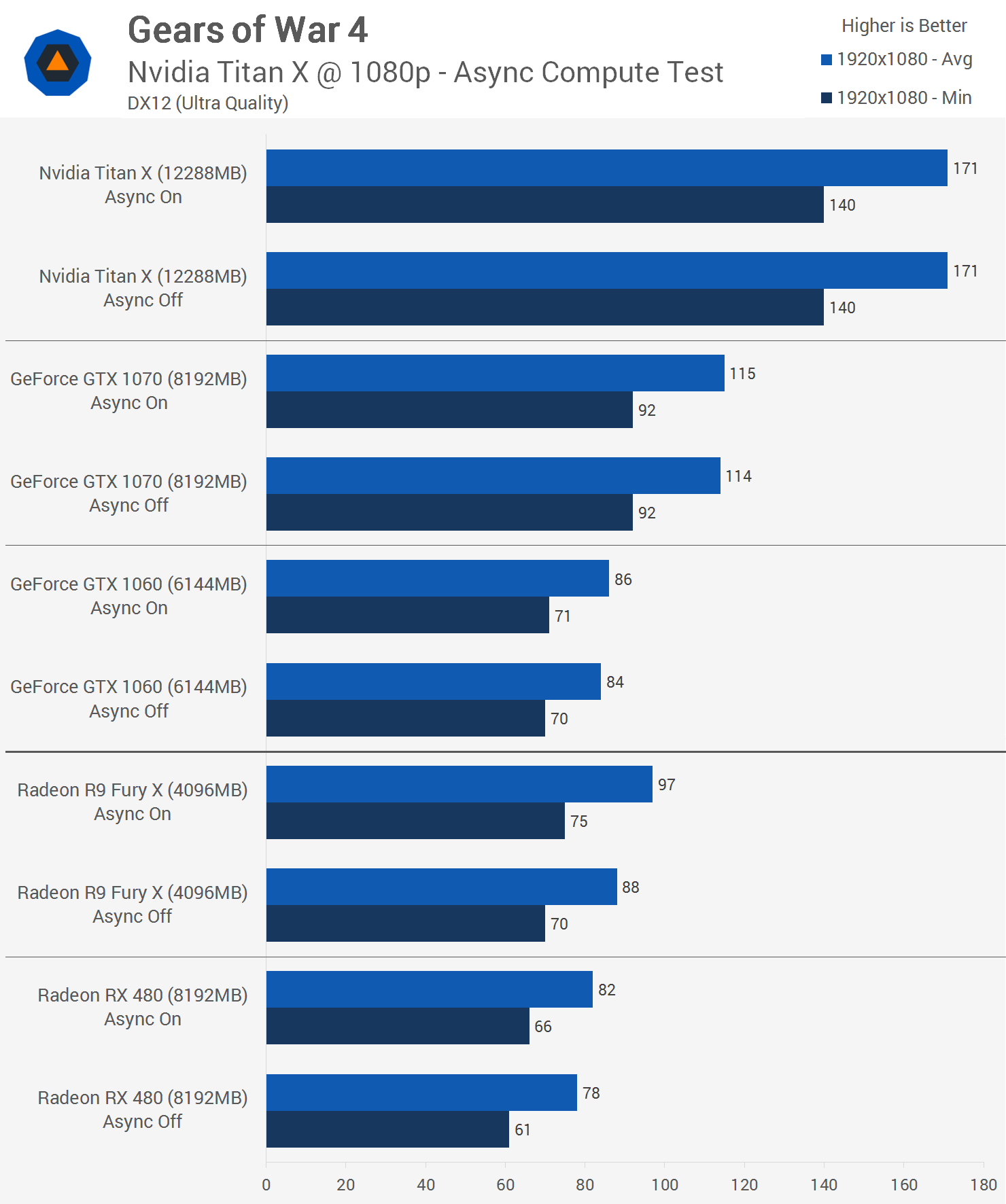

Before wrapping up testing, I wanted to see what kind of impact asynchronous compute has on performance in this title. The game reportedly leans heavily on this technology, but given Nvidia's involvement we are skeptical about exactly how it's being used.

As expected, async compute does nothing for the Pascal GPUs: the GTX 1060, GTX 1070 and Titan X all delivered the same performance with it on or off. I should note that on the Nvidia side only Pascal GPUs can run with async compute enabled, though as we have just seen, Maxwell and older GPUs aren't exactly missing out.

Moving to the red team, the RX 480 gains 5% with async compute enabled, taking the average frame rate from 78fps to 82fps. That's not a significant difference, but it's an improvement nonetheless. Interestingly, the Fury X sees a much more meaningful boost, gaining up to 10% more performance. With far more shader cores to keep busy (4096 versus the RX 480's 2304), it makes sense that the Fury X benefits more from being able to run graphics and compute work concurrently.

We're impressed with how Gears of War 4 runs on PC using both AMD and Nvidia hardware. Those gaming at 1080p shouldn't have much trouble maxing out the game, though as we noted earlier, maxed-out image quality can vary from one graphics card to another. Mirror's Edge Catalyst features a 'GPU memory restriction' option that lets gamers force certain quality settings at the risk of stuttering, but Gears of War 4 offers no such override. Instead, it appears to adjust quality settings automatically based on the resources available.

This is both a curse and a blessing depending on how you look at it. For gamers who aren't interested in fine-tuning graphical settings, this hands-off approach probably works best. However, anyone who wants to tweak the visuals surely won't welcome the game automatically downgrading its image quality.

Often the 3GB GTX 1060 pushed well over 50fps, yet its graphics were still noticeably downgraded compared to the 6GB model. I'm sure some gamers would happily drop to 40fps in this title if it meant a prettier picture. This downgrading of textures, lighting and shadows on certain graphics cards makes an apples-to-apples performance comparison quite difficult. Hopefully the ability to ignore memory limitations will be added in a patch soon.

When it comes to CPU performance in Gears of War 4, clock speed is king. The Core i7-6700K responded well to overclocking with the Nvidia Titan X handling the rendering, and the minimum frame rate rose massively with each 500MHz step. Gamers can play on a dual-core processor, but be aware that this hurts the minimum frame rate quite a bit. Minimum frame rates also varied surprisingly widely between the Core i5 and Core i7 processors.

Interestingly, those running an AMD Radeon or Nvidia Maxwell GPU shouldn't see much difference in performance between the Core i5 and Core i7 processors. In fact, even the AMD FX series looks quite competitive.

This all changes with a high-end Pascal GPU. For reasons we can't yet explain, the Core i7's minimum frame rate pulls well ahead. This requires further investigation, and it's something we will look into over the coming weeks. The effect is most extreme with the Titan X, which is why the initial results shocked us and led us to dig further with the Fury X, GTX 980 Ti and GTX 1070.

We may have more to report on this title's performance if we get to the bottom of that mystery, if support for multi-GPU setups is introduced, or if we gain the ability to disable the auto-adjusted image quality. For now, we're happy that Gears of War 4, the first sequel in the series to be developed by a studio outside Epic Games, scales well enough to be enjoyed at max quality at 1080p on most modern gaming GPUs, while still being able to cripple them when cranked up to 4K.