As the market leader and longtime dominant force in the CPU space, Intel's been able to get away with a lot, and this is partly because the competition has allowed them to. Some will rally behind AMD today, holding them up and saying how brilliant they are for increasing core counts and finally moving us forward after years of nothing but quad-cores on the mainstream.

What AMD's done with Ryzen is impressive, but they're also a big part of why the better part of the past decade has been very slow on the CPU front. Now that things are getting seriously competitive, we feel Intel needs to change a few things, things we've been asking them to improve for years.

Last week we were in Taiwan attending Computex, a very PC-centric trade show, and found ourselves discussing a few areas where Intel as well as AMD and Nvidia need to improve to become more consumer friendly. At the end of that discussion we realized it would make for a good column, so we'll do one for each company. We'll go over each point that was raised and please note that these won't be in any particular order, with some things being more important than others.

Personal computing is still Intel's number one revenue maker, followed by data center revenue which is closely tied to the development of the same kind of products. After missing the boat on mobile (showing how the company was resting on its laurels for years) today Intel is making longer-term bets on things like AI, non-volatile memory, IoT, FPGAs, and autonomous driving.

But once again, in this column we're looking at possible improvements from the consumers' perspective and specific to Intel's personal computing side of the business...

Drop the toothpaste

First order of business: the TIM. No, not that one. I'm sure many of you are aware of this, but Intel no longer solders their desktop CPUs. I've heard all the arguments for why Intel no longer solders their chips and honestly I don't buy them. The best case put forth is that solder can form micro cracks after a certain number of thermal cycles, over an undetermined period of time. Thermal paste, on the other hand, is claimed to last a lot longer, especially for small die CPUs.

What we know for a fact is that Intel's desktop CPUs ran much cooler back when they were soldered in 2011. That was the Sandy Bridge era for the mainstream desktop, while the high-end desktop parts were soldered up until the Broadwell-E era in 2016.

As an example, the Core i7-3770K running at 4.7 GHz using 1.35 V would peak at just over 90 degrees running an AIDA64 stress test using a large tower-style air cooler. Under the exact same conditions but with 1.4 V, so a little more voltage, the soldered Core i7-2600K runs at least 20 degrees cooler. We've seen time and time again with newer Intel CPUs that delidding drops load temperatures by at least 20 degrees.

I've also got quite a few Sandy Bridge CPUs that have been overclocked their whole lives and used on a regular basis over the past 7 years, and thermal performance, as far as I can tell, is the same as the day I first tested them back in 2011. So I call BS on the micro cracks being a serious concern.

I believe the real reason Intel doesn't solder their chips is because it's an expensive process, so this is a plain and simple cost-saving exercise. Intel's a big business and this is a classic big business decision.

Soldering is clearly a superior method for performance and this is why Intel still solders their Xeon server chips. I'd say it's easier to get away with shafting the general consumer and with no competition you can also get away with doing the same to enthusiasts. I get that pro overclockers are happy to delid and can even profit from the process by providing tools for other enthusiasts to do so, but for a majority of users it simply results in hotter processors that are more costly and difficult to cool down.

Metal shavings for heatsinks

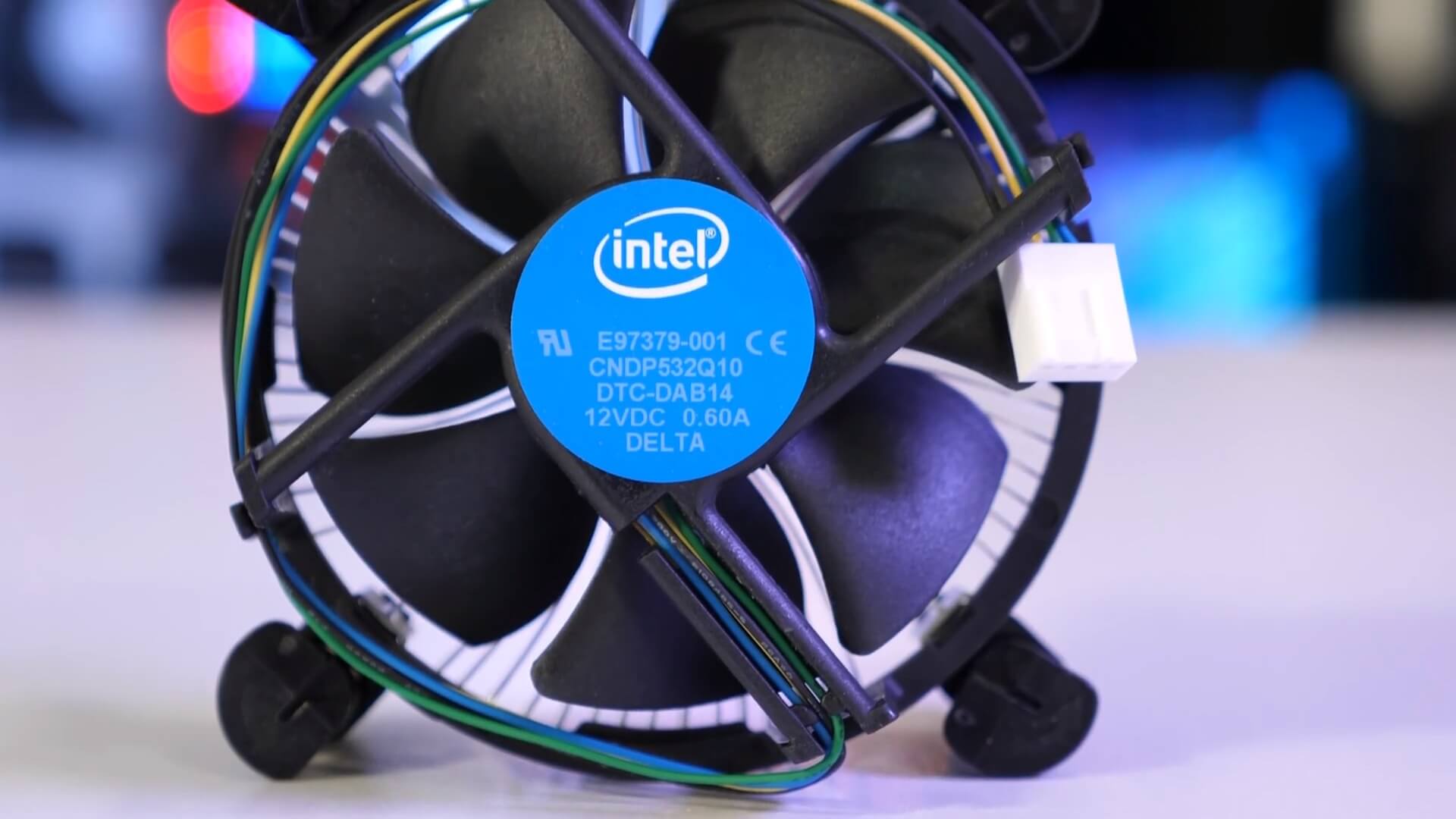

While on the subject of cooling, the box cooler could do with a serious upgrade. The 73-watt model supplied with the Core i7-8700 is a joke, and we don't want to see any more of that. Like the thermal paste situation, this is merely another cost saving exercise.

We've already seen how the Intel box cooler provided with the 8700 can't avoid extreme thermal throttling under load, even in a well ventilated case with an ambient air temperature of 21 degrees. So this one is pretty straightforward and I think everyone can agree, Intel needs to seriously up their box cooler game.

In summary, we want bigger coolers that not only eliminate thermal throttling but also reduce thermals to acceptable levels, and soldering would play a key role here as well. And, of course, coolers that don't sound like a jet about to take off would also be welcome.

Send reviewers retail CPUs

This one's pretty straightforward and as mentioned earlier these requests are not in any kind of order. What I want here is for Intel to start sending reviewers retail products in retail packages.

AMD sends retail versions of their CPUs to reviewers, and sure, they test them before shipping, but I don't have too much of an issue with that. Step one is at least getting retail products into the hands of those who are testing them for potential buyers.

Intel sends reviewers QS, or Qualification Sample, chips and although these are technically identical to an OEM or retail CPU, I'd still much rather show a full-blown retail chip on launch day with details on packaging and, perhaps more importantly, the retail cooler, should it come with one. For those of you wondering, QS chips come from an early production run and are generally sent out to OEMs and board partners to validate their hardware.

So anyone that got their hands on a Core i7-8700 or Core i5-8400 ahead of release from a board partner for example, would have needed to source the standard Intel box cooler if they wanted to test out of the box performance.

Getting an Intel box cooler isn't difficult but you probably had to assume Intel was sticking with the 7th gen box cooler for the 8th gen parts and for us at least that information wasn't made clear ahead of release.

Competitive pricing (where it applies)

We obviously want to pay as little as possible while still getting what we want and Intel wants to charge as much as they can while giving us as little as possible, and with limited competition prior to last year, that's been to Intel's benefit.

Recently though things have changed considerably, but while the competition continues to step up Intel hasn't stepped down on pricing. We're getting a little more than what we were a few years ago, but that should be the natural progression of things anyway.

Admittedly, pricing for the mainstream Coffee Lake desktop parts isn't too bad, but Intel's still robbing us in a few areas. Their high-end desktop parts are grossly overpriced in my opinion, and this is largely because Intel wants to avoid cannibalizing their server parts, where they have bigger margins.

Even though Skylake-X doesn't offer ECC memory support, Intel still doesn't want to risk hurting Xeon sales. The workstation equivalent of the Core i7-7980XE costs a further $550, for example.

But with AMD's recent Threadripper announcement, Intel will surely be forced to become more competitive and users won't be forced to pay a hideous premium if going Intel, or so we hope. Of course, you also have to stop overpaying for CPUs if you want Intel to stop overcharging.

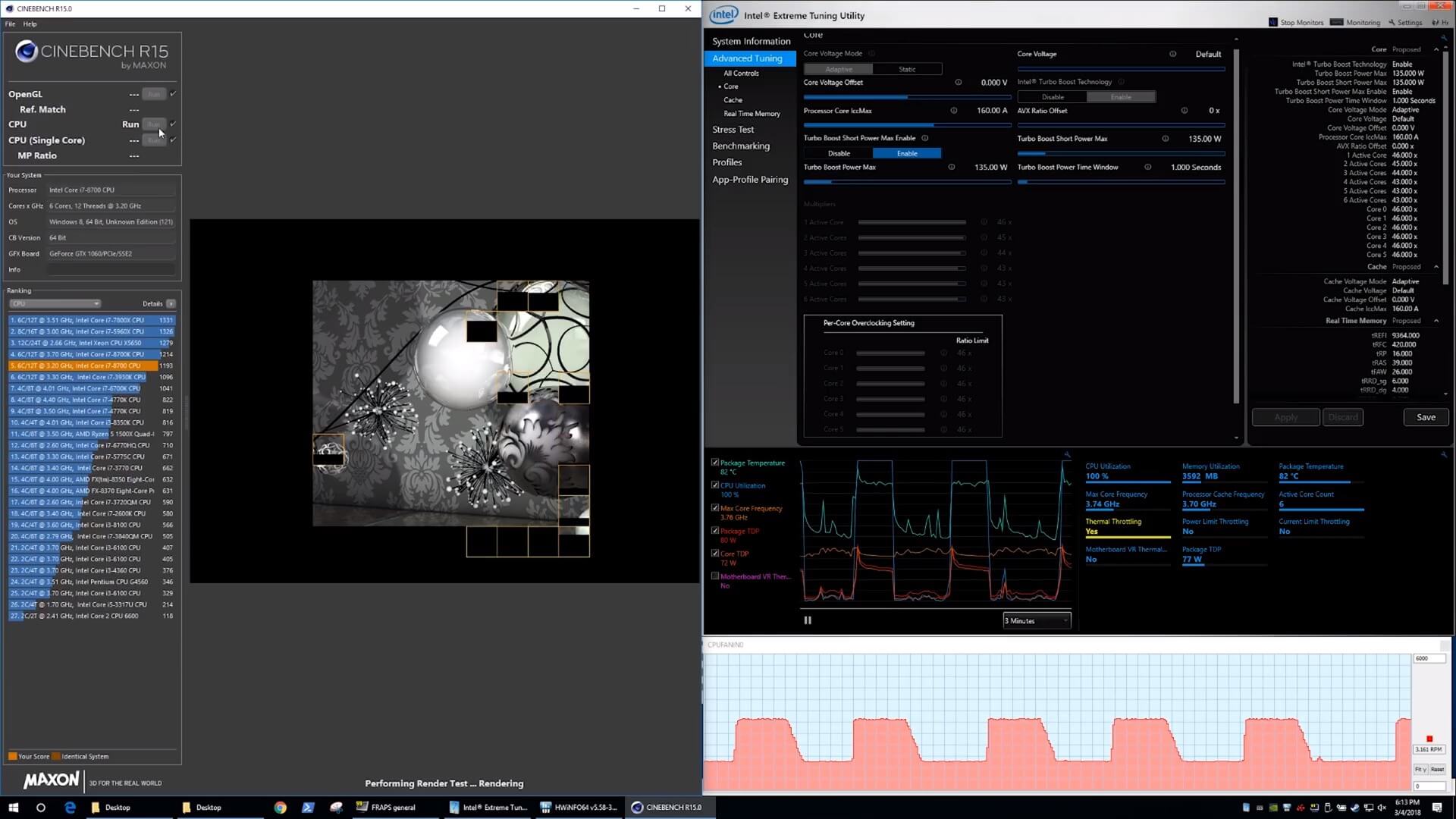

Stop abusing the TDP

The TDP (thermal design power) refers to the power dissipation when a processor is running at its base clock speeds, meaning the TDP tells us nothing about how a CPU runs at its boost clocks and what sort of cooling is required to run at higher than base frequencies.

In recent years, Intel has been pushing the boundaries of the TDP by continuing to shuffle base clocks lower and lower, while keeping boost clocks as high as possible. This allows them to release a CPU that falls under a certain TDP, while still bragging about high clock speeds.

Take the Core i7-8700 as an example. This is a CPU with a 65W TDP, which corresponds to its 3.2 GHz base clock. However, the chip has a 4.6 GHz single-core boost frequency. It can boost 1.4 GHz higher than its base, and in the process blow power consumption well above 65W. In fact, we monitored it hitting 128W in a short stress test. In typical workloads, where you want the chip running well above its base clocks and it usually is, the TDP rating is completely meaningless.
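To put those numbers side by side, here is a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above for the Core i7-8700; the function and variable names are ours and purely illustrative, and the 128W reading is a single short stress-test peak, not a sustained average.

```python
# Figures quoted in this column for the Core i7-8700.
BASE_CLOCK_GHZ = 3.2
BOOST_CLOCK_GHZ = 4.6    # single-core boost
RATED_TDP_W = 65
MEASURED_PEAK_W = 128    # peak seen in a short stress test


def boost_headroom_ghz(base_ghz: float, boost_ghz: float) -> float:
    """Gap between the advertised boost clock and the base clock."""
    return round(boost_ghz - base_ghz, 2)


def power_over_tdp(measured_w: float, tdp_w: float) -> float:
    """Measured power draw expressed as a multiple of the rated TDP."""
    return measured_w / tdp_w


headroom = boost_headroom_ghz(BASE_CLOCK_GHZ, BOOST_CLOCK_GHZ)
ratio = power_over_tdp(MEASURED_PEAK_W, RATED_TDP_W)

print(f"Boost headroom: {headroom:.1f} GHz")
print(f"Peak draw: {ratio:.2f}x the rated TDP")
```

In other words, the peak we measured is roughly double what the TDP figure on the box suggests.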

The problem with this is that some OEMs and even Intel package CPUs like the Core i7-8700 with coolers that are barely capable of dissipating the power specified by the TDP. Some pre-builts even lock the CPU's power draw to the TDP to prevent their terrible cooler from being overwhelmed. Then when a user wants to hit the advertised boost clocks, they are unable to do so because the system and cooler have only been designed around the TDP.

In a modern landscape where CPUs boost more than a GHz higher than their base clock and are capable of sustaining that frequency for long periods, Intel should be using a TDP or similar metric that is an actual reflection of the typical power consumed during high-performance workloads. Buyers would then have a much better idea of the cooler they need, and Intel would be forced to upgrade their crappy box cooler to something capable of running the CPU at its full boost clocks.

We'd end up with better OEM systems, everyone would be better informed about power consumption and we'd all live happily ever after... okay, maybe I got a bit carried away with how great an accurate TDP rating would make everything, but still it would be nice.

Peasants have overclocking rights

The Core i3-8350K is a great idea and all, but at $180 there is more wrong here than just the CPU price. I talked about box coolers earlier and how crap they are. Well, in the case of the 8350K and other unlocked K-SKU models, there's no cooler at all. Making matters even worse, you'll require a Z-series chipset to enable overclocking, in this case a Z370 motherboard.

Granted Z370 boards aren't crazy in terms of pricing as it's possible to snag one for a little over $100, though for that price you are getting a very basic motherboard. What would make far more sense is a $65 B360 board that supports CPU overclocking, and hey, why not throw in some memory overclocking support while you're at it.
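As a rough illustration of what that would mean for a budget build, here is a small Python sketch using the prices mentioned above. It rests on a few assumptions: the Z370 price is treated as a flat $100 floor (the boards actually cost "a little over" that), the overclocking-capable B360 board is hypothetical since Intel doesn't allow it today, and the aftermarket cooler is left out because we haven't quoted a price for one.

```python
CPU_PRICE_USD = 180          # Core i3-8350K, as quoted above
Z370_BOARD_FLOOR_USD = 100   # assumption: floor for "a little over $100"
B360_BOARD_USD = 65          # price cited for a B360 board


def platform_cost(cpu_usd: int, board_usd: int, cooler_usd: int = 0) -> int:
    """Total for CPU plus motherboard, with an optional cooler price."""
    return cpu_usd + board_usd + cooler_usd


# What you pay today to overclock an 8350K (cooler not included).
z370_total = platform_cost(CPU_PRICE_USD, Z370_BOARD_FLOOR_USD)

# Hypothetical: the same CPU on a B360 board that allowed overclocking.
b360_total = platform_cost(CPU_PRICE_USD, B360_BOARD_USD)

savings = z370_total - b360_total
print(f"Z370 build: ${z370_total}, B360 build: ${b360_total}")
print(f"Minimum saving: ${savings}")
```

Since the Z370 figure is a floor, the real-world gap would be at least this large, and that's before adding the cooler every K-series buyer has to purchase separately.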

Personally I'm over the locked chipset nonsense. Give enthusiasts on a budget the chance to get the most out of their CPU. Intel's no longer in a position to get away with this so you guys need to let them know about it. We realize that AMD is the underdog and as such they have to be extra competitive, but the fact remains that you can overclock any Ryzen CPU on a B350 board which is awesome. Intel should follow suit.

Overclocking for all: the Ryzen model

While on the topic of overclocking we don't just want to see support extended to more affordable chipsets, but also more affordable CPUs, like the good old days. Apart from being unlocked and more expensive, there really isn't a single thing special about the K models: they still use the same crappy TIM (thermal interface material) discussed earlier and unlike the locked models, don't come with a cooler at all.

So as a customer I want to see Intel drop the K models and unlock all parts. That would make Intel CPUs truly exciting again and fun for all enthusiasts. Perhaps though I'm not looking at this properly, but I honestly feel it really would be a win-win situation for Intel.

I can't see it cannibalizing other CPU lines that much as we've found the average gamer doesn't like to overclock anyway. So it's our opinion that this approach would certainly get more budget conscious enthusiasts talking about Intel CPUs, something they will no doubt start to see less and less of over the next few years if things continue the way they are.

I like my motherboard, don't make us break up prematurely

This is a big one: compatibility. The current and previous few Core generations all use the same physically identical LGA1151 socket, so why can't you use an 8th gen Core processor on a 100 or 200-series motherboard? And why can't you use a 6th or 7th gen Core processor on a 300-series motherboard? No reason, no reason at all. Intel really needs to stop shafting us when it comes to compatibility and only limit support when absolutely necessary.

Last year Intel lied about the reasons why compatibility was dumped, claiming that the two additional cores of the new Core i5 and i7 models would require more power. While it's technically true that these new processors use more power, there isn't a single Z170 or Z270 board they wouldn't work on.

Intel noted that Z370 motherboards have improved memory routing to support DDR4-2666, a slight increase over Kaby Lake's DDR4-2400. Uh huh, because Z170 and Z270 boards haven't already been proven to handle DDR4-4000 without an issue, so 2666 is really going to trip them up.

You might think I'm being a bit harsh, and so far I have called Intel out for lying without presenting any evidence, so let's get to that. Back in October 2017, Andrew Wu, the Asus ROG product manager, went on the record in a bit-tech interview and clearly said there is no reason why Coffee Lake CPUs can't work on 100 and 200-series motherboards. He said it was Intel's decision to remove compatibility. The power increase is small and makes no real difference, and the takeaway was that backwards compatibility isn't a problem, but Intel would need to allow it.

It's plausible that overclocking headroom is greater on the more optimized Z370 boards, but there is simply no reason why the 8th gen CPUs can't work on older motherboards. More recently modders managed to get Coffee Lake CPUs working on 100 and 200-series boards, too.

I also haven't heard a single reason why 6th and 7th gen CPUs couldn't work on 300-series boards, if Intel had chosen to open up support. As an example, if your 100 or 200-series motherboard died a year from now, chances are you'd be able to get a new 300-series board cheaper than a second-hand board using an older chipset.

Some final words

This is merely a wish list and we don't expect Intel to address all of these concerns, but it would be pretty amazing to see a few of them tackled over the next year. With continuing pressure from AMD we'd be surprised if Intel didn't get serious about a few of these points, but so far they've been fairly stubborn to the idea of change.

We'll be following up next week tackling the question of what AMD needs to fix, and then Nvidia two weeks from now. Did we miss anything important? Let us know in the comments section, along with any suggestions you have for the upcoming AMD and Nvidia columns.

TechSpot Series: Needs to Fix

After attending Computex 2018, the very PC-centric trade show, we found ourselves discussing internally a few areas where Intel, AMD and Nvidia could improve to become more consumer friendly. At the end of that discussion we realized this would make for a good column, so we're doing one for each company.

- Part 1: Things Intel Needs to Fix

- Part 2: Things AMD Needs to Fix

- Part 3: Things Nvidia Needs to Fix