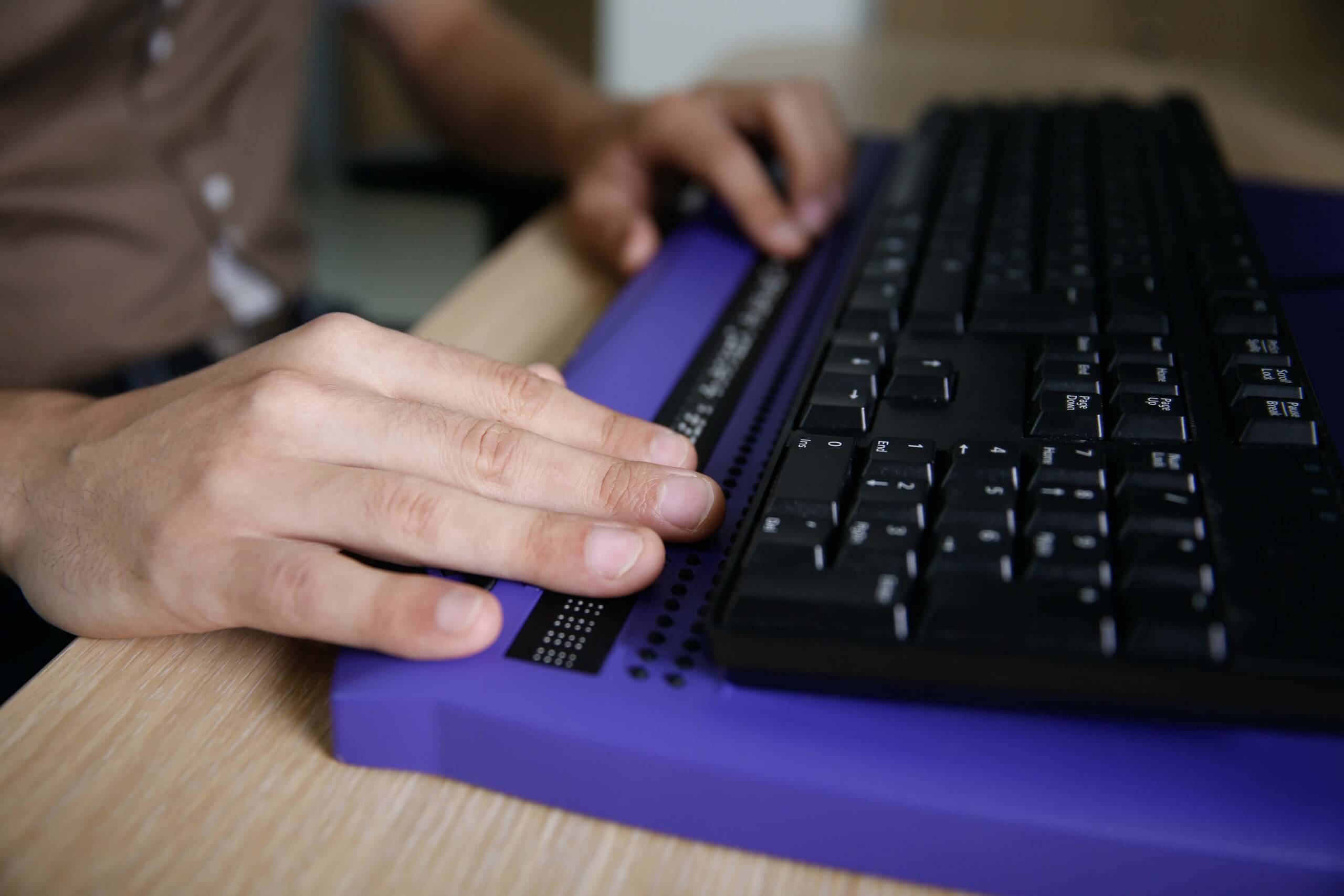

In context: As a primarily visual medium, the internet can be difficult to use for people with eyesight problems. Unless web designers provide alt text for images, much of a website's content is lost on visually impaired readers, since screen readers and Braille displays have no other way to interpret a picture.

Google is looking to improve the web-browsing experience for people with visual impairments by adding a feature to its Chrome browser that uses machine learning to recognize and describe images. The descriptions are generated automatically using the same technology that drives Google Lens.

Laura Allen, Google's Senior Program Manager for Accessibility, has vision problems herself. She says the feature will relieve many of the headaches of navigating a website with a screen reader.

"The unfortunate state right now is that there are still millions and millions of unlabeled images across the web. When you're navigating with a screen reader or a Braille display, when you get to one of those images, you'll actually just basically hear 'image' or 'unlabeled graphic,' or my favorite, a super long string of numbers which is the file name, which is just totally irrelevant."

The generated descriptions begin with the phrase "appears to be." For example, Chrome might say, "Appears to be a motorized scooter." This cues the listener that the description was generated by AI and may not be completely accurate.

The feature is available only to people using screen readers or Braille displays. To enable it, users go to Chrome Settings > Advanced > Accessibility and turn on "Get Image Descriptions from Google." Users can also enable the setting for a single webpage by right-clicking to open the context menu, where the same option appears.