Today we're investigating claims that the new GeForce RTX 2060 is not a good buy because it features only 6GB of VRAM. The RTX 2060 offers performance similar to the GTX 1070 Ti, but that card packs an 8GB memory buffer, as does its non-Ti counterpart.

In other words, the RTX 2060 is the fastest graphics card ever to come with a 6GB memory buffer. The previous holder of that title was the GeForce GTX 980 Ti, and so far the Maxwell part has aged very well. So, at least up to this point, 6GB of VRAM hasn't been an issue.

The RTX 2060 is only ~15% faster than the 980 Ti on average, so while a lot of people are freaking out, we don't believe the 6GB buffer is a short-term issue. Still, we wanted to run a few more tests and give a fuller perspective on the matter.

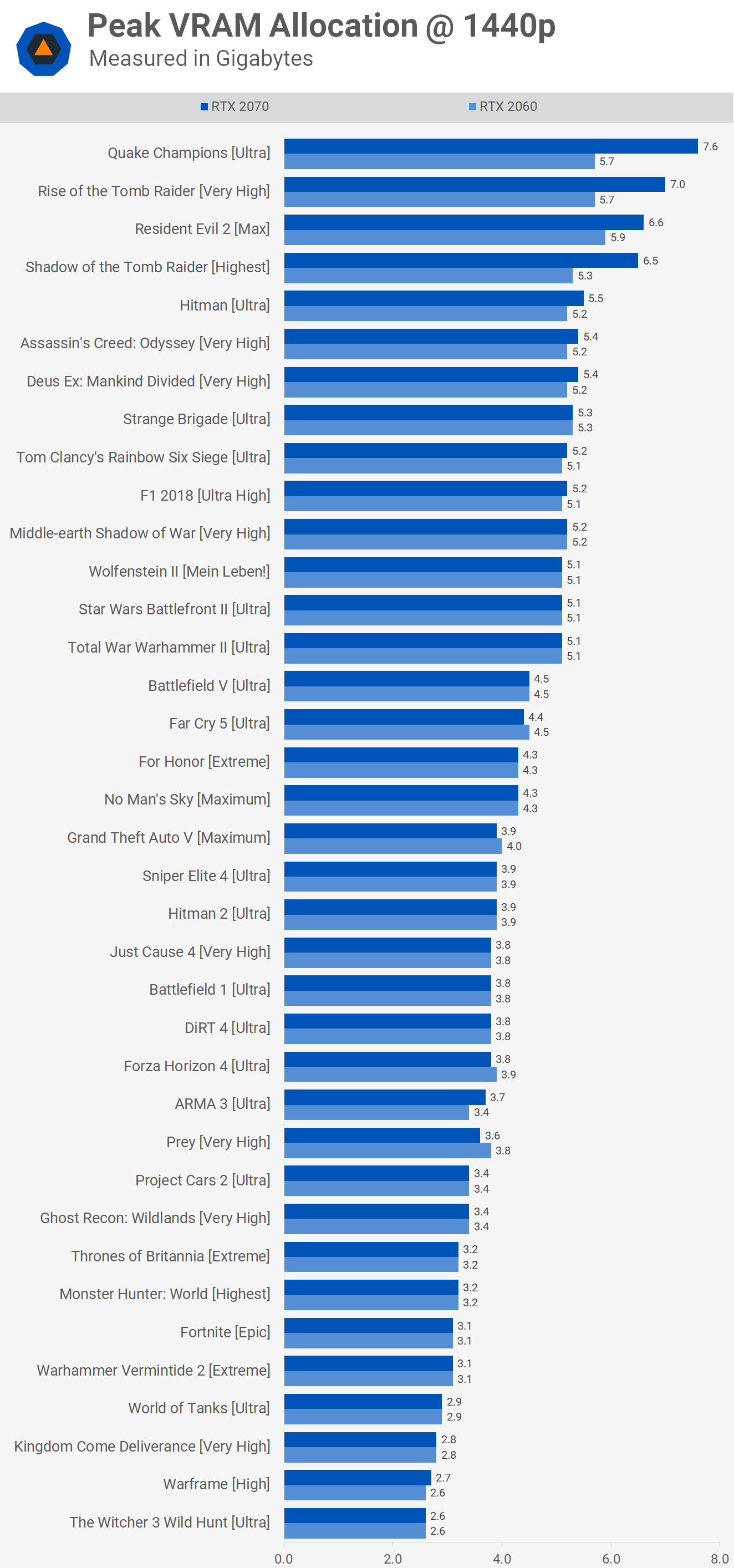

The first thing on our to-do list was to record peak VRAM allocation at 1440p in all the games we currently use to test GPU performance. That's 37 games in total, and let us tell you, watching VRAM allocation while playing these games for over a day wasn't the most enjoyable experience.

Before we look at memory usage, it's important to note that we're measuring allocation, not necessarily usage. Allocation reserves memory for data the GPU requires, some of which it uses to perform various calculations. Games often allocate more VRAM than they actually need, as reserving memory ahead of time can improve performance.
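We don't specify which tool logged peak allocation, so the following is only a minimal sketch, assuming an NVIDIA GPU with the `nvidia-smi` utility on the PATH. It polls the `memory.used` query (reported in MiB) and keeps the highest value seen. The function names are hypothetical. Note that `memory.used` counts everything resident on the GPU, including other processes such as the desktop compositor, so like the figures in this article it reflects allocation rather than what the game actively touches.

```python
import subprocess
import time

# Assumption: nvidia-smi is installed and on the PATH (NVIDIA GPUs only).
# memory.used is reported in MiB, one line per GPU, with no header or units.
QUERY = ["nvidia-smi", "--query-gpu=memory.used", "--format=csv,noheader,nounits"]


def parse_memory_used(output: str) -> list[int]:
    """Turn nvidia-smi CSV output into a list of MiB values, one per GPU."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def sample_memory_used() -> list[int]:
    """Run nvidia-smi once and return memory.used (MiB) for every GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_used(result.stdout)


def track_peak(samples: list[list[int]], gpu_index: int = 0) -> int:
    """Return the highest memory.used value recorded for one GPU."""
    return max(sample[gpu_index] for sample in samples)


def poll_peak_mib(duration_s: float, interval_s: float = 1.0, gpu_index: int = 0) -> int:
    """Sample for roughly duration_s seconds and return peak allocation in MiB."""
    samples = []
    deadline = time.monotonic() + duration_s
    while True:
        samples.append(sample_memory_used())  # always take at least one sample
        if time.monotonic() >= deadline:
            break
        time.sleep(interval_s)
    return track_peak(samples, gpu_index)
```

For example, calling `poll_peak_mib(600)` while playing would return the highest allocation seen over roughly ten minutes. Parsing and peak tracking are kept separate from the `nvidia-smi` call so they can be checked without a GPU.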

A recent example of this was our Resident Evil 2 benchmark. We often saw VRAM allocation go as high as 8.5GB when testing the RTX 2080 Ti at 4K, but there was no performance penalty when using a graphics card with only 6GB of VRAM. There was, however, a big performance penalty for cards with less than 6GB.

That is to say, while the game will allocate over 8GB of VRAM at 4K when it's available, it appears to actually use somewhere between 4GB and 6GB, probably closer to the upper end of that range.

Games VRAM Allocation at 1440p

Here is a look at 37 PC games and how memory allocation differs between the RTX 2060 and the RTX 2070, which comes with a larger 8GB memory buffer, allowing more memory to be allocated if need be.

Starting from the top, we find our most memory-intensive titles. Quake Champions is surprisingly hungry, though the game simply appears to allocate all available memory. Based on what we've seen when testing, I estimate actual usage is below 4GB.

Rise of the Tomb Raider is another hungry title, but usage for this one is probably closer to the allocation figure. We've already discussed Resident Evil 2, and I suspect Shadow of the Tomb Raider behaves much like Rise of the Tomb Raider. Beyond those, allocation drops below 6GB: 10 titles allocate between 5GB and 5.5GB, and everything else allocates 4GB or less.

It's worth noting that almost all of these titles were tested using the maximum graphics quality preset with some form of anti-aliasing enabled. That being the case, in most titles it's easy to tweak those settings to reduce memory usage without noticeably impacting visual quality. We'll explore that in a moment.

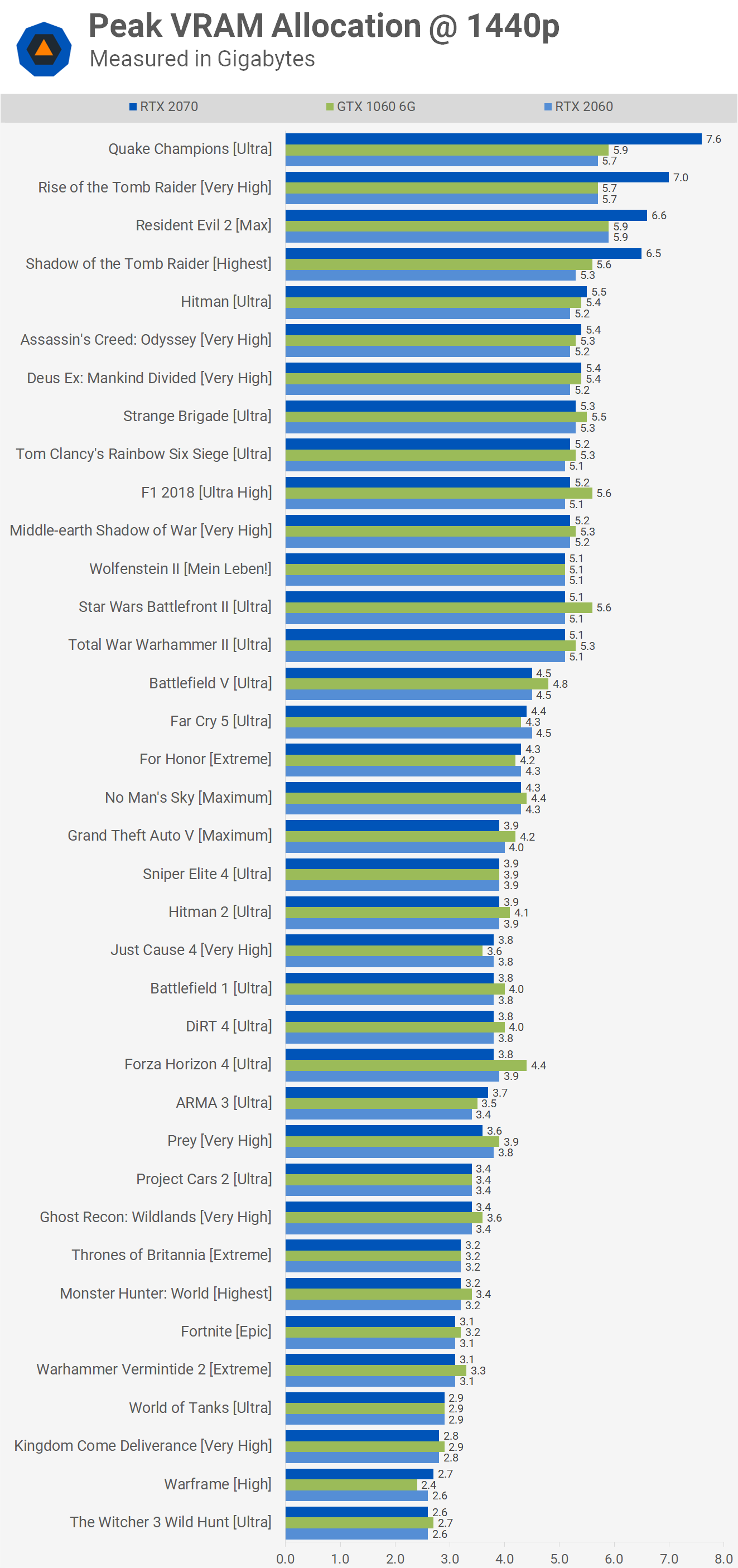

Moving on, we've seen people argue that Turing's improved memory compression makes all the difference compared to Pascal. The argument goes that the RTX 2060's 6GB buffer is effectively larger than the GTX 1060's 6GB buffer. We don't consider that a valid argument for a few reasons. First, the RTX 2060 is over 50% faster and will therefore be expected to handle drastically higher visual quality settings and resolutions. Second, we doubt any compression improvements are substantial enough to make up for that.

Still, we were curious to see how this affects memory allocation, so we loaded up all 37 games once again, this time using the GTX 1060 6GB, and recorded peak allocation.

Allocation was typically higher with the GTX 1060. In a few titles it was half a gigabyte higher, but for the most part the difference was 100 - 200MB. Interestingly, in Warframe allocation was lower with the GTX 1060. So while Turing's memory management is indeed better, the savings are too small to explain large performance differences.

How About Performance?

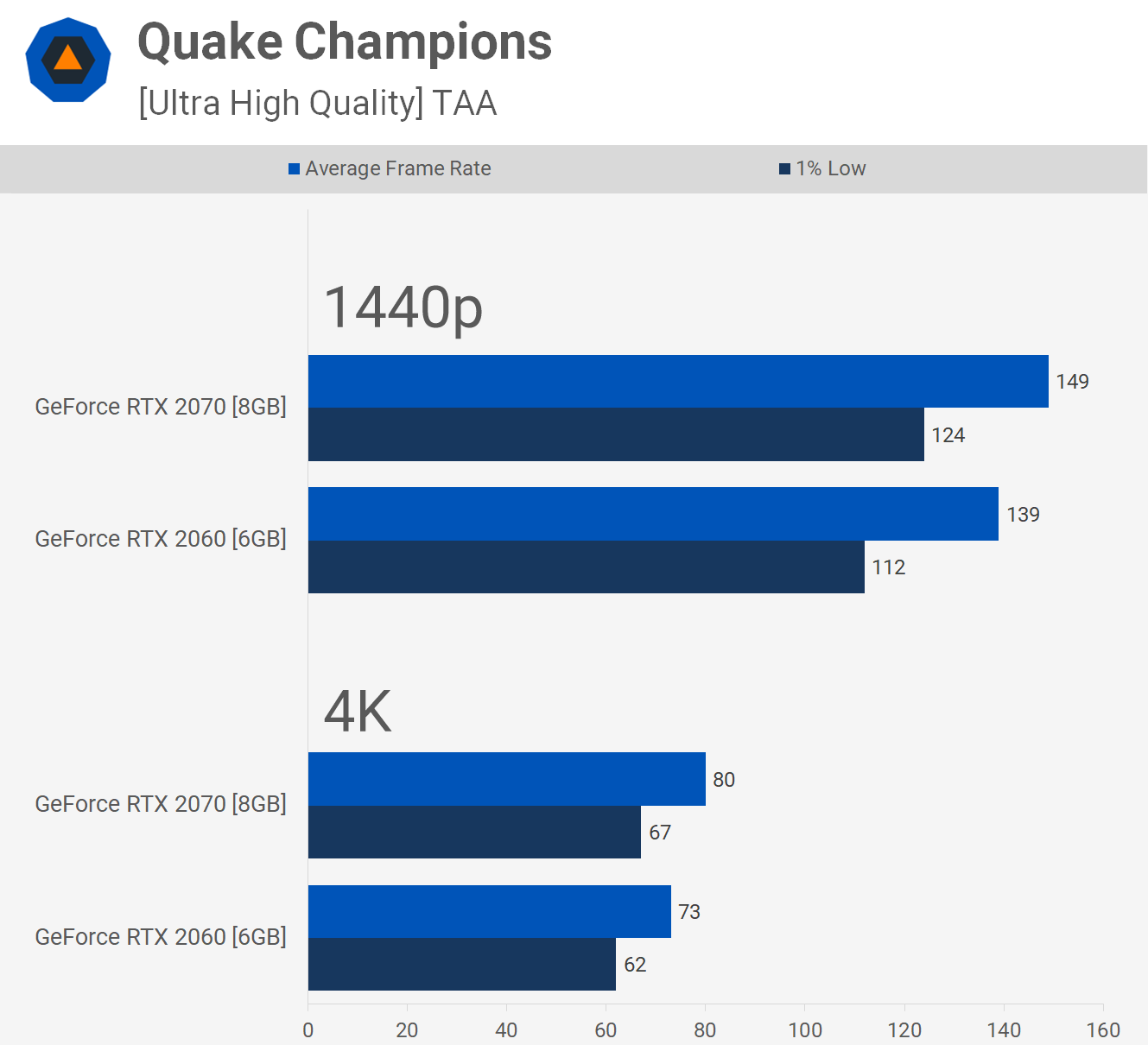

For our performance benchmarks, we compared the RTX 2060 and RTX 2070 in four of the most memory-hungry titles we found: Quake Champions, Resident Evil 2 and both Tomb Raider games. We wanted to see how they scale from 1440p to 4K. If the RTX 2060 were suffering from a lack of VRAM, the margin should continue to grow at 4K, and frame time performance in particular should take a nosedive.

First up is Quake Champions. At 1440p, the RTX 2060 is just 7% slower than the RTX 2070 for the average frame rate and 5% slower for the 0.1% low. At 4K, the RTX 2060 is 9% slower for the average frame rate and 8% slower for the 0.1% low. So while the margins grew at 4K, they're nowhere near big enough to indicate a VRAM issue for the RTX 2060. The growth is just as likely down to the higher core count GPU being better utilized and less bottlenecked at the higher resolution.

In Rise of the Tomb Raider, the RTX 2060 trails by just 4% at 1440p. This margin grows to ~13% at 4K. You could easily blame this on the RTX 2060's 6GB memory buffer, but I would advise against that. The 2060 packs 17% fewer CUDA cores and therefore should be anywhere from 10-17% slower than the 2070. The unusually small 4% gap at 1440p is the real anomaly: it probably means we're running into some kind of system bottleneck at that resolution that's limiting the RTX 2070.
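For reference, the core-count gap works out as follows, taking the reference specifications of 1,920 CUDA cores for the RTX 2060 ($N_{2060}$) and 2,304 for the RTX 2070 ($N_{2070}$), and assuming, as a simplification, that performance scales linearly with core count at similar clocks:

$$
\frac{N_{2070} - N_{2060}}{N_{2070}} = \frac{2304 - 1920}{2304} = \frac{384}{2304} = \frac{1}{6} \approx 16.7\%
$$

By that yardstick the ~13% deficit at 4K sits inside the expected range, while the 4% gap at 1440p is well short of it.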

In any case, the RTX 2060 was just as smooth as the 2070 at both resolutions. Of course, frame rates weren't ideal for either GPU at 4K, but they did offer a similar experience.

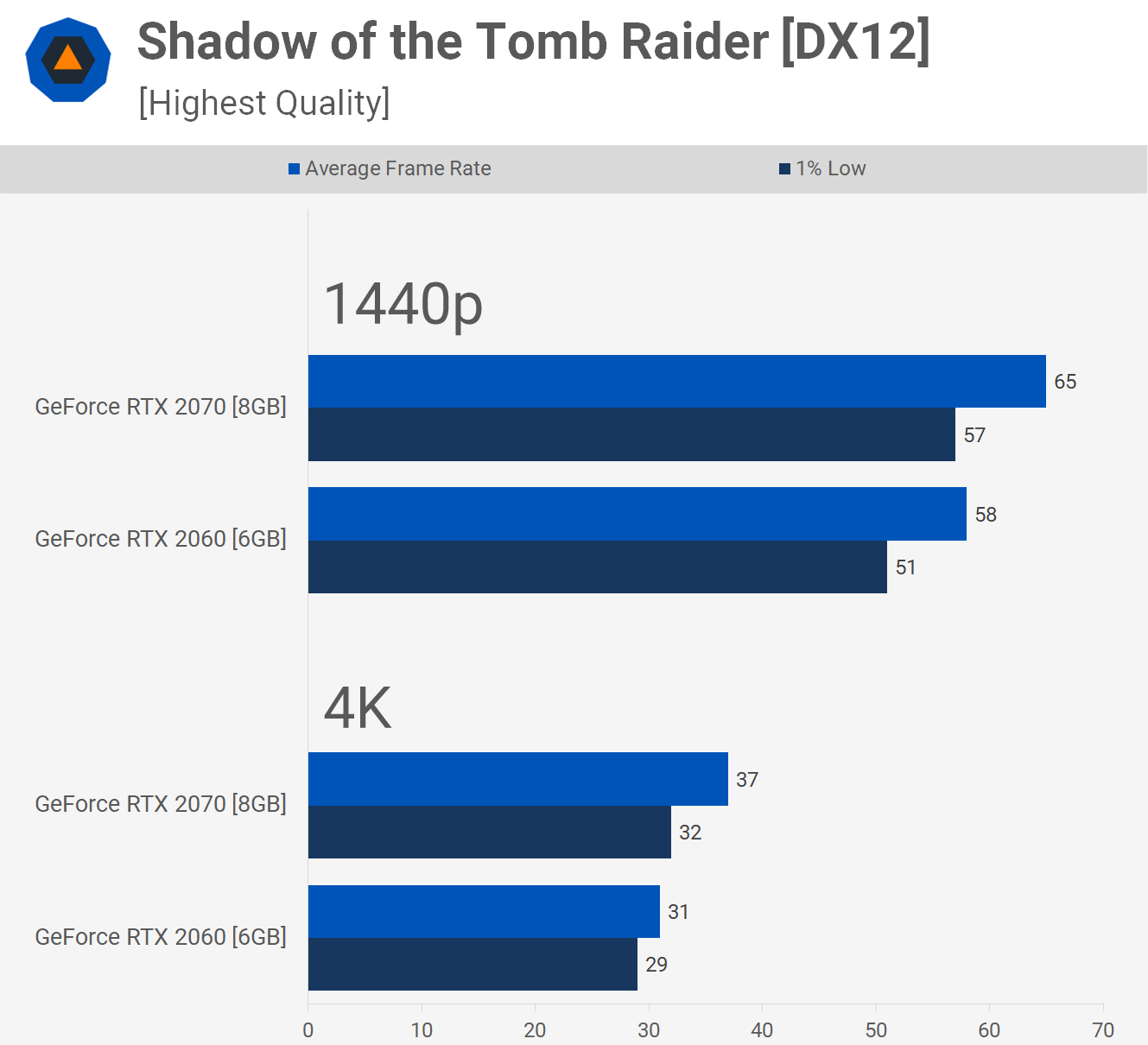

Shadow of the Tomb Raider is a fantastic-looking title. At 1440p the RTX 2060 was 11% slower for both the average frame rate and the 0.1% low. Moving to 4K, the average frame rate margin increased to 16%, but the 0.1% low margin shrank to just 7%.

Again, no memory capacity issues here. Frame time performance for the RTX 2060 is excellent, and given that it has 17% fewer CUDA cores, a 16% deficit in a heavily GPU-bound scenario makes sense.

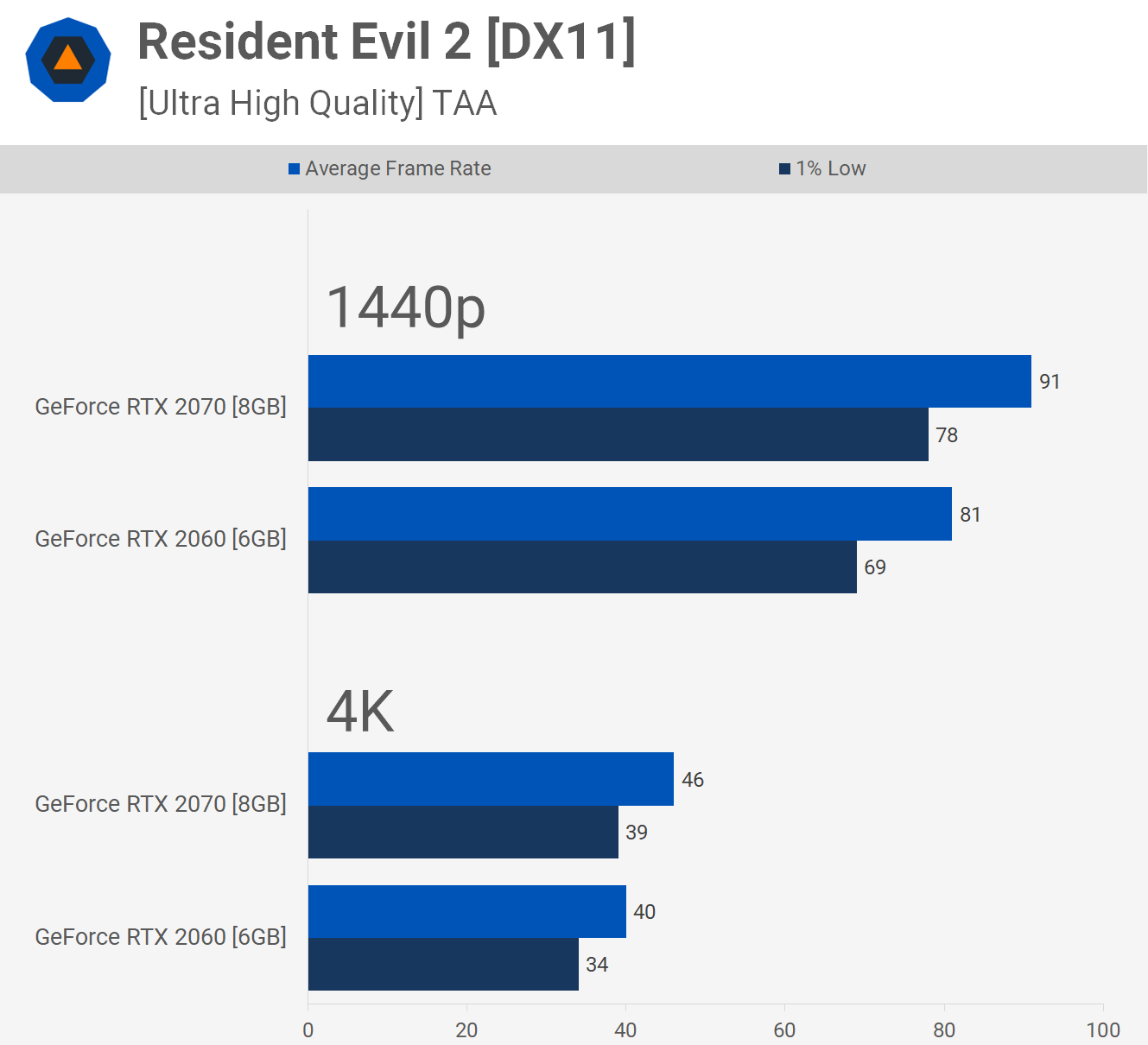

If you saw our recent Resident Evil 2 benchmark featuring 57 GPUs, you've seen these results already. At 1440p the RTX 2060 was 11% slower for the average frame rate and 10% slower for the 0.1% low. Those margins change only slightly at 4K, with no evidence of the card running out of VRAM.
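To make the reasoning in this section concrete, here is a rough check in Python. It assumes, as the text does, that performance at similar clocks scales with CUDA core count (1,920 on the RTX 2060 versus 2,304 on the RTX 2070), and it ignores differences in clock speed and memory bandwidth, so treat it as a crude heuristic rather than a test for VRAM limits. A 4K deficit well beyond the core-count gap would hint at another limiter, such as VRAM. Resident Evil 2 is left out because only its 1440p margin is quoted as a number; the variable and function names are illustrative.

```python
# Reference CUDA core counts for the two cards.
RTX_2060_CORES = 1920
RTX_2070_CORES = 2304

# Deficit if performance scaled purely with core count (about 16.7%).
core_deficit_pct = 100 * (1 - RTX_2060_CORES / RTX_2070_CORES)

# 4K average-frame-rate deficits of the RTX 2060 vs the RTX 2070, as quoted above.
deficits_4k_pct = {
    "Quake Champions": 9,
    "Rise of the Tomb Raider": 13,
    "Shadow of the Tomb Raider": 16,
}


def exceeds_core_deficit(deficit_pct: float, threshold_pct: float = core_deficit_pct) -> bool:
    """True if the RTX 2060 trails by more than its core deficit alone would explain."""
    return deficit_pct > threshold_pct


flagged = [game for game, d in deficits_4k_pct.items() if exceeds_core_deficit(d)]
print(f"core-count deficit: {core_deficit_pct:.1f}%")
print("titles exceeding it:", flagged or "none")
```

Running it prints a core-count deficit of 16.7% and flags no titles, which is consistent with the conclusion that none of these 4K results points to the 6GB buffer.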

Bottom Line

It's clear that right now, even for 4K gaming, 6GB of VRAM really is enough. Of course, the RTX 2060 isn't powerful enough to game at 4K, at least using maximum quality settings, but that's not really the point. I can hear the roars already: this isn't about gaming today, it's about gaming tomorrow. Like a much later tomorrow...

The argument goes something like this: yeah, the RTX 2060 is okay now, but it just won't have enough VRAM for future games. And while we don't have a functioning crystal ball, we know this will be both true and not so true. At some point games are absolutely going to require more than 6GB of VRAM for the best visuals.

The question is, by the time that happens, will the RTX 2060 be powerful enough to provide playable performance using those settings? It's almost certainly not going to be an issue this year, and I doubt it will be a real problem next year. In three years you might have to start managing some quality settings; in four years, probably; and in five years' time, I'd say certainly.

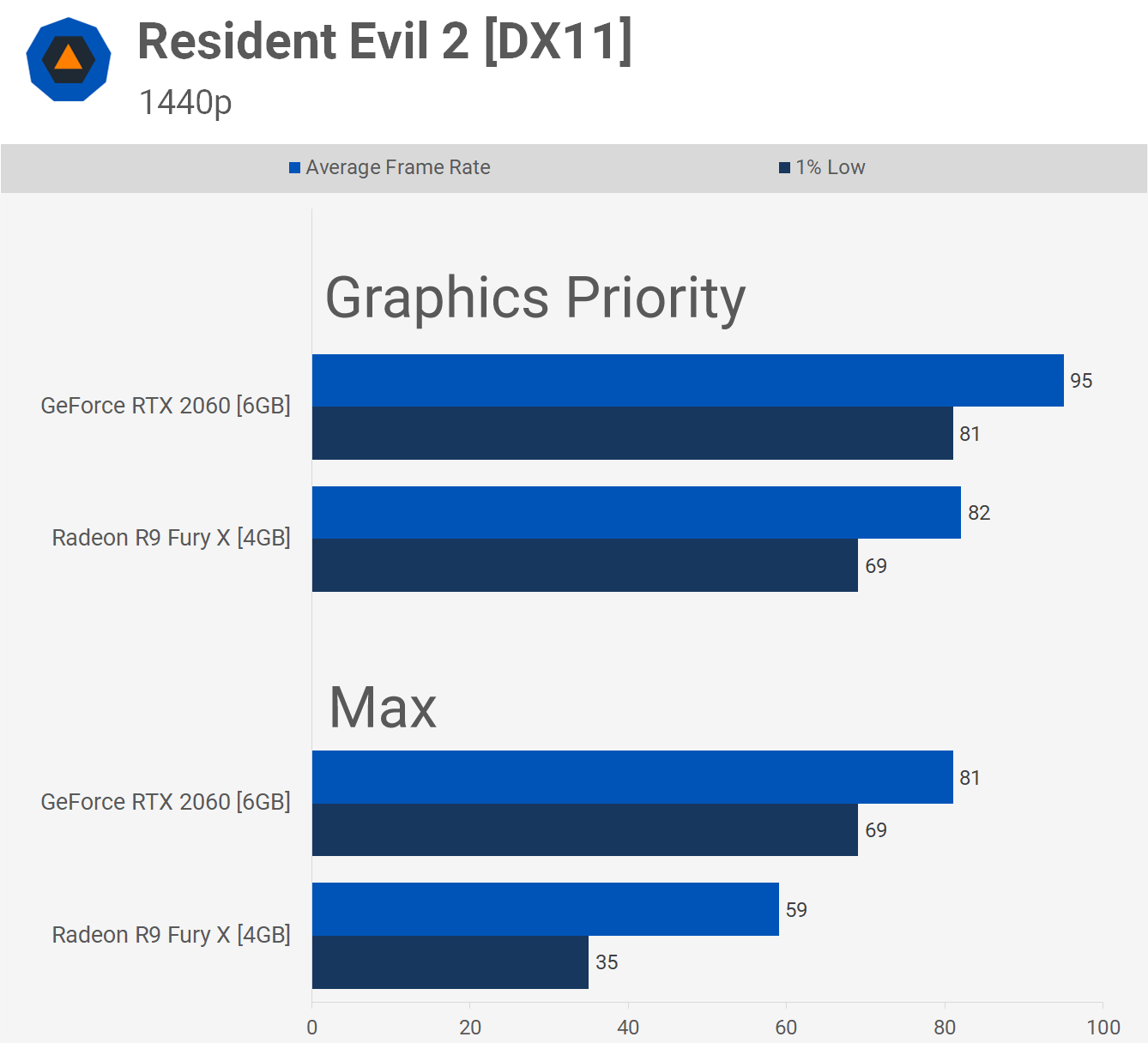

We can look to AMD's Fiji GPUs as an example of a part aging poorly due to limited VRAM. The Fury series was released in mid-2015 with 4GB of HBM memory. The Fury X was meant to compete with the GTX 980 Ti, which packed a slower but larger 6GB GDDR5 buffer. Just three years later, the Fury X was struggling to keep up with the GTX 980 Ti in modern titles at 1440p using high-quality textures.

We recently saw the Fury X struggle in Resident Evil 2, so much so that it was slower than the RX 580, and the experience wasn't even comparable. Whereas the RX 580 with its 8GB memory buffer offered silky smooth gameplay, the Fury X was a stuttery mess. That said, while the Fury X can't handle Resident Evil 2 with the Max preset, you can manage the quality settings for smooth performance. Dropping the quality preset from 'Max' to 'Graphics Priority' reduced memory allocation at 1440p from 7GB to just 4.5GB, and this was enough to revive the Fury X.

Honestly, image quality isn't noticeably different, but the gaming experience on the Fury X certainly is. Whereas the RTX 2060 sees only a 17% boost to the average frame rate, the Fury X sees a massive 40% increase. Frame time performance improved dramatically, too: the 1% low rose 97% and the 0.1% low 61%, and, most crucially, all stuttering was gone.

But back to the point: as it stands right now, the GeForce RTX 2060 has enough VRAM to power through today's games using maximum quality settings. As a GPU targeting 1440p gaming or high-refresh-rate 1080p gaming, it fits the bill nicely, at least in terms of performance.

We can all agree 8GB of GDDR6 memory would have been better down the road, but for those buying a new graphics card right now it seems like a non-issue. Rest assured, we'll be watching the RTX 2060 over the next few years and monitoring it closely against its peers.