LemmingOverlrd


Something to look forward to: AMD has given its datacenter offering a boost with new GPUs tailored for HPC and hardware virtualization. On one side, it is addressing the market's current trend toward machine learning and HPC; on the other, it is leaving the door open for a new class of cloud services in GPU computing.

The flip side of today's big 'Next Horizon' Zen 2 news was the introduction of the long-awaited next-gen Radeon GPU, Vega 20, built on TSMC's 7nm process node. The first products to come out of the AMD forge are the Radeon Instinct MI60 and MI50 GPUs.

At the AMD Next Horizon event today, David Wang, Senior Vice-President of Engineering at the Radeon Technologies Group, took to the stage to explain why AMD thinks the new Radeon Instinct cards are such winners. Much like in the Zen 2 presentation, the virtues of 7nm were played up once again: compared to the previous 14nm process, chips are twice as dense and offer 1.25x higher performance at the same power, or 50% lower power consumption at the same performance. Physically, the 7nm Vega delivers a better deal on considerably less real estate: the 'old' Vega 10 measured 510mm², yet the new 7nm Vega measures just 331mm², about 35% smaller, and AMD still managed to raise the transistor count slightly (12.5bn in Vega 10 vs. 13.2bn in Vega 20).
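As a quick sanity check on the "twice as dense" claim, the die sizes and transistor counts quoted above can be turned into transistor densities. The short sketch below uses only the article's figures; keep in mind that the 2x number describes the 7nm process in general, not this particular chip, so some gap is to be expected.

```python
# Transistor density from the article's figures
# (transistor count in billions, die area in mm^2).
chips = {
    "Vega 10 (14nm)": {"transistors_bn": 12.5, "area_mm2": 510},
    "Vega 20 (7nm)": {"transistors_bn": 13.2, "area_mm2": 331},
}

def density_mtr_per_mm2(transistors_bn: float, area_mm2: float) -> float:
    """Millions of transistors per square millimetre."""
    return transistors_bn * 1000 / area_mm2

densities = {name: density_mtr_per_mm2(**spec) for name, spec in chips.items()}
for name, d in densities.items():
    print(f"{name}: {d:.1f} MTr/mm^2")

# How much denser the 7nm chip is than its 14nm predecessor.
ratio = densities["Vega 20 (7nm)"] / densities["Vega 10 (14nm)"]
print(f"Density gain: {ratio:.2f}x")
```

By these numbers Vega 20 packs roughly 1.6x as many transistors per mm² as Vega 10, short of the headline 2x. One common reason real chips fall below a process's ideal scaling is that I/O, analog and memory-interface circuitry shrinks less than logic does.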

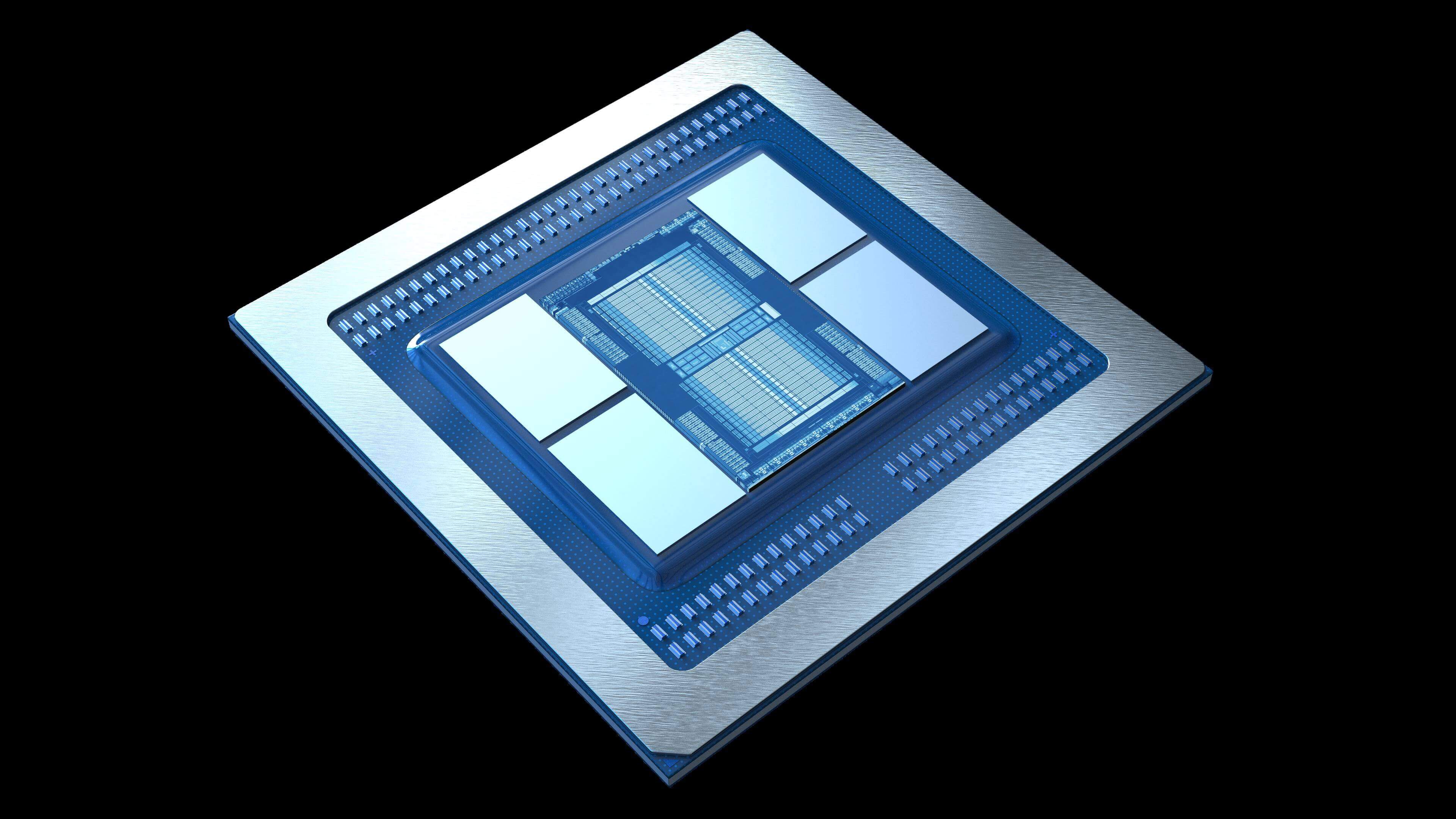

The 7nm Vega die at the center of the package, surrounded by 32GB of HBM2 memory.

The MI60 delivers the same number of compute units (64) as its predecessor, the MI25, while the MI50 has 60 compute units. Wang did not disclose clock speeds on stage, but AMD's press materials list boost clocks of 1800MHz and 1746MHz for the MI60 and MI50 respectively.
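Compute-unit counts and boost clocks are enough for a rough estimate of peak throughput, which can be set against the FP64 figures AMD quotes for these cards. The sketch below rests on assumptions that are not stated in the article: 64 stream processors per GCN compute unit, one fused multiply-add (counted as 2 FLOPs) per stream processor per clock, and FP64 running at half the FP32 rate.

```python
# Rough peak-throughput estimate from compute units and boost clock.
# Assumptions (not from the article): 64 stream processors per compute
# unit, one FMA (2 FLOPs) per stream processor per clock, FP64 at 1/2 rate.
SP_PER_CU = 64
FLOPS_PER_SP_PER_CLOCK = 2
FP64_RATE = 0.5

def peak_tflops(compute_units: int, boost_mhz: float, rate: float = 1.0) -> float:
    """Peak TFLOPS for the given precision rate (1.0 = FP32)."""
    flops = compute_units * SP_PER_CU * FLOPS_PER_SP_PER_CLOCK * boost_mhz * 1e6
    return flops * rate / 1e12

cards = {"MI60": (64, 1800), "MI50": (60, 1746)}
for name, (cus, mhz) in cards.items():
    fp32 = peak_tflops(cus, mhz)
    fp64 = peak_tflops(cus, mhz, FP64_RATE)
    print(f"{name}: ~{fp32:.1f} TFLOPS FP32, ~{fp64:.1f} TFLOPS FP64")
```

Under these assumptions the MI60 lands at roughly 7.4 TFLOPS FP64 and the MI50 at roughly 6.7 TFLOPS. Treat these as back-of-the-envelope ceilings at boost clock, not as official specifications.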

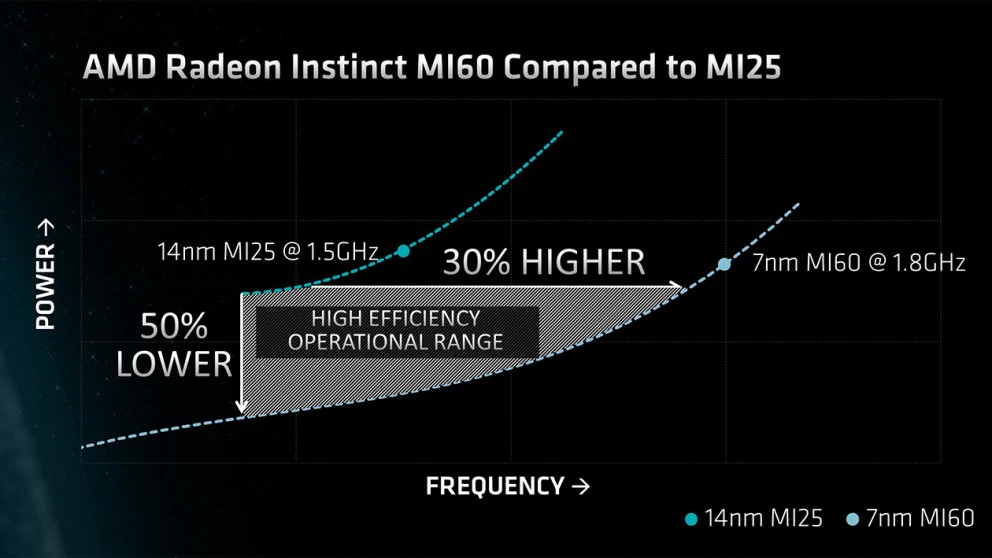

Looking more closely at AMD's own comparison of the MI60 against its predecessor, below, we can see that the MI60 draws slightly less power at 1.8GHz than the MI25 does at 1.5GHz. That amounts to a 30% gain in performance per watt at peak frequencies, but it also means that, were an MI60 clocked at the same speed as the MI25, power consumption would drop by about 50%. Much like with Zen 2, this gives AMD flexibility when packing a large number of these cards into a server.
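The 30% figure can be unpacked with a little arithmetic. This sketch assumes, as a simplification, that with the same 64 compute units performance scales linearly with clock speed, so the performance ratio is just the clock ratio; it then solves for the power ratio that would yield a 1.3x perf-per-watt gain.

```python
# Back-of-the-envelope perf-per-watt comparison, MI60 vs MI25.
# Assumption: same 64 compute units, performance scales linearly with clock.
MI25_CLOCK_GHZ = 1.5
MI60_CLOCK_GHZ = 1.8

perf_ratio = MI60_CLOCK_GHZ / MI25_CLOCK_GHZ  # 1.2x

def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative perf/W of the new card versus the old one."""
    return perf_ratio / power_ratio

# Power ratio (MI60 / MI25) that would produce the ~30% perf/W gain quoted.
implied_power_ratio = perf_ratio / 1.30
print(f"Performance ratio: {perf_ratio:.2f}x")
print(f"Implied MI60 power vs MI25: {implied_power_ratio:.1%}")
print(f"Check: {perf_per_watt_gain(perf_ratio, implied_power_ratio):.2f}x perf/W")
```

If the linear-scaling assumption holds, a 30% perf-per-watt gain corresponds to the MI60 running 20% faster while drawing roughly 8% less power than the MI25, which fits the "slightly less power" reading of AMD's chart.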

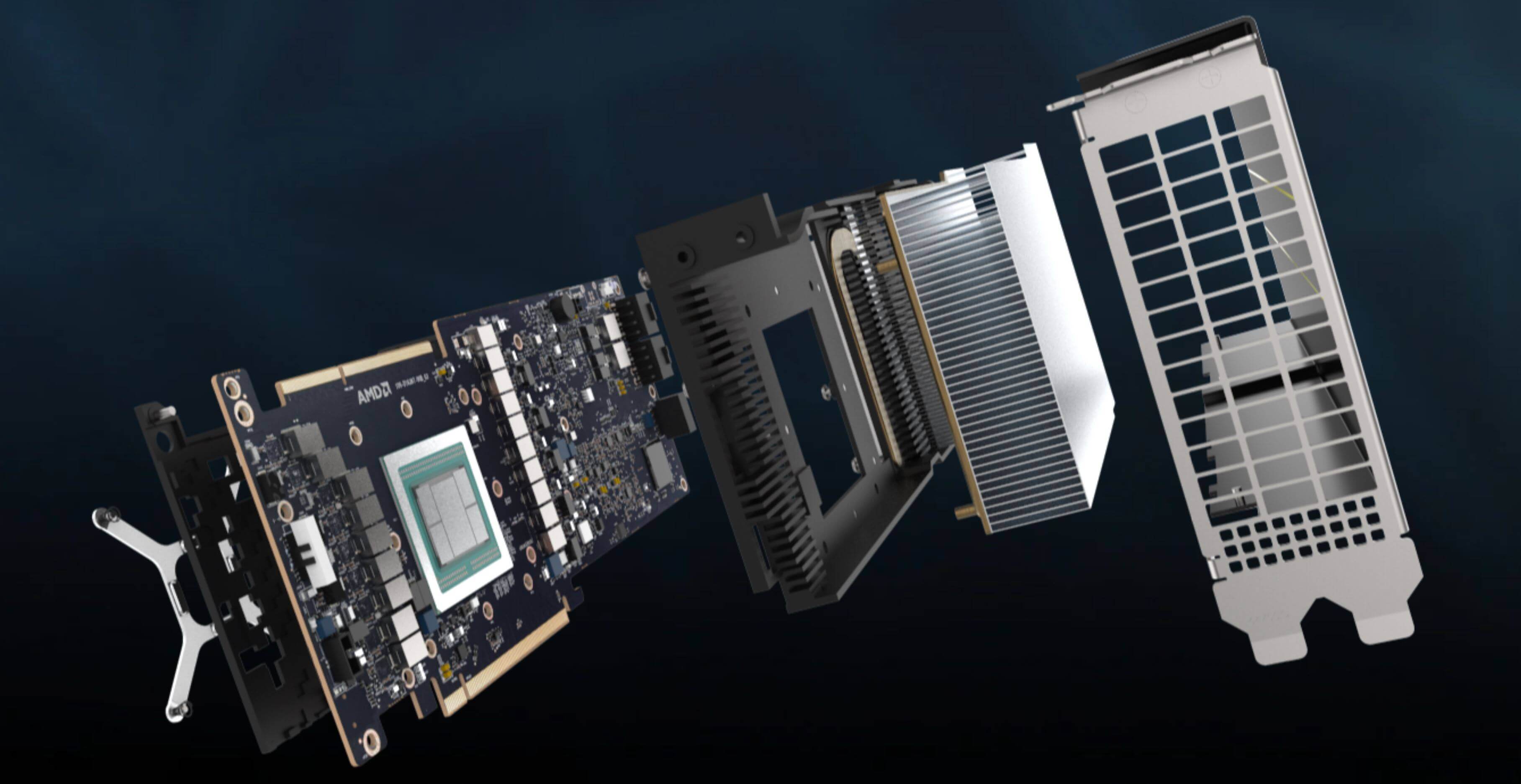

Despite the charted 50% lower power at the same frequency, things get a little dicey when you look at the MI60's power connectors. AMD's exploded view of the card clearly shows dual 8-pin PCI Express power connectors, suggesting it will once again draw in the high 200s or perhaps even 300W.
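The connector reasoning can be made concrete using the PCI Express power limits: up to 75W from an x16 slot, 75W per 6-pin connector and 150W per 8-pin connector. The sketch below adds these up; note that connectors set an upper bound on what a card may draw, not its actual consumption.

```python
# Maximum board power under PCI Express power limits:
# 75W from the x16 slot, 75W per 6-pin and 150W per 8-pin connector.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}

def max_board_power(connectors: list[str]) -> int:
    """Upper bound on board power for the slot plus the given aux connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)

print(max_board_power(["8-pin", "8-pin"]))  # 375
print(max_board_power(["8-pin", "6-pin"]))  # 300
```

A dual 8-pin layout allows up to 375W, whereas an 8-pin plus 6-pin arrangement would top out at exactly 300W, so the chosen layout leaves some headroom beyond the 300W mark.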

All the horsepower on the MI60 is backed by 32GB of HBM2 memory (16GB on the MI50), which delivers a mind-boggling 1TB/s of memory bandwidth. AMD also quotes 7.5 TFLOPS of FP64 performance (6.4 TFLOPS on the MI50). The cards also support PCIe 4.0, which opens up options for server-side multi-GPU setups and faster CPU-to-GPU communication.
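Both bandwidth figures follow from simple arithmetic. The sketch below assumes a memory configuration not stated in the article (four HBM2 stacks, each with a 1024-bit interface at 2.0 Gb/s per pin) and uses the PCIe 3.0/4.0 signalling rates of 8 and 16 GT/s with 128b/130b encoding on an x16 link.

```python
# Peak memory bandwidth = bus width (bits) x per-pin data rate (Gb/s) / 8.
# Assumption (not in the article): 4 HBM2 stacks x 1024 bits at 2.0 Gb/s/pin.
def hbm_bandwidth_gbs(stacks: int, bits_per_stack: int, gbps_per_pin: float) -> float:
    return stacks * bits_per_stack * gbps_per_pin / 8

# Per-direction PCIe link bandwidth with 128b/130b encoding (PCIe 3.0 and 4.0).
def pcie_x16_gbs(gt_per_s: float, lanes: int = 16) -> float:
    return gt_per_s * lanes * (128 / 130) / 8

print(f"HBM2: {hbm_bandwidth_gbs(4, 1024, 2.0):.0f} GB/s")
print(f"PCIe 3.0 x16: {pcie_x16_gbs(8):.1f} GB/s per direction")
print(f"PCIe 4.0 x16: {pcie_x16_gbs(16):.1f} GB/s per direction")
```

Under these assumptions the memory works out to about 1024 GB/s, and PCIe 4.0 doubles the host link to roughly 31.5 GB/s per direction, which is what makes it attractive for multi-GPU servers. Even so, the host link remains more than 30 times slower than on-package HBM2.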

Unlike Zen 2, the 7nm Vega does not appear to be a modular 'chiplet' design; it is more of an evolution of the MI25's 'Vega XT' chip. This is hardly awe-inspiring, with Nvidia remaining at the top of the GPU pecking order and AMD offering little to challenge it. Also, contrary to what many speculated, AMD has not gone down the ray tracing path with 7nm Vega. In a way, this hints at AMD's plans for Navi, its consumer GPU due in 2019, which may skip ray tracing entirely.

AMD describes the new cards as "datacenter GPUs" optimized for deep learning inference and training, as well as general high-performance computing. But AMD also has a new take on an old pitch: the MI60 and MI50 support full hardware GPU virtualization (i.e. MxGPU), which could create new revenue streams in cloud-based GPU services.

Far less impressive than today's CPU announcements, the new 7nm Vega chips are shaping up to be little more than a shrink of their 14nm predecessors.

The MI60 will become available later this year, while the MI50 will only arrive at the end of Q1 2019. Prices were not discussed.

https://www.techspot.com/news/77289-amd-launches-7nm-vega-radeon-instinct-mi60-mi50.html