As encryption becomes more commonplace in our devices, the job of law enforcement is becoming increasingly difficult. The central question in this "Crypto War" is whether there is a way to preserve consumers' privacy while still giving law enforcement a way in for matters of national security or to help solve crimes.

The view among privacy advocates is that encryption should be so strong that no one, not even the device's manufacturer or the government, should be able to gain access to the information. On the other hand, law enforcement officials have called for limited encryption and security that is just weak enough to allow them access in times of need.

These two ideas may seem mutually exclusive, but former Microsoft executive Ray Ozzie has come out with a solution that aims to please both sides. Originally published in Wired, his "Clear" proposal combines strong cryptography with a method for government officials to gain legitimate access in times of crisis.

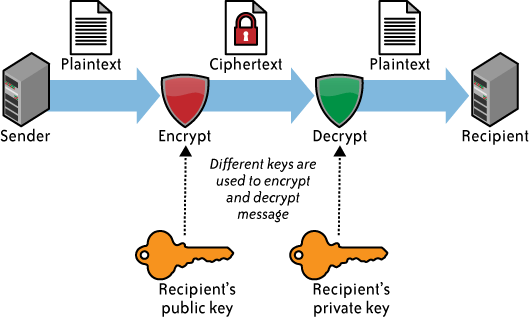

The system works through a pair of public and private keys, the same way public-key cryptography is widely used today. Anything encrypted with the public key can only be decrypted with the matching private key, and vice versa.
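To make the public/private key relationship concrete, here is a minimal sketch using textbook RSA with tiny primes. It is a toy for illustration only: the numbers, the `encrypt` and `decrypt` helpers, and the choice of RSA itself are assumptions made for this example, not details of the Clear proposal, and nothing this small is remotely secure.

```python
from typing import Tuple

# Toy textbook RSA with tiny primes: for illustration only, NOT secure.
p, q = 61, 53
n = p * q                  # public modulus
phi = (p - 1) * (q - 1)    # used only to derive the private exponent
e = 17                     # public exponent
d = pow(e, -1, phi)        # private exponent (modular inverse, Python 3.8+)

public_key = (e, n)
private_key = (d, n)


def encrypt(message: int, key: Tuple[int, int]) -> int:
    """Encrypt an integer smaller than the modulus with the public key."""
    exponent, modulus = key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, key: Tuple[int, int]) -> int:
    """Decrypt a ciphertext with the matching private key."""
    exponent, modulus = key
    return pow(ciphertext, exponent, modulus)


message = 65
ciphertext = encrypt(message, public_key)
recovered = decrypt(ciphertext, private_key)
print(ciphertext, recovered)  # prints "2790 65"
```

Anyone may hold `public_key`, but only the holder of `private_key` can turn the ciphertext back into the original message.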

Device manufacturers, such as Google and Apple, would generate a key pair and install the public key on all of their devices. The private key would be kept in an ultra-secure location that only the manufacturer can access, similar to the way code-signing keys are stored. Each phone would then automatically encrypt its user's PIN with the pre-installed public key. If law enforcement needed "exceptional access," it would have to physically obtain the device as well as a search warrant for the data on it.

Once this warrant is obtained, a special recovery mode on the phone can be enabled that presents the investigators with the encrypted PIN. This encrypted PIN and proof of the warrant are sent to the manufacturer, which can then decrypt the PIN for that specific device.
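The flow described so far can be sketched end to end in a few lines. This is a rough model under stated assumptions: the `Manufacturer` and `Device` classes, their method names, and the `warrant_is_valid` flag are all hypothetical, and toy RSA with small primes stands in for whatever real cryptography a manufacturer would use.

```python
# Toy sketch of the escrow flow; every class and method name is hypothetical.
# Textbook RSA with tiny primes keeps the example stdlib-only. NOT secure.


class Manufacturer:
    def __init__(self):
        p, q = 1009, 1013                              # toy primes
        self.n = p * q
        self.e = 65537
        # Private exponent: stands in for the key kept in the secure vault.
        self._d = pow(self.e, -1, (p - 1) * (q - 1))

    @property
    def public_key(self):
        # The only part that gets installed on devices.
        return (self.e, self.n)

    def decrypt_pin(self, encrypted_pin, warrant_is_valid):
        # The manufacturer refuses to act without proof of a warrant.
        if not warrant_is_valid:
            raise PermissionError("no valid warrant presented")
        return pow(encrypted_pin, self._d, self.n)


class Device:
    def __init__(self, pin, vendor_public_key):
        e, n = vendor_public_key
        # The phone stores the PIN encrypted under the vendor's public key.
        self.encrypted_pin = pow(pin, e, n)

    def recovery_mode(self):
        # Recovery mode reveals only the encrypted PIN to investigators.
        return self.encrypted_pin


vendor = Manufacturer()
phone = Device(pin=4821, vendor_public_key=vendor.public_key)

blob = phone.recovery_mode()                           # obtained from the seized phone
recovered_pin = vendor.decrypt_pin(blob, warrant_is_valid=True)
print(recovered_pin)  # prints 4821
```

Investigators never see the private key in this model; they only hand over the encrypted value and receive the PIN for that one device.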

Once this recovery mode is accessed, the phone is effectively "bricked." Data cannot be erased from it and the phone cannot be used further. Requiring physical access and intentionally bricking the phone means law enforcement cannot covertly gain access to a device. This is meant to ensure that they go through the proper legal channels and cannot abuse the system.
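The bricking behavior amounts to a one-way state change, which can be modeled as follows. This is only a sketch: the `Phone` class, the `BrickedError` exception, and the method names are invented for illustration, and a real design would enforce this in hardware rather than in a Python flag.

```python
class BrickedError(RuntimeError):
    """Raised when an operation is attempted on a bricked phone."""


class Phone:
    """Hypothetical model of a phone with a one-way recovery mode."""

    def __init__(self, encrypted_pin):
        self._encrypted_pin = encrypted_pin
        self._data = {"photos": 3, "messages": 12}
        self.bricked = False

    def enter_recovery_mode(self):
        # One-way transition: there is no method that resets this flag.
        self.bricked = True
        return self._encrypted_pin

    def make_call(self, number):
        if self.bricked:
            raise BrickedError("phone can no longer be used")
        return "calling " + number

    def erase(self):
        if self.bricked:
            raise BrickedError("data is frozen and cannot be erased")
        self._data.clear()


phone = Phone(encrypted_pin=b"opaque-escrow-blob")
print(phone.make_call("555-0100"))   # works before recovery mode

phone.enter_recovery_mode()
try:
    phone.make_call("555-0100")
except BrickedError as err:
    print("refused:", err)
```

Because the owner would immediately notice a phone that no longer works, the state change itself acts as the tamper-evidence the proposal relies on.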

While this is certainly one of the most robust solutions to the cryptography issue we have seen so far, it is not without its drawbacks. The most obvious is that a hacker who obtained the manufacturer's private key would effectively hold the key to every one of that manufacturer's devices. A similar key escrow system, the Clipper chip, was attempted back in the 1990s and it failed miserably.

Even with no clear answer or way to please both sides of the argument, it's still good to see new proposals that prompt meaningful debate.