What just happened? Nvidia's DLSS feature is better than ever these days: many implementations of the technology can give players tremendous performance upticks at little to no visual cost. Though it's only available in a select handful of games so far, that number grew by four today, as DLSS arrives in several new and existing titles.

As Nvidia's Andrew Burnes announced over on the official GeForce blog, the company's AI-based super sampling tech (an image renders at a lower resolution, and then gets upscaled to a higher one) is now available in Call of Duty: Black Ops Cold War, War Thunder, Enlisted, and Ready or Not.

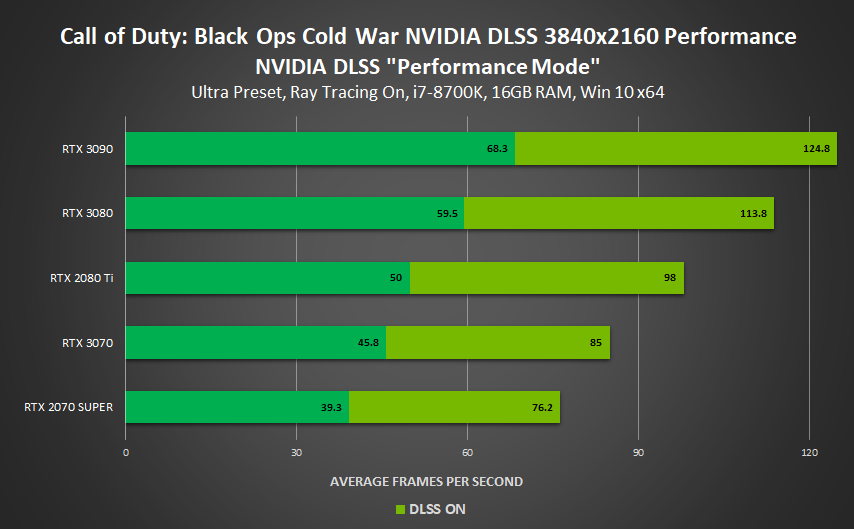

We'd need to test each of these games extensively to say how good their DLSS implementations are, but for now, we'll share some of Nvidia's performance claims. Take them with a grain of salt, as we're not sure how the company performs its benchmarks.

For starters, Black Ops Cold War is promising tremendous framerate improvements of up to 85 percent at 4K with ray tracing effects (ray-traced shadows and ambient occlusion) switched on. That takes the game from an uncomfortable 40 FPS on the RTX 2070 Super all the way up to a very playable 76 FPS with DLSS on.

Higher-end GPUs, such as the RTX 3090 or 3080, enjoy much higher DLSS-powered max framerates in Black Ops Cold War: up to 124 FPS on the 3090 and about 113 on the 3080. Combined-arms warfare title War Thunder's DLSS gains are quite a bit smaller, but it's also worth noting that the game doesn't feature any RTX tech. Regardless, you can expect performance to be "accelerated" by up to 30 percent here.

Squad-based MMOFPS Enlisted (another combined-arms title with infantry combat, tanks, and jets) is also an RT-free game, but it benefits from FPS gains of up to 55 percent at 4K. The game is already very playable across a wide range of GPUs, so DLSS is probably overkill: on the 3090, framerates climb from 163 to 233 FPS. Even the humble RTX 2060 can already push a solid 60 frames, but with DLSS, it goes up to 98.

Finally, we've got Ready or Not, which benefits from tremendous performance improvements with DLSS. The game is completely unplayable on lower-end hardware with ray-traced reflections, shadows, and ambient occlusion, but it becomes tolerable with DLSS. The 2060 can only handle a pathetic 15 FPS with RT features cranked up, but DLSS pushes that number up to 40. At the high end, we're seeing pre- and post-DLSS numbers of 46.7 and 95.2 FPS (respectively) for the 3090, and 39 and 86 FPS for the 3080.

Overall, the results are a bit of a mixed bag: some games see massive performance improvements, some get more modest increases, but in all cases, your FPS will improve materially with DLSS on in the above titles. It's worth noting that all of Nvidia's testing took place at 4K, so your mileage may vary at lower resolutions like 1080p and 1440p.
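If you want to sanity-check the gains yourself, the arithmetic is simple: subtract the DLSS-off framerate from the DLSS-on framerate and divide by the DLSS-off figure. Below is a minimal Python sketch that does this for the before-and-after pairs quoted above. The function name `uplift_percent` and the `quoted` table are our own illustration, not anything Nvidia provides, and because the quoted framerates appear to be rounded, the results won't necessarily line up exactly with Nvidia's headline "up to" percentages.

```python
def uplift_percent(fps_off: float, fps_on: float) -> float:
    """Relative FPS gain from enabling DLSS, expressed as a percentage."""
    return (fps_on - fps_off) / fps_off * 100.0


# (game, GPU) -> (FPS with DLSS off, FPS with DLSS on), as quoted in this article.
# All figures are Nvidia's own 4K claims, not independent measurements.
quoted = {
    ("Black Ops Cold War", "RTX 2070 Super"): (40, 76),
    ("Enlisted", "RTX 3090"): (163, 233),
    ("Enlisted", "RTX 2060"): (60, 98),
    ("Ready or Not", "RTX 2060"): (15, 40),
    ("Ready or Not", "RTX 3090"): (46.7, 95.2),
    ("Ready or Not", "RTX 3080"): (39, 86),
}

for (game, gpu), (off, on) in quoted.items():
    gain = uplift_percent(off, on)
    print(f"{game} on {gpu}: {off} -> {on} FPS (+{gain:.0f}%)")
```

The same function works for your own numbers at 1080p or 1440p: plug in the average framerate with DLSS off and on, and compare the result with the 4K figures here.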

https://www.techspot.com/news/87659-nvidia-dlss-arrives-four-more-titles-including-call.html