Nvidia is not happy with the data that Intel presented in a recent keynote about its Xeon Phi compute processors. According to Nvidia, Intel used old benchmarking software and hardware to paint Xeon Phi in a more competitive light against Nvidia's compute hardware.

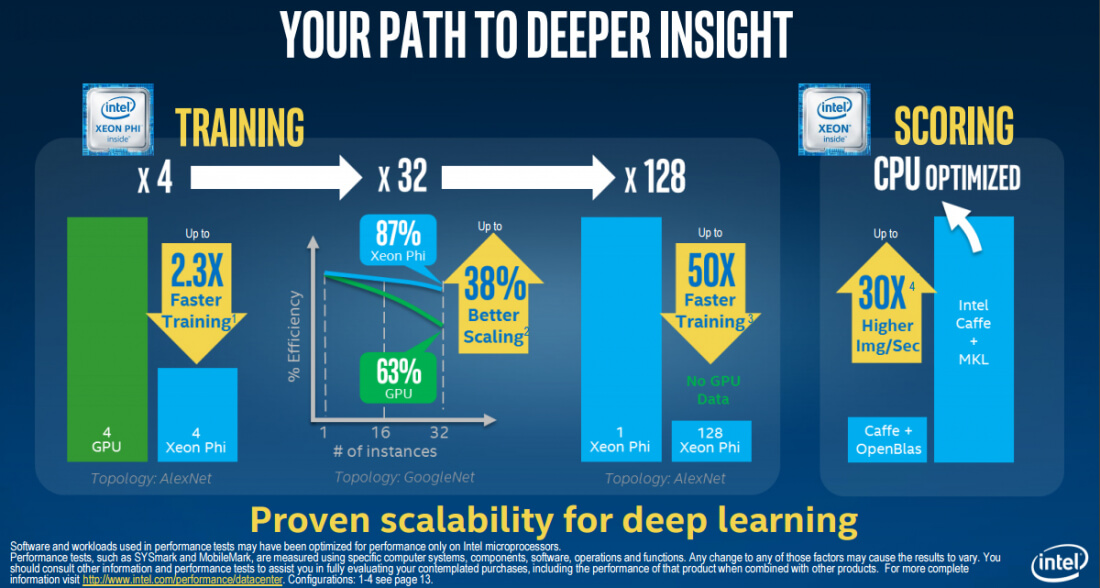

On a slide presented at ISC 2016, Intel claims that their Xeon Phi hardware is up to 2.3x faster at neural network training than a competing Nvidia GPU. Xeon Phi is also allegedly up to 38% better at scaling, according to the data and comparisons that Intel used on the above slide. Nvidia has disputed both of these claims in a recent blog post.

According to Nvidia, Intel skewed these results by running an out-of-date version of the Caffe AlexNet benchmark; with the latest version, Nvidia claims a 30 percent training performance advantage over Intel. Nvidia also says that Intel compared Xeon Phi to older Maxwell-based products, and that a comparison against modern Pascal parts would give Nvidia a 90 percent advantage.

Nvidia disputes the scalability claim as well, saying that Intel compared 32 Xeon Phi servers to 32 servers using Nvidia's four-year-old Kepler-based Tesla K20X hardware. Had Intel used Maxwell cards, which are not even based on Nvidia's latest architecture, Nvidia says the workloads would have scaled in a near-linear fashion up to 128 GPUs.

Naturally, Nvidia believes that GPUs are a better solution for deep learning workloads. Intel disagrees and has defended its benchmarking in a statement to Ars Technica:

"It is completely understandable that Nvidia is concerned about Intel in this space. Intel routinely publishes performance claims based on publicly available solutions at the time, and we stand by our data."