Over the past few weeks we've been exploring the world of FreeSync 2. This isn't a new technology - it was announced at CES 2017 - but it's only now that we're starting to see the FreeSync 2 ecosystem expand with new display options. As HDR and wide-gamut monitors become more of a reality over the next year, there's no better time to discuss FreeSync 2 than now.

And there's a fair bit of confusion around what FreeSync 2 really is, how it functions, and how it differs from the original iteration of FreeSync.

This article will explore and explain FreeSync 2 as the technology currently stands, as it's a little different to the tech AMD announced more than a year ago. Our detailed impressions of using a FreeSync 2 monitor will come next week.

What is FreeSync?

Here's a quick refresher on the original FreeSync. FreeSync is a brand name that refers to AMD's implementation of adaptive synchronization technology. It essentially allows a display to vary its refresh rate to match the render rate of a graphics processor, so that, for example, a game running at 54 FPS is displayed at 54 Hz, and when that game bumps up to 63 FPS the display also shifts to 63 Hz. This reduces stuttering and screen tearing compared to a monitor operating at a fixed refresh rate, say 60 Hz, displaying a game running at an unmatched render rate like 54 FPS.
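To make the 54 FPS example concrete, here is a minimal sketch of when frames reach the screen on a fixed 60 Hz display versus an adaptive sync display. It is a simplified model rather than a description of any real driver: it assumes v-sync is on for the fixed display (so a finished frame waits for the next refresh tick), that the GPU delivers frames at a perfectly steady pace, and the function names are made up for illustration.

```python
import math
from fractions import Fraction

def display_times_fixed(fps, refresh_hz, n_frames):
    """Times (seconds) at which frames appear on a fixed-refresh display.

    Assumption: v-sync is on, so each finished frame is held until the
    first refresh tick at or after the moment the GPU completes it.
    """
    times = []
    for i in range(1, n_frames + 1):
        ready = Fraction(i, fps)              # GPU finishes frame i
        tick = math.ceil(ready * refresh_hz)  # next refresh tick index
        times.append(Fraction(tick, refresh_hz))
    return times

def display_times_adaptive(fps, n_frames):
    """With adaptive sync the display refreshes as soon as a frame is ready."""
    return [Fraction(i, fps) for i in range(1, n_frames + 1)]

def frame_gaps_ms(times):
    """Milliseconds between consecutive frames appearing on screen."""
    return [round(float((b - a) * 1000), 2) for a, b in zip(times, times[1:])]

if __name__ == "__main__":
    print("fixed 60 Hz:", frame_gaps_ms(display_times_fixed(54, 60, 10)))
    print("adaptive:   ", frame_gaps_ms(display_times_adaptive(54, 10)))
```

Under these assumptions, the first ten frames on the fixed display arrive with eight gaps of about 16.67 ms and one gap of 33.33 ms, which is the uneven pacing perceived as stutter. On the adaptive display every gap is about 18.52 ms.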

FreeSync requires a few modifications to the display's internal controllers, and also a compatible graphics processor, to function. Nvidia's competing technology that achieves similar results, G-Sync, uses an expensive proprietary controller module. FreeSync is an open standard, and was adopted as the official VESA Adaptive Sync standard, so any display controller manufacturer can implement the technology.

The core technology of FreeSync is just this one feature: adaptive sync. Display manufacturers are able to integrate FreeSync into their displays through whatever means they like, provided it passes adaptive sync validation.

A monitor certified as FreeSync compatible only means that monitor supports adaptive sync; there's no extra validation for screen quality or other features, so just because a monitor has a FreeSync logo on the box doesn't necessarily mean it's a high quality product.

What is FreeSync 2?

And this is where FreeSync 2 comes in. It's not a replacement for the original FreeSync, and it's not really a direct successor, so the name 'FreeSync 2' is a bit misleading. What it does provide, though, are additional features on top of the original FreeSync feature set. Every FreeSync 2 monitor is validated to have these additional features, so the idea is that a customer shopping for a gaming monitor can buy one with a FreeSync 2 badge knowing it's of a higher quality than standard FreeSync monitors.

Both FreeSync and FreeSync 2 will coexist in the market. While the naming scheme doesn't suggest it, FreeSync 2 is effectively AMD's brand for premium monitors validated to a higher standard, while FreeSync is the mainstream option.

You're not getting old technology by purchasing a monitor with original FreeSync tech; in fact, the way adaptive sync works in FreeSync and FreeSync 2 is identical. Instead, FreeSync monitors simply miss out on the more premium features offered through FreeSync 2.

What are these new features? Well, it breaks down into three main areas: high dynamic range, low framerate compensation, and low latency.

FreeSync 2: High Dynamic Range

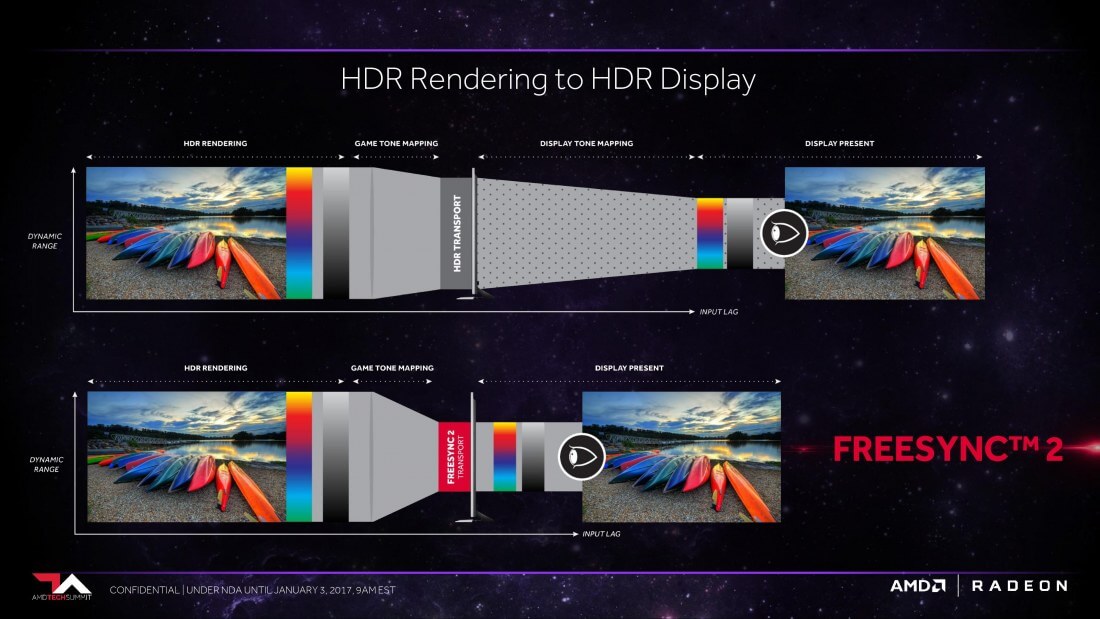

Let's tackle HDR support first. When AMD originally announced FreeSync 2 they went into detail on how their implementation of FreeSync 2 was going to differ from a standard HDR pipeline. FreeSync 2's HDR tone mapping was supposed to use calibration and specification data sent from the monitor to the PC to simplify the tone mapping process.

The idea was the games themselves would tone map directly to what the display was capable of presenting, with the FreeSync 2 transport passing the data straight to the monitor without the need for further processing on the monitor itself. This was in contrast to standard HDR tone mapping pipelines that see games tone map to an intermediary format before the display then figures out how to tone map it to its capabilities. Having the games do the bulk of the HDR tone mapping work was supposed to reduce latency, which is an issue with HDR gaming.

That's how AMD detailed FreeSync 2's HDR implementation back at CES 2017. While it sounded nice in theory, one of the key issues raised at the time was that the games themselves had to tone map specifically to FreeSync 2 displays. This meant games would need to integrate a FreeSync 2 API if this HDR implementation was ever to succeed, and we all know how difficult it is to convince a game developer to integrate a niche technology.
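The difference between the two pipelines can be sketched with a toy tone mapper. This is purely illustrative: the Reinhard-style curve below is a common textbook operator used as a stand-in, not the curve AMD, any game, or any monitor actually uses, and the function names and nit values are hypothetical.

```python
def tone_map(scene_nits, target_peak_nits):
    """Compress luminance into [0, target_peak_nits) with a Reinhard-style curve.

    Assumption: a stand-in operator for illustration only, not the
    tone mapping used by FreeSync 2, by games, or by display firmware.
    """
    if scene_nits < 0 or target_peak_nits <= 0:
        raise ValueError("luminance must be >= 0 and peak must be > 0")
    x = scene_nits / target_peak_nits
    return target_peak_nits * x / (1.0 + x)

def standard_pipeline(scene_nits, intermediate_peak_nits, display_peak_nits):
    """Two passes: the game maps to an intermediate format, then the
    display maps that result again to fit its own capabilities."""
    intermediate = tone_map(scene_nits, intermediate_peak_nits)
    return tone_map(intermediate, display_peak_nits)

def direct_pipeline(scene_nits, display_peak_nits):
    """One pass: the game maps straight to the display's reported peak,
    so the display has no second tone mapping step to perform."""
    return tone_map(scene_nits, display_peak_nits)

if __name__ == "__main__":
    scene = 4000.0  # hypothetical bright highlight, in nits
    print("standard:", standard_pipeline(scene, 1000.0, 400.0))
    print("direct:  ", direct_pipeline(scene, 400.0))
```

The point of the sketch is structural. In the direct pipeline the game needs to know the display's peak brightness up front, which is why the monitor has to report its capabilities to the PC, and the second pass on the display's own processor disappears.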

As FreeSync 2 stands right now, that original HDR implementation isn't quite ready yet. AMD's website on FreeSync 2 simply lists the technology as including "support for displaying HDR content," and there is no mention anywhere of FreeSync 2 supported games. And when you actually use a FreeSync 2 monitor, HDR support relies entirely on Windows 10's HDR implementation for now, which is improving slowly but isn't at the same level AMD's original solution is set to provide in an ideal environment.

The reason for this is that FreeSync 2 support was only introduced in AMD GPU Services (AGS) 5.1.1 in September 2017, so game developers have only had the tools to implement FreeSync 2's GPU-side tone mapping for a bit over 7 months now. Getting these sorts of technologies implemented in games can take a long time, and right now there's no word on whether any currently released games have used AGS 5.1.1 in the development process.

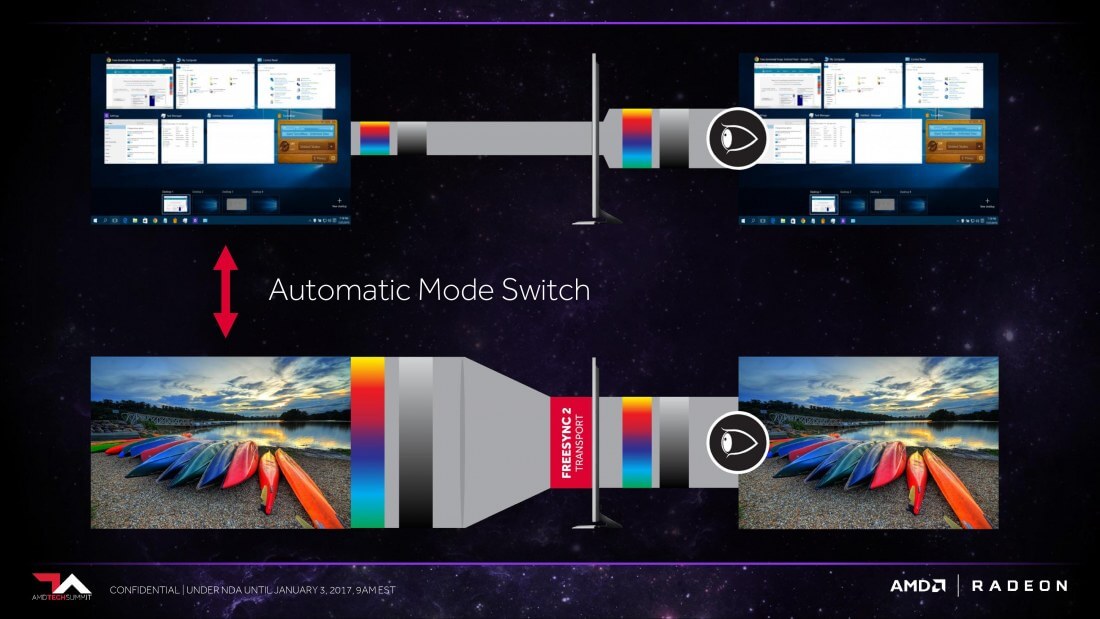

One of the features AMD mentioned as part of their HDR implementation was automatic switching between HDR and SDR modes, so you could game using the full HDR capabilities of your display while returning to a comfortable SDR for desktop apps. Unfortunately this doesn't seem to be functional at the moment either. Instead, FreeSync 2 once again relies on Windows' standard HDR implementation, which doesn't handle the HDR to SDR transition too well.

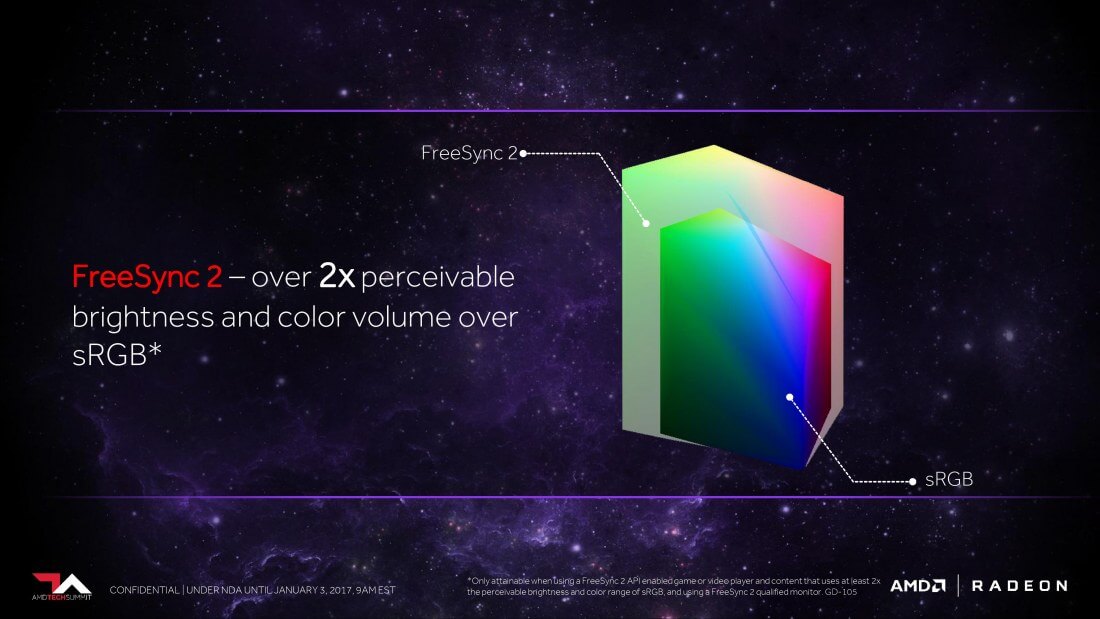

However, while the implementation might not be anything special at the moment, FreeSync 2 does guarantee several things relating to HDR. All FreeSync 2 monitors support HDR, so you're guaranteed to get an HDR-capable monitor if it has a FreeSync 2 badge. FreeSync 2 also ensures you can run both adaptive sync and HDR at the same time for an optimal gaming experience. And finally, AMD states that all FreeSync 2 monitors require "twice the perceptual color space of sRGB for better brightness and contrast."

It's unclear exactly what AMD means by "twice the perceptual color space," but the idea is a FreeSync 2 monitor would support a larger-than-sRGB gamut and higher brightness than a basic gaming monitor.

And it does appear that AMD's FreeSync 2 validation process is looking for more than just a basic HDR implementation. So far, every FreeSync 2 monitor that's available or has been announced meets at least the DisplayHDR 400 specification. This is a fairly weak HDR spec but we have seen some non-FreeSync 2 supposedly HDR-capable monitors fail to meet even the DisplayHDR 400 spec, so at least with FreeSync 2 you're getting a display that meets the new minimum industry standard for monitor HDR.

Of course, some monitors will exceed DisplayHDR 400, like the original set of Samsung FreeSync 2 monitors such as the CHG70 and CHG90; both of these displays meet the DisplayHDR 600 spec. Ideally I'd have liked to see FreeSync 2 stipulate a DisplayHDR 600 minimum, but 400 nits of peak brightness from DisplayHDR 400 should be fine for an entry-level HDR experience.

FreeSync 2: Low Input Latency

The second main FreeSync 2 feature is reduced input latency, which we briefly touched on earlier. HDR processing pipelines have historically introduced a lot of input lag, particularly on the display side, but FreeSync 2 stipulates low latency processing for both SDR and HDR content. AMD hasn't published a specific metric they are targeting for input latency, but it's safe to say 50 to 100ms of lag like you might get with a standard HDR TV would not be acceptable for a gaming monitor.

In 2018, FreeSync 2's low latency support appears to rely more on the display side than the original implementation announced at the start of 2017 did.

As we mentioned when discussing FreeSync 2's HDR implementation, the original idea was to push all tone mapping into the game engine to cut down on display-side tone mapping, thereby reducing input latency as the display's slow processor wouldn't need to get involved as much. As games haven't started supporting FreeSync 2 yet, today it seems this latency reduction is purely coming from better processing hardware in the display. For example, current Samsung FreeSync 2 monitors include a 'low latency' mode that is automatically enabled when FreeSync 2 is enabled.

FreeSync 2: Low Framerate Compensation

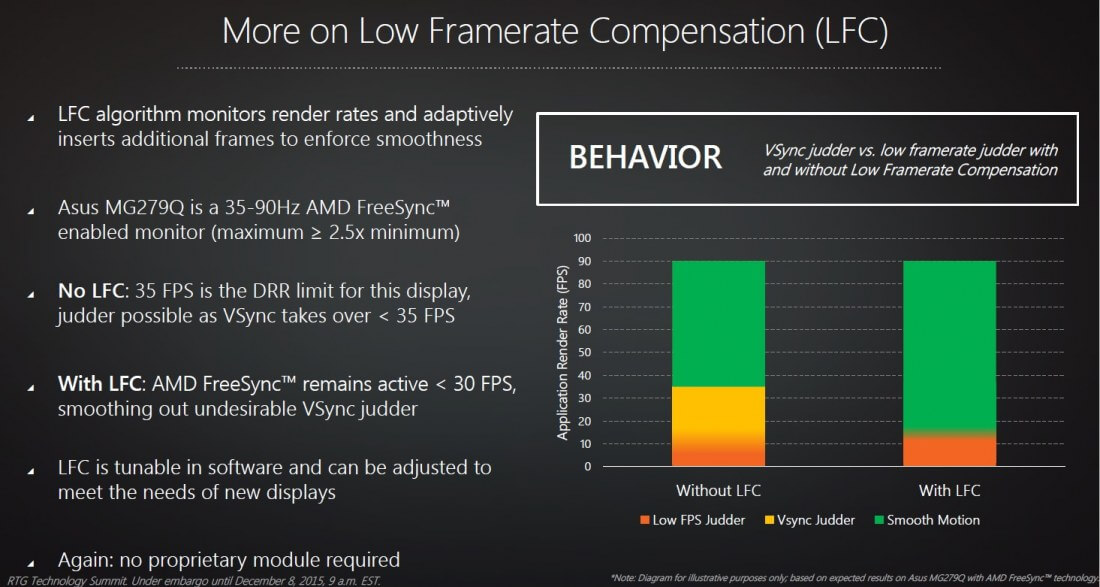

The final key feature is low framerate compensation. This is a feature that goes hand-in-hand with adaptive sync, ensuring adaptive sync functions at every framerate from 0 FPS up to the maximum refresh rate supported by the display.

There is one simple reason why we need low framerate compensation: displays can only vary their refresh rate within a certain window, for example 48 to 144 Hz. If you wanted to run a game below the minimum supported refresh rate, say at 40 FPS when the minimum refresh is 48 Hz, normally you'd be stuck with standard screen tearing or stuttering issues like you'd get with a fixed refresh monitor. That's because the GPU's render rate is out of sync with the display refresh rate.

Low framerate compensation, or LFC, extends the window in which you can sync the render rate to the refresh rate using adaptive sync. When the framerate falls below the minimum refresh rate of the monitor, frames are simply displayed multiple times and the display runs at a multiple of the required refresh rate.

In our previous example, to display 40 FPS using LFC, every frame is doubled and then this output is synced to the display running at 80 Hz. You can even run games at, say, 13 FPS and have that synced to a refresh rate; in that case the monitor would run at 52 Hz (to exceed the 48 Hz minimum) and then every frame would be displayed 4 times.
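The arithmetic behind those two examples can be sketched as follows. This assumes the simplest possible policy, picking the smallest whole-number multiplier that lifts the refresh rate to at least the panel's minimum; real drivers may choose multipliers differently, and `lfc_refresh` is a hypothetical name.

```python
import math

def lfc_refresh(fps, min_hz, max_hz):
    """Return (multiplier, refresh_hz) for a given framerate.

    Assumption: use the smallest multiplier that brings the refresh
    rate up to the minimum of the display's variable refresh window.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > max_hz:
        raise ValueError("fps above the display's maximum refresh rate")
    if fps >= min_hz:
        return 1, fps  # already inside the window, no repeats needed
    multiplier = math.ceil(min_hz / fps)
    refresh_hz = fps * multiplier
    if refresh_hz > max_hz:
        raise ValueError("refresh window too narrow for LFC at this fps")
    return multiplier, refresh_hz

if __name__ == "__main__":
    for fps in (54, 40, 13):
        mult, hz = lfc_refresh(fps, min_hz=48, max_hz=144)
        print(f"{fps} FPS -> each frame shown {mult}x at {hz} Hz")
```

With a 48 to 144 Hz window, 40 FPS comes out as each frame shown twice at 80 Hz and 13 FPS as each frame shown four times at 52 Hz, matching the figures above.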

The end result is that LFC effectively removes the minimum refresh rate of adaptive sync displays, but for LFC to be supported, the monitor needs to have a maximum refresh rate that is at least double the minimum refresh rate. This is why not all FreeSync monitors support LFC; some come with just 48 to 75 Hz refresh windows, which doesn't meet the criteria for LFC. However, in the case of FreeSync 2, every monitor validated for this spec will support LFC so you won't have to worry about the minimum refresh rate of the monitor.
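A short sketch shows why the "at least double" rule matters. It checks the rule itself, then lists the whole-number framerates below the minimum that no amount of frame repetition can bring into the window. The function names are hypothetical and the model only considers integer framerates and integer multipliers.

```python
def supports_lfc(min_hz, max_hz):
    """The criterion from the text: max refresh is at least double the min."""
    return max_hz >= 2 * min_hz

def uncovered_framerates(min_hz, max_hz):
    """Integer framerates below min_hz that no whole multiple can place
    inside the [min_hz, max_hz] window."""
    gaps = []
    for fps in range(1, min_hz):
        multiplier = -(-min_hz // fps)  # integer ceiling of min_hz / fps
        if fps * multiplier > max_hz:
            gaps.append(fps)
    return gaps

if __name__ == "__main__":
    for window in ((48, 144), (48, 75)):
        print(window, supports_lfc(*window), uncovered_framerates(*window))
```

For the 48 to 144 Hz window nothing is left uncovered. For the 48 to 75 Hz window, framerates from 38 to 47 FPS fall into a gap: they are below 48 Hz on their own, and doubling them overshoots 75 Hz.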

Current FreeSync 2 Monitors

This wouldn't be a look at FreeSync 2 in 2018 without exploring what FreeSync 2 monitors are actually available right now, and what monitors are coming.

Currently there are only three FreeSync 2 monitors on the market, and all are from Samsung's Quantum Dot line-up: the C27HG70 and C32HG70 as 27- and 32-inch 1440p 144Hz monitors respectively, along with the stupidly wide C49HG90, a double-1080p 144Hz monitor. All three are DisplayHDR 600 certified.

Set for release this year are several other options. We have the BenQ EX3203R, a 32-inch curved VA panel with a 1440p resolution and 144Hz refresh rate, certified for DisplayHDR 400. The AOC Agon AG322QC4 appears to use the same panel, so it has the same specifications. Then there's the Philips 436M6VBPAB (seriously, who named that monitor) which is a 43-inch 4K 60Hz display sporting DisplayHDR 1000 certification.

The last thing I'll mention here is GPU support: FreeSync 2 requires an AMD graphics card as you might expect of an AMD technology, and according to this list, everything from AMD's RX 200 series or newer with the exception of a few products is supported. There are also a bunch of APUs with integrated graphics that work.

If you have an Nvidia GPU, FreeSync 2 monitors will work, and you will get most of the benefits including support for HDR. What you won't get is adaptive sync support; if you want that, you'll need to find a monitor with Nvidia's equivalent G-Sync HDR technology.

Next week we'll follow up with our hands-on impressions on FreeSync 2 monitors, thoughts on using FreeSync and what needs to be improved.
