As you've undoubtedly heard, the third installment of BioWare's Mass Effect trilogy hit shelves last Tuesday. As one of the year's most anticipated launches, it's no surprise that it boasts an aggregate review score of over 90. However, as has become common with high-profile PC releases, droves of dissatisfied users have slapped the title with negative feedback on Metacritic, Amazon and elsewhere.

Besides hostility over day-one DLC and being forced to use Origin, many fans seem displeased with core aspects of the game, noting that it makes little use of decisions imported from previous saves and lacks an epic conclusion. As usual, we have no intention of reviewing the gameplay or storytelling of Mass Effect 3 (that's covered in our full-blown review of the PC version). Instead, this piece is dedicated to the nuts and bolts of the game, analyzing how it runs on today's finest PC hardware.

As with previous entries, Mass Effect 3 is built on Unreal Engine 3, and like its predecessors it's a DirectX 9 title. As such, there's no fancy tessellation, and no additional dynamic lighting, depth of field or ambient occlusion effects. Although ME3 probably won't win any awards for being the best-looking PC game of 2012, fans generally approved of the way the previous titles looked, so they shouldn't be too disappointed this time.

Besides, there are some benefits to sticking with UE3. For starters, the engine should be highly optimized by now. The developers say these optimizations allowed them to improve everything from storytelling methods to overall graphics and cinematics. They also say the extra performance let them put more enemies on screen at once, making for a richer and livelier experience.

Again, we'll have to take their word on all of that, because we won't be examining those aspects of the game. What we will do is benchmark ME3 at three resolutions with two dozen GPU configurations, including AMD's new Radeon HD 7000 series. We'll also see how performance scales when overclocking an eight-core FX-8150, and benchmark a handful of other Intel and AMD processors.

In case you lost count, we'll be testing 24 graphics card configurations from AMD and Nvidia across all price ranges. The latest official drivers were used for every card. We installed an Intel Core i7-3960X in our test bed to remove any CPU bottlenecks that could influence high-end GPU scores.

Core i7 Test System Specs

- Intel Core i7-3960X Extreme Edition (3.30GHz)

- 4x 4GB G.Skill DDR3-1600 (CAS 8-8-8-20)

- Gigabyte G1.Assassin2 (Intel X79)

- OCZ ZX Series (1250W)

- Crucial m4 512GB (SATA 6Gb/s)

- AMD Radeon HD 7970 (3072MB)

- Gigabyte Radeon HD 7950 (3072MB)

- AMD Radeon HD 7870 (2048MB)

- AMD Radeon HD 7850 (2048MB)

- HIS Radeon HD 7770 (1024MB)

- HIS Radeon HD 7750 (1024MB)

- HIS Radeon HD 6970 (2048MB)

- HIS Radeon HD 6950 (2048MB)

- HIS Radeon HD 6870 (1024MB)

- HIS Radeon HD 6850 (1024MB)

- HIS Radeon HD 6790 (1024MB)

- HIS Radeon HD 6770 (1024MB)

- HIS Radeon HD 6750 (1024MB)

- HIS Radeon HD 6670 (1024MB)

- AMD Radeon HD 5870 (2048MB)

- AMD Radeon HD 5830 (1024MB)

- HIS Radeon HD 5670 (1024MB)

- Gigabyte GeForce GTX 580 (1536MB)

- Gigabyte GeForce GTX 570 (1280MB)

- Gigabyte GeForce GTX 560 Ti (1024MB)

- Gigabyte GeForce GTX 560 (1024MB)

- Nvidia GeForce GTX 480 (1536MB)

- Gigabyte GeForce GTX 460 (1024MB)

- Gigabyte GeForce GTX 550 Ti (1024MB)

- Microsoft Windows 7 Ultimate SP1 64-bit

- Nvidia Forceware 295.73

- AMD Catalyst 12.2 + AMD Radeon HD 7800 driver (8.95.5)

We used Fraps to measure frame rates during a minute of gameplay from ME3's second single-player level, the Mars Prothean Archives. The test begins at the first checkpoint, where you start on a rocky cliff. After running for roughly 10 seconds, we found our first gang of baddies and engaged them.
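Fraps can report the average frame rate itself, but the arithmetic behind that number is simple: frames rendered divided by elapsed time. The following is a minimal Python sketch, assuming the input is a list of cumulative per-frame timestamps in milliseconds (the kind of data a frametime log records). The function name `average_fps` is hypothetical and not part of Fraps.

```python
from typing import Sequence


def average_fps(timestamps_ms: Sequence[float]) -> float:
    """Average frame rate over a run, from cumulative frame timestamps (ms).

    N timestamps bound N - 1 frame intervals, so the rate is
    (N - 1) / elapsed seconds.
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")
    elapsed_s = (timestamps_ms[-1] - timestamps_ms[0]) / 1000.0
    if elapsed_s <= 0:
        raise ValueError("timestamps must increase over the run")
    return (len(timestamps_ms) - 1) / elapsed_s


# Hypothetical input: one frame every 20 ms for one second, i.e. 50 fps.
print(average_fps([i * 20.0 for i in range(51)]))
```

For a one-minute run like ours, the timestamps would span roughly 60,000 ms. Counting intervals (N - 1) rather than timestamps keeps the result from being inflated by one extra frame.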

By default, ME3's frame rate is capped at 60fps or your monitor's refresh rate. To work around this, add "UseVsync=False" (without the quotes) to the GameSettings.ini config file in your My Documents folder. With VSync disabled, the frame rate is no longer capped.
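Editing the file by hand is all that's required, but the same change can be scripted. The Python sketch below is one way to do it, under a few assumptions: the helper names `disable_vsync` and `patch_file` are hypothetical, the file is assumed to be plain UTF-8 text, and the optional `section` argument (a header name such as `SystemSettings`) is a guess about the file's layout rather than something confirmed here. If a `UseVsync=` line already exists, it is rewritten instead of duplicated.

```python
import re
from pathlib import Path
from typing import Optional

# Matches an existing "UseVsync=..." line (any value, any case), one line only.
_VSYNC_LINE = re.compile(r"^[ \t]*UseVsync[ \t]*=[^\r\n]*$", re.IGNORECASE | re.MULTILINE)


def disable_vsync(ini_text: str, section: Optional[str] = None) -> str:
    """Return ini_text with UseVsync=False set.

    An existing UseVsync line is rewritten in place. Otherwise the line is
    inserted right after the [section] header (hypothetical; used only if
    given and found) or appended at the end of the file.
    """
    if _VSYNC_LINE.search(ini_text):
        return _VSYNC_LINE.sub("UseVsync=False", ini_text)

    lines = ini_text.splitlines()
    if section is not None:
        header = f"[{section}]".lower()
        for i, line in enumerate(lines):
            if line.strip().lower() == header:
                lines.insert(i + 1, "UseVsync=False")
                return "\n".join(lines) + "\n"
    lines.append("UseVsync=False")
    return "\n".join(lines) + "\n"


def patch_file(path: Path, section: Optional[str] = None) -> None:
    """Back up the config file, then write the VSync-disabled version."""
    # Assumption: the .ini file is UTF-8 (or plain ASCII) text.
    original = path.read_text(encoding="utf-8")
    path.with_name(path.name + ".bak").write_text(original, encoding="utf-8")
    path.write_text(disable_vsync(original, section), encoding="utf-8")
```

To use it, pass `patch_file` the full path to GameSettings.ini under your My Documents folder; the untouched original is kept alongside it with a `.bak` suffix.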

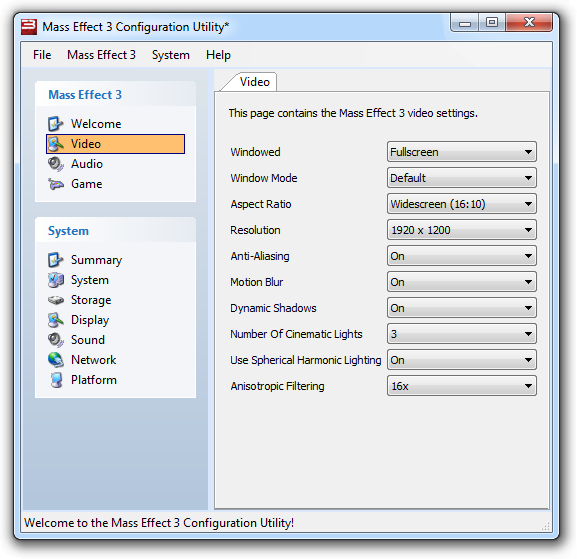

We tested ME3 at three common desktop display resolutions: 1680x1050, 1920x1200 and 2560x1600 with Anti-Aliasing enabled. Other quality settings include Motion Blur, Dynamic Shadows, Spherical Harmonic Lighting, Number of Cinematic Light (3) and Anisotropic Filtering (16x).