Equipped with his iconic night vision goggles and a new counter-terror agency, Sam Fisher has returned to foil another anti-US plot in Ubisoft's sixth Splinter Cell game. Titled Blacklist, the latest entry is a sequel to 2010's Splinter Cell: Conviction and attempts to find a better balance between stealth and action while reintroducing some franchise favorites.

Many Splinter Cell fans felt that Conviction veered decidedly toward the "bag 'em and tag 'em" side of shooters, encouraging you to kill jumpy guards instead of avoiding them. While Blacklist refines Conviction's "Mark and Execute" system with a mechanic called "Killing in Motion," you can also look forward to classic stealth abilities such as dragging downed foes and baiting enemies with vocal noise – even if it's not from Michael Ironside, the longstanding voice of Fisher who is replaced by actor Eric Johnson in Blacklist.

All of that seems to have struck a positive chord with most reviewers, but some have been less than enthusiastic about Blacklist's graphics. For whatever it's worth, while Blacklist may not be the best-looking game of 2013, we wouldn't dismiss it as a cheap console port either, as a partnership between Ubisoft and Nvidia helped ensure the title was done right on PC (as should also be the case with Watch Dogs and Assassin's Creed 4).

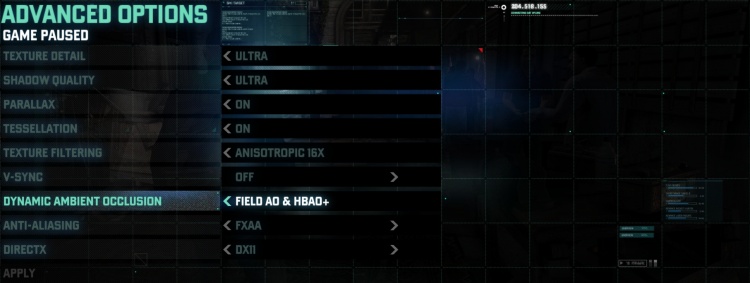

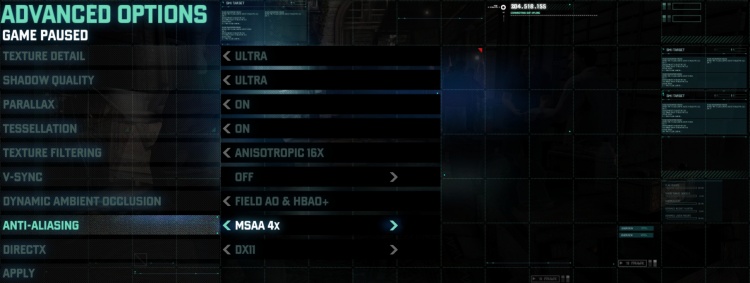

Like Conviction, Blacklist was built using LEAD, a heavily modified version of Unreal Engine 2.5 with Havok physics that Ubisoft seems to prefer over UE3. PC gamers can look forward to a typical array of graphics options including TXAA antialiasing, soft shadows, horizon-based ambient occlusion and advanced DX11 tessellation – all of which we plan to test with nearly two dozen graphics setups and a handful of processors...

Testing Methodology

In total, we tested 23 AMD and Nvidia graphics card configurations spanning all budgets. We tested using the ultra-quality preset in three configurations: FXAA with Field AO and HBAO+ (the default); 4x MSAA with Field AO and HBAO+; and FXAA with Field AO only (HBAO+ disabled).

We used the latest beta driver designed for Blacklist. The AMD Catalyst 13.8 beta 2 driver claims a 9% gain in the title when it's running on ultra at 2560x1600.

The GeForce 326.80 beta is said to offer the best experience for Blacklist while also providing an SLI profile. No performance claims have been made, so we're not exactly sure how much faster this beta driver is compared to the current official driver.

To measure frame rates, we used Fraps to record a minute of gameplay from the first mission. The Core i7-4770K was used at its default operating frequency for all the GPU tests.

Finally, we know that many of you will be interested in CPU scaling performance as well, so we clocked the Core i7-4770K and FX-8350 processors at several frequencies to see what kind of impact this has on performance when using a GTX Titan with the ultra-quality preset. Additionally, we ran similar tests using a range of processors including the Core i7-3960X, i5-3570K, i3-3220 and AMD Phenom II X6 1100T.

We'll look for an average of 60fps for stutter-free gameplay.
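For readers who want to check their own runs against that 60fps target, here is a minimal sketch of how an average frame rate can be derived from a frame-time log. It assumes you already have a list of cumulative frame timestamps in milliseconds (the kind of data a frame-time capture tool can export); the function names `average_fps` and `meets_target` are hypothetical and are not part of Fraps or of our test setup.

```python
def average_fps(timestamps_ms):
    """Return the average frames per second for one run.

    timestamps_ms: cumulative frame timestamps in milliseconds,
    in the order the frames were rendered (assumed input format).
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")
    elapsed_s = (timestamps_ms[-1] - timestamps_ms[0]) / 1000.0
    if elapsed_s <= 0:
        raise ValueError("timestamps must be increasing")
    # N timestamps mark the boundaries of N - 1 frame intervals.
    return (len(timestamps_ms) - 1) / elapsed_s


def meets_target(timestamps_ms, target_fps=60.0):
    """True if the run's average frame rate reaches the target."""
    return average_fps(timestamps_ms) >= target_fps


if __name__ == "__main__":
    # Synthetic example: a frame every 20 ms for one second.
    run = [i * 20.0 for i in range(51)]
    print(average_fps(run), meets_target(run))
```

Keep in mind that an average hides short dips, so a run can clear the target on paper and still show occasional hitches.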
