1974 - 1980: Bootstrapping a New Industry

Intel and Motorola's virtual duopoly comes to an abrupt end

Like its predecessor the 8008, Intel's 8080 suffered from initial delays in development but would later be recognized as one of the most influential chips in history. Company management focused on the high profit memory business, particularly complete memory systems that were compatible with the lucrative mainframe market.

The 8080 launched in April 1974. While its initial development had been delayed, Intel's primary competitor, Motorola, had its own share of issues adapting the 6800 and its n-MOS process to a single 5-volt input (the 8080 required three separate voltage inputs), which delayed its introduction by almost seven months. Without a direct competitor, Intel had a new market mostly to itself; it just needed to find customers with enough imagination to see the possibilities.

Initial development of the 8080 didn't start until mid-1972, some six months after Federico Faggin began lobbying Intel's management for it. By this time, the potential microprocessor markets had started to present themselves. The prevailing attitude up until then had centered on the microprocessor having to co-exist with or otherwise usurp the more powerful mainframe and minicomputer. Computers were still seen as an expensive business and research tool, and the markets for a new generation of relatively inexpensive personal machines and industrial controllers didn't exist, nor in many cases had they even been imagined.

Ted Hoff shows the Intel 8080 processor.

The remaining parts of the puzzle, an operating system and consumer-friendly packaging, were also taking their first steps. Intel hired Gary Kildall, a Ph.D. in compiler design who taught at the U.S. Navy's Postgraduate School, to write software that would emulate a (yet to be built) 8080 system on a DEC PDP-10 minicomputer. The software, Interp/80, would be complemented by a high-level language mirroring XPL (in use for mainframes at the time) called PL/M.

Kildall accelerated the process by cobbling together a system from an Intellec-8 development kit and a donated floppy drive, which alleviated downtime from lengthy delays associated with the teletype method of data input-output on the DEC time-shared minicomputer. The resulting code from the Intellec-8 system would become CP/M (Control Program for Microcomputers), the dominant operating system for the next seven years until the widespread use of MS-DOS. By the time MS-DOS debuted, 500,000 computers had shipped with the CP/M operating system, and it could have been many times more had history played out a little differently.

Kildall offered CP/M to Intel for $20,000 and the company declined the offer. It wasn't interested in an operating system that ran from disk, nor in any non-business related software in general. CP/M sales were initially limited to a couple of users: Omron Corporation of Japan and Lawrence Livermore Labs. Kildall's third customer, IMSAI, would propel CP/M to pre-eminence among personal computer operating systems for the next seven years.

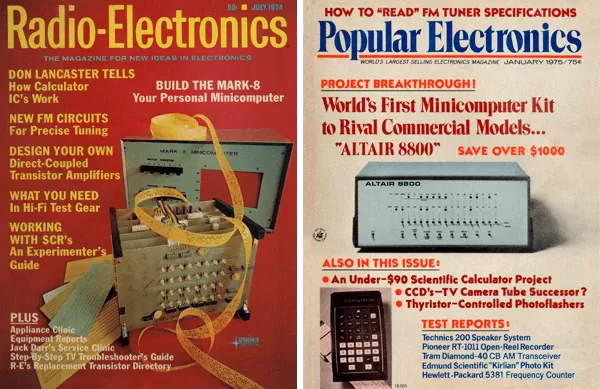

As Intel's 8080 began quantity production, Ed Roberts, owner of a small company called Micro Instrumentation and Telemetry Systems, or MITS, which originally catered to amateur (ham) radio and model rocketry enthusiasts, looked toward the budding hobbyist computer movement for his company's continued survival.

MITS' latest venture, a basic calculator kit, had no sooner started production than Texas Instruments launched its own range of cheaper and more sophisticated models, which killed off many smaller companies. Roberts quickly looked into the feasibility of capitalizing on the interest shown in Jonathan Titus' Mark-8 "source and build it yourself" kit, which had recently gained wide recognition among electronics aficionados.

Roberts was able to secure supply of the 8080 for $75 a processor (Intel's list price was $360, as was Motorola's for the 6800) and constructed the Altair 8800 to use sourced component boards plugged into a common backplane that MITS designed. Very crude when judged by any consumer electronics standard, it was designed exclusively for the hobbyist and the new wave of college students entering the computing field.

As noted, Intel's 8008 processor provided the impetus for Jonathan Titus to build his own computer. The resulting Mark-8 was featured in the July 1974 issue of Radio-Electronics Magazine in an article with assembly instructions and a list of parts manufacturers since the components had to be sourced by hobbyists. Not to be outdone, Popular Electronics would feature the Altair 8800 in its January 1975 issue.

Ed Roberts had expected that a few hundred hobbyists would buy his computer. The reality was that the magazine article led to around a thousand orders, which grew to 5,000 in six months, and 10,000 by December 1976. This wasn't quite the windfall for MITS that it might appear.

The basic cost of the system was kept low to encourage sales, with profit loaded against expensive hardware expansion options. This approach was modelled upon what had served IBM well, at least until core memory prices tumbled and Intel began selling IBM System/360 compatible DRAM memory systems. The same issue befell MITS as hobbyists began offering cheaper (and often more reliable) alternatives to MITS's own range, beginning with Homebrew Computer Club member Robert Marsh's Altair compatible four-kilobyte static RAM module.

The Popular Electronics article made an immediate impression on Bill Gates and Paul Allen, who promptly approached MITS about writing a custom BASIC program for the machine. Gates, Allen, and Monte Davidoff, who would write the floating point routines, had what would become Altair BASIC ready for demonstration in February.

The first computer language written expressly for a personal computer began shipping with the Altair (or expansion options for the machine) at $75 a copy or $500 as standalone retail software. Bootleg copies surfaced almost immediately, leading to Bill Gates' (in)famous "Open Letter to Hobbyists" in January 1976. Bill Gates made 'Micro-Soft' in his own image – unashamed to be a business when most of the era belonged to the enthusiast entrepreneur.

The copying of Altair BASIC punch tapes ushered in a standing tradition of new software being quickly made available by piracy, followed by the software vendor claiming exorbitant losses. Bill Gates and Paul Allen's Micro-Soft would go on to tailor BASIC to the needs of Amiga and Commodore, Radio Shack, Atari, IBM, NEC, Apple and a host of specific systems.

The Altair 8800 proved a resounding success, not least because it had no real competition when it became available. IMS Associates quickly cloned the Altair as the IMSAI 8080, albeit using a derivative of the CP/M operating system rather than BASIC.

Within a year of its release, IMSAI was fast eroding the Altair's sales with 17% of the personal computer market versus the latter's 22%. Processor Technology's newly launched SOL-10 and SOL-20 systems (also Intel 8080-based) would account for a further 8% of the market as would Southwest Technical Products' 6800 (Motorola 6800-based).

Time to market with a finished design and a fairly smooth manufacturing ramp assured Intel of high visibility even if its 8080 was likely inferior to Motorola's 6800. Motorola and Intel were for the most part pitching the 8080 and 6800 to industrial and business interests.

At this time, the entertainment aspect of microprocessor-based computers had not been realized. Home consoles like the Magnavox Odyssey and its spiritual successor, Atari's Pong arcade game, weren't part of the greater public consciousness, so Intel and Motorola looked to industrial and business terminal machine applications with their lucrative add-on and support contracts. The following year would begin to show what kind of potential lay in entertainment-based computing.

1977 proved a watershed year in the industry as Motorola's and Intel's virtual duopoly in personal computing came to an abrupt end. The processor teams of both vendors splintered and reorganized themselves. A faction within Motorola's design team led by Chuck Peddle lobbied Motorola to produce a cheaper 6800. Being priced at $300-$360 limited the applications it could be used for and Peddle saw opportunities in a cheaper alternative for entry-level computing and low-cost industrial applications.

Chuck Peddle, Bill Mensch, and five fellow engineers left Tom Bennett's Motorola 6800 design team and set up shop at MOS Technology, another chip company that had been severely mauled in the calculator price war initiated by Texas Instruments. They set about building their vision of a streamlined, reduced cost 6800 and announced the MCS6500 series 11 months after the 6800's launch. At $25, the 6502 not only undercut the 6800 (and 8080/8085), it also provided better yields than Motorola's product thanks to a less exacting manufacturing process.

Federico Faggin, chief architect of the 8080, had also parted ways with Intel soon after the chip was finalized. Faggin was increasingly frustrated with Andy Grove's micro-management of the company and he was livid that Intel project chief Les Vadász had filed (and received credit for) a patent on Faggin's invention of the buried contact, a vital step in the production of MOSFET transistors. It didn't help that Intel saw the microprocessor as little more than a component of a package which could be leveraged to sell more memory products.

Faggin and fellow Intel engineer Ralph Ungermann left in late 1974 to pursue their own vision with the founding of Zilog, a company named by Ungermann (Zilog being an acronym of sorts with "Z" being the last word in integrated logic). The company was quickly approached by Exxon, the world's largest oil company, with an investment offer.

For a 51% stake in the company, Exxon would pay $1.5 million. The two men quickly set about implementing improvements to the 8080 design and offered the redesigned chip to Intel as a subcontractor. Intel refused, believing that going into business with ex-employees would act as an incentive for other engineers to follow Faggin's lead.

Zilog's Z80 was chosen to power the Tandy (Radio Shack) TRS-80. (Photo: Maximum PC)

Zilog's first chip, the Z80, was designed in an astounding nine months by only three principal engineers (Faggin, Ungermann, and Masatoshi Shima, who had joined from Intel), a couple of development and systems engineers, and a small number of graphics artists for chip lithography layout. The design was complete by December 1975 and fabricated by Mostek, a recent start-up formed by Texas Instruments employees that would rival Intel in the early days of integrated circuit production and eclipse it in DRAM production.

Like the MOS Tech 6502, the Z80 was simpler to implement into a system than the Intel 8080 and cheaper than the 8080, its 8085 follow-up, and the Motorola 6800. This simplicity and lower cost led to the Z80 being chosen to power the Tandy (Radio Shack) TRS-80, whilst the 6502 began a long association with the hugely popular Commodore company (which would soon acquire MOS Technology) with the PET 2001 model.

A MOS 6502 processor. The four-digit date code indicates it was made in the 37th week of 1984. (HWHunpage)

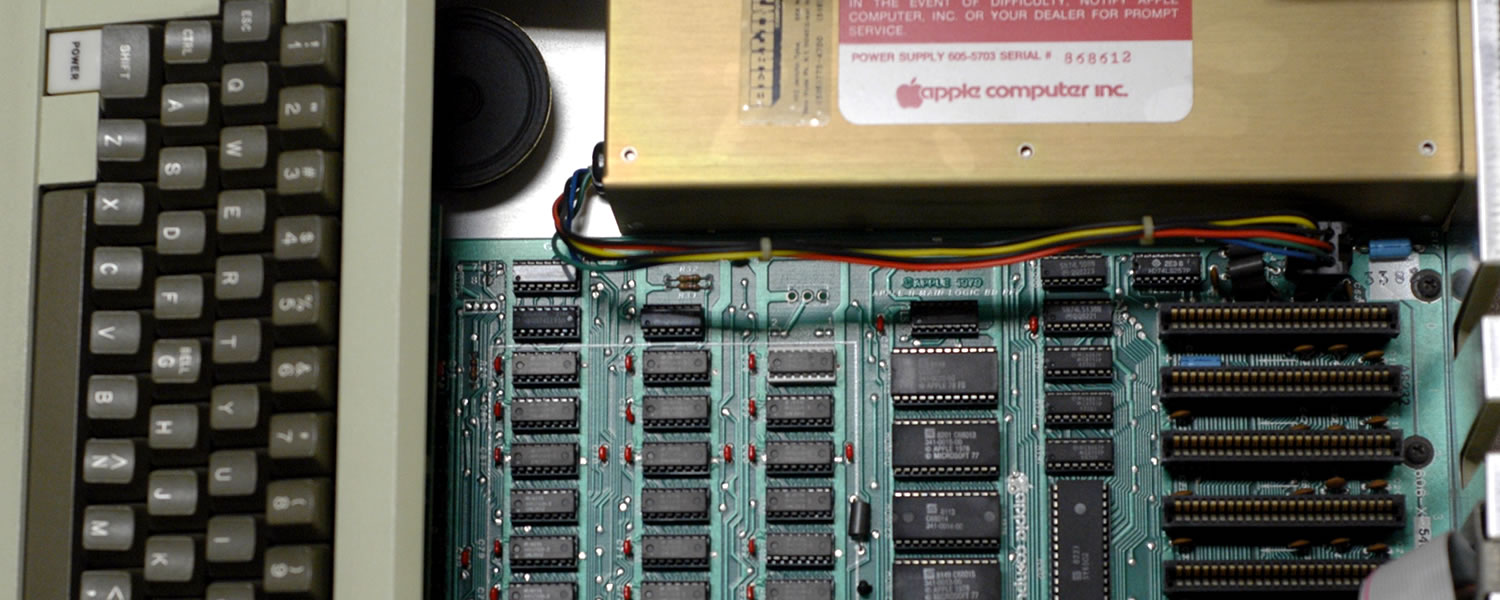

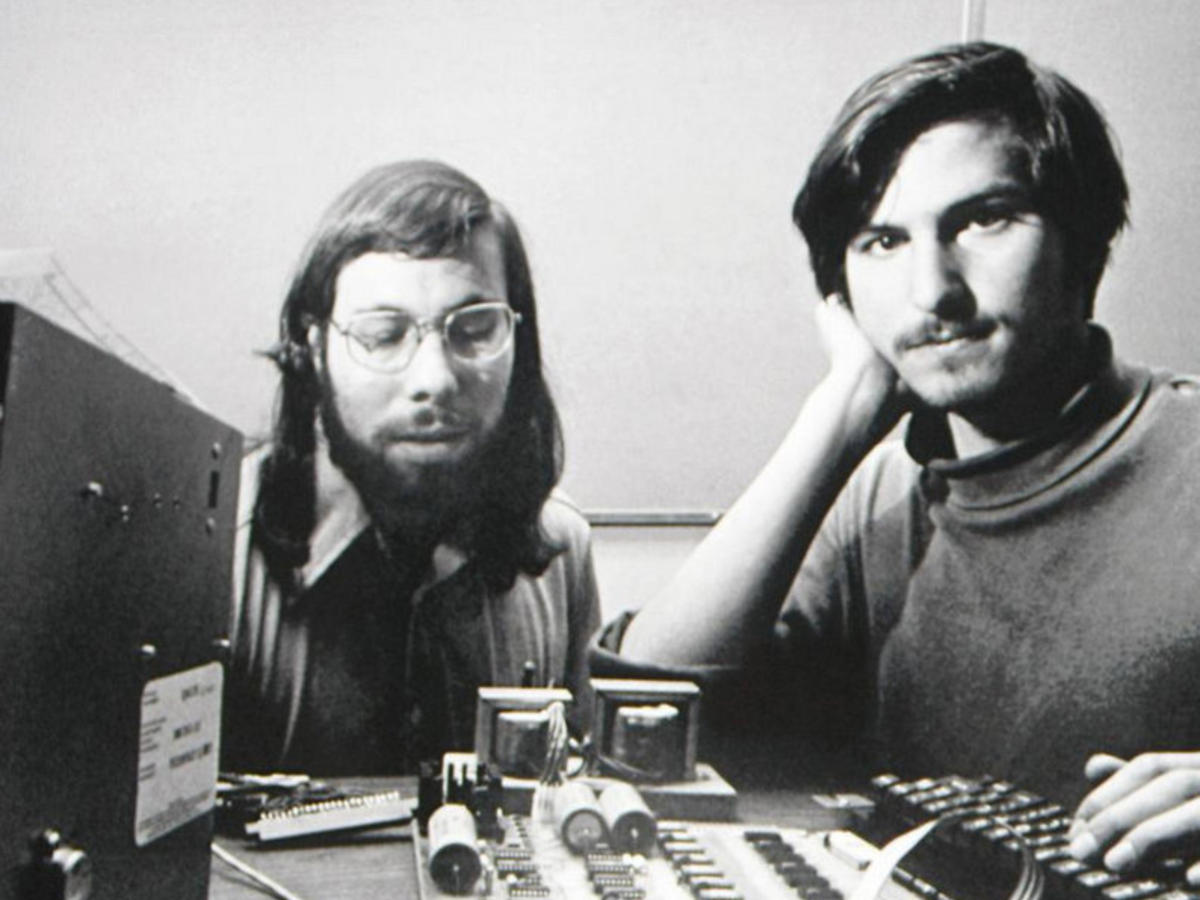

The MOS 6502 would also find a home in the Apple line of computers, another influential package. A passionate electronics enthusiast and a member of the Homebrew Computer Club, Steve Wozniak had urged his employer Hewlett-Packard to pursue development of a personal computer system. The archetypal hobbyist-hacker assembled the first Apple I mainboard in just a couple of months of spare time, yet Apple's company persona was to distinguish itself from the image of the hobbyist kit-building machines of the day. Wozniak would be the catalyst for Apple Computer's existence while Steve Jobs would mould the company, defining its strategy and image.

Steve Jobs and Steve Wozniak work on the original Apple I, powered by the MOS 6502 processor. While still technically a kit, since the buyer had to source an enclosure and peripherals, the mainboard was sold fully assembled.

If Steve Wozniak liked building computers, then Steve Jobs absolutely loved the idea of selling them. Lacking the technical electronics skill and inspiration of Wozniak, he possessed a keen business sense and saw opportunity in the new electronics market. Partnering with Wozniak (whom he met as a fellow employee at Hewlett-Packard) and Ronald Wayne (who would leave Apple a few weeks later), the team set about building 200 Apple I computers in the garage of Jobs' parents with the help of a couple of high school-aged assistants. All but 25 machines were sold in 10 months from the July 1976 introduction. While still technically a kit, since the buyer had to source an enclosure and peripherals, the mainboard was sold fully assembled.

Computer companies of the day were defined by engineers and their prowess. The public face of most companies reflected that they dealt with other companies or governmental engineers. Style had very little to do with the state of computer sales in the early to mid-1970s and was often frowned upon as needlessly frivolous. Steve Jobs made style a major selling point for Apple Computer, from the multi-hued Apple logo to advertising aimed at professionals in business, arts and sciences.

He also recognised that many of the computer companies of the day lacked a full infrastructure. More than a few required mail order purchase or were available through select chain stores but not stores dedicated to the machines. Most users had to be creative in sourcing (or writing) software, and troubleshooting problems with the hardware. Steve Jobs envisaged Apple supplying the whole package: sales, support, software, and peripherals, making the user's experience as seamless and professional as possible.

The Apple I proved to be a valuable proof of concept, but to take the next step the company needed capital. Wozniak and Jobs were introduced to A.C. "Mike" Markkula, a friend of Robert Noyce who had recently been fired from his marketing position at Intel on Andy Grove's instruction. Markkula supplied $91,000 of his own money and secured a $250,000 line of credit in exchange for a third of the company.

Wozniak turned his attention toward refining his design into the Apple II, which became the world's first financially successful personal computer. Once again utilizing MOS Tech's 6502 processor, the Apple II attained a much wider audience in part due to Wozniak's insistence on including multiple expansion slots in the design over Steve Jobs' objections.

The eight slots offered the option of using co-processors – expansion boards beginning with the SoftCard in March 1980 which supplied the Zilog Z80 and a copy of Microsoft's Disk BASIC for $349. Similar options followed for the Motorola MC6809 (OS-9), Intel 8088 (CP/M-86 and MS-DOS) and later, the Motorola 68008.

The expansion slots also allowed a wide range of connectivity and customization, from floppy disks to sound cards, serial controllers, drives, and additional memory. This range of options gave the Apple II an unrivalled advantage in the business sector where the personal computer was just gaining traction as a viable alternative to a time-shared mainframe or minicomputer. The level of customization also provided many people with their first experience or at least their first sight of a personal computer as schools, medical and research facilities, government, and business all gravitated to the machine.

Apple II populated with some common expansion boards. From left to right: 16 kByte RAM "language card" expansion, 80 column board, Z80 board and dual floppy controller board. (The HP 9845 Project)

Of the 48,000 personal computers sold worldwide in 1977, the Apple II was easily the most recognizable and coveted thanks to extensive marketing that overshadowed more established brands from Vector, Ontel, Polymorphic, Heathkit, IMSAI, MITS, and Cromemco.

The financial success of the Apple II and its quick elevation into the public consciousness weren't lost on the established powerhouses in the semiconductor world. Once Apple became established as a brand and the all-important software range increased, smaller companies were eager to ride Apple's coattails, notably the Franklin Computer Corporation, whose blatant cloning of the Apple II under the ACE product line kept courtrooms occupied for some years.

Other companies would look to emulate but not outright copy Apple as the number of personal computers sold in 1978 jumped to 200,000, representing half a billion dollars in sales. Of that total, Apple II sales accounted for $30 million from just 20,000 units sold, compared to Tandy's TRS-80, which required the sale of 100,000 units to generate $105 million, and Commodore's PET 2001, which hit $20 million in sales from 25,000 computers shipped.
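The contrast in those figures is easier to see as revenue per machine. The short Python sketch below simply divides the sales totals quoted above by the unit counts; the function and variable names are illustrative, and the results are average revenue per unit rather than actual list prices.

```python
# 1978 sales figures as quoted in the paragraph above.
SALES_1978 = {
    "Apple II": {"revenue_usd": 30_000_000, "units": 20_000},
    "Tandy TRS-80": {"revenue_usd": 105_000_000, "units": 100_000},
    "Commodore PET 2001": {"revenue_usd": 20_000_000, "units": 25_000},
}


def revenue_per_unit(revenue_usd: int, units: int) -> float:
    """Average revenue generated by each machine sold."""
    return revenue_usd / units


def per_unit_table(sales: dict) -> dict:
    """Map each machine name to its average revenue per unit."""
    return {name: revenue_per_unit(**row) for name, row in sales.items()}


if __name__ == "__main__":
    for name, value in per_unit_table(SALES_1978).items():
        print(f"{name}: ${value:,.0f} per unit")
```

On these numbers each Apple II brought in roughly $1,500, against about $1,050 for a TRS-80 and $800 for a PET 2001, which is why Apple's revenue looked so strong relative to its unit volume.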

A large part of Apple's success in the business market, a market not originally foreseen as the Apple II's primary focus, stemmed from its close association with the hugely influential VisiCalc spreadsheet software, which was initially compatible only with the Apple machine. VisiCalc's appeal across the entire business spectrum was such that it alone justified the purchase of the computers needed to run it.

For consumers, the next few years mostly brought incremental advances in technology as the infrastructure required for the industry strengthened. The 8-bit microprocessor grew in ubiquity as processors, support and memory chips became cheaper to the point where they were a commodity product turning up in a variety of low cost applications. With the expanding range of personal computers came the deluge of software that sustained further hardware sales.

Before the revolution of 8-bit machines came into stride, a worldwide recession arrived and the semiconductor business suffered a downturn in sales and average selling prices in 1974. Larger companies weathered the lean year relatively unscathed but smaller companies just finding their footing in the business suffered, resulting in a vast number of reorganizations, buyouts and new partnerships.

One company seriously affected was AMD. As mainly a second source for chips rapidly approaching commodity pricing, AMD saw its stock price fall that year to $1.50 per share, a tenth of its initial public offering price, while Intel threatened to sue for IP infringement over AMD's line of EPROM chips. AMD desperately needed a microprocessor to sell for its continued existence and to forestall a lawsuit it was sure to lose.

For its part, Intel desired AMD's newly designed floating point unit (FPU) chip, a math co-processor that could act in concert with the microprocessor to calculate arithmetic functions. This FPU also tied into a larger picture of market dominance as Intel was locked in a four-way marketing battle with Zilog, Motorola, and MOS Tech. Having AMD as a second source for the 8085 and its successors gave Intel's design greater market penetration, and just as importantly, AMD wouldn't be selling any of Intel's competitors' processors.

AMD became an authorized second-source of Intel 8085 microprocessors. (Photo: CryptoMuseum)

An agreement was reached after a few months of posturing and bargaining from both sides. Intel would receive royalties and penalty payments from AMD for the designs they were already selling and access to licensing AMD designs on a case by case basis. AMD would receive a license for the 8085 including full access to the name, manufacturing masks, and the right to market the AMD chip as fully "Intel compatible".

To seal the deal, AMD was offered Intel microcode, as it was made clear to AMD representatives that future designs would probably include microprogramming. Not particularly relevant at the time, this clause in the contract was to have major ramifications within a few years. AMD's continued survival now looked a lot brighter and was fully assured the following year with a joint venture with Siemens after the German company had failed in a bid to acquire Intel in an effort to bridge the growing gulf between U.S. and European IC prowess.

From the consumer's point of view, the personal computer landscape remained rather static as the vendors tentatively embellished their product line-ups. 8-bit computing became widespread as software applications compatible with the first wave of machines hindered 16-bit uptake. By the time the first mainstream 16-bit processors were ready for widespread sale, available software applications for 8-bit machines numbered over 5,000 for use with the CP/M operating system, with a further 3,000 tailored for the Apple II.

As the microprocessor continued its refinement and grew in complexity, the support structure also blossomed. Memory density increased as process improvements allowed for higher transistor counts, while floppy disk and hard drive density and refinement followed suit. Many aspects of modern personal computing we now take for granted were developed first by SRI International's Augmentation Research Center (ARC) under the stewardship of Douglas Engelbart, and later by Xerox's Palo Alto Research Center (PARC).

Engelbart's work on the development of bitmapping led directly to the modern interactive graphical user interface (GUI) as we know it. Displaying information visually prior to this usually meant that only the last line of data on a screen was "active". The beginning of a new line consigned the previous one to permanent (or semi-permanent if using a line editor at a later juncture) storage, which was little removed from input by a sequence of punch cards.

Engelbart's approach was to make the whole screen interactive. With this came a need for pointer navigation around the screenspace, and the mouse was developed to achieve this. These technologies, as well as copy and paste, hypermedia (including hypertext), screen windows, real-time editing, video conferencing, and dynamic file linking were all showcased in the "Mother of All Demos" on December 9, 1968.

PARC refined and expanded on Engelbart's work. A company built on paper, Xerox realized it was quickly being marginalized by the computer and could imagine a future office being paperless. The company set up PARC to research possible alternatives to Xerox's main business should it begin to suffer.

The Computer Science Laboratory (CSL) at PARC was under the astute leadership of Bob Taylor, who had risen in stature as a director at ARPA (now DARPA) and was instrumental in the creation of the ARPANET, forerunner of the modern internet. The CSL was primarily a pure (or basic) research program, which is to say it researched for the sake of a greater understanding rather than research and development with the end goal being a marketable product.

In the first few years following its creation in 1970, PARC amassed a prodigious list of achievements: refinement of the GUI (something that would make a lasting impression on a young Steve Jobs when he visited PARC), the invention of the laser printer, and the creation of the world's first workstation computer, the Alto.

Often remembered as an expensive failure, the Alto was commonly viewed as being over-engineered. However, given the workloads it was designed for, the level of features it had, its programming language and a raw component cost that topped $10,000 per machine in 1973, the Alto represents an analogue of the expensive workstations used today. The infrastructure associated with the system and its probable $25,000 to $30,000 retail price tag would have put it far beyond the reach of any home user had it been intended for commercial release.

The Xerox Alto was the first desktop computer with a graphical user interface, among many other innovations.

The Alto combined PARC's research into a single machine. These machines were also networked within PARC, one per researcher, and to the newly developed laser printers. One by-product of this networking was that the Altos and printers (which incorporated their own terminals) became so fast that the connections between them were the limiting factor in workload throughput. PARC's solution was to invent and develop Ethernet to connect the system, thereby developing the first high-speed computer network.

A 600 dpi page could now be transmitted across the 2.67 Mbps network in a blistering twelve seconds, down from the previous 15-minute requirement. Not content with this speedup, PARC's scientists immediately set about trying to adapt Ethernet to carry 10 Mbps, far beyond any reasonable data traffic expectation for the near future.
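The twelve-second figure can be sanity-checked with some simple arithmetic. The Python sketch below is only an estimate under assumptions that are not stated in the article: a US letter page (8.5 by 11 inches), a one-bit-per-pixel bitmap, no compression, and no protocol overhead. The function names are illustrative.

```python
def page_bits(dpi: int, width_in: float = 8.5, height_in: float = 11.0,
              bits_per_pixel: int = 1) -> int:
    """Size of an uncompressed page bitmap in bits (assumed page geometry)."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    return width_px * height_px * bits_per_pixel


def transfer_seconds(bits: int, link_mbps: float) -> float:
    """Ideal transfer time over a link of the given speed, ignoring overhead."""
    return bits / (link_mbps * 1_000_000)


if __name__ == "__main__":
    bits = page_bits(600)
    for speed_mbps in (2.67, 10.0):
        seconds = transfer_seconds(bits, speed_mbps)
        print(f"{speed_mbps} Mbps: {seconds:.1f} s for {bits:,} bits")
```

Under those assumptions a 600 dpi page is about 33.7 million bits, which works out to roughly 12.6 seconds at 2.67 Mbps, in line with the figure quoted above, and a little over 3 seconds at 10 Mbps.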

Many products from this creative free-for-all didn't benefit home users for years to come and benefitted the company less than they should have due to Xerox management being firmly entrenched in the copier business with little understanding or unified vision of the electronics revolution underway. While Xerox built the Alto as an in-house project and began development of the follow-on Xerox Star at $16,000 per bare machine, Tandy would be selling 10,000 TRS-80s or more a month for $599 each. Even the more substantial Commodore PET could be had for $1,298.

Towards the end of 1978, Apple, Radio Shack, and Commodore would have a new competitor when Atari announced its 400 and 800 models. While the new systems wouldn't start shipping until October the following year, Atari already had a sizeable presence in the home entertainment market with its popular Atari 2600 console.

The gaming potential of the personal computer was about to be realized as companies such as Avalon Hill (Planet Miners, Nukewar, North Atlantic Convoy Raider) and Automated Simulations (Starfleet Orion) prepared a range of games for the new market that grew alongside the proliferation of arcade and console-based gaming in the late 1970s. Bruce Artwick's Flight Simulator would become the first example of the concerted strategy to differentiate PC gaming from console gaming when Microsoft licensed the game to showcase the IBM PC and MS-DOS.

The microprocessor industry and personal computing in particular was growing steadily, if not spectacularly, leading into the 1980s. The seeds sown almost 20 years before blossomed at the turn of the new decade as the industry reached a major point of inflection.

The primary focus of semiconductor companies (almost entirely U.S. derived) remained on high profit DRAM circuits. The microprocessor was mostly seen as part of a range of chips that could be sold as a multi-chip package. Intel, and more recently Mostek, were built on the profits of dynamic memory. That changed as Japanese semiconductor companies with little regard for U.S. patents and copyrights received generous tax breaks, low interest loans, and institutionalized protectionism from a government desperately trying to keep the Japanese computer industry from falling into the abyss.

IBM's growth in Japan had resulted in the Japanese government forming the Japan Electronic Computer Company (JECC) in 1961 to buy Japanese electronics at generous prices to keep the local industry afloat. JECC wasn't a company as such, but an umbrella corporation that organized independent Japanese companies to minimize competition and maximize individual market options.

IBM's System/360 and 370 mainframe leasing business caused further problems for home-grown Japanese competition in the mid-1960s. Local companies had neither the expertise nor the infrastructure to cope with Big Blue. In response, the Japanese government restructured the industry, including allocating markets to individual companies or combines, as well as limiting U.S. company growth and opportunity in Japan. These companies also controlled the entire supply chain from manufacturing to sales in their chosen market segment to aid in efficiency and increase opportunities in time-to-market.

Demand for new integrated circuits, particularly in the U.S., had been growing at an average of 16% a year through the mid to late 1970s and Japan's government along with electronics companies saw ICs and particularly the lucrative DRAM market as an ideal opportunity to build their industry. Backed by $1.6 billion in government subsidies, tax credits, and low interest loans as well as large private investments, Japanese companies embarked on building state of the art foundries for IC manufacturing. These same Japanese companies also needed increased imports of U.S. made DRAM for their consumer and business products while their own plants were being built.

U.S. companies expanded their manufacturing base to cope with larger exports and were left with massive overcapacity once the Japanese chips from Hitachi, NEC, Fujitsu, and Toshiba arrived. The result was a 90% fall in DRAM prices within one year and by March 1982 a 64K DRAM chip that had sold for $100 in 1980 was now $5. The U.S. memory business lay in ruin.

The Intel 8088 would go on to power the IBM PC and turn the platform into an industry standard. (Grinnell College)

For Intel, its primary business ceased being memory-based just as its 16-bit 8086 and 8-bit/16-bit 8088 processors reached production. The future was now the microprocessor and microcontroller. As luck would have it, IBM had also noted the rise of the personal computer industry and while the new opportunity wasn't compelling enough to divert the company from its core business, it did represent a market ripe for profit and a new customer base. An unlikely partnership was about to change the face of personal computing.

This article is the second installment in a series of five. If you enjoyed this, read on as we dive into the defining arrival of the IBM PC 5150 and Intel's eventual cementing of the x86 platform as the industry standard. If you feel like reading more about the history of computing, check out our feature on iconic PC hardware.