1996 and Beyond: New Frontiers

Computing Goes Mainstream, Mobile, Ubiquitous

The microprocessor made personal computing possible by opening the door to more affordable machines with a smaller footprint. The 1970s supplied the hardware base, the 80s introduced economies of scale, while the 90s expanded the range of devices and accessible user interfaces.

The new millennium would bring a closer relationship between people and computers. More portable, customizable devices became the conduit that served the basic human need to connect. It's no surprise that the computer transitioned from productivity tool to indispensable companion as connectivity proliferated.

As the late 1990s drew to a close, a hierarchy had been established in the PC world. OEMs who previously deposed IBM as market leader found that their influence was now curtailed by Intel. With Intel's advertising subsidy for the "Intel Inside" campaign, OEMs had largely lost their own individuality in the marketplace.

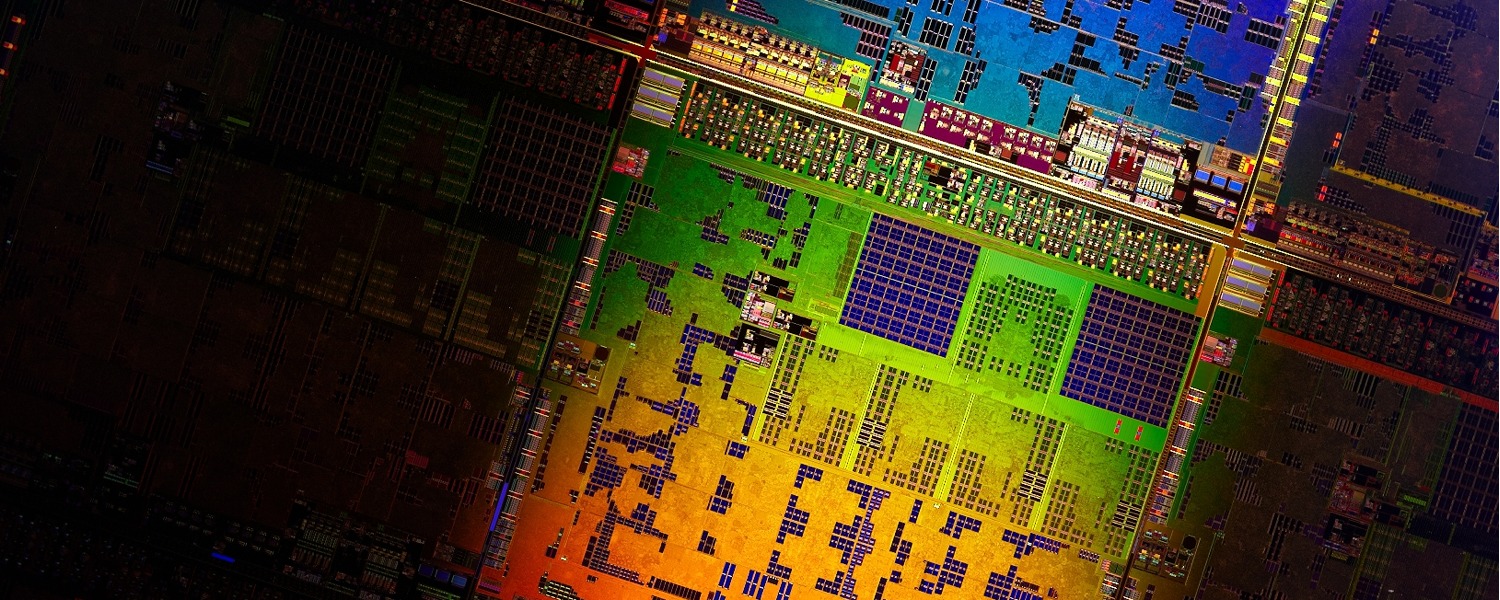

The Pentium III had a lead role in the 'Gigahertz War' against AMD's Athlon processors between 1999 and 2000. Ultimately it was AMD who crossed the finish line first, shipping the 1GHz Athlon days before Intel could launch theirs (Photo: Wiki Commons)

Intel, in turn, had been usurped by Microsoft as the industry leader after backing off its intention to increase multimedia efficiency by pursuing NSP (Native Signal Processing) software. Microsoft explained to OEMs that the company would not support NSP with Windows operating systems, as was the case with the then current MS-DOS and Windows 95.

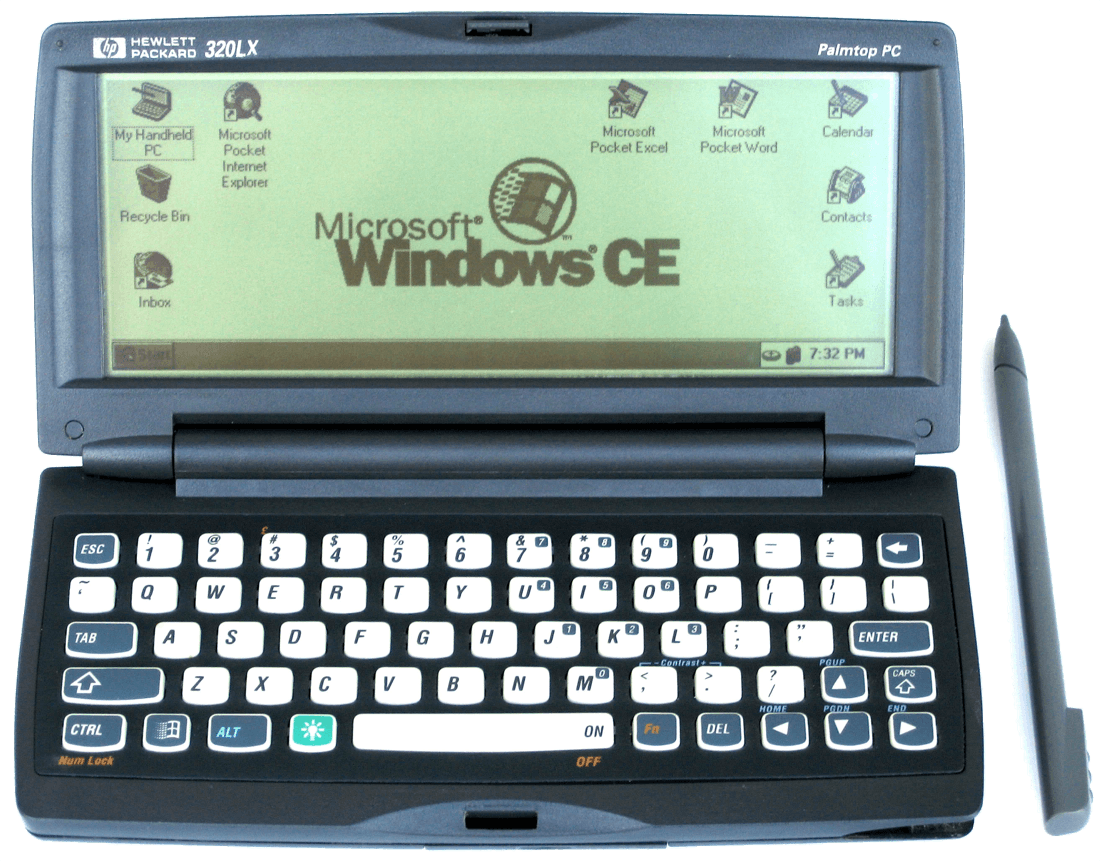

Intel's attempt to move onto Microsoft's software turf had almost certainly been at least partly motivated by Microsoft's increasing influence in the industry and the arrival of the Windows CE operating system, which would decrease Microsoft's reliance on Intel's x86 ecosystem with support for RISC-based processors.

Intel's NSP initiative marked only one facet of the company's strategy to maintain its position in the industry. A more dramatic change in architectural focus would arrive in the shape of the P7 architecture, renamed Merced in January 1996. Planned to arrive in two stages, the first would produce a consumer 64-bit processor with full 32-bit compatibility while the second more radical stage would be a pure 64-bit design requiring 64-bit software.

The HP 300LX, released in 1997, was one of the first handheld PCs designed to run the Windows CE 1.0 operating system from Microsoft. It was powered by a 44 MHz Hitachi SH3 (Photo: evanpap)

The hurdles associated with both hardware execution and a viable software ecosystem led Intel to focus its efforts on competing with RISC processors in the lucrative enterprise market with the Intel Architecture 64-bit (IA-64) based Itanium in partnership with Hewlett-Packard. The Itanium's dismal failure – stemming from Intel's overly optimistic predictions for the VLIW architecture and subsequent sales – provided a sobering realization that throwing prodigious R&D resources into a bad idea just makes for an expensive bad idea. Financially, Itanium's losses were ameliorated by Intel moving x86 into the professional markets with the Pentium Pro (and later Xeon brand) from late 1996, but Itanium remains an object lesson in hubris.

In contrast, AMD's transition from second source vendor to independent x86 design and manufacture moved from success to success. The K5 and following K6 architectures successfully navigated AMD away from Intel dependency, rapidly integrating its own IP into both processors and motherboards as Intel moved to the P6 architecture with its Slot 1 and 440 chipset mainboards (both denied to AMD under the revised cross-license agreement).

AMD first adapted the existing Socket 7 into the Super Socket 7 with a licensed copy of VIA's Apollo VP2/97 chipset, which provided AGP support to make it more competitive with Intel's offerings. AMD followed that with its first homegrown chipset (the "Irongate" AMD 750) and Slot A motherboard for the product that would spark real competition with Intel. So much so that Intel initially pressured AMD motherboard makers to downplay AMD products by restricting supply of 440BX chipsets to board makers who stepped out of line.

The K6 and subsequent K6-II and K6-III had increased AMD's x86 market share by 2% a year following their introduction. Gains in the budget market were augmented with AMD's first indigenous mobile line with the K6-II-P and K6-III-P variants. By early 2000, the much refined mobile K6-II+ and K6-III+ (Plus) series were added to the line-up, featuring lower voltage requirements and higher clock speeds thanks to a process shrink, as well as AMD's new PowerNow! dynamic clock adjustment technology to complement the 3DNow! instructions introduced with the K6-II to boost floating point calculations.

Aggressive pricing would be largely offset by Intel's quick expansion into the server market, and what AMD needed was a flagship product that moved the company out of Intel's shadow. The company delivered in style with its K7 Athlon.

The K7 traced its origins to the Digital Equipment Corporation, whose Alpha RISC processor architecture seemed to be a product in search of a company that could realize its potential. As DEC mismanaged itself out of existence, the Alpha 21064 and 21164 co-architect Derrick "Dirk" Meyer moved to AMD as the K7's design chief, with the final design owing much to the Alpha's development including the internal logic and EV6 system bus.

As the K6 architecture continued its release schedule, the K7's debut at the Microprocessor Forum in San Jose on October 13, 1998 rightfully gained the lion's share of the attention. Clock speeds beginning at 500MHz already eclipsed the fastest Pentium II running at 450MHz, with the promise of 700MHz in the near future thanks to the transition to copper interconnects (from the industry standard aluminum) used in the 180nm process at AMD's new Fab 30 in Dresden, Germany.

The Athlon K7 would ship in June 1999 at 500, 550 and 600MHz to much critical acclaim. Whereas the launch of Intel's Katmai Pentium III four months earlier was tainted by problems with its flagship 600MHz model, the K7's debut was flawless and quickly followed up by the promised Fab 30 chips of 650 and 700MHz along with new details about the HyperTransport system data bus developed in part by another DEC alumnus, Jim Keller.

The Athlon's arrival signaled the opening salvos in what was coined 'The Gigahertz War'. Less about any material gains than the associated marketing opportunities, the battle between AMD and Intel allied with new chipsets provided a spark for a new wave of enthusiasts. Incremental advances in October 1999 from the 700MHz Athlon to the 733MHz Coppermine Pentium III gave way to November's Athlon 750MHz and Intel's 800MHz model in December.

AMD would reach the 1GHz mark on January 6, 2000 when Compaq demonstrated its Presario desktop incorporating an Athlon processor cooled by KryoTech's Super G phase change cooler at the Winter Consumer Electronics Show in Las Vegas.

This coup was followed by the launch of AMD's Athlon 850MHz in February and 1000MHz on March 6, two days before Intel debuted its own 1GHz Pentium III. Not content with this state of affairs, Intel noted that its part had begun shipping a week earlier, to which AMD replied that its own Athlon 1000 had begun shipping in the last week of February – a claim easily verified since Gateway was in the process of shipping the first customer orders.

This headlong pursuit of core speed would continue largely unabated for the next two years until the core speed advantage of Intel's NetBurst architecture moved AMD to place more reliance on its rated speed instead of actual core frequency. The race was not without casualties. While processor prices tumbled as a constant flow of new models flooded the market, OEM system prices began climbing – particularly for those sporting the 1GHz models, as both AMD and Intel had applied small voltage increases to maintain stability, necessitating more robust power supplies and cooling.

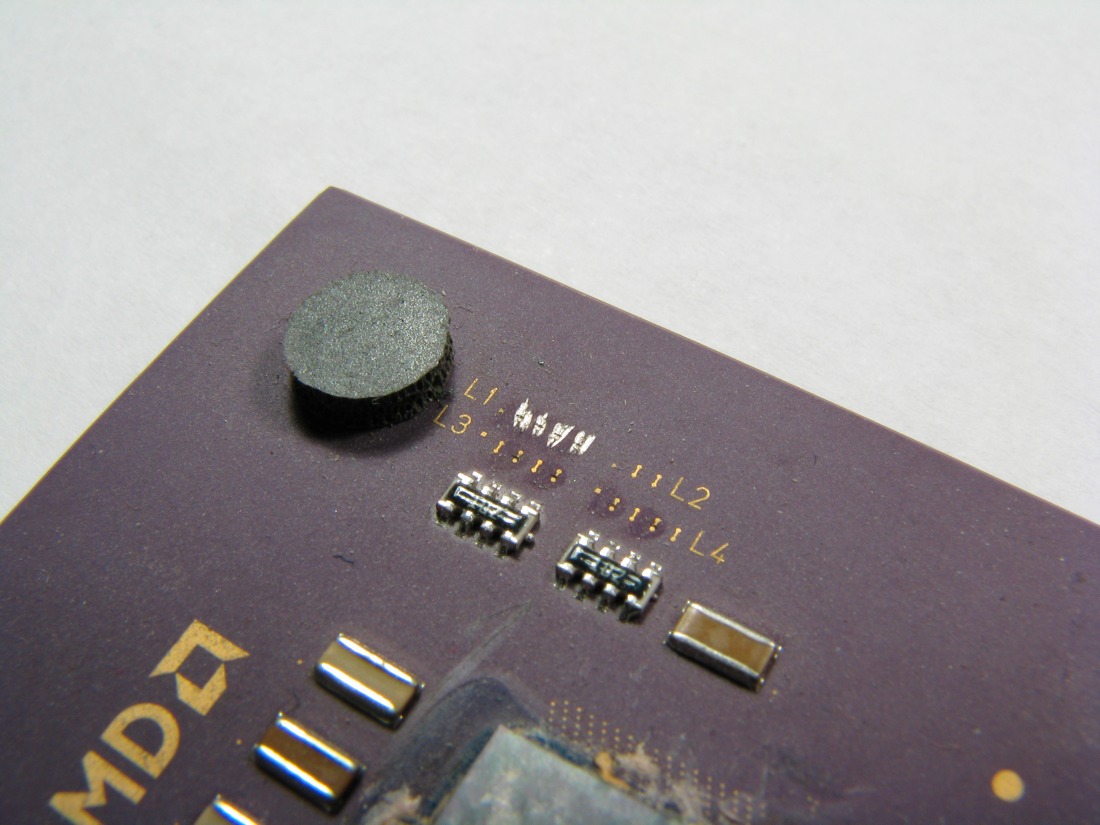

AMD's Athlon would also be held back initially by an off-die cache running slower than the CPU, causing a distinct performance disadvantage against the Coppermine-based Pentium III, and by teething troubles with AMD's Irongate chipset and Viper southbridge. VIA's excellent alternative, the KX133 chipset with its AGP 4x bus and 133MHz memory support, helped immensely with the latter, while revising the Athlon to include an on-die full speed level 2 cache enabled it to truly show its potential as the Thunderbird.

AMD Athlon CPU and "the pencil trick": using pencil lead to reconnect the L1 bridges, allowing the processor to be set at any clock frequency. (Wikipedia)

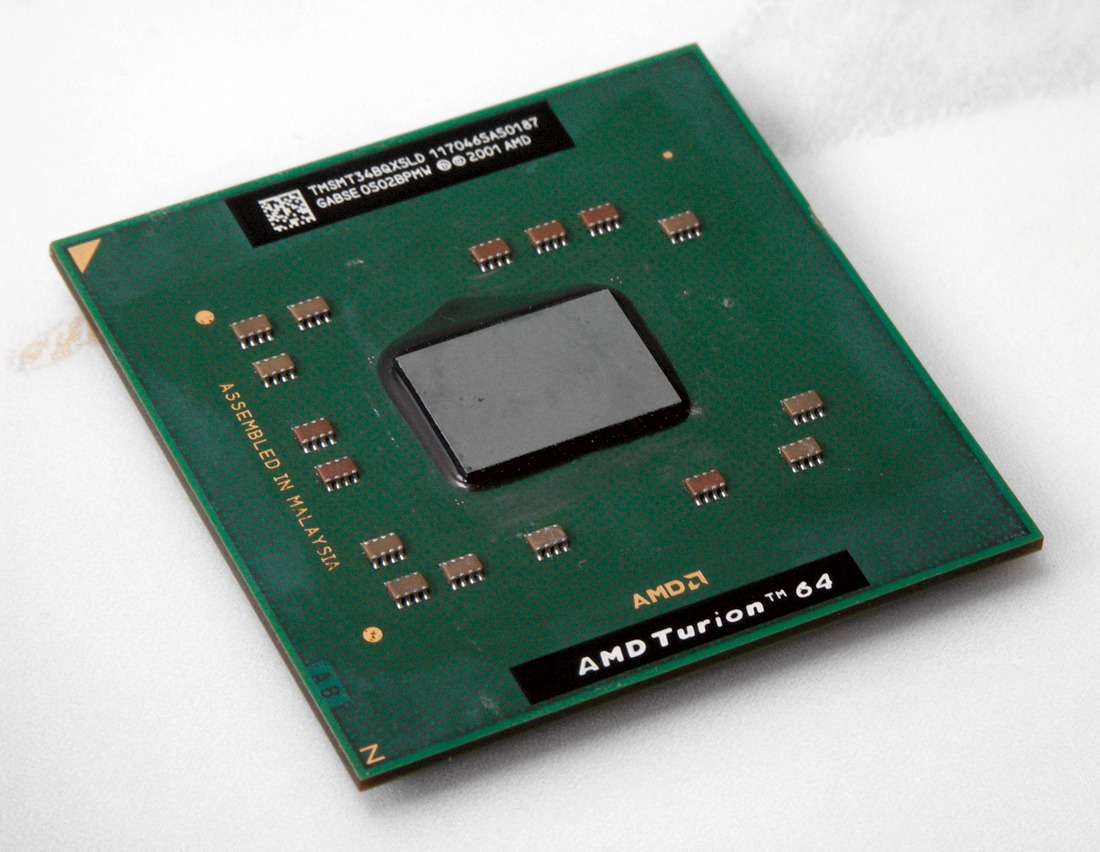

With AMD now alongside Intel in the performance segment, the company introduced its cut-down Duron line to compete with Intel's Celeron, along with chipsets, southbridges and mobile processors aimed at the growing notebook market where Intel's presence was all-pervasive in flagship products from Dell (Inspiron), Toshiba (Tecra), Sony (Vaio), Fujitsu (Lifebook), and IBM (ThinkPad).

Within two years of the K7's introduction AMD would claim 15% of the notebook processor market – a tenth of a percent more than its desktop market share at the same point. AMD's sales fluctuated wildly between 2000 and 2004 as shipping was tied heavily to the manufacturing schedule of AMD's Dresden foundry.

AMD management had been unprepared for the level of success gained by the company's design, resulting in shortages that severely restricted the brand's growth and market share during a critical window where AMD was beginning to cause some anxiety within Intel. The supply constraints would also impact company partners, notably Hewlett-Packard, while strengthening Dell, a principal Intel OEM who had been selling systems at a prodigious rate and collecting payments for carrying Intel-only platforms since shortly after the mid-2003 launch of AMD's K8 "SledgeHammer" Opteron, a product which threatened to derail Intel's lucrative Xeon server market.

A share of this failure to fully capitalize on the K7 and following K8 architectures arose through the mindset of AMD CEO Jerry Sanders. Like his contemporaries at Intel, Sanders was a traditionalist from an era where a semiconductor company both designed and fabricated its own products. The rise of the fabless companies drew disdain and prompted his "real men have fabs" outburst against newly formed Cyrix.

The stance was softened as Sanders stepped aside for Hector Ruiz to assume the role of CEO, with an outsourcing contract going to Chartered Semiconductor in November 2004 that began producing chips in June 2006, a couple of months after AMD's own Dresden Fab 36 expansion began shipping processors. Such was the departure from the traditional semiconductor model that companies who design and manufacture their own chips became increasingly rare with foundry costs escalating and a whole mobile-centric industry built upon buying off-the-shelf ARM processor designs while contracting out chip production.

AMD's Fab 36 in Dresden, now part of spinoff company GlobalFoundries.

Just as Intel had coveted the high margin server market, AMD also looked to the sector as a possible means of expansion where its presence was basically non-existent. Whereas Intel's strategy was to draw a line under x86 and pursue a new architecture devoid of competition using its own IP, AMD's answer was more conventional. Both companies looked to 64-bit computing as the future; Intel because it perceived a strong possibility that RISC architectures would in future outperform their x86 CISC designs, and AMD because Intel had the influence as an industry leader to ensure that 64-bit computing became a standard.

With x86 having moved from 16-bit to 32-bit, the next logical step would be to add 64-bit functionality with backwards compatibility for the bulk of existing software to ensure a smooth transition without breaking the current ecosystem. This approach was very much Intel's fallback position rather than its preferred option.

Eager to lead in processor design on multiple fronts, Intel was attracted to steering away from the quagmire of x86 licenses and IP ownership, and pursuing a 64-bit x86 product line also raised the issue of Intel's own products working against IA-64's acceptance. AMD had no such issues.

Once the decision had been made to incorporate a 64-bit extension into the x86 framework rather than work on an existing architecture from DEC (Alpha), Sun (SPARC), or Motorola/IBM (PowerPC), what remained was to forge software partnerships in bringing the instruction set to realization since AMD was without the luxury of Intel's sizeable in-house software development teams. This situation was to work in AMD's favor as the collaboration fostered strong ties between AMD and software developers, aiding in industry acceptance of what would become AMD64.

Working closely with K8 project chief Fred Weber and Jim Keller would be David Cutler and Robert Short at Microsoft, who along with Dirk Meyer (AMD's Senior Vice President of the Computation Products Group) had strong working relationships from their time at DEC. AMD would also consult with open source groups including SUSE who would provide the compiler. The collaborative effort allowed for a swift development and publishing of the AMD64 ISA and forced Intel to provide a competing solution.

Six months after Fred Weber's presentation of AMD's new K8 architecture at the Microprocessor Forum in November 1999, Intel began working on Yamhill (later Clackamas) which would eventuate as EM64T and later Intel64. With AMD leading the way and Intel's need to make EM64T compatible with AMD64, the Sunnyvale company gained validation in the wider software community – if any more were needed with Microsoft aboard.

The arrival of the K8 as the server-oriented Opteron in April 2003, followed by the desktop and mobile Athlon 64 in September, marked a period of sustained growth and heightened market presence for AMD. Server market share that had previously not existed rose to 22.9% of the x86 market at Intel's expense by early 2006, prompting aggressive Intel price cuts. Gains in the consumer market were equally impressive, with AMD's share rising from 15.8% in Q3 2003 to an all-time high of 25.3% in Q1 2006 when the golden age for the company came to an abrupt halt.

AMD would suffer a number of reverses from which the company has yet to recover. After being snubbed by Dell for a number of years, the companies entered into business together for the first time in 2006 with Dell receiving allocation preference over other OEMs. At the time, Dell was the largest system builder in the world, shipping 31.4 million systems in 2004 and almost 40 million in 2006, but was locked in a fierce battle with Hewlett-Packard for market dominance.

Dell was losing in part to a concerted marketing campaign mounted by HP, but its position was also hit by the sale of systems at a loss, falling sales in the high-margin business sector, poor management, run-ins with the SEC and a recall of over four million laptop batteries. By the time the company turned its fortunes around, it had competition not just from new market leader HP, but from a growing list of OEMs riding the popularity wave of netbooks and light notebooks.

2006 would also see a new competitor from Intel – one that pushed AMD back to being a peripheral player once again. At the August 2005 Intel Developer Forum, CEO Paul Otellini publicly acknowledged the failings of NetBurst with the rising power consumption and heat generation of the then current Pentium D. Once Intel realized that its high speed, long pipeline NetBurst architecture required increasing amounts of power as clock rates rose, the company turned to the design team behind its shelved Timna system-on-a-chip (SoC) and set it on a course for a low-power processor that borrowed from the earlier P6 Pentium Pro.

The subsequent Centrino and Pentium M led directly to the Core architecture and a lineage that leads to the present day model lineup. For its part, AMD continued to tweak the K8 while the following 10h architecture offset a lack of evolution with vigorous price cutting to maintain market share until its plan to integrate graphics and processor architectures could bear fruit.

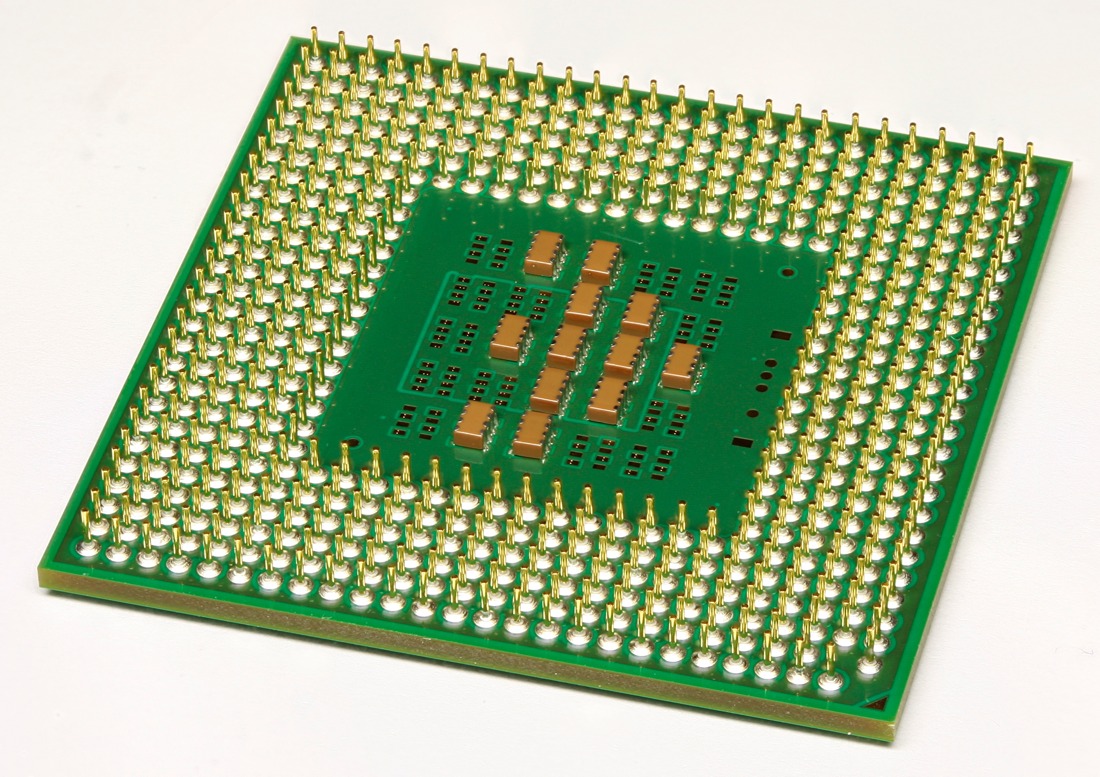

Bottom side of an Intel Pentium M 1.4. The Pentium M represented a new and radical departure for Intel, optimizing for power efficiency at a time when laptop computer use was growing fast.

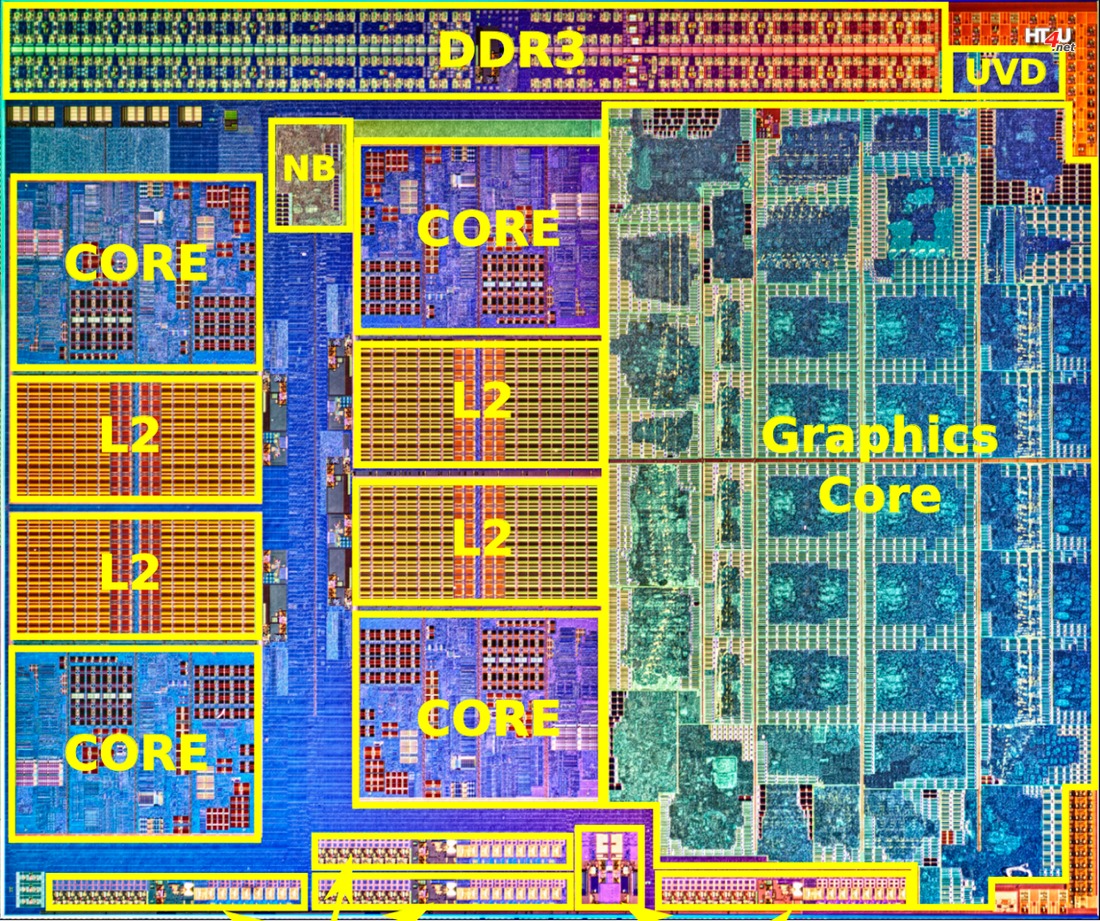

Severely constrained by the debt burden from acquiring an overpriced ATI in 2006, AMD was faced not only with Intel recovering with a competent architecture, but also a computing market which was beginning to embrace mobile systems for which the Intel architectures would be better suited. An early decision to fight for the hard-won server market resulted in AMD choosing to design high-speed multi-core processors for the high-end segment and deciding to repurpose these core modules in conjunction with the newly acquired ATI graphics IP.

The Fusion program was officially unveiled on the day AMD completed its acquisition of ATI on October 25, 2006. Details of the actual architectural makeup and Bulldozer followed in July 2007 with roadmaps for both to be introduced in 2009.

The economic realities of debt servicing and poor sales that dropped AMD's market share back to pre-Athlon 64 days would prompt a major rethink a year later when the company pushed its roadmaps out to 2011. At the same time, AMD decided against pursuing a smartphone processor, prompting it to sell its mobile graphics IP to Qualcomm (later emerging as the Adreno GPU in Qualcomm's ARM-based Snapdragon SoCs).

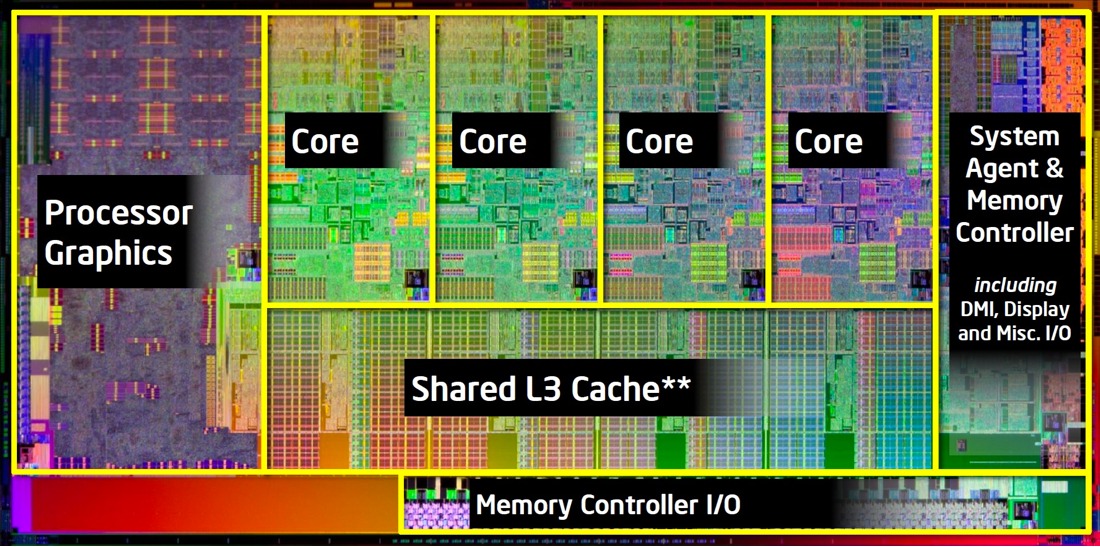

Both Intel and AMD targeted integrated graphics as a key strategy in their future development as a natural extension of the ongoing practice of reducing the number of discrete chips required for any platform. AMD's Fusion announcement was followed two months later by Intel's own (intended) plan to move its IGP to the Nehalem CPU. Integrating graphics proved just as difficult for Intel's much larger R&D team as the first Westmere-based Clarkdale chips in January 2010 would have an "on package" IGP, not fully integrated in the CPU die.

In the end, both companies would debut their IGP designs within days of each other in January 2011, with AMD's low-power Brazos SoC quickly followed by Intel's mainstream Sandy Bridge architecture. The intervening years have largely seen a continuation of a trend where Intel gradually introduces small incremental performance advances, paced against its own product and process node cadence, safely reaping the maximum financial return for the time being.

Llano became AMD's first performance oriented Fusion microprocessor, intended for the mainstream notebook and desktop market. The chip was criticized for its poor CPU performance but praised for its stronger GPU performance.

For its part, AMD finally released the Bulldozer architecture in September 2011, a debut delayed long enough that it fared poorly on most performance metrics thanks to Intel's foundry process execution and remorseless architecture/die shrink (Tick-Tock) release schedule. AMD's primary weapon continues to be aggressive pricing, which somewhat offsets Intel's "top of the mind" brand awareness among vendors and computer buyers, allowing AMD to maintain a reasonably consistent 15-19% market share from year to year.

The microprocessor rose to prominence not because it was superior to mainframes and minicomputers, but because it was good enough for the simpler workloads required of it while being smaller, cheaper, and more versatile than the systems that preceded it. The same dynamic unfolded with the arrival of a challenger to the x86 CPU hegemony of low cost computing as new classes of products became envisioned, developed and brought to market.

ARM has been instrumental in bringing personal computing to the next step in its evolution, and while it traces its development back over 30 years, it required advances in many other fields of component and connectivity design as well as its own evolution to truly propel it into the ubiquitous architecture we see today.

The ARM processor grew out of the need for a cheap co-processor for the Acorn Business Computer (ABC), which Acorn was developing to challenge IBM's PC/AT, Apple II, and Hewlett-Packard's HP-150 in the professional office machine market.

With no chip meeting the requirement, Acorn set about designing its own RISC-based architecture with Sophie Wilson and Steve Furber, who had both previously designed the prototype that later became the BBC Micro educational computer system.

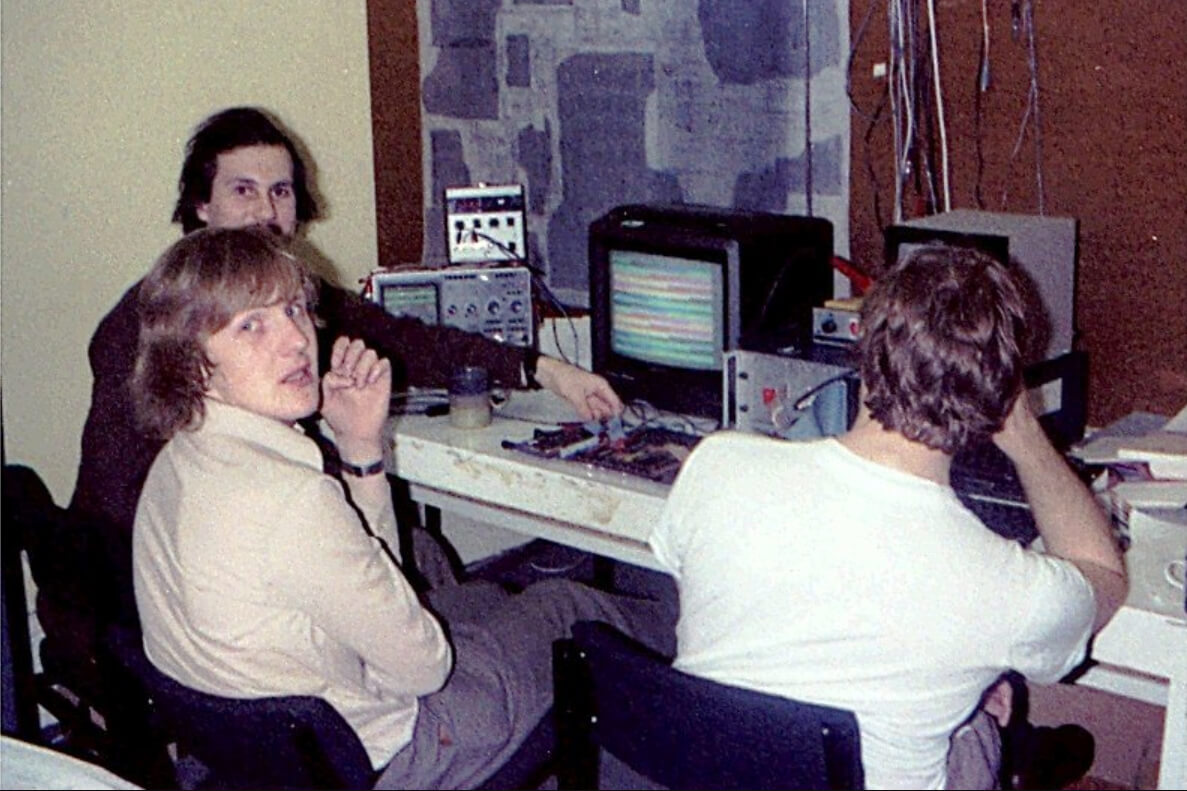

Steve Furber at work around the time of the BBC Micro development in the early 1980s. He led the design of the first ARM microprocessor along with Sophie Wilson. (British Library)

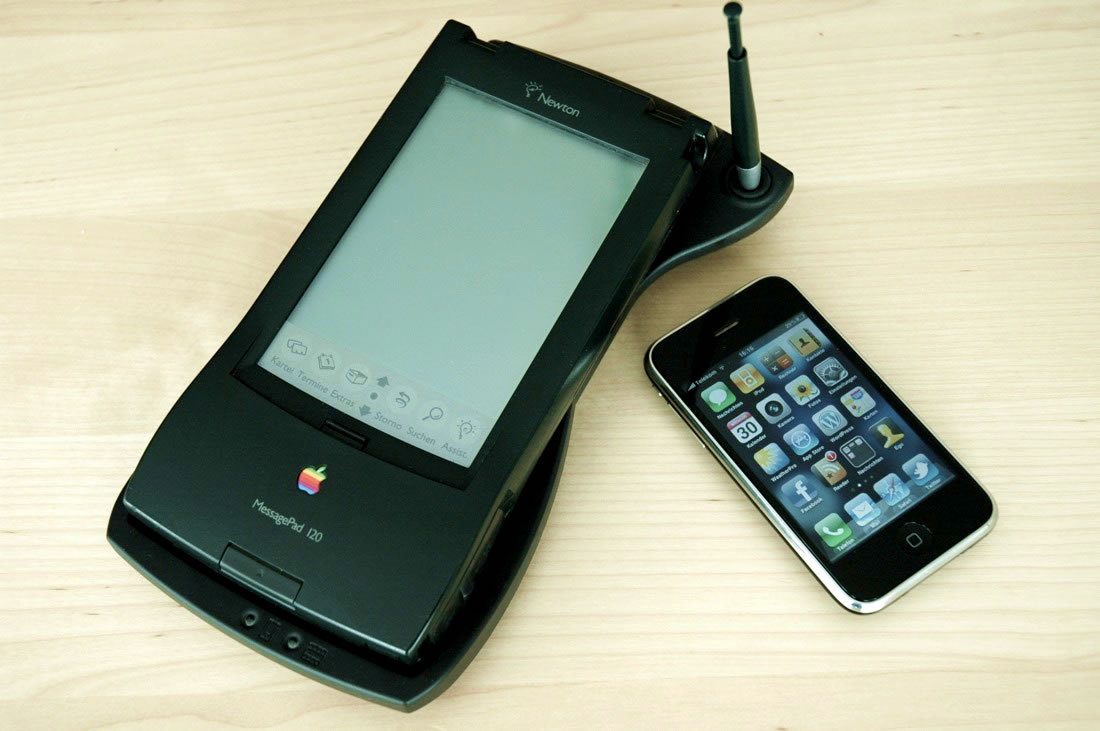

The initial development ARM1 would be followed by the ARM2, which would form the processing heart of Acorn's Archimedes and spark interest from Apple as a suitable processor for its Newton PDA project. The chip's development timetable would coincide with a slump in demand for personal computers in 1984 that strained Acorn's resources.

Facing mounting debts from unsold inventory, Acorn spun off ARM as 'Advanced RISC Machines.' The stakes held by Acorn's major shareholder, Olivetti, and by Apple were set at 43% each, in exchange for Acorn's IP and development team and Apple's development funding. The remaining shares would be held by manufacturing partner VLSI Technology and Acorn co-founder Hermann Hauser.

The first design, the ARM 600, was quickly supplanted by the ARM 610, which replaced AT&T's Hobbit processor in the Newton PDA. While the Newton and its licensed sibling (the Sharp Expert Pad PI-7000) initially sold 50,000 units in the first 10 weeks after August 3, 1993, the $499 price and an ongoing memory management bug which affected the handwriting recognition feature slowed sales to the extent that the product line would cost Apple nearly $100 million, including development costs.

The Apple Newton concept would spark imitators. AT&T, who had supplied the Hobbit processor for the original Newton, envisaged a future development where the Newton could incorporate voice messaging – a forerunner to the smartphone – and was tempted to acquire Apple as the Newton neared production status. In the end it would be IBM's Simon Personal Communicator that would lead the way to the smartphone revolution – albeit briefly.

While the Newton wasn't an economic success, its entry into the field of personal computing elevated ARM's architecture significantly. The introduction of the ARM7 core followed by its influential Thumb ISA ARM7TDMI variant would lead directly to Texas Instruments signing a licensing deal in 1993 followed by Samsung in 1994, DEC in 1995, and NEC the following year. The landmark ARMv4T architecture would also be instrumental in securing deals with Nokia to power the 6110 and Nintendo for the DS and Game Boy Advance, and it has a long running association with Apple's iPod.

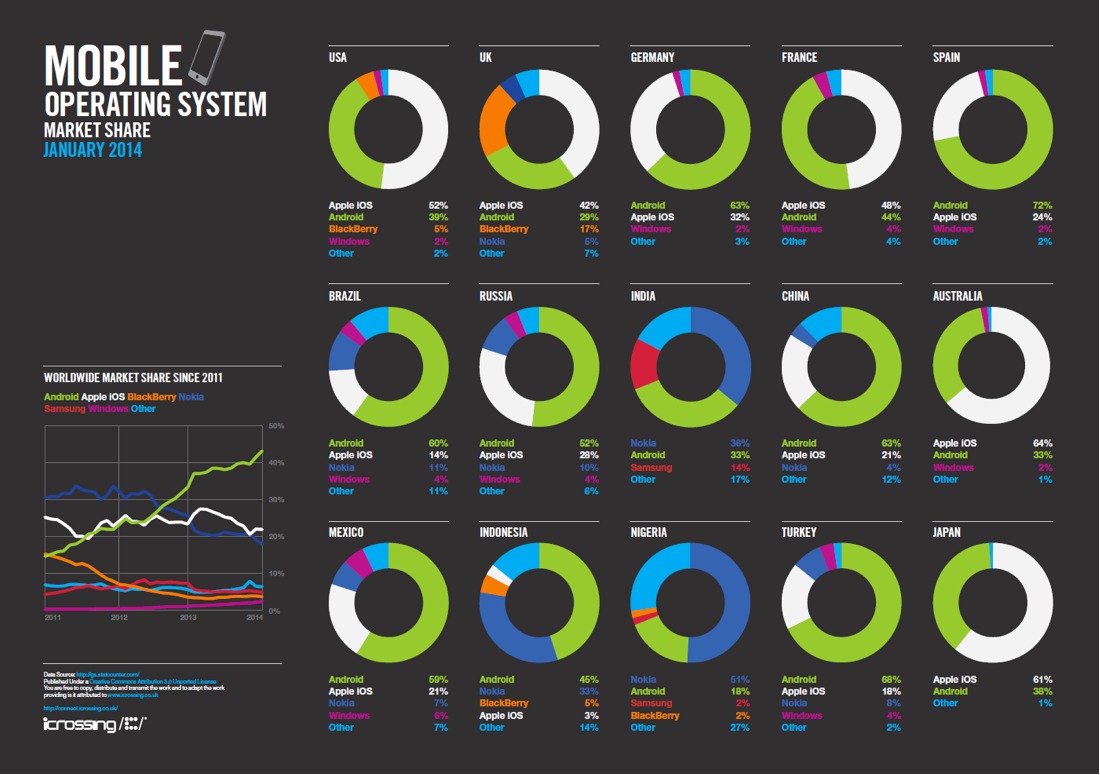

Sales exploded with the evolution of the mobile internet and the increasingly capable machines that provide access to the endless flow of applications, keeping people informed, entertained and titillated. This rapid expansion has led to a convergence of sorts as ARM's architectures become more complex while x86 based processing pares away its excess and moves down market segments into low-cost, low-power areas previously reserved for ARM's RISC chips.

Apple MessagePad 120 next to the iPhone 3G (Photo: Flickr user admartinator)

Intel and AMD have hedged their bets by allying themselves with ARM-based architectures – the latter designing its own 64-bit K12 architecture and the former entering into a close relationship with Rockchip to market Intel's SoFIA initiative (64-bit x86 Silvermont Atom SoCs) and to fabricate its ARM architecture chips.

Both Intel strategies aim to keep production volumes high so that its foundries continue to operate efficiently, sparing the company the mire that trapped other semiconductor companies who fabricated their own chips. Intel's foundry base is both extensive and expensive to maintain and thus requires continuous high volume production to remain viable.

The biggest obstacles facing the traditional powers of personal computing, namely Microsoft and Intel, and to a lesser degree Apple and AMD, are the speed that the licensed IP model brings to vendor competition and the all-important installed software base.

Apple's closed hardware and software ecosystem has long been a barrier to fully realizing its potential market penetration, with the company focusing on brand strategy and repeat customers over outright sales and licensing. While this works in developed markets, it's comparatively less successful in vast emerging markets where MediaTek, Huawei, Allwinner, Rockchip and others cash in on affordable ARM-powered, open source Android smartphones, tablets and notebooks.

Such is Android's all-pervasive presence in the smartphone market that even as Microsoft collects handsome royalties tied up with the open source ecosystem, and divests itself of Android-based Nokia products, the company looks likely to make overtures to Cyanogen, the software house that caters to people who prefer their Android without Google.

Amazon and Samsung are reportedly matching Microsoft's interest in either partnering with or outright purchasing Cyanogen, too. As the scale of the world's mobile computing society becomes apparent, Microsoft's keystone product, Windows, is at a crossroads between being primarily based on desktop users and moving to a mobile-centric touchscreen GUI.

For once, Microsoft has found itself second in software choice. Along with facing Android's wrath in the mobile sector, Microsoft failed to learn from Hewlett-Packard's experience with the touchscreen HP-150 of some 30 years ago – namely that productivity suffers when alternating between mouse/keyboard and touchscreen, not to mention an inherent reluctance of some users to embrace a different technology, leading many desktop users to resist the company's vision of a unified operating system for all consumer computing needs.

Time will tell how these interrelationships resolve, whether the traditional players merge, fail, or triumph. What seems certain is that computing is coalescing across what used to be distinct, clearly defined market segments. Heterogeneous computing aims to unite the many disparate systems with universal protocols that enable not just increased user-to-user and user-to-machine connectedness, but a vast expansion of machine-to-machine (M2M) communication.

Coined 'The Internet of Things', this initiative will rely heavily upon cooperation and common open standards to reach fruition – not exactly a given with the rampant self-interest generally displayed by big business. However, if successful, the interconnectedness would involve over seven billion computing devices, including personal computers (desktop, mobile, tablet), smartphones and wearables and close to 30 billion other smart devices.

This article series has largely been devoted to the hardware and software that defined personal computing from its inception. Conventional mainframe and minicomputer builders first saw the microprocessor as a novelty – a low-cost solution for a range of rudimentary applications. Within 40 years the technology has evolved from being limited to those skilled in component assembly, soldering and coding, to pre-school children being able to access every corner of the world with the swipe of a touchscreen.

The computer has evolved into a fashion accessory and a method of engaging the world without personally engaging with the world – from poring over hexadecimal code and laboriously compiling punch tape to the sensory overload of today's internet with its siren song of a pseudo-human connectivity. Meanwhile, using the most personal of computers, the human brain, is encouraged to be abbreviated, almost as much as modern language has embraced emojis and text speak.

People have long been dependent on the microprocessor. While it may have started with company bookkeeping, secretarial typing and stenography pools, much of humanity now relies on computers to tell us how, when and why we move through the day. The next stage in computing history may just center on how we went from shaping our technology to how our technology shaped us.

This is the fifth and final installment in our History of the Microprocessor and the PC. Don't miss the complete series for a stroll past a number of milestones, from the invention of the transistor to the modern day chips powering our connected devices.