So far, Nvidia's GeForce 700 series comprises the high-end GTX 780 and GTX 770, as well as the mid-range GTX 760. But ahead of this holiday season's blockbuster game releases, such as Battlefield 4 and Call of Duty: Ghosts, Nvidia is reportedly preparing a new poster-boy GPU: the GeForce GTX 750 Ti.

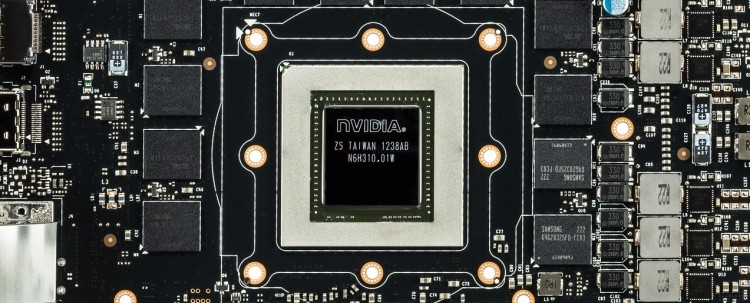

The mid-range card is reportedly designed to succeed the GTX 650 Ti while also outperforming the GTX 660. It's said to be based on the same GK104 GPU found in the GTX 770 and GTX 760, though presumably cut down to keep the price low.

MyDrivers reports that the GTX 750 Ti will come with 960 CUDA cores (fewer than the GTX 760's 1152 and the same as the GTX 660), but with 32 ROPs (up from 24 on the GTX 660) and a 256-bit memory bus (up from 192-bit on the GTX 660). Clock speeds are also said to be higher, with a base clock of 1033 MHz, a boost clock of 1098 MHz, and GDDR5 memory running at an effective 6000 MHz.
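Taking the rumored figures at face value, the wider bus is the bigger change. Peak memory bandwidth is the bus width in bytes multiplied by the effective data rate. The back-of-the-envelope calculation below assumes the reported 6000 MHz is the effective GDDR5 transfer rate (6000 MT/s) and ignores real-world overheads; the 192-bit line simply applies the same rate to the GTX 660's narrower bus for comparison:

$$
\begin{aligned}
\text{256-bit: } & \frac{256}{8}\ \text{B} \times 6.0 \times 10^{9}\ \text{s}^{-1} = 32\ \text{B} \times 6.0 \times 10^{9}\ \text{s}^{-1} = 192\ \text{GB/s} \\
\text{192-bit: } & \frac{192}{8}\ \text{B} \times 6.0 \times 10^{9}\ \text{s}^{-1} = 24\ \text{B} \times 6.0 \times 10^{9}\ \text{s}^{-1} = 144\ \text{GB/s}
\end{aligned}
$$

At equal memory clocks, that works out to roughly a third more peak bandwidth from the bus width alone.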

It isn't known when the GeForce GTX 750 Ti will be officially released, but Nvidia is unlikely to schedule a launch alongside AMD's late-September 'Volcanic Islands' GPU unveiling. If the rumors prove correct, that points to a release either in the next few weeks or in early October.