Hewlett Packard Enterprise (HPE) last week unveiled a working prototype of its long-awaited new computing architecture, called "The Machine". Rather than focusing solely on a faster processor or more memory, The Machine employs a new memory-driven computing design.

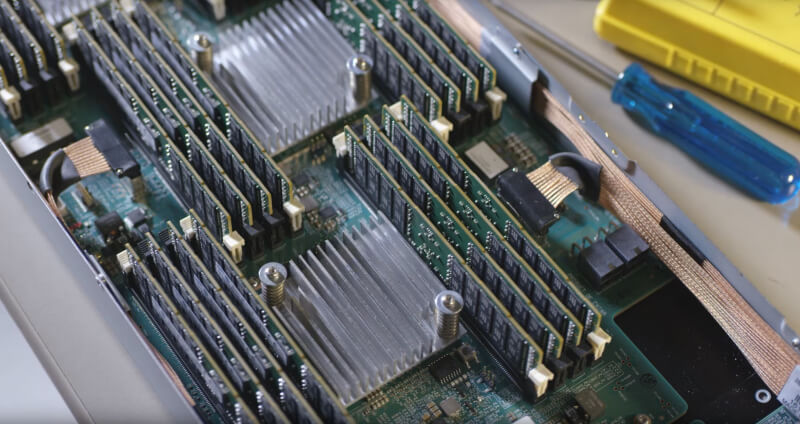

HPE announced the project back in 2014, with up to 75% of its research labs focused on its development. The core idea behind memory-driven computing is to place memory, rather than the processor, at the center of the design. Traditionally, high-performance computers have used multiple processors, each with its own set of memory. This creates bottlenecks, because extra data must be transferred between these separate collections of memory. The Machine instead shares a single, massive pool of memory across all of its processors.

The prototype system currently has 8 terabytes of memory, but HPE is looking to expand that to hundreds of terabytes in the future as more nodes are linked together. HPE's goal is to use non-volatile memory (NVM) as a replacement for traditional DRAM. In place of copper interconnects on the board, HPE has also developed a new X1 photonics module that transfers data using light instead of electrons. It can transmit up to 1.2 Tbps over short distances.
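To put the 1.2 Tbps figure in perspective, the sketch below estimates how long a single X1 link would take to move the prototype's entire 8 TB memory pool. It assumes decimal units (1 TB = 10^12 bytes, 1 Tbps = 10^12 bits per second) and an ideal link with no protocol overhead; these are simplifying assumptions for illustration, not HPE specifications, and `transfer_seconds` is a hypothetical helper.

```python
# Back-of-the-envelope estimate: time for one idealized 1.2 Tbps link to move
# the prototype's full 8 TB memory pool.
# Assumptions (not HPE figures): decimal units, no protocol or encoding overhead.

TB = 10**12    # bytes in one terabyte (decimal)
TBPS = 10**12  # bits per second in 1 Tbps


def transfer_seconds(size_bytes: float, link_bits_per_s: float) -> float:
    """Idealized transfer time: total bits divided by the link rate."""
    return size_bytes * 8 / link_bits_per_s


pool_bytes = 8 * TB
link_rate = 1.2 * TBPS
print(f"{transfer_seconds(pool_bytes, link_rate):.1f} s")  # → 53.3 s
```

Under these assumptions, streaming the whole pool over one link takes just under a minute (64 Tb / 1.2 Tbps ≈ 53.3 s); real throughput would be lower once overhead is included.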

The project has hit some bumps along the way, however. The Machine was originally slated for release as a standalone product, but that plan has since changed: its core architecture will instead be built into future HPE products. Elements such as the photonics data-transmission module and memristor technology (memory that retains data when powered down) are expected to reach the market in 2018 or 2019.

The goal is to use this memory-focused technology to analyze huge data sets very quickly, for example to scan millions of financial transactions per second for fraud. HPE claims The Machine will be able to access any data in a 160 PB storage space within 250 ns. As technology continues to evolve, computations at the petabyte and exabyte scale no longer look far off.
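One way to gauge the scale of the 160 PB figure is to count how many address bits a single flat, byte-addressable space of that size would need. The sketch below assumes byte addressing and checks both decimal petabytes (10^15 bytes) and binary pebibytes (2^50 bytes); it illustrates the arithmetic only and says nothing about how The Machine actually addresses memory. `address_bits` is a hypothetical helper.

```python
# How many address bits does a flat, byte-addressable 160 PB space need?
# Assumption: every byte gets its own address (byte-addressable memory).

PB = 10**15   # decimal petabyte
PIB = 2**50   # binary pebibyte


def address_bits(capacity_bytes: int) -> int:
    """Smallest n such that 2**n >= capacity_bytes."""
    # (capacity - 1).bit_length() is the bit width of the highest address.
    return max(1, (capacity_bytes - 1).bit_length())


print(address_bits(160 * PB))   # → 58
print(address_bits(160 * PIB))  # → 58
```

Either way, 58 bits are required. For comparison, the 48-bit virtual addresses used by many current 64-bit x86 processors cover 2^48 bytes (256 TiB), well short of 160 PB.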