Something to look forward to: AMD's Computex keynote just took place and we have all the details thanks to a pre-briefing which included three key announcements: FidelityFX Super Resolution (FSR), new Ryzen 5000 desktop APUs and Radeon RX 6000M GPUs for laptops. Here are our preliminary thoughts on what's coming from Team Red.

FidelityFX Super Resolution (FSR)

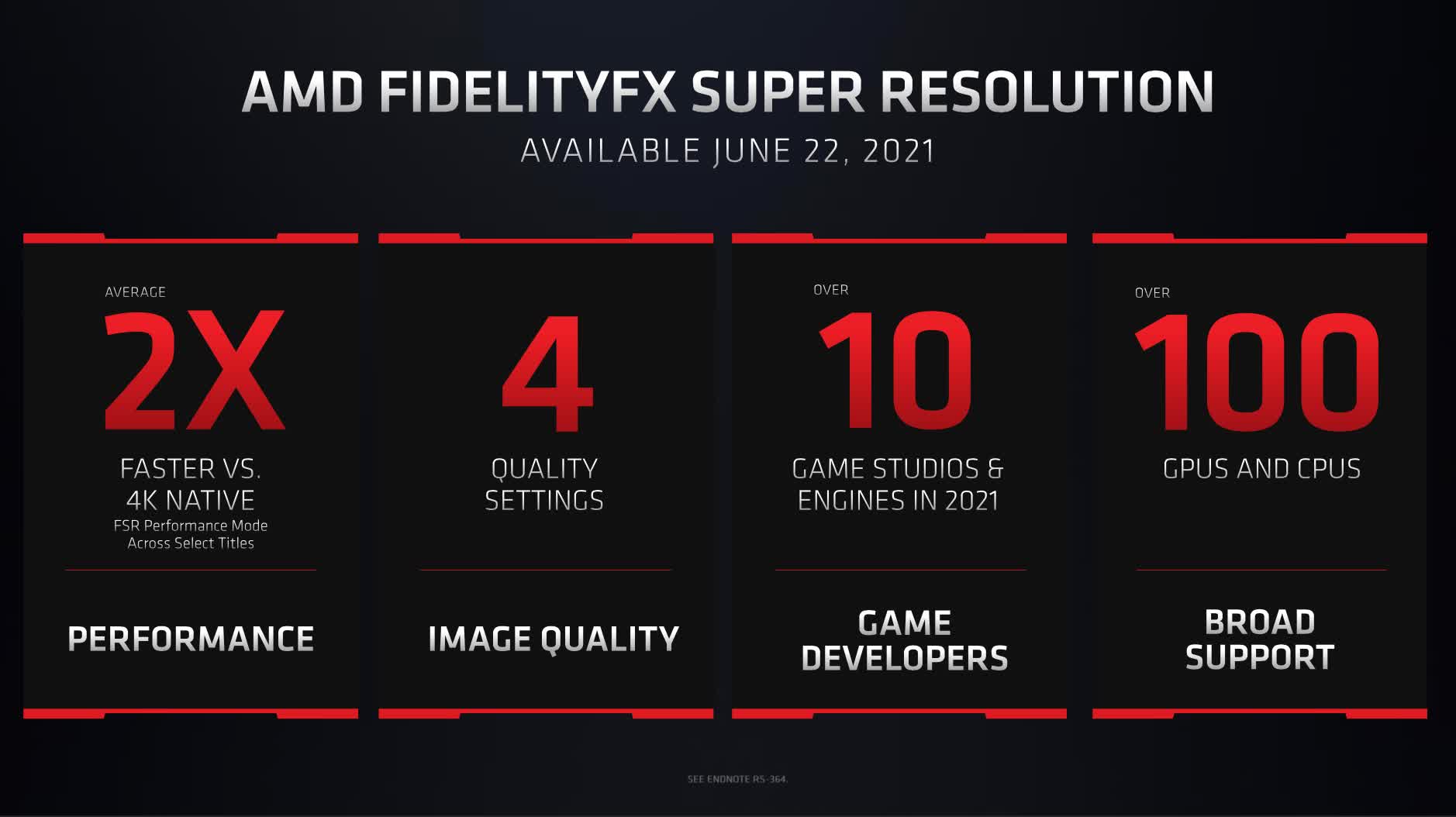

One thing that Radeon GPUs have lacked for quite some time now is a true competitor to Nvidia’s DLSS, but that's changing this month with the launch of FidelityFX Super Resolution or FSR. This is AMD’s long awaited alternative that will attempt to upscale games from a lower render resolution to a higher output resolution, allowing for higher levels of performance without a significant reduction to visuals – or ideally the same visual quality.

AMD is not providing a ton of details just yet, but we do have a release date of June 22, which is just around the corner. This is when we’ll see the first game get patched with FSR support.

FSR is part of AMD’s GPUOpen program, and as such it's supposed to work on both AMD and Nvidia GPUs, even those that don’t support DLSS, like the GeForce 10 series. AMD lists support for RX Vega, RX 500, RX 5000 and RX 6000 series GPUs, and all Ryzen APUs with Radeon Graphics. They won’t be providing technical support on GPUs from other vendors but we do know that FSR will work on Nvidia GPUs from at least Pascal and newer.

AMD teased FSR performance in two situations: the first is Godfall running on an RX 6800 XT using Epic settings and ray tracing at 4K resolution. Without FSR, the game was running at 49 FPS. With FSR using the Ultra Quality mode, performance increased to 78 FPS, a 59% increase. That margin jumped to 2X using the Quality mode, and exceeded 2X in both the Balanced and Performance modes.
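For anyone who wants to check the math on AMD's slide, here is a minimal sketch of how the uplift percentage falls out of the two quoted frame rates. The function and variable names are our own, and the Quality mode figure is only what a "2X" claim would imply, not a number AMD provided.

```python
def uplift_percent(baseline_fps: float, new_fps: float) -> float:
    """Percentage gain of new_fps over baseline_fps."""
    return (new_fps / baseline_fps - 1.0) * 100.0


native_fps = 49          # Godfall, 4K Epic + ray tracing, FSR off (AMD's figure)
ultra_quality_fps = 78   # same scene with FSR Ultra Quality (AMD's figure)

gain = uplift_percent(native_fps, ultra_quality_fps)
print(f"Ultra Quality uplift: {gain:.0f}%")

# "2X" in Quality mode implies roughly double the native frame rate.
# This is a derived estimate, not a number AMD quoted.
implied_quality_fps = native_fps * 2
print(f"Implied Quality mode frame rate: ~{implied_quality_fps} FPS")
```

The same helper works for any before/after pair once independent benchmarks are available.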

AMD hasn’t shared what render resolutions each of these modes are running at, but the performance claims are impressive, and we can see there will be a range of FSR options to choose from. These performance uplifts are in line with what Nvidia claims with DLSS 2.0 in the latest titles, but of course, this is just one game and we have no idea how it was tested.

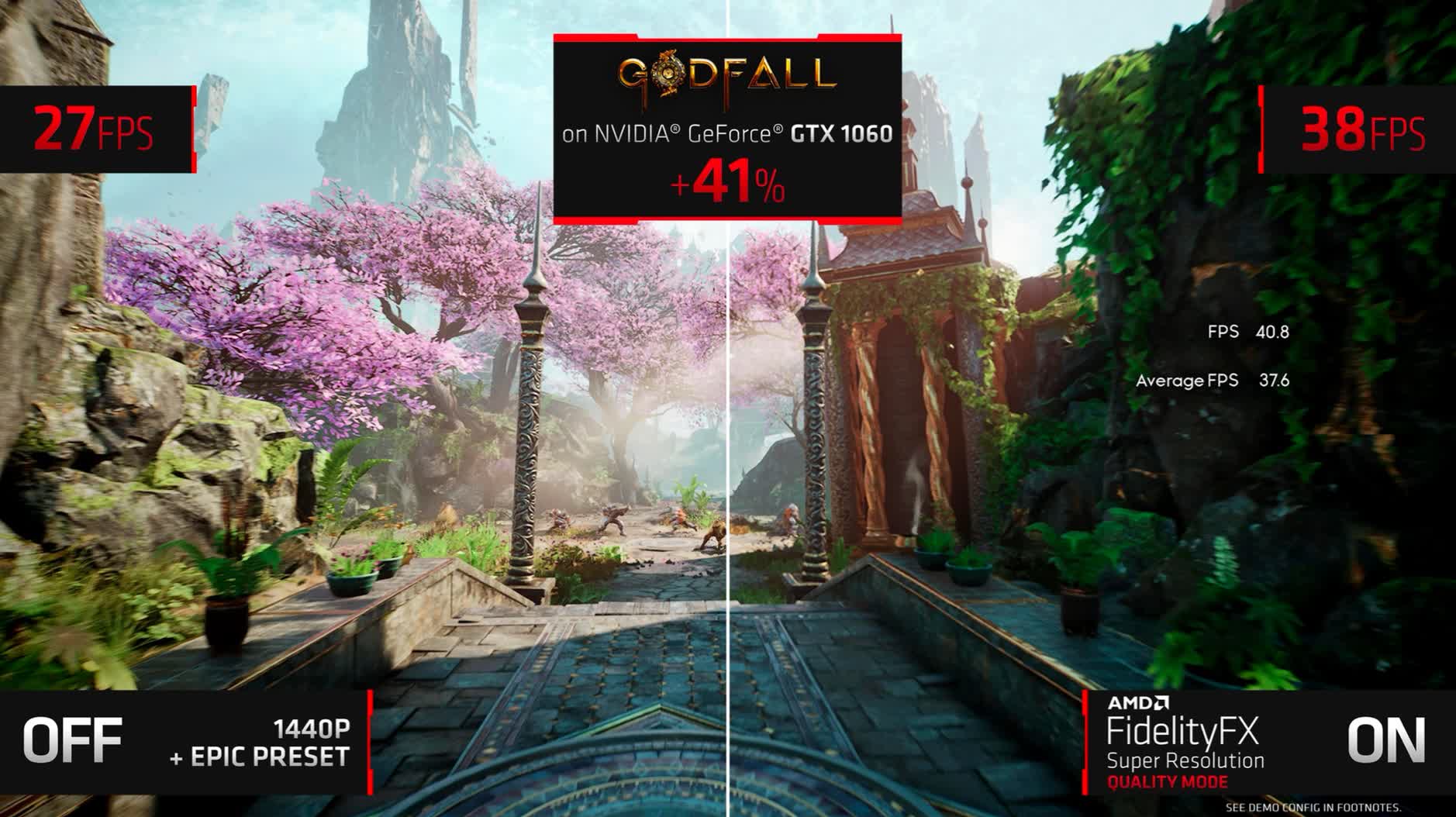

The second performance teaser we got was actually on Nvidia’s GeForce GTX 1060 running Godfall again at 1440p Epic settings. FSR running in the Quality mode, so one step down from the highest mode, delivered a 41% higher frame rate and took the game above 30 FPS on average. AMD said they chose this demo because the GTX 1060 is the most popular GPU used today on Steam, and of course, they want to boast about how FSR works on this GPU while DLSS does not. This performance uplift is lower than what was seen previously at 4K using the 6800 XT, so that’s something to explore in the future as there is a chance that FSR won't perform the same on AMD as it does on Nvidia.

The big question mark remains visual quality. We only saw two still images with side-by-side comparison shots, no video comparisons, and certainly nothing in true detail. The first image looked quite good but the second showed clear differences and the FSR image was obviously inferior, showing less detail and more blur.

Update: AMD uploaded this 4K video to YouTube after the keynote...

That's all the information we have for now and we'll have to try it for ourselves soon to assess visual quality in depth. What we do know are two additional things: AMD is calling this a "spatial upscaling technology," with no mention of AI or temporal upscaling, although I’d be surprised if there wasn’t a temporal element that used information from multiple frames. No mention of "better than native" quality either, which most likely would just be marketing speak.

As for game support, FSR requires integration on a per-game basis, just like DLSS. AMD claims that 10 game studios and engines will integrate FSR in 2021, with an unspecified number of games. Godfall is one of those, and we might see a list of games as early as today on AMD’s website, but we weren’t provided one during the pre-briefing. In this sense, AMD is starting miles behind Nvidia which has been able to grow DLSS 2.0 support substantially in the 18 months since it was first deployed.

Ryzen 7 5700G and Ryzen 5 5600G

Next up we have news of two new APUs for the desktop market. The Ryzen 7 5700G and Ryzen 5 5600G had previously been announced for the OEM market, but will now be coming to the DIY market in essentially the same form.

The Ryzen 7 5700G is an 8-core, 16-thread processor using AMD’s Zen 3 architecture, clocked up to 4.6 GHz with 16 MB of L3 cache and a 65W TDP. There is also a Vega GPU inside with 8 compute units clocked up to 2 GHz. This is the same Cezanne die that AMD are using for Ryzen Mobile 5000 APUs like the Ryzen 9 5900HX, so it’s a monolithic design rather than chiplet based. It has half the L3 cache of the chiplet-based Ryzen 7 5800X but still features a unified CCX.

Complementing this CPU is the Ryzen 5 5600G, which is a six-core processor clocked up to 4.4 GHz, also with 16 MB of L3 cache and with 7 Vega compute units clocked up to 1.9 GHz. The Ryzen 3 quad-core option announced for OEMs is not being brought across to the desktop market.

AMD is positioning these processors as lower cost Zen 3 alternatives to the current X-models with no integrated graphics that have been in the market for some time now. The company acknowledged that there has been a lot of demand for non-X CPUs and apparently these parts are set to fill that gap.

Unfortunately though, these aren’t really the low cost CPUs many have been looking for. The Ryzen 5 5600G is priced at $260, $40 less than the Ryzen 5 5600X, but not the $200-220 that many people were hoping a Ryzen 5 5600 would slot into. So even after this announcement we still don’t have a $200 Zen 3 processor of any kind (your only options in this segment are previous-generation AMD products, or Intel).

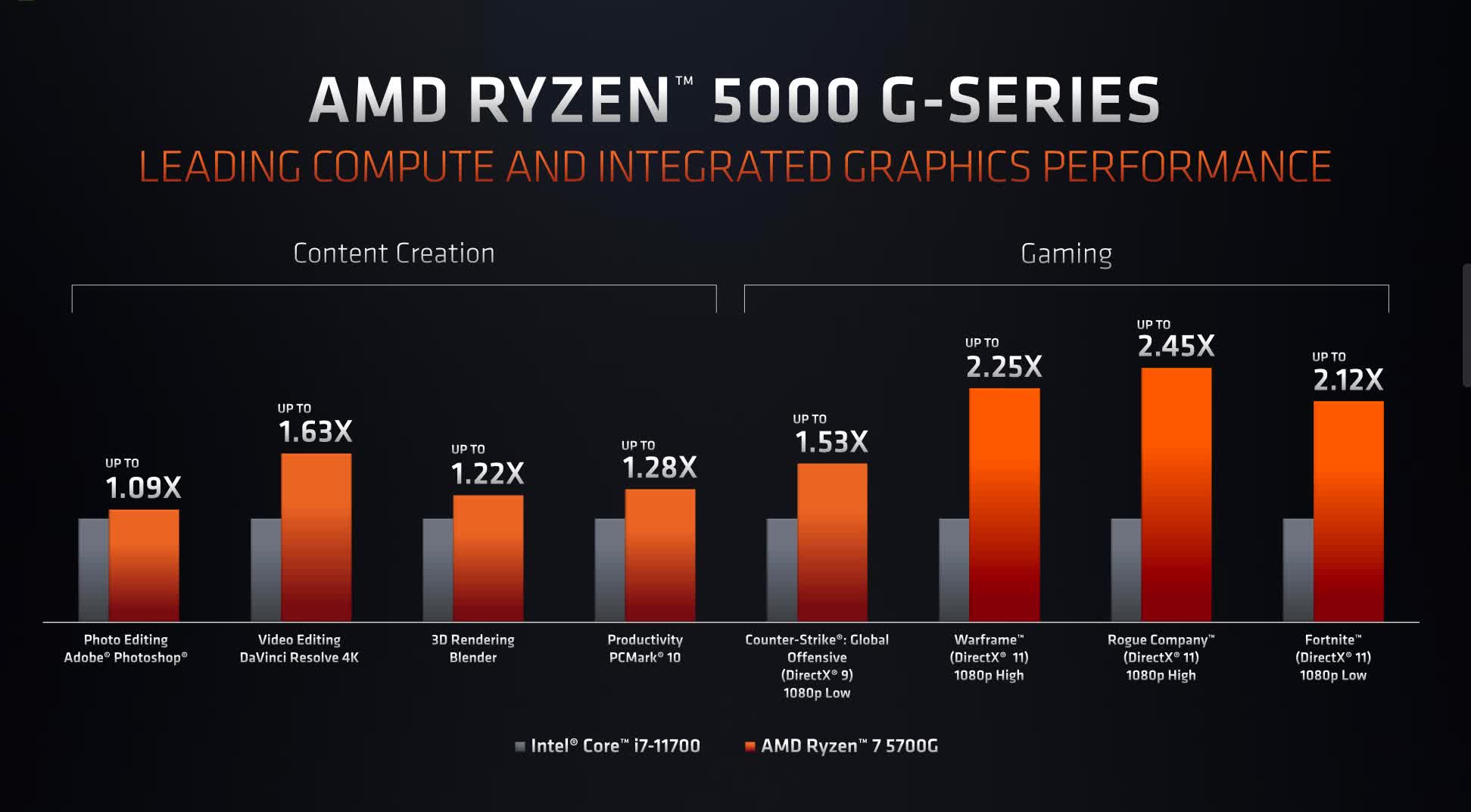

The Ryzen 7 5700G sits between the 5600X and 5800X with its $360 price tag, and both CPUs will be available on August 5th. AMD provided some performance slides comparing the Ryzen 7 5700G to the Intel Core i7-11700 which is Intel’s direct competitor at $370, and of course you can take these numbers with a grain of salt as they are directly from AMD. With that said, AMD do expect their integrated GPU to be much better than the iGPU Intel are offering in Rocket Lake parts like the 11700.

It will be quite interesting to see how these monolithic chips compare to the chiplet-based desktop processors already on the market in both productivity and gaming performance, to see which approach is superior. I don’t expect these new APUs to be as fast given the lower amount of cache and slightly lower clock speeds, but latencies will be interesting.

Radeon RX 6800M and more gaming laptop GPUs

AMD is making a new push into gaming laptops with much more capable RDNA 2 GPUs. We had seen some RX 5000M GPUs hit the market in the last few years, and AMD has made several attempts at laptop gaming in the past, but the big issue for them has been efficiency.

Nvidia simply has had the more efficient architecture for generations now, which has led them to dominate the laptop gaming landscape. AMD is attempting to shift that narrative with new GPUs that feature low idle power and real time power cycling, optimized for mobile form factors. We know already the RDNA2 architecture is a lot more efficient than RDNA1, allowing for higher performance and/or lower power draw depending on the circumstances.

The top laptop GPU that AMD is announcing is the Radeon RX 6800M, which is based on their Navi 22 GPU die, the same that powers the Radeon RX 6700 XT on the desktop. Thankfully though, AMD is being sensible with naming, calling this product the “6800M” which should clearly distinguish it from the desktop parts.

The RX 6800M features 40 compute units and 96 MB of infinity cache, plus game clock speeds up to 2300 MHz, which is only slightly lower than the 2424 MHz game clock of the desktop RX 6700 XT. The memory subsystem includes 12 GB of GDDR6 on a 192-bit interface. While the RX 6700 XT is a 230W desktop GPU, AMD is listing the very similarly configured mobile 6800M at 145W, and AMD’s specification table indicates that the 2300 MHz game clock is expected to be achieved at that power level. We’ll have to see how that plays out, but it does point to the 6800M being efficient.
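To put those spec-sheet numbers side by side, here is a rough sketch comparing the rated game clock and power figures of the two Navi 22 parts. The dictionary layout is our own, and rated clocks and power limits are not measured performance or power draw, so treat the ratios as a paper comparison only.

```python
# Spec-sheet figures quoted above; not measured results.
desktop = {"name": "RX 6700 XT", "game_clock_mhz": 2424, "power_w": 230}
mobile = {"name": "RX 6800M", "game_clock_mhz": 2300, "power_w": 145}

# Fraction of the desktop part's rated clock and power that the mobile part lists.
clock_ratio = mobile["game_clock_mhz"] / desktop["game_clock_mhz"]
power_ratio = mobile["power_w"] / desktop["power_w"]

print(f"{mobile['name']} game clock: {clock_ratio:.1%} of {desktop['name']}")
print(f"{mobile['name']} rated power: {power_ratio:.1%} of {desktop['name']}")
```

On paper, that works out to roughly 95% of the desktop game clock at about 63% of the rated power, which is why the claim deserves independent testing.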

About those power ratings, AMD says there will be some wiggle room for OEMs to customize the power level depending on the cooling design and also whether technologies are enabled like SmartShift – AMD’s dynamic power allocation system that shifts power between the CPU and GPU as needed. However, AMD has not hinted at any sort of lower power variants, so it doesn’t sound like they will be going down the Max-Q route.

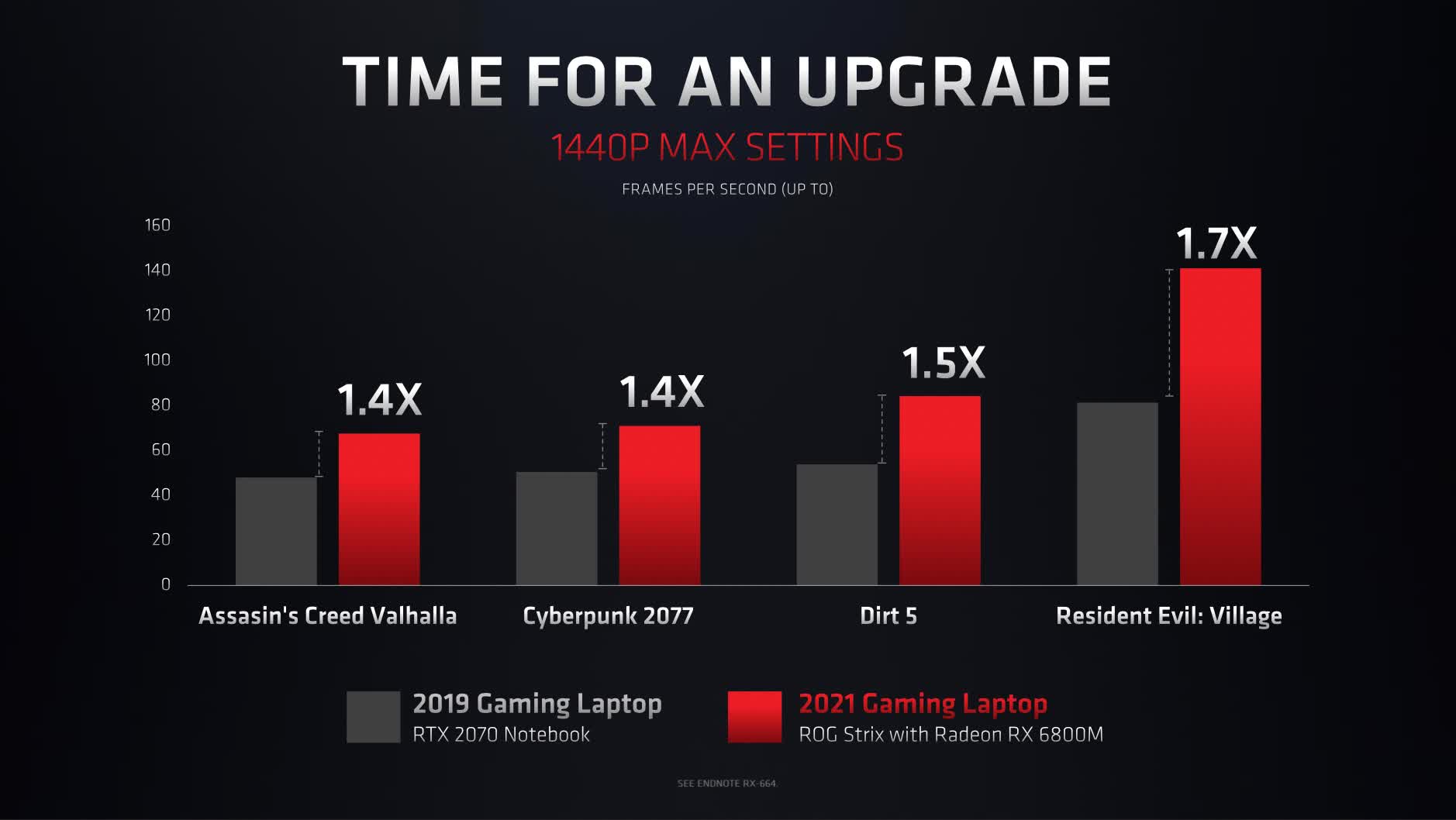

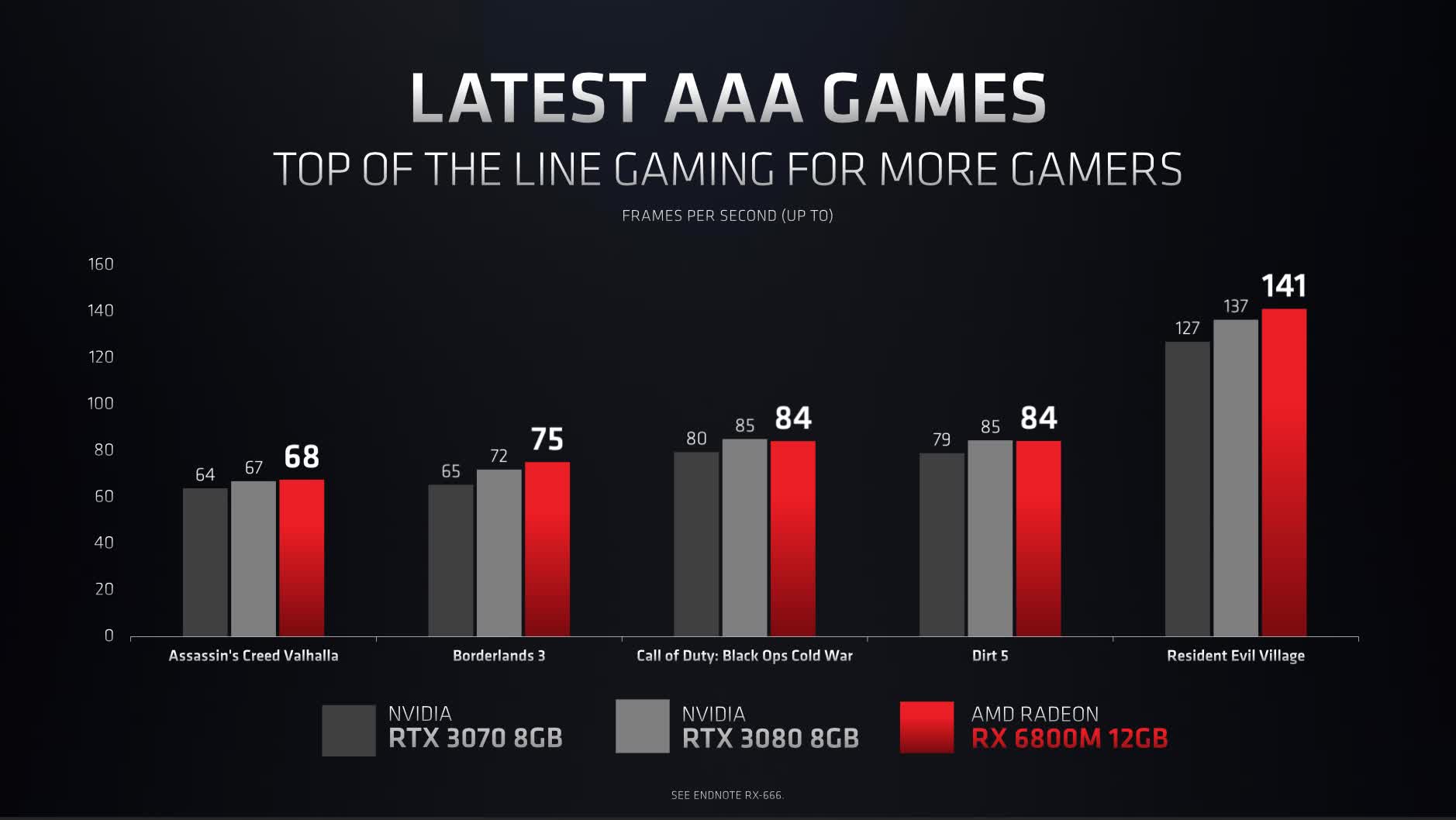

The RX 6800M will arrive first in the Asus ROG Strix G15, a laptop that we already have on hand and plan to test and benchmark for you later this week. For now, here are AMD’s provided benchmarks which you should take with a grain of salt. They believe the 6800M will be between 1.4 and 1.7x faster than the RTX 2070 for laptops, while delivering performance around the same mark as the RTX 3080 Laptop GPU, targeting 1440p gaming.

AMD is also suggesting that the RX 6800M will deliver much better performance on battery than the RTX 3080 depending on the game. We don’t normally test gaming on battery but AMD did make specific note on this, suggesting that RDNA2 is particularly efficient at lower power levels that can be sustained on battery power.

The Radeon RX 6700M was also announced. This is a cut down version of the Navi 22 die with 36 compute units at the same 2300 MHz game clock, along with 10 GB of GDDR6 memory on a 160-bit bus and 80 MB of infinity cache. AMD is listing this as a 135W GPU, which in comparison to Nvidia is similar to the upper end of the RTX 3070 Laptop GPU’s power range and the middle of the RTX 3080 Laptop GPU’s range.

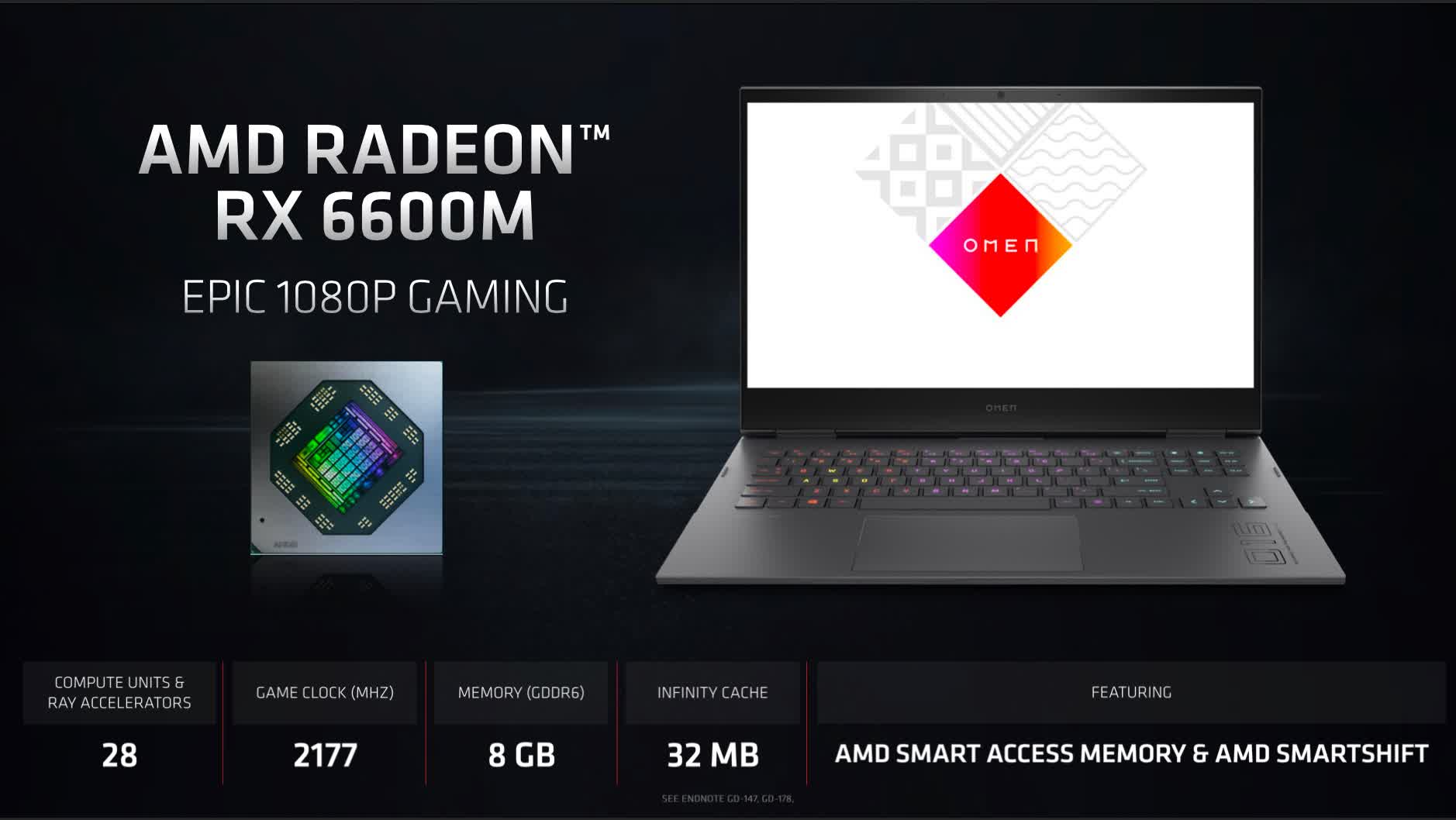

Next is the Radeon RX 6600M, using a new Navi 23 die that we also expect to feature on the desktop at some point. This GPU features 28 compute units and a game clock of 2177 MHz, with AMD listing a 100W power limit. 8 GB of GDDR6 memory is included here along with 32 MB of infinity cache. The first system to use this GPU should be an HP Omen 16 model, and AMD is touting high levels of performance for 1080p gaming, comparable to the RTX 3060.

The Radeon RX 6800M and RX 6600M should be available right away, while the RX 6700M will be available soon – and that’s probably due to the 6700M relying on cut down silicon. We're excited to test all of these GPUs in the coming weeks to see just how well AMD can stack up to Nvidia for gaming performance in a laptop form factor and whether we finally have genuine competition in this space.

The final thing AMD talked about was their new laptop program called "AMD Advantage," which is essentially a laptop design and certification process to ensure the highest quality laptops using AMD hardware. The Advantage program combines Ryzen CPUs with Radeon GPUs, low latency 144Hz+ displays, fast SSDs, decent thermals and battery life, plus more. The Asus and HP laptops we just mentioned are the first that will launch as part of this program and the first to use RDNA2 graphics.

https://www.techspot.com/news/89879-amd-computex-2021-fsr-vs-dlss-ryzen-5000.html