Forward-looking: As of late, every time a major tech company hosts an event, it almost inevitably ends up discussing their strategy and products focused on AI. That's just what happened at AMD's Advancing AI event in San Jose this week, where the semiconductor company made several significant announcements. The company unveiled the Instinct MI300 GPU AI accelerator line for data centers, discussed the expanding software ecosystem for these products, outlined their roadmap for AI-accelerated PC silicon, and introduced other intriguing technological advancements.

In truth, there was a relative scarcity of "truly new" news, and yet you couldn't help but walk away from the event feeling impressed. AMD told a solid and comprehensive product story, highlighted a large (perhaps even too large?) number of clients/partners, and demonstrated the scrappy, competitive ethos of the company under CEO Lisa Su.

On a practical level, I also walked away even more certain that the company is going to be a serious competitor to Nvidia on the AI training and inference front, an ongoing leader in supercomputing and other high-performance computing (HPC) applications, and an increasingly capable competitor in the upcoming AI PC market. Not bad for a 2-hour keynote.

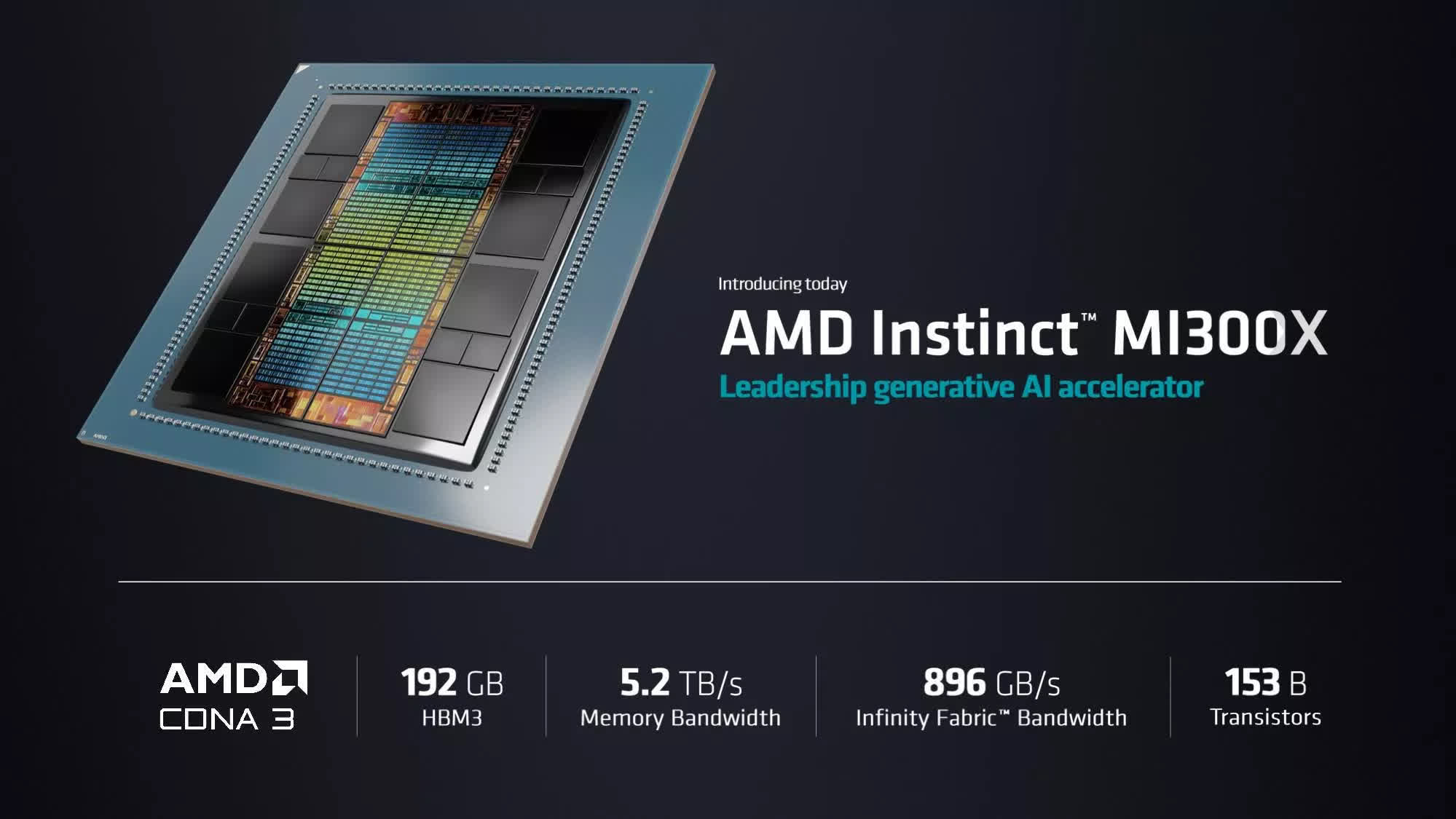

Not surprisingly, most of the event's focus was on the new Instinct MI300X, which is clearly positioned as a competitor to Nvidia's market dominating GPU-based AI accelerators, such as their H100. While much of the tech world has become infatuated with the GenAI performance that the combination of Nvidia's hardware and CUDA software have enabled, there's also a rapidly growing recognition that their utter dominance of the market isn't healthy for the long term.

As a result, there's been a lot of pressure for AMD to come up with something that's a reasonable alternative, particularly because AMD is generally seen as the only serious competitor to Nvidia on the GPU front.

The MI300X has so far triggered enormous sighs of relief heard 'round the world as initial benchmarks suggest that AMD achieved exactly what many were hoping for. Specifically, AMD touted that they could match the performance of Nvidia's H100 on AI model training and offered up to a 60% improvement on AI inference workloads.

In addition, AMD touted that combining eight MI300X cards into a system would enable the fastest generative AI computer in the world and offer access to significantly more high-speed memory than the current Nvidia alternative. To be fair, Nvidia has already announced the GH200 (codenamed "Grace Hopper") that will offer even better performance, but as is almost inevitably the case in the semiconductor world, this is bound to be a game of performance leapfrog for many years to come. Regardless of how people choose to accept or challenge the benchmarks, the key point here is that AMD is now ready to play the game.

Given that level of performance, it wasn't surprising to see AMD parade a long list of partners across the stage. From major cloud providers like Microsoft Azure, Oracle Cloud and Meta to enterprise server partners like Dell Technologies, Lenovo and SuperMicro, there was nothing but praise and excitement from them. That's easy to understand given that these are companies who are eager for an alternative and additional supplier to help them meet the staggering demand they now have for GenAI-optimized systems.

In addition to the MI300X, AMD also discussed the Instinct MI300A, which is the company's first APU designed for the data center. The MI300A leverages the same type of GPU XCD (Accelerator Complex Die) elements as the MI300X, but includes six instead of eight and uses the additional die space to incorporate eight Zen 4 CPU cores. Through the use of AMD's Infinity Fabric interconnect technology, it provides shared and simultaneous access to high bandwidth memory (HBM) for the entire system.

One of the interesting technological sidenotes from the event was that AMD announced plans to open up the previously proprietary Infinity Fabric to a limited set of partners. While no details are known just yet, it could conceivably lead to some interesting new multi-vendor chiplet designs in the future.

This simultaneous CPU and GPU memory access is essential for HPC-type applications and that capability is apparently one of the reasons that Lawrence Livermore National Labs chose the MI300A to be at the core of its new El Capitan supercomputer being built in conjunction with HPE. El Capitan is expected to be both the fastest and one of the most power efficient supercomputers in the world.

On the software side, AMD also made numerous announcements around its ROCm software platform for GenAI, which has now been upgraded to version 6. As with the new hardware, they discussed several key partnerships that build on previous news (with open-source model provider Hugging Face and the PyTorch AI development platform) as well as debuting some key new ones.

Most notable was that OpenAI said it was going to bring native support for AMD's latest hardware to version 3.0 of its Triton development platform. This will make it trivial for the many programmers and organizations eager to jump on the OpenAI bandwagon to leverage AMD's latest – and gives them an alternative to the Nvidia-only choices they've had up until now.

The final portion of AMD's announcements covered AI PCs. Though the company doesn't get much credit or recognition for it, they were actually the first to incorporate a dedicated NPU into a PC chip with last year's launch of the Ryzen 7040.

The XDNA AI acceleration block it includes leverages technology that AMD acquired through its Xilinx purchase. At this year's event, the company announced the new Ryzen 8040 which includes an upgraded NPU with 60% better AI performance. Interestingly, they also previewed their subsequent generation codenamed "Strix Point," which isn't expected until the end of 2024.

The XDNA2 architecture it will include is expected to offer an impressive 3x improvement versus the 7040. Given that company still needs to sell 8040-based systems in the meantime, you could argue that the "teaser" of the new chip was a bit unusual. However, what I think AMD wanted to do – and what I believe they achieved – in making the preview was to hammer home the point that this is a incredibly fast moving market and they're ready to compete.

Of course, it was also a shot across the competitive bow to both Intel and Qualcomm, both of whom will introduce NPU-accelerated PC chips over the next few months.

In addition to the hardware, AMD discussed some AI software advancements for the PC, including the official release of Ryzen AI 1.0 software for easing the use of and accelerating the performance GenAI-based models and applications on PCs. AMD also brought Microsoft's new Windows leader Pavan Davuluri onstage to talk about their work to provide native support for AMD's XDNA accelerators in future version of Windows as well as discuss the growing topic of hybrid AI, where companies expect to be able to split certain types of AI workloads between the cloud and client PCs. There's much more to be done here – and across the world of AI PCs – but it's definitely going to be an interesting area to watch in 2024.

All told, the AMD AI story was undoubtedly told with a great deal of enthusiasm. From an industry perspective, it's great to see additional competition, as it will inevitably lead to even faster developments in this exciting new space (if that's even possible!). However, in order to really make a difference, AMD needs to continue executing well to its vision. I'm certainly confident it's possible, but there's a lot of work still ahead of them.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech

https://www.techspot.com/news/101112-amd-makes-ai-statement-new-hardware-roadmap-set.html