Why it matters: There's an epidemic of child sexual abuse material on online platforms, and while companies like Facebook have responded by flagging it wherever it pops up, Apple has been quietly developing a set of tools to scan for such content. Now that its response has come into focus, it has become a major source of controversy.

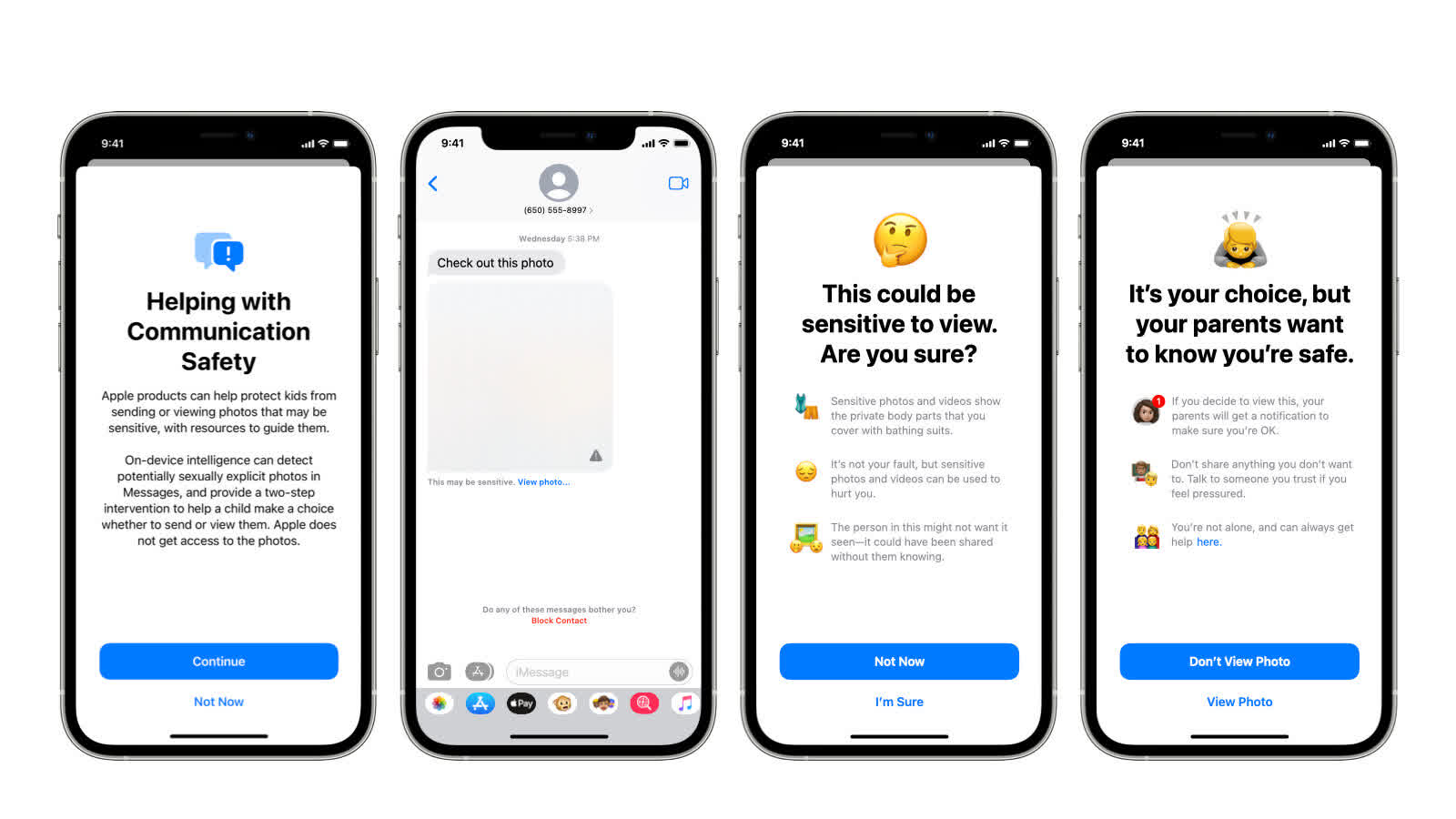

Earlier this month, Apple revealed that it plans to start scanning iPhones and iCloud accounts in the US for content that can be described as child sexual abuse material (CSAM). Although the company insisted the feature is only meant to help criminal investigations and that it wouldn't be expanded beyond its original scope regardless of government pressure, the announcement left many Apple fans confused and disappointed.

Apple has long marketed its products and services in a way that creates the perception that privacy is a core focus and a high priority whenever it considers new features. The AI-based CSAM detection tool the company developed for iOS 15, macOS Monterey, and its iCloud service achieved the exact opposite and sparked a significant amount of internal and external debate.

Apple has made a few attempts to clear up the confusion around the new feature, but its explanations have only raised more questions about how exactly it works. Today, the company dropped another bombshell when it told 9to5Mac that it already scours iCloud Mail for CSAM and has been doing so for the past three years. iCloud Photos and iCloud Backups, on the other hand, haven't been scanned.

This could explain why Eric Friedman, who presides over Apple's anti-fraud department, said in an iMessage thread (revealed in the Epic vs. Apple trial) that "we are the greatest platform for distributing child porn." Friedman also noted that Apple's obsession with privacy made its ecosystem the go-to place for people looking to distribute illegal content, as opposed to Facebook, where extensive data collection makes it very easy to uncover nefarious activities.

It turns out that Apple has been running an "image matching technology to help find and report child exploitation" largely under the radar for the last few years, and only mentioned it briefly at a tech conference in 2020. Meanwhile, Facebook flags and removes tens of millions of images of child abuse every year, and is very transparent about doing it.

Apple seems to be operating under the assumption that because other platforms make it hard to do nefarious things without getting an account disabled, bad actors naturally gravitate toward Apple services to avoid detection. Scanning iCloud Mail for CSAM attachments may have given the company some insight into the kind of content people send through that route, and possibly even contributed to the decision to expand its CSAM detection tools to cover more ground.

Either way, this doesn't make it any easier to understand Apple's motivations, nor does it explain how its CSAM detection tools are supposed to protect user privacy or prevent governmental misuse.

https://www.techspot.com/news/90903-apple-already-scouring-emails-child-abuse-material.html