Editor's take: GPUs are the leading semiconductor option for most AI systems today, but we think other chips will play important roles down the road, including CPUs and possibly even FPGAs.

With all the hype and excitement around AI recently, we have noticed that most people have taken the default position that all AI workloads will always run on GPUs. See Nvidia's share price as Exhibit 1 for this line of thinking. We think the reality may turn out a little differently, with a much broader range of chips coming into play.

To be clear, GPUs are pretty well designed for AI work, and there is no threat to Nvidia's share price on the horizon. What we call AI is really applied statistics; more specifically, very advanced statistical regression models.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

At the heart of these models is matrix algebra, which involves fairly simple math problems (say, one number multiplied by another) done at massive scale. GPUs are designed with smaller cores (simpler math) in large quantities (massive scale). So it made sense to use them instead of CPUs, which have a smaller number of larger cores.
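To make the "simple math at massive scale" point concrete, here is a minimal Python sketch of a naive matrix multiply that counts its multiply-add operations. It is purely illustrative: the function `matmul` and its operation counter are our own, and real GPU kernels are organized very differently. What it does show is that every output cell is an independent dot product, which is the property that lets this work spread across many small cores.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both lists of lists.

    Returns the product and the number of multiply-add operations performed.
    """
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    c = [[0.0] * m for _ in range(n)]
    ops = 0
    # Each (i, j) output cell is an independent dot product: no cell depends
    # on any other cell, so in principle they can all be computed in parallel.
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one simple multiply-add
                ops += 1
            c[i][j] = acc
    return c, ops


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
c, ops = matmul(a, b)
print(c)    # [[19.0, 22.0], [43.0, 50.0]]
print(ops)  # 8
```

For two 4,096 × 4,096 matrices, the same loops would perform 4,096³, or roughly 68.7 billion, multiply-adds, each one trivial on its own.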

That picture is getting a bit blurrier now. For one, GPUs have gotten much more expensive. They are still better at AI math, but at some point the price gap grows large enough that switching to CPUs starts to make sense economically.
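A back-of-the-envelope sketch of that tipping point, assuming entirely hypothetical rental prices and throughputs (none of these numbers come from this article or from any vendor): the cost of a million inferences is the hourly price divided by inferences served per hour. A chip can be several times slower and still win on cost, as long as it is cheaper by a larger factor. The `Instance` class and `breakeven_gpu_price` helper are illustrative names of our own.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    dollars_per_hour: float       # hypothetical rental price
    inferences_per_second: float  # hypothetical sustained throughput

    def cost_per_million(self) -> float:
        """Dollars to serve one million inferences on this instance."""
        per_hour = self.inferences_per_second * 3600
        return self.dollars_per_hour / per_hour * 1_000_000


def breakeven_gpu_price(gpu_ips: float, cpu: Instance) -> float:
    """Hourly GPU price at which GPU and CPU cost the same per inference."""
    return cpu.dollars_per_hour * gpu_ips / cpu.inferences_per_second


# Hypothetical: the GPU is 4x faster but 5x more expensive per hour.
gpu = Instance("gpu", dollars_per_hour=4.00, inferences_per_second=400)
cpu = Instance("cpu", dollars_per_hour=0.80, inferences_per_second=100)

print(f"{gpu.cost_per_million():.2f}")  # 2.78
print(f"{cpu.cost_per_million():.2f}")  # 2.22
print(f"{breakeven_gpu_price(gpu.inferences_per_second, cpu):.2f}")  # 3.20
```

Under these made-up numbers, the GPU only wins on cost per inference if its hourly price drops below about $3.20.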

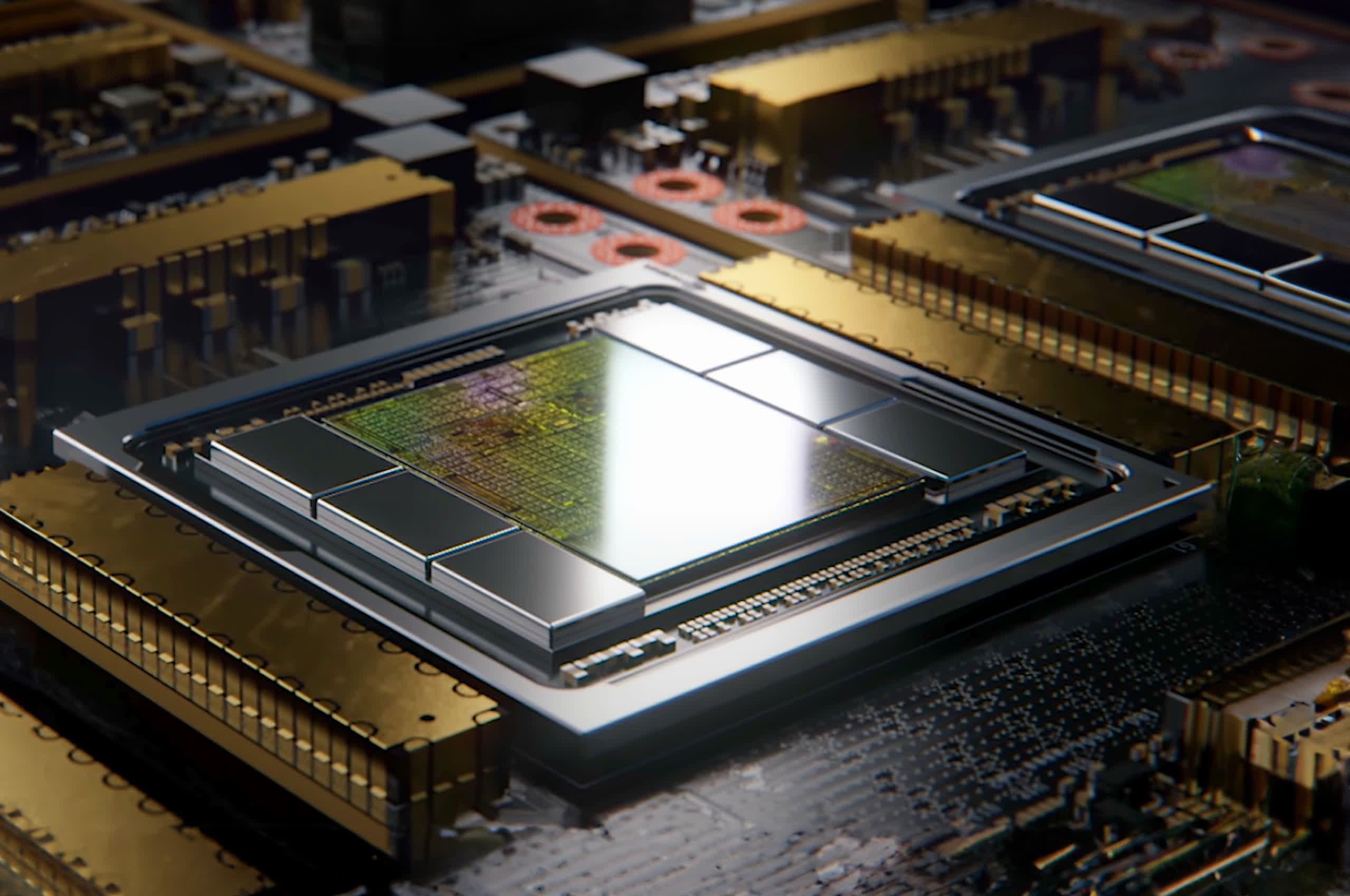

The market for AI semis is really divided into three segments: training, cloud inference and edge inference. Training for now essentially equals Nvidia, but that is just a small portion of the market. Cloud inference is going to be a much larger market: as more people use those AI models, the demand for inference will grow, and for now much of that work will be done in the cloud. Building out that capacity looks likely to be punishingly expensive for many companies. Dylan Patel, as usual, lays out this math the best, but suffice it to say the bill for inference is likely to be a major stumbling block for consumer AI adoption for the next year or so.

Given the cost and relative scarcity of GPUs, we think many companies will start exploring alternatives to GPUs for running inference workloads, especially those that combine AI results with some other function, like search or social media, workloads that still largely run best on CPUs anyway.

Further down the road, we suspect that the economics of AI are going to require much more inference work to be done at the edge, meaning on devices that consumers pay for. This means mobile phones and PCs are going to need AI functionality, and that work will likely run on CPUs and mobile SoCs.

AMD recently unveiled AI functionality built into its client CPUs, and Apple has neural engines in both its A series mobile processors and M series CPUs. We expect this to be commonplace in edge devices soon.

Of course, many companies are looking to build AI accelerators, special-purpose chips designed to do only AI math. But so far this has proven workable only at companies like Google, which control their entire software stack. A big part of the problem with these special-purpose chips is that they tend to be over-engineered for a specific set of workloads or AI models, and when those models change, the chips lose their performance advantage.

All of which leads to one of the most unsung corners of semiconductors: FPGAs. These are 'programmable' chips (the 'P' stands for programmable), meaning they can be repurposed for different tasks after production. They sit at the opposite end of the spectrum from purpose-built ASICs, which are designed for a specific task, AI accelerators being one example.

FPGAs have been around for years. The economics of semis usually mean that past some volume threshold an ASIC makes more sense, but for low-volume applications like industrial and aerospace systems, FPGAs work really well. And so FPGAs are everywhere, touching dozens of end markets, but we are less familiar with them because they do not typically show up in the high-volume electronics the average consumer uses every day.
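That volume threshold can be sketched with a simple cost model: total cost is a one-time engineering (NRE) cost plus a per-unit cost times volume. An ASIC carries a large upfront cost and a low unit cost; an FPGA is roughly the reverse. The dollar figures below are hypothetical placeholders chosen to give round numbers, not industry data, and the function names are our own.

```python
def total_cost(nre: float, unit_cost: float, volume: int) -> float:
    """One-time engineering (NRE) cost plus per-unit cost times volume."""
    return nre + unit_cost * volume


def breakeven_volume(asic_nre, asic_unit, fpga_nre, fpga_unit):
    """Volume above which the ASIC's lower unit cost pays back its higher NRE."""
    if fpga_unit <= asic_unit:
        raise ValueError("no crossover: the FPGA must cost more per unit than the ASIC")
    return (asic_nre - fpga_nre) / (fpga_unit - asic_unit)


# Hypothetical figures, in dollars.
ASIC_NRE, ASIC_UNIT = 20_000_000, 20
FPGA_NRE, FPGA_UNIT = 500_000, 150

print(breakeven_volume(ASIC_NRE, ASIC_UNIT, FPGA_NRE, FPGA_UNIT))  # 150000.0

for volume in (10_000, 1_000_000):
    asic = total_cost(ASIC_NRE, ASIC_UNIT, volume)
    fpga = total_cost(FPGA_NRE, FPGA_UNIT, volume)
    print(volume, "FPGA" if fpga < asic else "ASIC")
# 10000 FPGA
# 1000000 ASIC
```

With these made-up inputs, the FPGA is cheaper below about 150,000 units and the ASIC is cheaper above it, which is the low-volume niche described above.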

The advent of AI changes this calculation a bit. FPGAs may make sense for AI inference where the underlying model changes frequently. Here the programmability of FPGAs outweighs the typical economics of FPGA use. To be clear, we do not think that FPGAs will be a serious rival to massive AI systems using thousands of GPUs, but we do think the range of applications for FPGAs will grow as AI permeates further across electronics.
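One way to see why frequent model changes favor programmability is to extend the same kind of cost model. Assume, purely for illustration, that every model change forces a new ASIC design with its full NRE, while the FPGA only needs a reprogramming effort with a smaller hypothetical cost. Under that assumption the break-even volume climbs with every change. All figures and the function name are hypothetical.

```python
def breakeven_with_changes(model_changes: int,
                           asic_nre: float = 20_000_000, asic_unit: float = 20,
                           fpga_nre: float = 500_000, fpga_unit: float = 150,
                           fpga_reprogram: float = 500_000) -> float:
    """Volume at which an ASIC becomes cheaper than an FPGA, assuming
    (hypothetically) that each model change costs the ASIC a full new NRE
    but costs the FPGA only a reprogramming effort."""
    if fpga_unit <= asic_unit:
        raise ValueError("no crossover: the FPGA must cost more per unit than the ASIC")
    asic_fixed = asic_nre * (1 + model_changes)             # one design per model generation
    fpga_fixed = fpga_nre + fpga_reprogram * model_changes  # same silicon, reprogrammed
    return (asic_fixed - fpga_fixed) / (fpga_unit - asic_unit)


for changes in (0, 2, 4):
    print(changes, breakeven_with_changes(changes))
# 0 150000.0
# 2 450000.0
# 4 750000.0
```

The sketch deliberately ignores performance and power, where a purpose-built chip typically has the edge over an FPGA; it only isolates the cost side of the programmability argument.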

Put simply, GPUs are likely to remain the dominant chip for much of the AI landscape, especially for high profile, high volume models. But beyond that we think the use of alternative chips will become an important part of the ecosystem, a larger opportunity than may seem likely today.

https://www.techspot.com/news/99197-beyond-nvidia-does-ai-processing-gpus.html