Earlier this week we reported on the upcoming PCI Express 4.0 standard, with information from Tom's Hardware suggesting the updated specification would support up to 300 watts of power through the PCIe slot. It turns out this information was inaccurate.

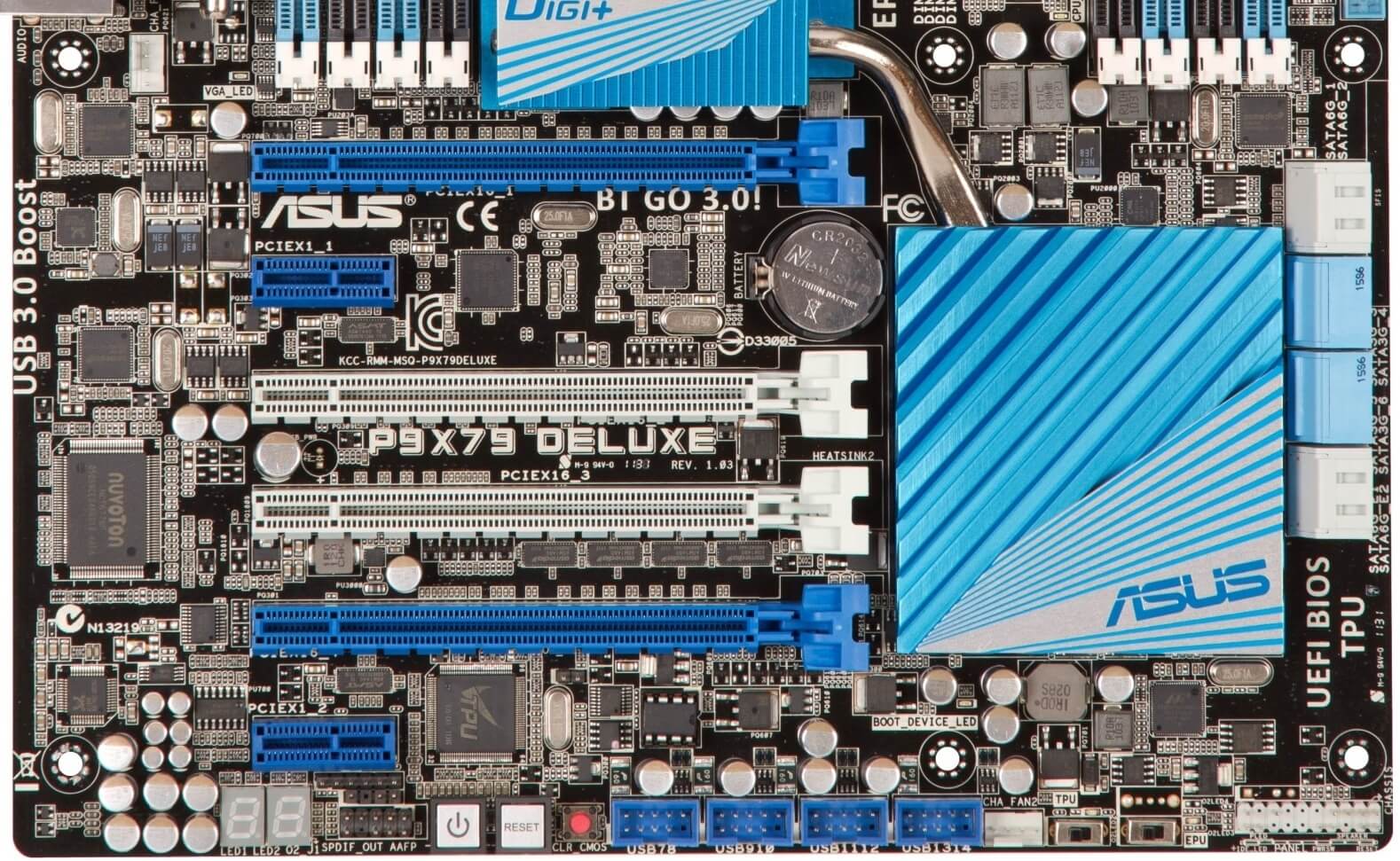

Raising slot power from 75 watts to 300 watts would have made PCIe power cables redundant for most high-end graphics cards. A powerful GPU like the Nvidia GeForce GTX 1080, which consumes around 180 watts under load, would have been able to draw all of its power through the motherboard, rather than through a combination of external cables and slot power.

Unfortunately, a spokesperson for the PCI Special Interest Group (PCI-SIG) incorrectly told Tom's Hardware that slot power limits would be raised in the PCIe 4.0 standard. This is not the case: PCIe 4.0 will keep the same 75-watt limit on slot power as previous iterations of the spec.

The increased power limit instead refers to the total power draw of expansion cards. PCIe 3.0 cards were limited to a total power draw of 300 watts (75 watts from the motherboard slot, and 225 watts from external PCIe power cables). The PCIe 4.0 specification will raise this limit above 300 watts, allowing expansion cards to draw more than 225 watts from external cables.
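To make the arithmetic concrete, here is a minimal sketch of how a card's total draw splits between the slot and external cables. The helper names `power_split` and `fits_pcie3_budget` are hypothetical, and the sketch assumes a card pulls the full 75-watt slot allowance before drawing from cables. Real cards balance their power rails according to their own board design, so treat this as an illustration of the budget, not of any actual card's behavior.

```python
# Hypothetical illustration of the PCIe power budget described above.
# Assumption: the card uses the full 75 W slot allowance first and takes
# the remainder from external cables (a simplification of real designs).
SLOT_LIMIT_W = 75          # slot power limit, unchanged in PCIe 4.0
PCIE3_TOTAL_LIMIT_W = 300  # PCIe 3.0 total: 75 W slot + 225 W from cables


def power_split(board_power_w: float) -> tuple[float, float]:
    """Return (slot_watts, external_cable_watts) for a given board power."""
    if board_power_w < 0:
        raise ValueError("board power must be non-negative")
    slot = min(board_power_w, SLOT_LIMIT_W)
    cables = board_power_w - slot
    return slot, cables


def fits_pcie3_budget(board_power_w: float) -> bool:
    """True if the card stays within the 300 W PCIe 3.0 total limit."""
    return board_power_w <= PCIE3_TOTAL_LIMIT_W


# A GTX 1080 at roughly 180 W under load: 75 W from the slot, 105 W over cables.
print(power_split(180))        # (75, 105)
print(fits_pcie3_budget(180))  # True
print(fits_pcie3_budget(350))  # False
```

Under PCIe 4.0 only the total limit changes; the slot term stays capped at 75 watts, so any extra headroom has to come from the cable side of the split.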

Having a graphics card that draws all its power through the PCIe slot would be fantastic, as it would reduce cable clutter in the case. However, the increased power flowing through the motherboard would present design challenges for motherboard manufacturers. For the foreseeable future, powerful graphics cards will still require external PCIe power cables.

https://www.techspot.com/news/66108-pcie-4-wont-support-300-watts-slot-power.html