During the last few years, progress on the CPU performance front has seemingly stopped. Granted, the latest-generation CPUs are cool, silent and power-efficient. Anecdotal evidence: my new laptop, a brand new MacBook, is about as fast as the Dell ultrabook it replaced. The problem? I bought the Dell laptop some five years ago. The Dell was thicker and noisier, and its battery never lasted longer than a few hours, but it was about as fast as the new MacBook.

Editor’s Note:

Guest author Oleg Afonin works for Russian software developer Elcomsoft. The company is well known for its password recovery tools and forensic solutions. This article was originally published on the Elcomsoft blog.

Computer games have evolved a lot in recent years. Today’s games demand faster and faster video cards while remaining relatively lax on CPU requirements. Manufacturers followed the trend, continuing the performance race on the graphics side. GPUs have picked up where CPUs left off.

Nvidia recently released their new GeForce GTX 1080 graphics card based on the new Pascal architecture. Elcomsoft Distributed Password Recovery 3.20 added support for the new architecture. What does it mean for us?

GPU Acceleration: The Present and Future of Computer Forensics

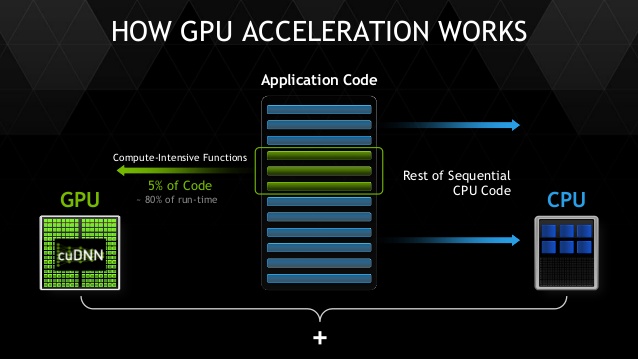

Today’s desktop video cards pack significantly more grunt than contemporary desktop CPUs. Powerful GPUs can deliver unmatched performance in massively parallel computations, offering 100 to 200 times the performance of CPUs. Still, all this performance is relatively useless when it comes to regular computing.

The several hundred individual GPU cores are built specifically for “one code, different data” scenarios, while general-purpose CPUs can run different code on each core. Since breaking passwords involves executing the same code repeatedly, just with different data (encryption keys or passwords), a large array of GPU cores makes a lot of sense.
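To make the “one code, different data” idea concrete, here is a minimal Python sketch. It is not how Elcomsoft Distributed Password Recovery works internally: the SHA-256 hash, the `candidate_passwords`, `check` and `crack` helpers, and the three-character demo password are all assumptions chosen for illustration. The point is that every candidate goes through exactly the same function, which is why the work splits so naturally across many GPU cores.

```python
import hashlib
import itertools
import string

# Assumption for illustration: lower-case letters plus digits, the same
# character set as the six-character example in this article.
ALPHABET = string.ascii_lowercase + string.digits


def candidate_passwords(length):
    """Yield every password of the given length over ALPHABET."""
    for chars in itertools.product(ALPHABET, repeat=length):
        yield "".join(chars)


def check(candidate, target_digest):
    """The 'one code': identical work for every candidate, only the data differs."""
    return hashlib.sha256(candidate.encode("utf-8")).hexdigest() == target_digest


def crack(target_digest, length):
    """Try candidates one at a time; a GPU would run many check() calls at once."""
    for candidate in candidate_passwords(length):
        if check(candidate, target_digest):
            return candidate
    return None


if __name__ == "__main__":
    # Hypothetical three-character password, short enough to finish quickly on a CPU.
    secret_digest = hashlib.sha256(b"k9z").hexdigest()
    print(crack(secret_digest, 3))
```

A real document format uses a far slower key derivation than a single SHA-256 call, which is exactly why the per-second rates for Office 2013 are so low on a CPU.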

How does it scale to real-world applications? A low-end Nvidia or AMD board will deliver 20 to 40 times the performance of the most powerful Intel CPU. A high-end accelerator such as the Nvidia GTX 1080 can crack passwords up to 250 times faster than a CPU alone.

Just how important is GPU acceleration, exactly? As an example, a common 6-character password (lower-case letters with numbers) has about 2.2 billion combinations. If that password protects a Microsoft Office 2013 document, you’ll spend 2.2 years trying all possible combinations on a CPU alone. Add a single GTX 1080 card to the same computer, and the same password will be cracked in under 83 hours. That’s 3.5 days vs. 2.2 years!
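The arithmetic behind that comparison can be sketched in a few lines of Python. The two rates are the Office 2013 benchmark figures quoted later in this article (30 passwords per second on the CPU, 7,100 on a single GTX 1080); the function names and the 365-day year are assumptions made for the sketch. Computed this way, the results come out at roughly 2.3 years and 85 hours, close to the rounded 2.2 years and 83 hours given above; the small gap presumably comes from rounding in the original figures.

```python
ALPHABET_SIZE = 26 + 10   # lower-case letters plus digits
PASSWORD_LENGTH = 6

# Office 2013 rates quoted in this article from Elcomsoft's benchmarks.
CPU_RATE = 30       # passwords per second, CPU only
GPU_RATE = 7_100    # passwords per second, single GTX 1080

SECONDS_PER_HOUR = 3_600
SECONDS_PER_YEAR = 365 * 24 * SECONDS_PER_HOUR  # assumption: 365-day year


def keyspace(alphabet_size, length):
    """Number of distinct passwords of exactly this length."""
    return alphabet_size ** length


def exhaustive_seconds(total, rate):
    """Worst-case time to try every combination at a fixed rate."""
    return total / rate


if __name__ == "__main__":
    total = keyspace(ALPHABET_SIZE, PASSWORD_LENGTH)
    cpu_years = exhaustive_seconds(total, CPU_RATE) / SECONDS_PER_YEAR
    gpu_hours = exhaustive_seconds(total, GPU_RATE) / SECONDS_PER_HOUR
    print(f"{total:,} combinations")
    print(f"CPU only: {cpu_years:.1f} years")
    print(f"GTX 1080: {gpu_hours:.0f} hours")
```

These are worst-case times for exhausting the whole keyspace; on average the password turns up about halfway through.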

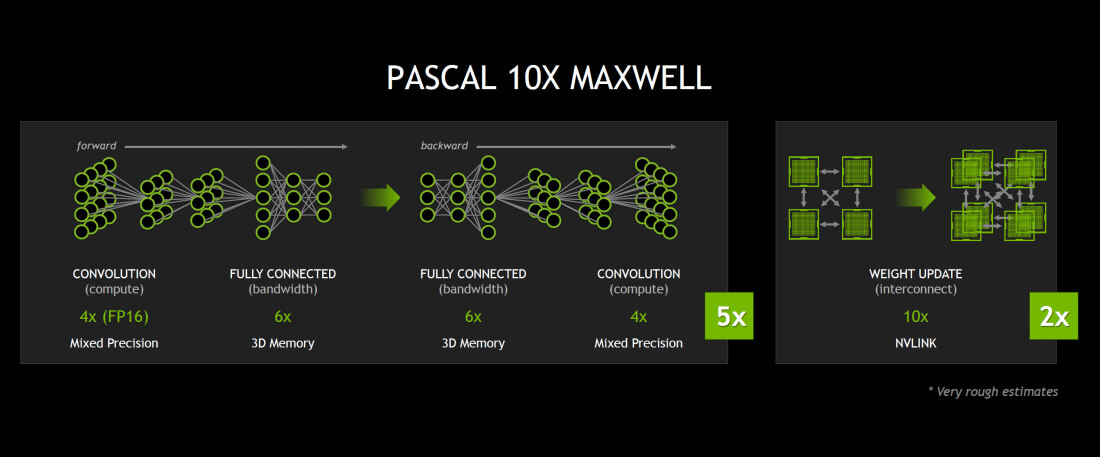

Nvidia Pascal Architecture

Nvidia’s latest GPU architecture gives a significant performance boost compared to Nvidia’s previous flagship. The 21 half-precision teraflops Nvidia quotes for Pascal apply to its Tesla P100 accelerator rather than to the GTX 1080, but for breaking passwords GTX 1080 boards are still 1.5 to 2 times faster than GTX 980 units.

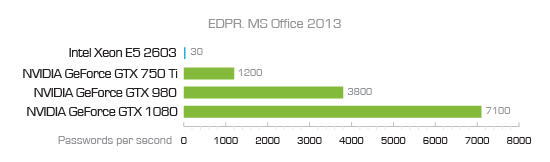

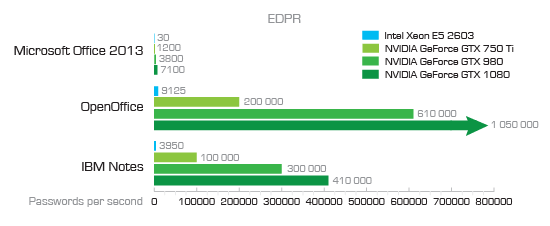

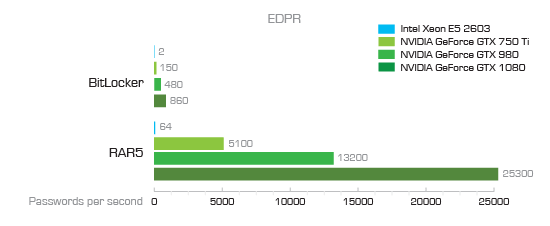

According to Elcomsoft’s internal benchmarks, Elcomsoft Distributed Password Recovery can try 7,100 passwords per second for Office 2013 documents using a single Nvidia GTX 1080 board, compared to 3,800 passwords per second on an Nvidia GTX 980. When recovering RAR 5 passwords, a single GTX 1080 delivers 25,000 passwords per second, compared to 13,000 passwords per second on a GTX 980.

Can’t see the numbers for CPU-based benchmarks without a magnifying glass? In case you are wondering, we were only able to try 30 (yes, thirty) MS Office 2013 passwords per second on an Intel Xeon E5-2603 without GPU acceleration. Compare that to 7,100 passwords per second using a single Nvidia GTX 1080 board!
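For readers who prefer ratios to bar charts, the benchmark figures above can be turned into speedup factors with a short Python sketch. The rates are the ones quoted in this article; the `BENCHMARKS` table and `speedup` helper are names made up for the illustration.

```python
# Passwords per second, as quoted in this article from Elcomsoft's benchmarks.
BENCHMARKS = {
    "Office 2013": {"GTX 1080": 7_100, "GTX 980": 3_800, "Xeon E5-2603": 30},
    "RAR 5": {"GTX 1080": 25_000, "GTX 980": 13_000},
}


def speedup(file_format, fast, slow):
    """How many times faster `fast` is than `slow` for the given format."""
    rates = BENCHMARKS[file_format]
    return rates[fast] / rates[slow]


if __name__ == "__main__":
    for file_format in BENCHMARKS:
        ratio = speedup(file_format, "GTX 1080", "GTX 980")
        print(f"{file_format}: GTX 1080 is {ratio:.2f}x a GTX 980")
    cpu_ratio = speedup("Office 2013", "GTX 1080", "Xeon E5-2603")
    print(f"Office 2013: GTX 1080 is {cpu_ratio:.0f}x the CPU")
```

The generation-over-generation ratios work out to a little under 2x for both formats, in line with the 1.5 to 2 times range mentioned earlier, and the GPU-to-CPU ratio for Office 2013 comes to roughly 237x.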

Nvidia Pascal is a major breakthrough in GPU computations. If you need a reliable powerhouse to break passwords faster, consider adding a GTX 1080 board to your workstation.

What if your computer already has a GTX 980 installed? If you have a free PCIe slot and sufficient cooling, and if your computer’s power supply can deliver enough juice for an extra GTX 1080 board, then you can just add the new board without removing the old one. Elcomsoft Distributed Password Recovery will use both GPUs together for even faster attacks.

Does it make sense to keep a GTX 980 alongside the new GTX 1080? By keeping the old card together with the new GTX 1080, you’ll get an additional performance boost of about 20 to 30 percent. Whether this extra performance is worth the increased power consumption and excess heat is debatable, but if your power supply and cooling can reliably manage both cards working at their maximum performance, by all means go for it!

https://www.techspot.com/news/66034-cracking-passwords-using-nvidia-latest-gtx-1080-gpu.html