Forward-looking: Details about the upcoming RTX 4070 have seemingly been leaked by Gigabyte. The leak also includes some information on the RTX 4060, though what it reveals has left many users somewhat disappointed.

Gigabyte Control Center is a utility that allows users to manage their various Gigabyte hardware. Among the more prominent products that take advantage of this software are the company's graphics cards. Gigabyte is usually quick to update the program to enable support for the latest GPUs, but this time, maybe it was too quick.

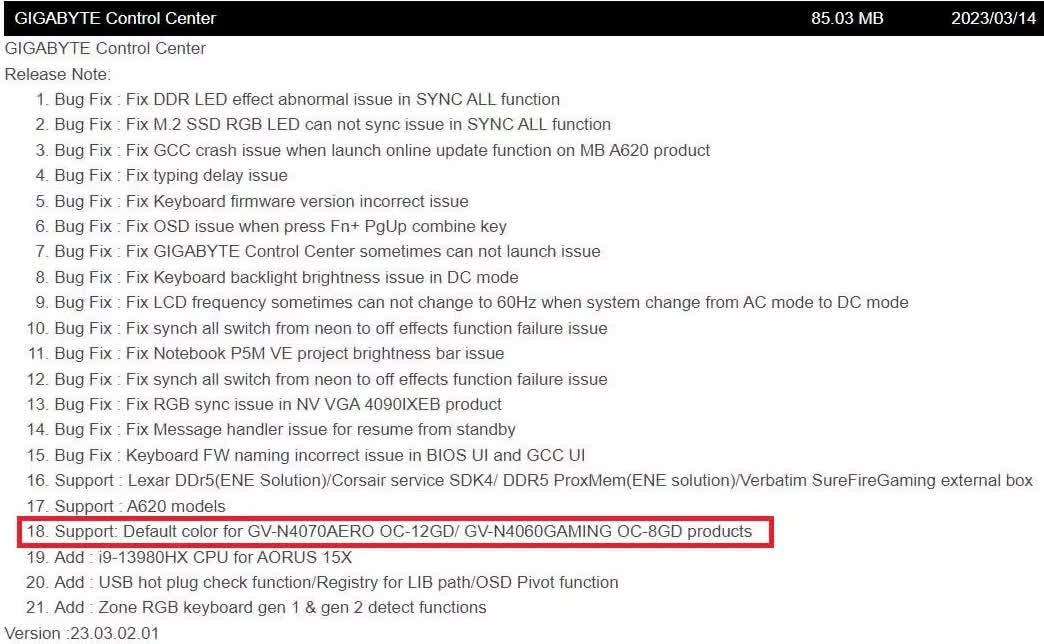

For Control Center's latest update, version 23.03.02.01, the release notes had your standard bug fixes: clearing issues with RGB, settings not applying, etc. However, at the bottom of the page, there was one more entry in the "Support" category of changes. According to Gigabyte, this version added support for the RTX 4070 Aero OC 12 GB (GV-N4070AERO OC-12GD) and the RTX 4060 Gaming OC 8 GB (GV-N4060GAMING OC-8GD).

Gigabyte clearly made a mistake here, as the line denoting support for these new graphics cards was recently removed. However, this single accidental note appears to have confirmed small bits of information regarding Nvidia's next two Ada Lovelace GPUs.

Details about the soon-to-launch RTX 4070 were bound to leak eventually, and Gigabyte appears to have leaked the memory capacity of the card. The RTX 4070 will have 12 GB of (likely GDDR6X) memory, an improvement over the RTX 3070, which featured only 8 GB of GDDR6. This means the RTX 4070 will match the memory capacity of its bigger brother, the RTX 4070 Ti.

More interesting, but also disappointing, is the leaked information about the RTX 4060. Last generation, the RTX 3060 featured 12 GB of GDDR6 memory, more than almost every other card in the Ampere family. This time around, Nvidia appears set to be very stingy with memory. Gigabyte's leak indicates the RTX 4060 will include only 8 GB of memory, 4 GB less than its predecessor.

While the RTX 4070's increased memory capacity is a welcome addition, it is a bit disappointing to see that Nvidia's upcoming RTX 4060 will actually have less memory than its predecessor. Because of this change, the RTX 4060 could be a risky card for gamers who intend to play at 4K, simply because of memory constraints.

https://www.techspot.com/news/98021-gigabyte-accidentally-leaks-12gb-rtx-4070-8gb-rtx.html