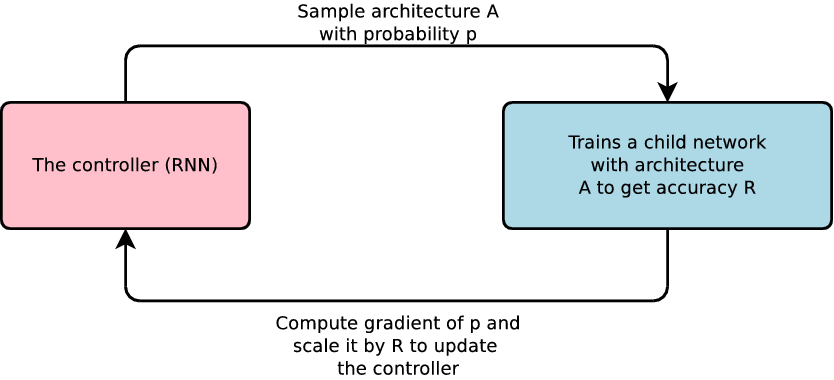

Google's Brain team of researchers has been hard at work studying artificial intelligence systems. Back in May, they developed AutoML, an AI system that can in turn generate its own child AIs. Their next big task was to benchmark these automatically generated AIs against more traditional human-made ones.

AutoML uses reinforcement learning together with neural networks to develop its child AIs. One such child, NASNet, was developed and trained to recognize objects in real-time video streams. When benchmarked against industry-standard validation sets, it was found to be 82.7% accurate at recognizing known objects. This is 1.2% higher than anything seen before, and the system is also 4% more efficient than the previous best algorithms.
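To make the idea of one AI designing another a little more concrete, here is a minimal sketch of reinforcement-learning-driven architecture search. It is not Google's implementation: the search space, the `mock_accuracy` reward, and the `search` function are all hypothetical, and the controller here is just an independent softmax distribution per design decision rather than a neural network. The loop is the part that matters: sample a child design, score it, and nudge the controller toward designs that scored above average.

```python
import math
import random

# Hypothetical search space: each design decision has a few discrete options.
SEARCH_SPACE = {
    "kernel_size": [1, 3, 5],
    "num_filters": [16, 32, 64],
    "num_layers": [2, 4, 6],
}


def softmax(logits):
    """Turn raw preference scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mock_accuracy(arch):
    """Assumed stand-in for training a child network and measuring its
    validation accuracy. A real system would train a model here."""
    score = 0.5
    score += 0.15 if arch["kernel_size"] == 3 else 0.0
    score += 0.15 if arch["num_filters"] == 64 else 0.0
    score += 0.15 if arch["num_layers"] == 4 else 0.0
    return score


def search(steps=3000, lr=0.1, seed=0):
    """Toy controller trained with a REINFORCE-style policy-gradient update."""
    rng = random.Random(seed)
    logits = {name: [0.0] * len(opts) for name, opts in SEARCH_SPACE.items()}
    baseline = None  # moving average of rewards, used to reduce variance

    for _ in range(steps):
        # 1. The controller samples one child design.
        picks = {}
        for name, opts in SEARCH_SPACE.items():
            probs = softmax(logits[name])
            picks[name] = rng.choices(range(len(opts)), weights=probs)[0]
        arch = {name: SEARCH_SPACE[name][i] for name, i in picks.items()}

        # 2. The child design is scored (here: by the mock reward).
        reward = mock_accuracy(arch)
        if baseline is None:
            baseline = reward
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward

        # 3. Choices that beat the running average become more likely.
        #    For a softmax policy, d log p(i) / d logit_j = 1[j == i] - p_j.
        for name, i in picks.items():
            probs = softmax(logits[name])
            for j, p in enumerate(probs):
                grad = (1.0 if j == i else 0.0) - p
                logits[name][j] += lr * advantage * grad

    # Report the option the controller now prefers for each decision.
    return {
        name: opts[max(range(len(opts)), key=lambda j: logits[name][j])]
        for name, opts in SEARCH_SPACE.items()
    }


if __name__ == "__main__":
    print(search())
```

In this toy setup the controller should drift toward the options that `mock_accuracy` rewards. In a real system, each reward would require training and validating an actual child network, which is where most of the computing cost of this kind of search comes from.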

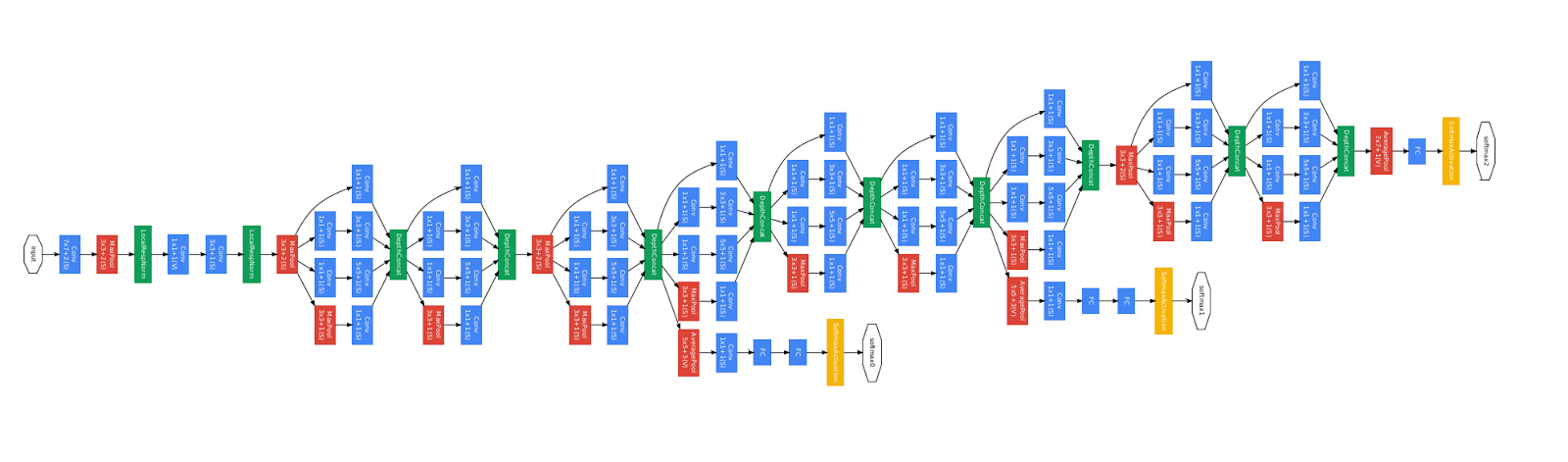

The "GoogleNet architecture" took many years of experimentation and refinement, according to the company.

These efficient and accurate computer vision algorithms are becoming more valuable as the technology advances. They could allow self-driving cars to better avoid obstacles or help visually impaired people regain some sight.

Creating machine learning and artificial intelligence systems requires massive datasets and powerful GPU arrays to train the networks. By automating the creation of these networks, AutoML can help bring ML and AI to a wider audience than just computer scientists.

There are still some privacy concerns related to inherent biases in the system that are passed down to new generations of AIs. This is especially important for applications like facial recognition and security systems. That being said, this is still great news and a big step forward for the AI community.

https://www.techspot.com/news/72165-google-ai-made-own-ai-better-than-anything.html