In context: Intel CEO Pat Gelsinger has come out with the bold statement that the industry is better off focusing on inference rather than Nvidia's CUDA, because inference is resource-efficient and adapts to changing data without the need to retrain a model, and because Nvidia's moat is "shallow." But is he right? CUDA is currently the industry standard and shows little sign of being dislodged from its perch.

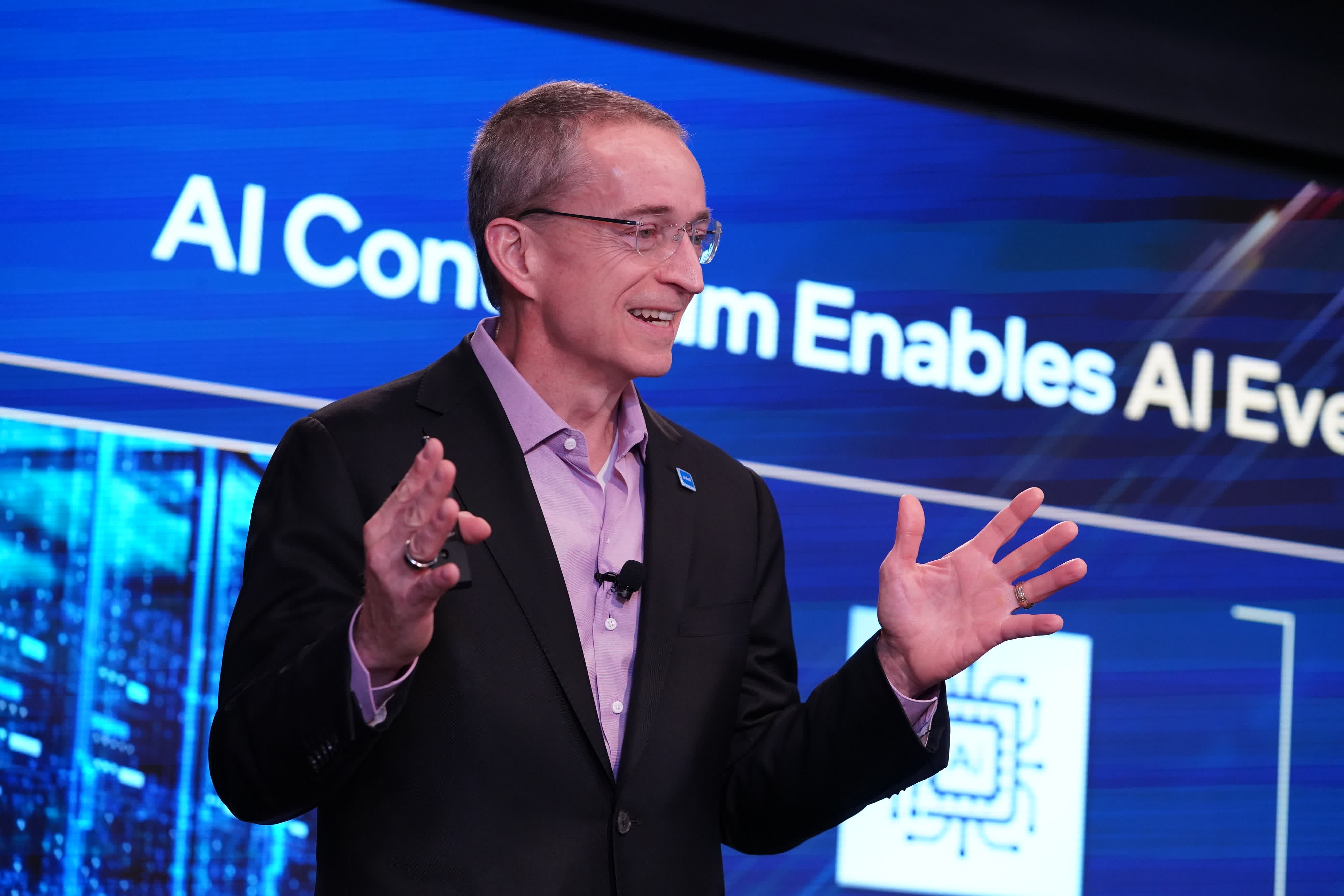

Intel rolled out a portfolio of AI products aimed at the data center, cloud, network, edge and PC at its AI Everywhere event in New York City last week. "Intel is on a mission to bring AI everywhere through exceptionally engineered platforms, secure solutions and support for open ecosystems," CEO Pat Gelsinger said, pointing to the day's launch of Intel Core Ultra mobile chips and 5th-gen Xeon CPUs for the enterprise.

The products were duly noted by press, investors and customers, but what also caught their attention were Gelsinger's comments about Nvidia's CUDA technology and his expectation that it will eventually fade into obscurity.

"You know, the entire industry is motivated to eliminate the CUDA market," Gelsinger said, citing MLIR, Google, and OpenAI as moving to a "Pythonic programming layer" to make AI training more open.

Ultimately, Gelsinger said, inference technology will be more important than training for AI as the CUDA moat is "shallow and small." The industry wants a broader set of technologies for training, innovation and data science, he continued. With inferencing, he argued, there is no CUDA dependency once the model has been trained, and it then becomes all about whether a company can run that model well.
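To make the point about inference being decoupled from the training stack concrete, here is a minimal sketch in plain Python. It is not anything Intel or Nvidia ships: the weights are invented numbers standing in for a trained model, and `predict` is a hypothetical helper. The idea it illustrates is that once trained weights are exported as plain numbers, running the model is ordinary arithmetic that does not depend on the framework or hardware used for training.

```python
import math

# Hypothetical weights for a tiny network: 2 inputs, 2 hidden units, 1 output.
# In practice these would be exported from whatever stack did the training;
# the values here are invented purely for illustration.
W1 = [[0.5, -0.25], [0.75, 0.1]]  # one row of weights per hidden unit
B1 = [0.0, 0.1]                   # hidden-layer biases
W2 = [1.0, -1.0]                  # output-layer weights
B2 = 0.05                         # output-layer bias


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(features):
    """Forward pass only: no gradients, no training framework, no GPU."""
    hidden = [
        relu(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(W1, B1)
    ]
    logit = sum(w * h for w, h in zip(W2, hidden)) + B2
    return sigmoid(logit)


if __name__ == "__main__":
    print(predict([1.0, 2.0]))
```

Real deployments are far larger and typically rely on an optimized runtime, but the shape of the work is the same: a fixed set of weights and a forward pass, which is why the competition at this stage shifts to who can run the model well.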


An uncharitable reading of Gelsinger's comments is that he played down AI model training because that is where Intel lags. His message was that inference, compared to model training, is much more resource-efficient and can adapt to rapidly changing data without the need to retrain a model.

However, his remarks make it clear that Nvidia has made tremendous progress in the AI market and has become the player to beat. Last month the company reported third-quarter revenue of $18.12 billion, up 206% from a year ago and up 34% from the previous quarter. CEO Jensen Huang attributed the increases to a broad industry platform transition from general-purpose to accelerated computing and generative AI. Nvidia GPUs, CPUs, networking, AI software and services are all in "full throttle," he said.

Whether Gelsinger's predictions about CUDA come true remains to be seen, but right now the technology is arguably the market standard.

In the meantime, Intel is trotting out examples of customers using inference to solve their computing problems. One is Mor Miller, VP of Development at Bufferzone (video below), who explains that latency, privacy and cost are some of the challenges the company has been experiencing when running AI services in the cloud. He says Bufferzone has been working with Intel to develop a new AI inference solution that addresses these concerns.

https://www.techspot.com/news/101233-intel-ceo-argues-inference-better-industry-nvidia-cuda.html