Bottom line: Oracle is best known for its database and enterprise products. However, its founder and chairman, Larry Ellison, recently revealed the company's plans to expand into the fast-growing field of cloud-based generative AI. The move will require a substantial budget for chip procurement, with a large share going to Nvidia GPUs.

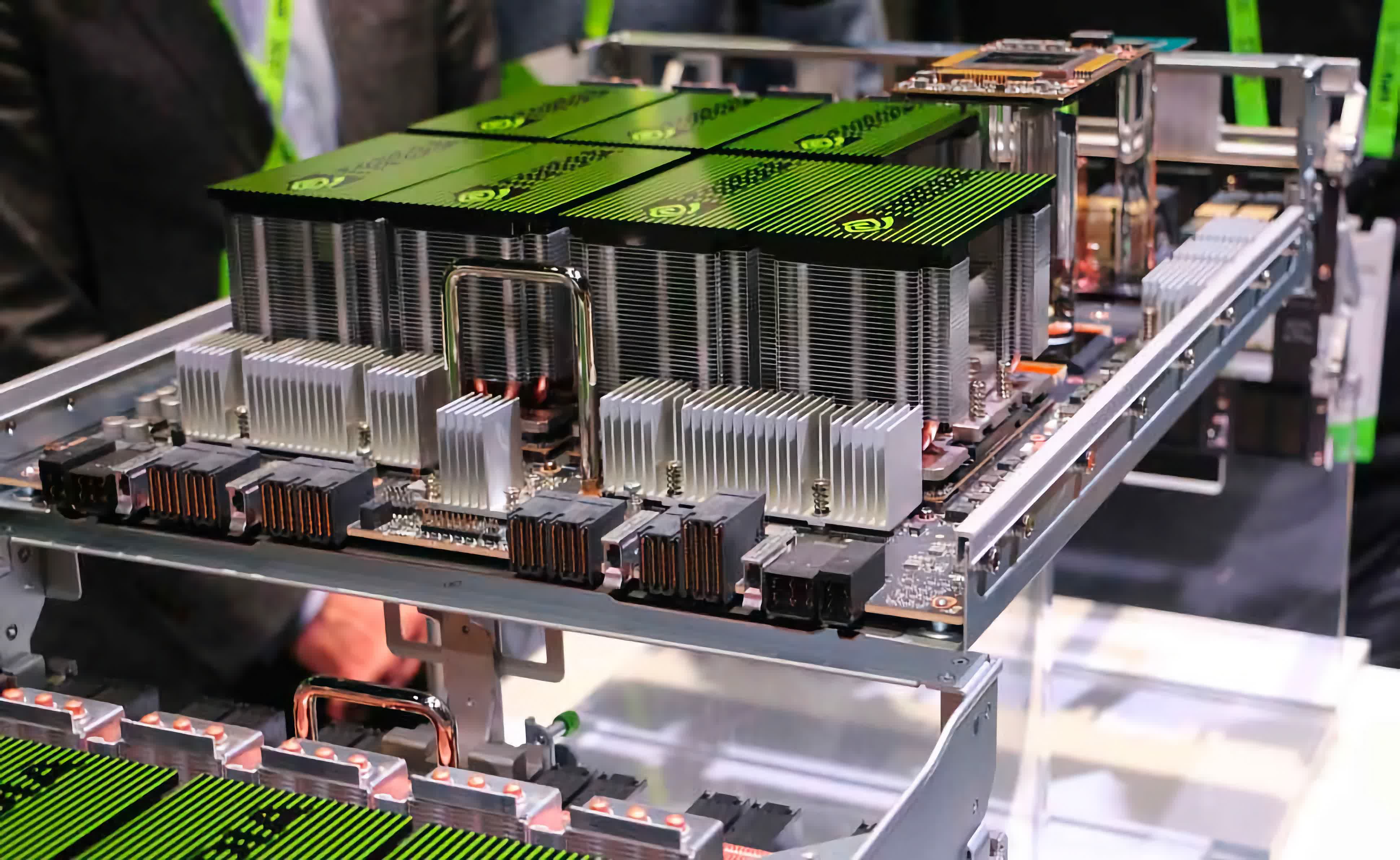

Oracle will spend billions of dollars on Nvidia GPUs this year alone, driven by rising demand for generative AI products, which require considerable hardware and financial investment both to train models and to serve them to users.

During a recent Ampere event, Larry Ellison declared that Oracle is ready to join the AI "gold rush", even though the AI cloud market is currently dominated by larger competitors.

Machine learning algorithms, which underpin the most popular AI platforms, require vast amounts of hardware and computing resources, both for training and for responding to user prompts. To compete with cloud giants like Microsoft, Amazon (AWS), and Google, Oracle is investing in high-speed networks designed to move data faster.

Ellison said that Oracle plans to buy computing units (GPUs and CPUs) from three different companies. A significant portion of this investment will go to Nvidia GPUs, since graphics chips from Team Green have been designed with AI workloads in mind for quite some time.

Ellison, who ranks among the wealthiest people in the world, also disclosed that Oracle plans to purchase a significant number of CPUs from AMD and Ampere Computing. Oracle has made a substantial investment in Ampere, a fabless company that designs Arm-based server chips, manufactured by TSMC, for cloud-native infrastructure.

According to Ellison, Oracle will spend "three times" as much money on AMD and Ampere CPUs as on Nvidia GPUs. He also highlighted the need for increased funding for "conventional compute".

Oracle's hefty bet on generative AI is evident: the company is not only increasing its infrastructure investments but also securing deals with third-party companies. Last month, Oracle signed an agreement with Cohere, an AI startup founded by former Google employees. Under the deal, Cohere will run its AI software in Oracle data centers, using up to 16,000 Nvidia GPUs.

https://www.techspot.com/news/99260-oracle-plans-spend-billions-nvidia-gpus.html