Rumor mill: Microsoft has already invested billions of dollars in OpenAI. With this new project, it aims to shorten the time it takes to train AI models on its servers. A new network card that rivals the functionality of Nvidia's ConnectX-7 would also deliver a performance boost through optimizations tailored to Microsoft's hyperscale data centers.

The Information has learned that Microsoft is developing a new network card that could improve the performance of its Maia AI server chip. It would also further Microsoft's goal of reducing its dependence on Nvidia: the new card is expected to be similar to Team Green's ConnectX-7, which supports up to 400 Gb Ethernet. TechPowerUp speculates that, with availability still so far off, the final design could aim for even higher Ethernet bandwidth, such as 800 GbE.

Microsoft's goal for its new endeavor is to shorten the time it takes for OpenAI to train its models on Microsoft servers and make the process less expensive, according to the report. There should be other uses as well, including providing a performance uplift from specific optimizations needed in its hyperscale data centers, TechPowerUp says.

Microsoft has invested billions of dollars in OpenAI, the artificial intelligence firm behind the wildly popular ChatGPT service and other projects like DALL-E and GPT-3, and has incorporated its technology in many Microsoft products.

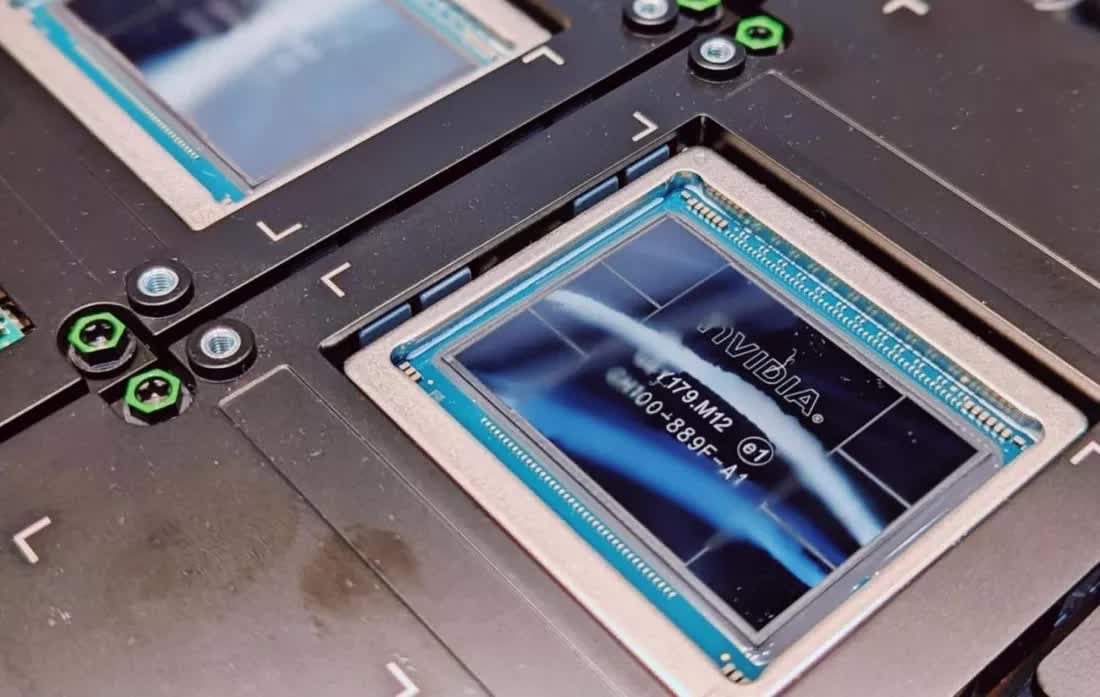

At the same time, Microsoft is determined to reduce its reliance on Nvidia. Last year, it announced it had built two homegrown chips to handle AI and general computing workloads in the Azure cloud. One of them was the Microsoft Azure Maia 100 AI Accelerator, designed for running large language models such as GPT-3.5 Turbo and GPT-4, which arguably could go up against Nvidia's H100 AI Superchip. But taking on Nvidia will be difficult even for Microsoft. Nvidia's early adoption of AI technology and existing GPU capabilities have positioned it as the clear leader, holding more than 70% of the existing $150 billion AI market.

Still, Microsoft is throwing significant weight behind this project, even though the network card could take a year or more to come to market. Pradeep Sindhu has been tapped to spearhead Microsoft's network card initiative. Sindhu co-founded Juniper Networks as well as a server chip startup called Fungible, which Microsoft acquired last year. Sindhu had better hurry, though: Nvidia continues to make gains in this space. Late last year it introduced a new AI superchip, the H200 Tensor Core GPU, which allows for greater density and higher memory bandwidth, both crucial factors in improving the speed of services like ChatGPT and Google Bard.

https://www.techspot.com/news/101960-microsoft-developing-network-card-improves-ai-performance.html