Uncannily Fast: Generative AI services can produce a high-quality visual patchwork but are usually quite sluggish. Researchers from MIT and Adobe have developed a potential solution to this time-consuming issue: a new, super-fast image generation method with minimal impact on quality. The technique spits out about 20 images per second.

Image generation AI typically relies on a process known as diffusion, which starts from random noise and refines it over many sampling steps to reach the final, hopefully "realistic" result. According to the researchers, diffusion models can generate high-quality images, but they require dozens of forward passes through the network to do so.
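
To make the cost of "dozens of forward passes" concrete, here is a toy sketch. Everything in it is an illustrative assumption rather than anything from the MIT/Adobe work: the "images" are single numbers drawn from a Gaussian, the "network" is a closed-form denoiser, and sampling uses plain Euler steps along the probability-flow ODE with a hypothetical 50-step noise schedule.

```python
import math

# Toy setup (illustrative assumptions only): "images" are single numbers drawn
# from N(MU, S^2), and noise is added as x_t = x0 + t * eps.
MU, S = 2.0, 0.5
T_MAX, T_MIN = 80.0, 0.002

forward_passes = 0  # counts how many times the "network" is evaluated


def denoiser(x, t):
    """Stand-in for the neural network: the exact E[x0 | x_t] for Gaussian data."""
    global forward_passes
    forward_passes += 1
    return MU + (S**2 / (S**2 + t**2)) * (x - MU)


def multi_step_sample(x, steps=50):
    """Euler steps along the probability-flow ODE dx/dt = (x - D(x, t)) / t."""
    # Geometric noise schedule from T_MAX down to T_MIN, then a final step to t = 0.
    ts = [T_MAX * (T_MIN / T_MAX) ** (i / (steps - 1)) for i in range(steps)]
    ts.append(0.0)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        slope = (x - denoiser(x, t_cur)) / t_cur  # one network call per step
        x = x + (t_next - t_cur) * slope
    return x


def one_step_generate(x):
    """Hypothetical one-step generator: maps pure noise straight to a sample.

    In this Gaussian toy the exact ODE solution has a closed form; a distilled
    one-step model is a network trained to do this job in a single evaluation.
    """
    return MU + (x - MU) * S / math.sqrt(S**2 + T_MAX**2)


# A starting point one standard deviation above the mean of the fully noised data.
noise = MU + math.sqrt(S**2 + T_MAX**2) * 1.0
forward_passes = 0
slow = multi_step_sample(noise)
print(f"multi-step: {slow:.3f} after {forward_passes} network calls")
print(f"one-step:   {one_step_generate(noise):.3f} after a single evaluation")
```

The multi-step loop calls the denoiser 50 times to land close to the value the one-step map reaches in one evaluation; shrinking that count from dozens to one is the goal of one-step distillation.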

Adobe Research and MIT experts are now introducing a technique called "distribution matching distillation" (DMD). The procedure distills a multi-step diffusion model into a one-step image generator. The resulting model can generate images comparable to those of "traditional" diffusion models like Stable Diffusion 1.5, but more than an order of magnitude faster.

"Our core idea is training two diffusion models to estimate not only the score function of the target real distribution, but also that of the fake distribution," the team's study reads.
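
The idea in that quote can be illustrated with a deliberately tiny toy. Roughly, lowering the KL divergence between the generator's own ("fake") distribution and the real one means pushing each generated sample along `s_real(x) - s_fake(x)`, where a score `s(x)` is the gradient of the log-density, and then back-propagating that push into the generator's parameters. The sketch below is an assumption-laden illustration, not the authors' code: the real score is known in closed form instead of coming from a pretrained diffusion model, the fake score comes from a Gaussian fitted to the generator's latest samples instead of a second diffusion model, the "generator" is just `x = a * z + b`, and the added noise that diffusion-based score estimates normally work with is skipped entirely. The function name `train_dmd_toy` and all hyperparameters are hypothetical.

```python
import random
import statistics


def train_dmd_toy(mu=2.0, sigma=0.5, steps=1000, batch=256, lr=0.05, seed=0):
    """Toy 1-D distribution matching (hypothetical illustration).

    Real distribution: N(mu, sigma^2), whose score is known in closed form here.
    Generator: x = a * z + b with z ~ N(0, 1).
    Fake score: re-estimated each step from a Gaussian fit to generator samples,
    standing in for a second, continually trained diffusion model.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters, deliberately wrong at the start
    for _ in range(steps):
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        x = [a * zi + b for zi in z]

        # Fit the "fake" distribution to the generator's current output.
        m = statistics.fmean(x)
        v = statistics.pvariance(x, m)

        # Score difference s_fake(x) - s_real(x), with s(x) = d/dx log p(x).
        diff = [-(xi - m) / v + (xi - mu) / sigma**2 for xi in x]

        # Chain rule through x = a * z + b: dx/da = z and dx/db = 1.
        grad_a = statistics.fmean([d * zi for d, zi in zip(diff, z)])
        grad_b = statistics.fmean(diff)

        # Gradient descent on the KL divergence estimate.
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


a, b = train_dmd_toy()
print(f"generator now samples roughly N({b:.2f}, {a:.2f}^2); target was N(2.00, 0.50^2)")
```

Neither score is ever used on its own; only their difference drives the update, which is why the approach needs an estimate of both the real and the fake distribution.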

The researchers claim their model can generate 20 images per second on modern GPU hardware.

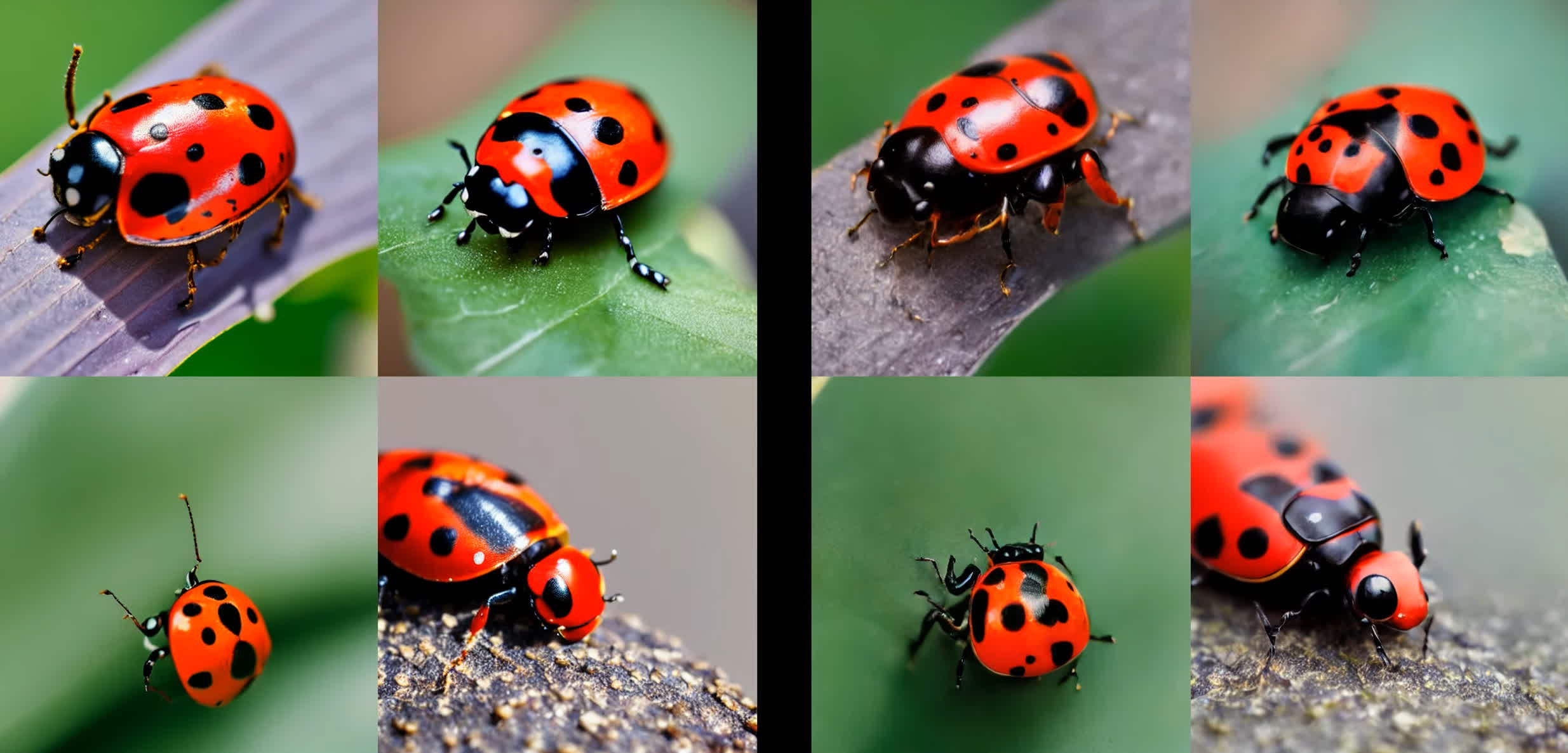

The short video above compares DMD's image generation with Stable Diffusion 1.5. While SD needs 1.4 seconds per image, DMD can render a similar image in a fraction of a second. There is a trade-off between quality and speed, but the final results stay within acceptable limits for the average user.
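
Putting the article's two figures together gives a rough sense of the gap. This is back-of-the-envelope arithmetic only, under the assumption that the numbers are comparable: the 1.4-second figure comes from the video comparison and the 20-images-per-second figure from the researchers' claim, so the two may not have been measured on identical hardware.

```python
# Back-of-the-envelope comparison using the two figures quoted in the article.
sd15_seconds_per_image = 1.4   # Stable Diffusion 1.5 in the video comparison
dmd_images_per_second = 20     # researchers' claimed DMD throughput

dmd_seconds_per_image = 1 / dmd_images_per_second          # 0.05 s, i.e. 50 ms
speedup = sd15_seconds_per_image / dmd_seconds_per_image   # roughly 28x

print(f"DMD: {dmd_seconds_per_image * 1000:.0f} ms per image")
print(f"Speedup over SD 1.5: about {speedup:.0f}x")
```

That works out to roughly 50 ms per DMD image, or about 28 times less time per image than Stable Diffusion 1.5 in this comparison.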

The team's study shows additional examples of images produced with DMD, placing Stable Diffusion and DMD results side by side along with the all-important text prompt that generated them. Subjects include a dog framed through virtual DSLR lenses, the Dolomites mountain range, a magical deer in a forest, a 3D render of a baby parrot, unicorns, beards, cars, cats, and even more dogs.

Distribution matching distillation is not the first single-step method proposed for fast AI image generation. Stability AI developed a technique known as Adversarial Diffusion Distillation (ADD) to generate 1-megapixel images in real time. The company trained its SDXL Turbo model with ADD, generating an image in just 207 ms on a single Nvidia A100 GPU. Like MIT and Adobe's DMD, Stability's ADD distills a multi-step diffusion model into a much faster generator that needs as few as one sampling step.

https://www.techspot.com/news/102404-mit-researchers-develop-new-method-single-pass-ai.html