Rumor mill: A leaker with a strong track record has added to rumors that Nvidia's next generation of gaming graphics cards will use TSMC's 5nm (N5) process node. The cards may also arrive a bit earlier than previously expected.

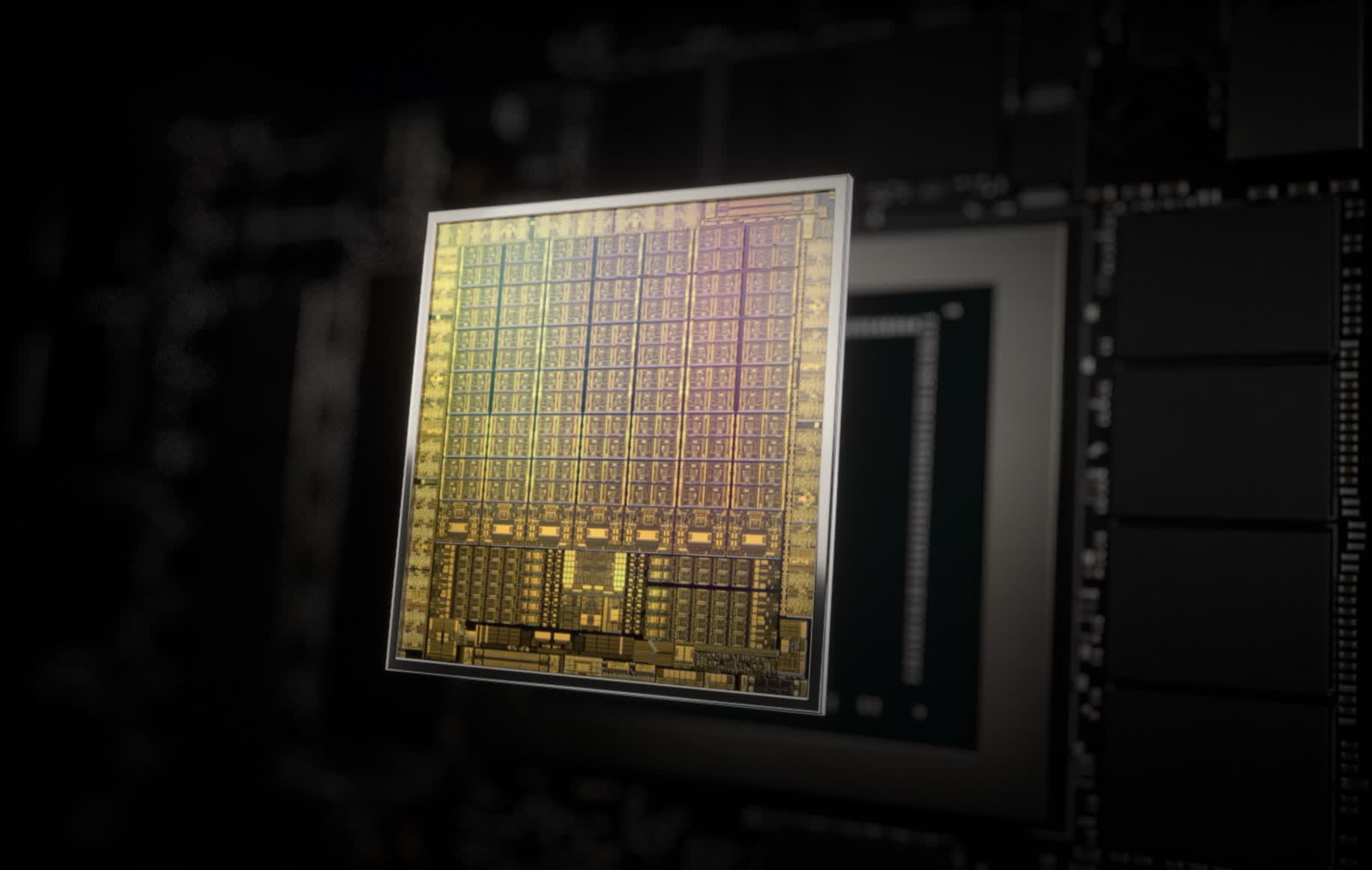

Kopite7kimi, who knew details of Nvidia's current Ampere graphics card lineup before it launched last year, asserted last week that Nvidia's next series, probably the RTX 40 cards, would use TSMC's N5 process node. The Ampere series uses Samsung's 8nm process. If the rumor holds, this would be the second consecutive generation in which Nvidia has switched between the two foundries.

Fellow leaker Greymon55 made the same claim last month, so Kopite's statement lends more weight to the existing rumors. Kopite also said the RTX 40 series, supposedly built on an architecture called "Ada Lovelace," should arrive a bit earlier than previously expected. Earlier expectations put the launch around the end of 2022. Nvidia tends to launch a new generation of graphics cards roughly every two years, so the latest rumors could mean a launch as early as Q3 2022.

of course TSMC N5

— kopite7kimi (@kopite7kimi) August 26, 2021

Both leakers previously suggested that the top-of-the-line RTX 40 series cards, like the rumored RTX 3090 Super, would draw over 400W, setting a new high in a concerning upward trend in power consumption.

The flagship of the RTX 40 series will supposedly be based on the AD102 GPU, which, according to estimates drawn from Kopite's leaks about its structure, might have as many as 18,432 CUDA cores, compared to the RTX 3090's 10,496 (about 76% more). Based on these rumors, the flagship RTX 40 series card may double its predecessor's performance.

https://www.techspot.com/news/91032-more-rumors-suggest-nvidia-lovelace-use-tsmc-n5.html