Why it matters: ChatGPT is about to get a "brain" upgrade. A lack of information beyond September 2021 has long hindered the bot. OpenAI has plugins in development that will give it access to more current data and allow it to interact with websites through APIs. No date is set for a stable release, but OpenAI is already taking reservations to try it, so it shouldn't be too long before a wide release.

OpenAI announced it is adding plugins to ChatGPT. The plugins will give several new capabilities to the chatbot and to developers building on OpenAI's models. They are in a limited testing phase for now, but anybody can get on the waitlist by signing up for or logging into an OpenAI account.

One of the new features allows ChatGPT to access the web when formulating a response. The software's accuracy has been questioned several times since its release. Part of its problem is that its training data only goes up to September 2021. So the bot has difficulty responding to questions requiring knowledge of current events.

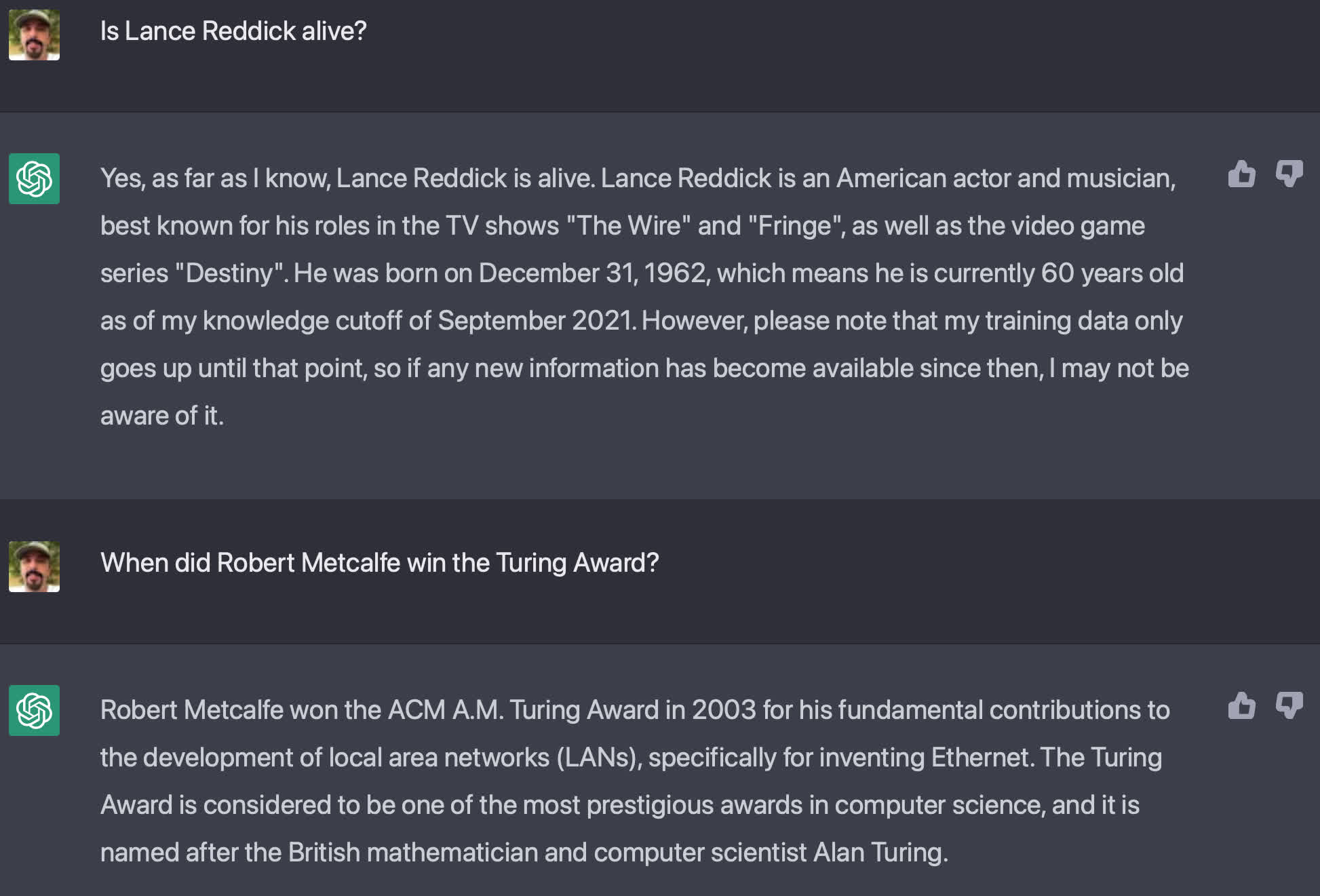

For example, if you ask it whether someone who died this year is still alive, it will tell you that, as far as it knows, that person is still living. A more oblique query that requires current knowledge produces results that are not accurate (pictured).

Note that ChatGPT claims that Metcalfe won the Turing Award in 2003 (instead of 2023), even though it should know Alan Kay won in 2003 for his work in object-oriented programming languages. This type of reply is known as a "hallucination." Allowing ChatGPT to look up current information should make it much more functional and reliable when replying to specific time-sensitive queries.

In addition to browsing the internet, plugins will allow developers to create interfaces for the AI to interact with on their websites. OpenAI President and Co-founder Greg Brockman demonstrated how to look up a recipe and purchase its ingredients using ChatGPT. It does this by leveraging APIs to act "on behalf of the user."
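To make the idea of acting "on behalf of the user" more concrete, here is a minimal sketch of how a site might expose a few operations that a chatbot can call. It is not OpenAI's plugin protocol: the names `find_recipe`, `add_to_cart`, `handle_call`, the call format, and the sample recipe data are all hypothetical, and a real plugin would sit behind HTTP endpoints rather than in-memory functions.

```python
import json

# Hypothetical in-memory stand-in for a website's API. A real plugin would
# expose HTTP endpoints; these names and data are illustrative only.
RECIPES = {"pancakes": ["flour", "eggs", "milk"]}
CART = []


def find_recipe(name):
    """Return the ingredient list for a recipe, or an empty list if unknown."""
    return RECIPES.get(name.lower(), [])


def add_to_cart(items):
    """Add items to the user's cart and return a copy of the cart contents."""
    CART.extend(items)
    return list(CART)


# The only operations the site chooses to expose to the chatbot.
OPERATIONS = {"find_recipe": find_recipe, "add_to_cart": add_to_cart}


def handle_call(raw_call):
    """Run one structured call of the assumed form
    {"operation": "<name>", "arguments": {...}} and return a result dict."""
    call = json.loads(raw_call)
    operation = OPERATIONS.get(call["operation"])
    if operation is None:
        # Anything the site did not expose is refused.
        return {"error": "unknown operation"}
    return {"result": operation(**call["arguments"])}


# The chatbot first looks up the recipe, then adds its ingredients to the cart.
ingredients = handle_call(
    '{"operation": "find_recipe", "arguments": {"name": "pancakes"}}'
)["result"]
cart = handle_call(
    json.dumps({"operation": "add_to_cart", "arguments": {"items": ingredients}})
)["result"]
print(cart)  # ['flour', 'eggs', 'milk']
```

In this sketch the bot can only reach what the site lists in `OPERATIONS`, so a request for anything else comes back as an error instead of being executed.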

We've added initial support for ChatGPT plugins --- a protocol for developers to build tools for ChatGPT, with safety as a core design principle. Deploying iteratively (starting with a small number of users & developers) to learn from contact with reality: https://t.co/ySek2oevod pic.twitter.com/S61MTpddOV

— Greg Brockman (@gdb) March 23, 2023

Whether allowing an AI to make decisions for you is a good idea is debatable. It will depend heavily on the extent to which it can act. Having it place products into a shopping cart and then hand off to the user to complete the sale is relatively benign. Having it interact with your banking or health care provider's website, not so much. However, deciding how far a plugin can act seems to be the burden of developers and not OpenAI.

The beta is on a slow roll. Testing is limited to a few developers and ChatGPT Plus subscribers for now. However, OpenAI has started a queue for the general public. It will gradually add users from the waitlist to the beta as it irons out bugs.

https://www.techspot.com/news/98059-new-chatgpt-plugins-allow-bot-access-internet-make.html