Why it matters: Nvidia announced that DLSS 3 would come equipped with the ability to generate whole frames when it announced the RTX 4000-series and its new software stack. What it didn't say was that frame generation could be de-coupled from DLSS and even works with upscalers from the competition.

According to their write-up, the team at Igor's Lab was fooling around with Spider-Man Remastered when they noticed that the game's settings gave them some strange options: to switch frame generation on without enabling DLSS and to pair frame generation with AMD FSR and Intel XeSS.

Igor's Lab went straight to Nvidia to ask if those options were meant to be there. Nvidia confirmed that they were and explained that frame generation functioned separately from upscaling, but added that DLSS 3 had been optimized to work with it. Igor's Lab found an RTX 4090 and started testing to see what frame generation was capable of without DLSS 3.

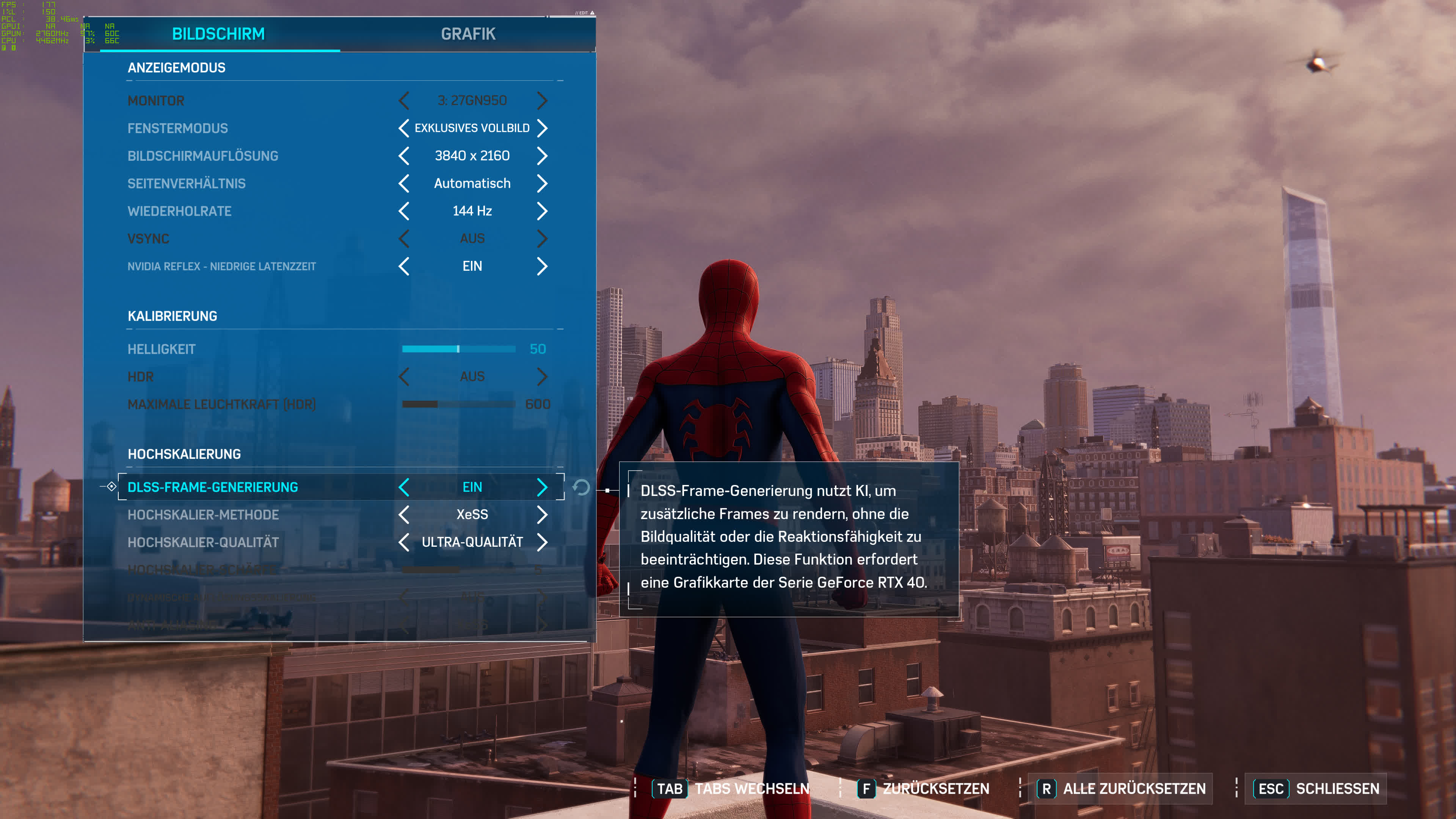

The settings menu (in German). Credit: Igor's Lab

Paired with an Intel i9-12900K and running Spider-Man Remastered with the maximum visual quality preset at 4K, the RTX 4090 reached a sensible 125 fps. With any one of DLSS, FSR, or XeSS enabled at its performance setting, the game was bottlenecked by the CPU at about 135 fps.

And then, with frame generation enabled but without any upscaling, the framerate jumped to 168 fps. It jumped again to about 220 fps when frame generation was paired with either DLSS or FSR (again, at performance settings). XeSS lagged a bit behind, only managing 204 fps when working in tandem with frame generation.
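
To put those numbers in perspective, here is a quick back-of-the-envelope sketch of the relative gains. The fps values are the approximate figures quoted above; the `uplift_percent` helper and the dictionary labels are made up for illustration and are not part of Igor's Lab's methodology.

```python
# Approximate frame rates (fps) quoted in the article for the RTX 4090
# in Spider-Man Remastered at 4K, maximum quality preset.
NATIVE_FPS = 125  # no upscaling, no frame generation

results = {
    "upscaler only (CPU-bound)": 135,
    "frame generation only": 168,
    "DLSS or FSR + frame generation": 220,
    "XeSS + frame generation": 204,
}


def uplift_percent(fps, baseline):
    """Relative gain of fps over baseline, in percent (illustrative helper)."""
    return (fps / baseline - 1.0) * 100.0


for label, fps in results.items():
    gain = uplift_percent(fps, NATIVE_FPS)
    print(f"{label}: {fps} fps ({gain:+.1f}% vs. native)")
```

By this rough math, frame generation alone is worth about a third more frames than native rendering, while the upscalers on their own add less than a tenth because the CPU becomes the limit.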

Igor's Lab also tested the impact of frame generation with other DLSS, FSR, and XeSS quality settings and the story stayed the same. Frame generation helped all three to reach much higher framerates but DLSS and FSR outdid XeSS by a wide margin, like they usually do on non-Intel hardware.

DLSS 3 at the performance setting with frame generation. Via Igor's Lab

XeSS was also struggling to match the visual quality of DLSS and FSR. In my opinion, the difference between DLSS and FSR was mostly a matter of taste. I preferred the slightly sharper look of FSR but DLSS seemed to have fewer artifacts. XeSS was blurrier and had some trouble managing antialiasing.

Igor's Lab has some great tools to help you inspect the difference between frames generated with each of the three upscalers. But, once again, the story here is pretty familiar: all three tools work the same as they usually do and treat the phony frames like engine-generated ones, so whatever you're already a fan of will probably be your favorite here, too.

It's great that Nvidia isn't locking frame generation to DLSS 3 and is giving consumers some form of choice, even if the feature is limited to the RTX 4000-series. There are suspicions that FSR 3 will have frame generation that works in a similar way to Nvidia's implementation and has much broader compatibility. It'll be interesting to see if that also works with DLSS and XeSS and which tool produces the best results when FSR 3 arrives next year.

https://www.techspot.com/news/96638-nvidia-dlss-frame-generation-works-surprisingly-well-amd.html