Something to look forward to: Nvidia might move up the release of its next-gen RTX 40 lineup to get a head start on AMD, which is also planning to launch new GPUs later this year. So far, leakers have teased massive generational performance gains and TGPs that could max out the new 16-pin PCIe 5.0 power connector, but no one has a clear idea of pricing yet.

According to reliable GPU leaker kopite7kimi, Nvidia might release its GeForce RTX 40 series in early Q3. While this is just a rumor at this point, it means we could see an announcement of the eagerly anticipated GPU lineup as early as July.

Such a launch wouldn't be without precedent. The GeForce RTX 20 Super lineup was made public on July 2, 2019, while the first RTX 30-series graphics cards were announced in early September 2020.

Q3 early

— kopite7kimi (@kopite7kimi) May 15, 2022

Nvidia's RTX 40 series is based on the Ada Lovelace architecture and will probably be built on TSMC's N4 process node. The company is expected to launch the high-end models first (the RTX 4090, RTX 4080, and RTX 4070), with more affordable cards following a few months later.

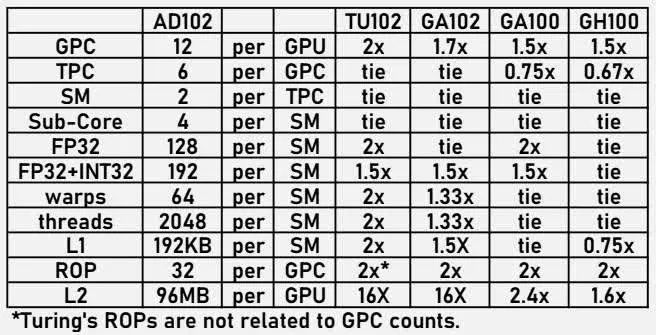

The tipster has also shared a table with the configuration of the AD102 GPU that's expected to power Nvidia's new flagship.

According to the leaks, the chip could feature 96MB of L2 cache, 16 times more than the 6MB found on its predecessor, GA102.
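As a quick sanity check on the "16 times" claim, the snippet below divides the rumored AD102 L2 size by the 6MB L2 of a full GA102 die (the RTX 30-series flagship chip). The 96MB figure is only the leaked number, not a confirmed spec, and `l2_ratio` is a hypothetical helper written for illustration.

```python
# Rumored AD102 L2 cache (from kopite7kimi's leaked table; unconfirmed)
AD102_L2_MB = 96
# L2 cache of a full GA102 die, which powers the RTX 3090-class cards
GA102_L2_MB = 6

def l2_ratio(new_mb: float, old_mb: float) -> float:
    """Hypothetical helper: how many times larger the new L2 is than the old one."""
    return new_mb / old_mb

print(l2_ratio(AD102_L2_MB, GA102_L2_MB))  # 16.0
```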

The reference RTX 4090 is also rumored to come with a whopping 600W TGP, possibly even more, while some third-party cards might include two 16-pin power connectors, allowing enthusiasts to push the power limit even higher for overclocking.
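For a rough sense of why a second connector matters, the sketch below adds up the maximum rated delivery of each power source: 75W from the PCIe x16 slot plus up to 600W per 16-pin (12VHPWR) connector. These are spec ceilings only, assumed here for illustration; a card's real power limit is set by its vendor's board design and firmware, and `max_board_power` is a hypothetical helper.

```python
# Spec ceilings, not actual card limits (those are set by board design/firmware)
PCIE_SLOT_W = 75         # maximum power drawn through a PCIe x16 slot
CONNECTOR_16PIN_W = 600  # maximum rating of one 16-pin (12VHPWR) connector

def max_board_power(num_16pin: int) -> int:
    """Hypothetical helper: theoretical power budget for a given connector count."""
    return PCIE_SLOT_W + num_16pin * CONNECTOR_16PIN_W

print(max_board_power(1))  # 675
print(max_board_power(2))  # 1275
```

With a single connector, a 600W TGP already sits close to the 675W theoretical ceiling, which is why dual-connector custom boards would leave more room for overclocking.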

As for the RTX 4080, it's believed to use the AD103 GPU paired with 16GB of GDDR6X memory and a 400W TGP. The RTX 4070 would feature an AD104 GPU, 12GB of GDDR6 memory, and a relatively tame 300W TGP.

https://www.techspot.com/news/94596-nvidia-geforce-rtx-40-series-might-get-announced.html