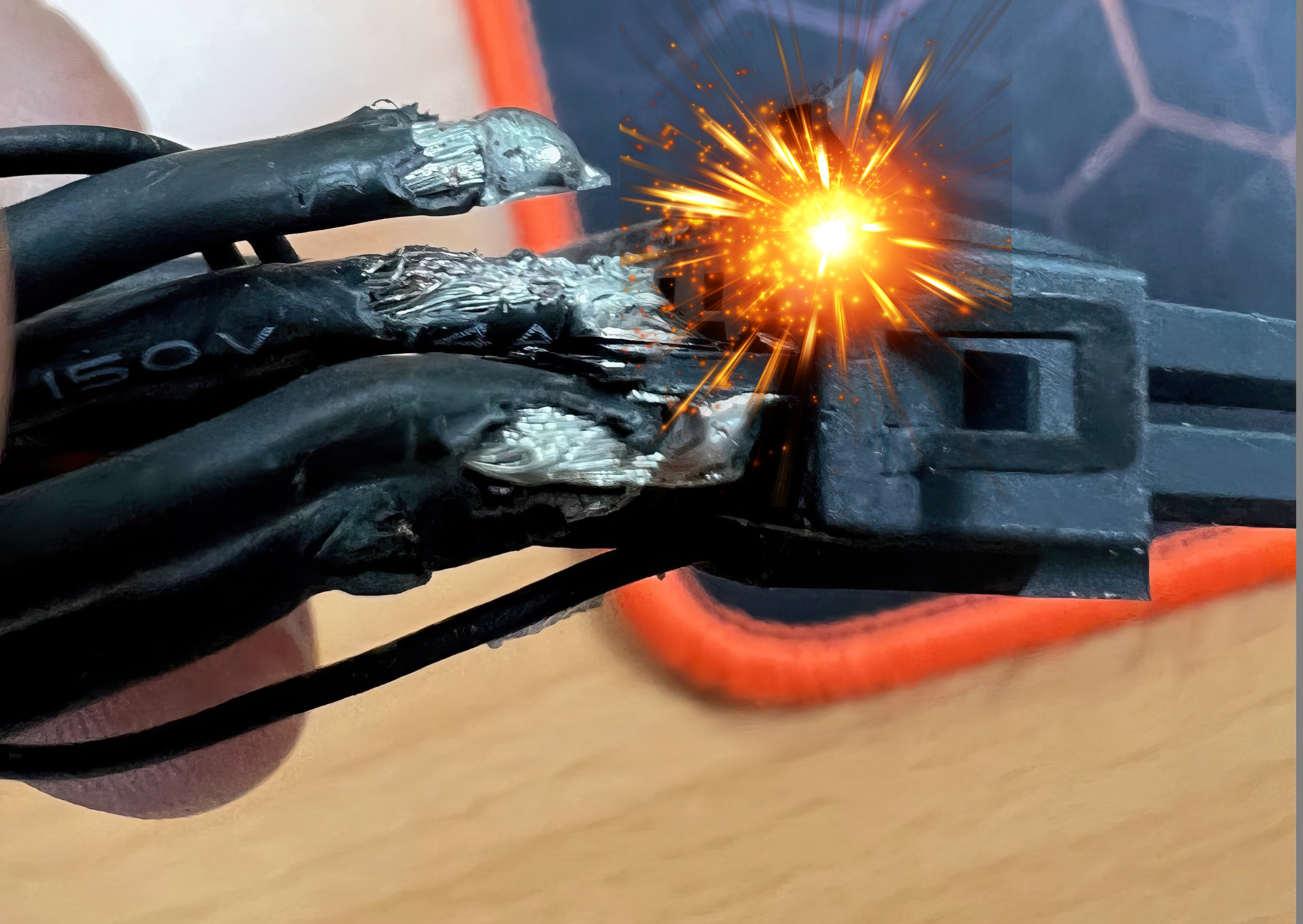

Some of the power is supplied via the PCI Express slot, up to a maximum of 75W. So, in theory, the slot and three 8-pin connectors can provide 525W (75W + 3 × 150W).

"Those looking to upgrade without replacing their ATX 2.0 PSUs can use adapters bundled with the cards connecting three 8-pins or four 8-pins to one 12VHPWR cable."

Question: Unless I'm very mistaken, doesn't a single 8-pin have a 150W limit? Wouldn't that imply a converter cable that uses just three 8-pin connectors would result in at least one of those 8-pins exceeding that limit (if total draw of the GPU is >450W)?

However, most cards don't go near the 75W limit on the PCIe slot, typically only hitting around 50W or so. That's why 4090 cards with TDPs higher than 450W (e.g. MSI's Suprim Liquid X that's 480W) come with a four 8-pin adapter.

Edit: Cards will distribute the current demand across the various supply channels. Below is my RTX 2080 Super at full power:

Here you can see that the PCIe slot is at 71% capacity, the 6-pin connector is at 83%, and the 8-pin is at 91% (board sensors are a little on the rough side, so treat these figures as estimates rather than exact values).

So if one assumed a similar slot load (around 50W) on a 450W card with three 8-pin connectors, then each 8-pin would be supplying roughly 133W, or about 89% of its 150W capacity.
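For anyone who wants to try other numbers, here is a rough Python sketch of the arithmetic above. It assumes the slot supplies a fixed amount (50W by default, per the typical figure mentioned earlier) and that the remainder is split evenly across the 8-pin connectors; real cards won't balance perfectly, and the function names are made up for illustration.

```python
SLOT_LIMIT_W = 75.0        # maximum power available from the PCIe slot
EIGHT_PIN_LIMIT_W = 150.0  # rated limit of a single 8-pin connector


def max_budget_w(n_eight_pins: int) -> float:
    """Theoretical ceiling: slot limit plus every 8-pin at its full rating."""
    return SLOT_LIMIT_W + n_eight_pins * EIGHT_PIN_LIMIT_W


def eight_pin_load(total_w: float, n_eight_pins: int, slot_w: float = 50.0):
    """Return (watts, fraction of rating) per 8-pin connector.

    Assumption: the slot carries slot_w and the rest is shared evenly.
    """
    if not 0.0 <= slot_w <= SLOT_LIMIT_W:
        raise ValueError("slot draw must be between 0 and 75 W")
    per_connector_w = (total_w - slot_w) / n_eight_pins
    return per_connector_w, per_connector_w / EIGHT_PIN_LIMIT_W


if __name__ == "__main__":
    print(f"Ceiling with three 8-pins: {max_budget_w(3):.0f}W")
    for total_w, n in [(450, 3), (480, 3), (480, 4)]:
        watts, frac = eight_pin_load(total_w, n)
        print(f"{total_w}W card, {n}x 8-pin: {watts:.1f}W each ({frac:.1%} of rating)")
```

Under these assumptions a 480W card on three 8-pins would still be inside the 150W rating per connector, but with much less headroom than on four.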
