Rumor mill: Just months after releasing it in January this year, Nvidia is rumored to have ceased production of the RTX 3080 12GB GPU. The move comes as the card's price falls to that of the 10GB variant and the more powerful RTX 3080 Ti receives a $200 price cut.

Twitter user @Zed_Wang (via PC Gamer) tweeted that Nvidia has decided to stop manufacturing the RTX 3080 12GB now that graphics card prices are falling and it needs to clear stock. A quick look on Newegg shows several of these 12GB variants priced the same as or, in some cases, less than the 10GB model.

It seems Nvidia is dropping the card with the extra VRAM and wider memory bus so it can shift its remaining surplus of RTX 3080 10GB cards before its RTX 4000 (Ada Lovelace) series lands later this year.

Another factor in Nvidia's decision is the RTX 3080 Ti's $200 MSRP cut (the cheapest listing on Newegg is $1,089), which leaves less room, price-wise, for the RTX 3080 12GB to sit between the Ti and 10GB models. From Nvidia's point of view, dropping the 12GB variant could also tempt consumers to pay extra for an RTX 3080 Ti rather than settle for an RTX 3080 10GB.

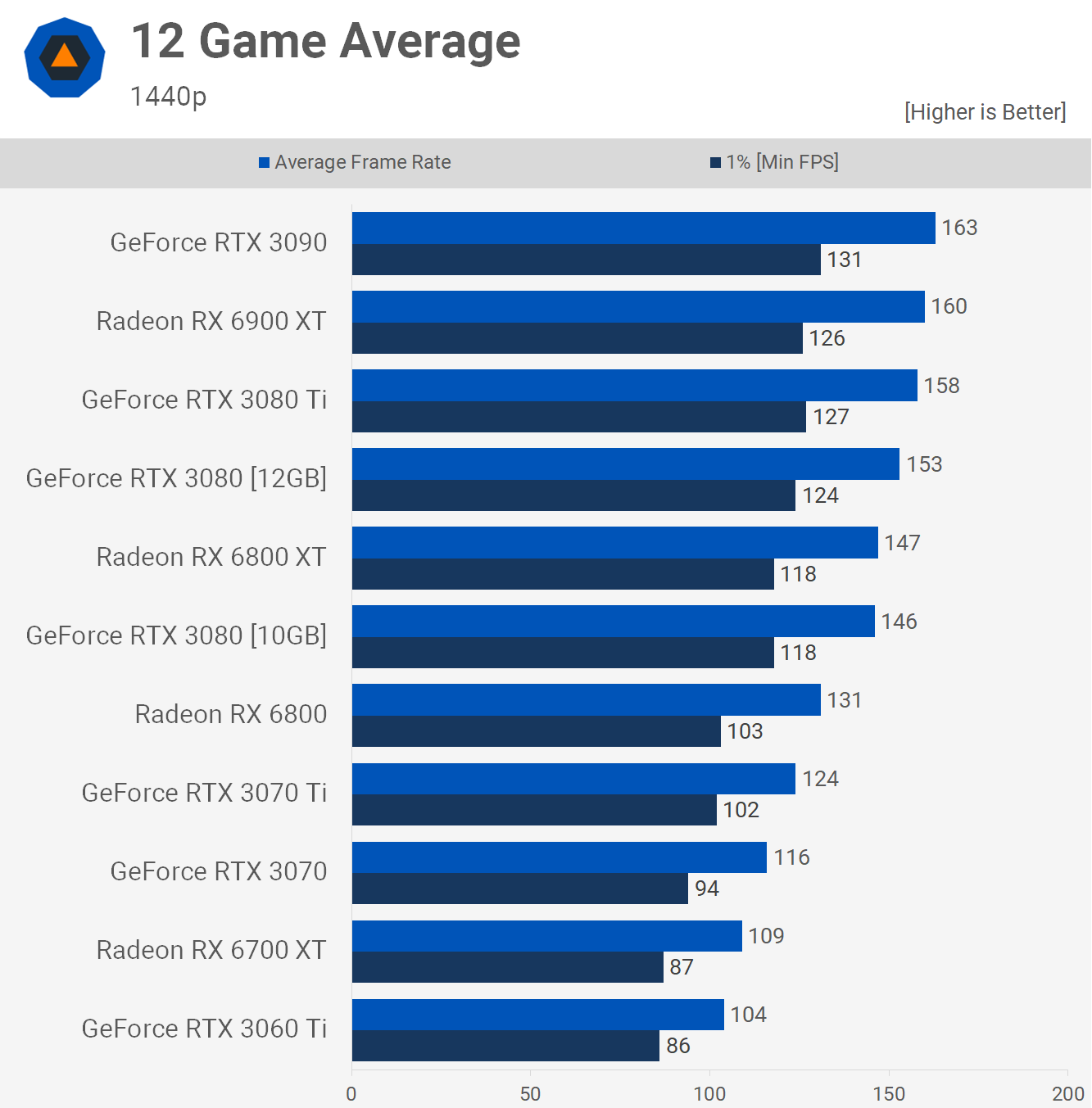

We weren't overly impressed with the RTX 3080 12GB. It was given a score of 75 in our review, where we noted that it allowed Nvidia to significantly boost profit margins on silicon that would have otherwise been sold as the original 10GB model. It also allowed board partners and/or distributors to cash in on the incredible demand the market was experiencing at the time. But finding one at the same price as the RTX 3080 10GB makes the newer card a much more appealing prospect, so you might want to grab one while you can.

https://www.techspot.com/news/95098-nvidia-might-killing-off-rtx-3080-12gb-now.html