Why it matters: During the GTC 2023 keynote, Nvidia CEO Jensen Huang highlighted a new generation of breakthroughs that aim to bring AI to every industry. In partnership with tech giants like Google, Microsoft, and Oracle, Nvidia is making advancements in AI training, deployment, semiconductors, software libraries, systems, and cloud services. Other announcements involved partners such as Adobe, AT&T, and vehicle maker BYD.

Huang noted numerous examples of Nvidia's ecosystem in action, including Microsoft 365 and Azure users gaining access to a platform for building virtual worlds, and Amazon using simulation capabilities to train autonomous warehouse robots. He also mentioned the rapid rise of generative AI services like ChatGPT, referring to its success as the "iPhone moment of AI."

Huang announced the H100 NVL, a new GPU based on Nvidia's Hopper architecture that works in a dual-GPU configuration over NVLink, to meet the growing demand for AI and large language model (LLM) inference. The GPU features a Transformer Engine designed for processing models like GPT, which Nvidia says reduces LLM processing costs. The company claims a server with four pairs of H100 NVL can be up to 10x faster at GPT-3 processing than an HGX A100.

With cloud computing becoming a $1 trillion industry, Nvidia has developed the Arm-based Grace CPU for AI and cloud workloads. The company claims 2x the performance of x86 processors at the same power envelope across major data center applications. The Grace Hopper superchip, in turn, combines the Grace CPU and a Hopper GPU for processing the giant datasets commonly found in AI databases and large language models.

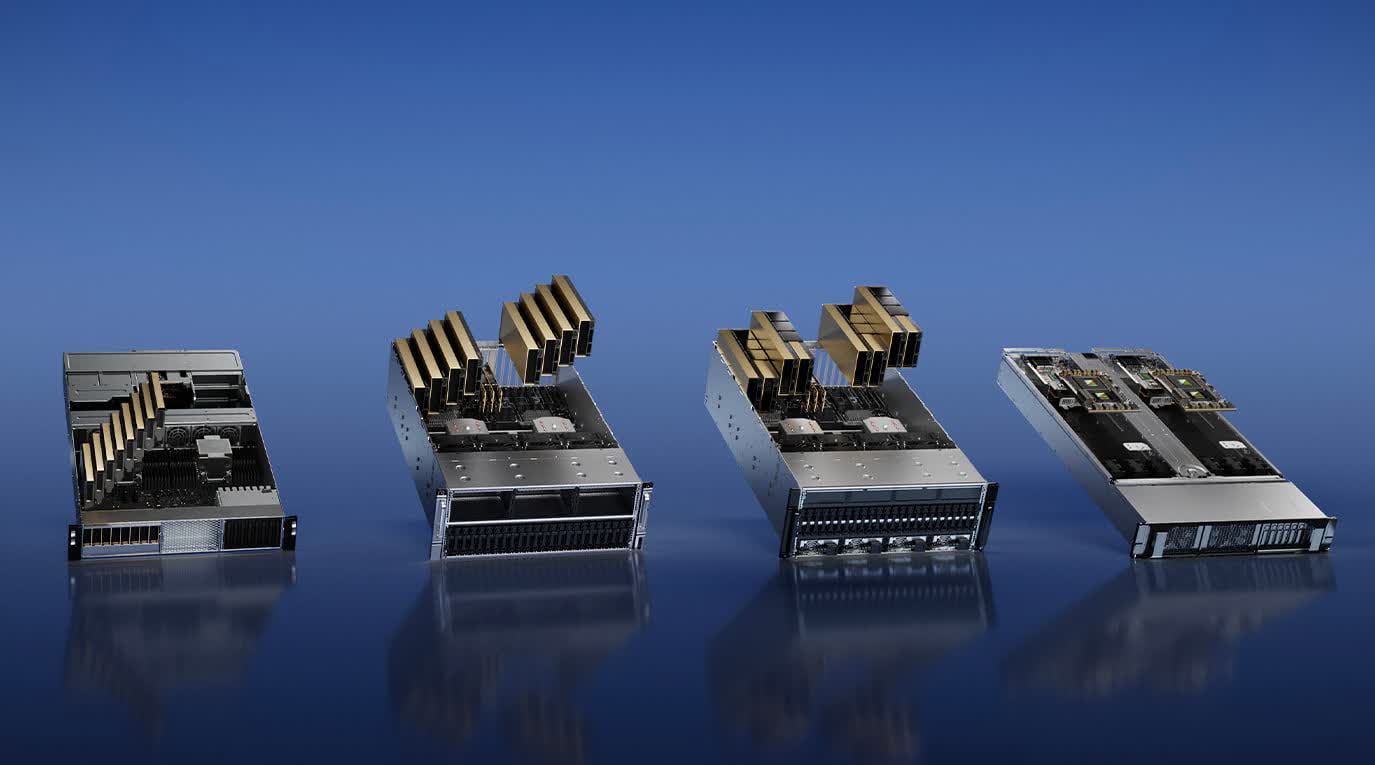

Furthermore, Huang claims that Nvidia's DGX H100 platform, featuring eight H100 GPUs, has become the blueprint for building AI infrastructure. Several major cloud providers, including Oracle Cloud, AWS, and Microsoft Azure, have announced plans to adopt H100 GPUs in their offerings. Server makers like Dell, Cisco, and Lenovo are making systems powered by H100 GPUs as well.

With generative AI models all the rage, Nvidia is also offering new hardware aimed at specific inference use cases. The new L4 Tensor Core GPU is a universal accelerator optimized for video, which Nvidia says offers 120 times better AI-powered video performance and 99% better energy efficiency than CPUs, while the L40 for Image Generation is optimized for graphics and AI-enabled 2D, video, and 3D image generation.

Nvidia's Omniverse is playing a part in the modernization of the auto industry as well. By 2030, the industry is expected to shift towards electric vehicles, new factories, and battery megafactories. Nvidia says Omniverse is being adopted by major auto brands for various tasks: Lotus uses it for virtual welding station assembly, Mercedes-Benz for assembly line planning and optimization, and Lucid Motors for building digital stores with accurate design data. BMW collaborates with idealworks on factory robot training and is planning an electric-vehicle factory entirely in Omniverse.

All in all, there were too many announcements and partnerships to mention, but arguably the last big milestone came from the manufacturing side. Nvidia announced a breakthrough in chip production speed and energy efficiency with the introduction of "cuLitho," a software library designed to accelerate computational lithography by up to 40 times.

Huang explained that cuLitho can drastically reduce the extensive calculations and data processing required in chip design and manufacturing, which would significantly lower electricity and resource consumption. TSMC and semiconductor equipment supplier ASML plan to incorporate cuLitho in their production processes.

https://www.techspot.com/news/98026-nvidia-ambitious-future-bring-ai-every-industry-where.html