Rumor mill: Nvidia's highly anticipated GeForce event is just around the corner. It's scheduled for September 1, and if the teases and leaks we've seen so far are accurate, it will finally bring the grand reveal of Nvidia's RTX 30-series GPUs. However, it seems we won't have to wait until the event to know what to expect from Nvidia's first three consumer-focused Ampere GPUs: their specifications have just been leaked.

Update (Sept 1): The official RTX 3000 series announcement is now live.

Update (Aug 31): There are less than 24 hours left before Nvidia officially debuts Ampere on consumer RTX graphics cards, but the leaks keep coming. Slides and photos from manufacturer Gainward have all but confirmed the rumored specs we published over the weekend.

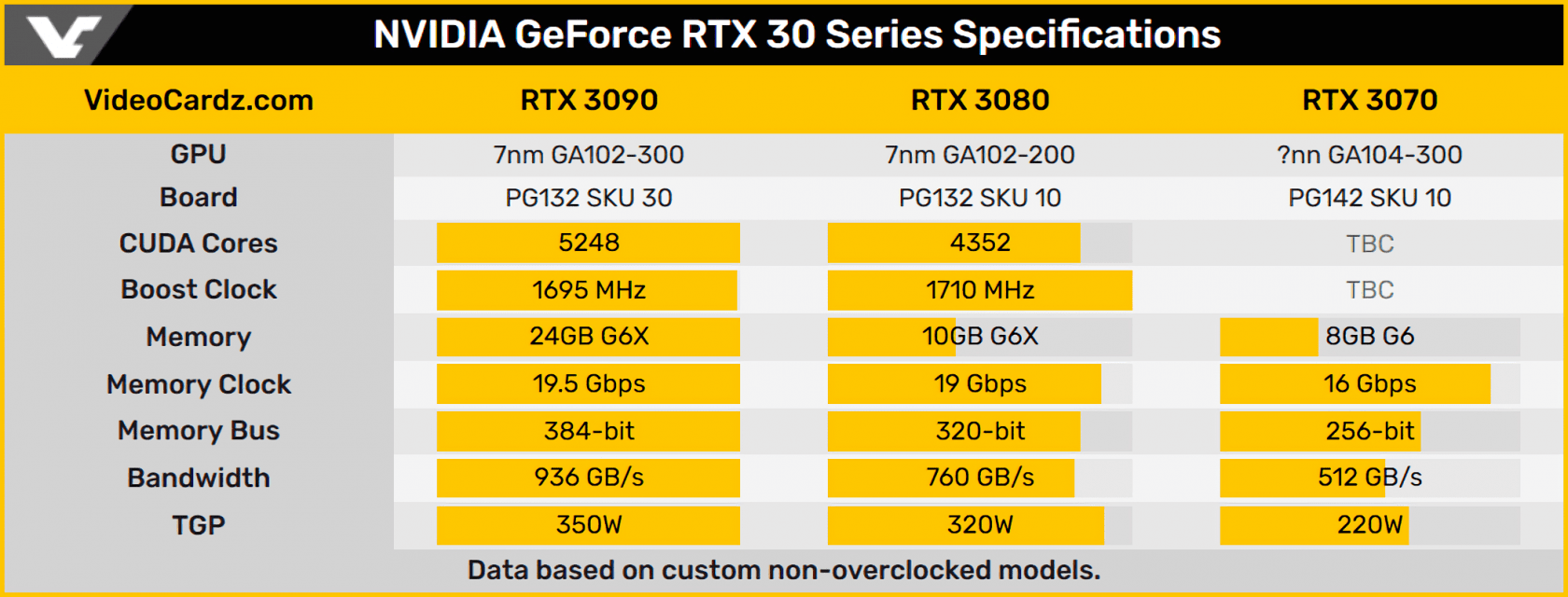

The Gainward material lists the same CUDA core counts (5248 cores on the RTX 3090, 4352 cores on the RTX 3080), clock speeds, memory capacity, and bandwidth as shown in the table below. It's likely Nvidia will only announce the high-end RTX 3090 and 3080 tomorrow, while the RTX 3070 will be unveiled at a later date.

The original leak, which comes courtesy of anonymous sources who spoke to Videocardz, claims Nvidia is preparing to launch three Ampere GPUs in September. These cards will be the GeForce RTX 3090, the GeForce RTX 3080, and the GeForce RTX 3070. According to the leak, each GPU has been built using 7nm fabrication tech, and they will reportedly support PCIe 4.0 out of the box.

The cards will introduce 2nd-gen RT cores and 3rd-gen Tensor Cores, according to Videocardz, and they will likely ship with support for DP 1.4a and HDMI 2.1 connectors.

In terms of nitty-gritty specifications, we'll start with the RTX 3070. If this leak is true, the card should ship with 8GB of GDDR6 VRAM clocked at 16 Gbps, across a 256-bit memory bus. The TGP should be about 220W, though the CUDA core count and boost clock speed are unknown at the moment.

Moving on to the RTX 3080, we're looking at 10GB of GDDR6X VRAM (clocked at 19 Gbps), a 320-bit memory bus, 4352 CUDA cores, a boost clock of 1710 MHz, and a TGP of 320W. Videocardz says a second variant of the 3080 with twice the memory (20GB in total) is also in development, but it was unable to determine when the card might release.

The final leaked SKU, the RTX 3090, is an absolute monster. It's expected to have 5248 CUDA cores, a boost clock of 1695 MHz, a whopping 24GB of GDDR6X VRAM clocked at 19.5 Gbps, a 384-bit memory bus, and a TGP of 350W. Memory bandwidth for the 3070, 3080, and 3090 should be 512 GB/s, 760 GB/s, and 936 GB/s, respectively.
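For readers who want to sanity-check those bandwidth figures, they follow from the leaked bus widths and memory speeds: peak bandwidth is the bus width in bits multiplied by the per-pin data rate in Gbps, divided by 8 bits per byte. Here is a minimal Python sketch of that arithmetic. The function and variable names are our own, and the inputs are the unconfirmed leaked numbers quoted above, not official specifications.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte.
    """
    return bus_width_bits * data_rate_gbps / 8


# Leaked, unconfirmed figures from the article:
# (memory bus width in bits, memory data rate in Gbps)
leaked_specs = {
    "RTX 3070": (256, 16.0),
    "RTX 3080": (320, 19.0),
    "RTX 3090": (384, 19.5),
}

bandwidths = {
    name: memory_bandwidth_gbs(bus_width, data_rate)
    for name, (bus_width, data_rate) in leaked_specs.items()
}

for name, gbs in bandwidths.items():
    print(f"{name}: {gbs:.0f} GB/s")
# RTX 3070: 512 GB/s
# RTX 3080: 760 GB/s
# RTX 3090: 936 GB/s
```

The results match the 512 GB/s, 760 GB/s, and 936 GB/s quoted in the leak, which at least suggests the rumored bus widths and memory speeds are internally consistent.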

Strangely, Videocardz says the 3080 and 3090 will both require dual 8-pin power connectors instead of a single 12-pin connector. This seems to be at odds with the Ampere engineering video we covered a few days ago, in which Nvidia discussed its decision to transition toward 12-pin connectors for modern GPUs.

That inconsistency aside, the rest of this leak sounds fairly credible to us. Of course, we still recommend taking this information with a grain of salt -- rumors are rumors, and it's always best to wait for official confirmation before getting too invested in pre-launch spec details.

https://www.techspot.com/news/86559-nvidia-geforce-rtx-3090-3080-3070-specs-have.html