In context: Elon Musk definitely seems to be a love-him-or-hate-him character. One of those firmly in the latter camp is Dan O'Dowd, founder of the Dawn Project and CEO of Green Hills Software, who has been running a public campaign slamming Tesla's Full Self-Driving (FSD) software as dangerous. The latest jab is a viral ad showing the vehicles running over child-sized mannequins while in FSD mode. It's resulted in a cease-and-desist letter from the automaker, which O'Dowd has responded to by calling Musk a "crybaby."

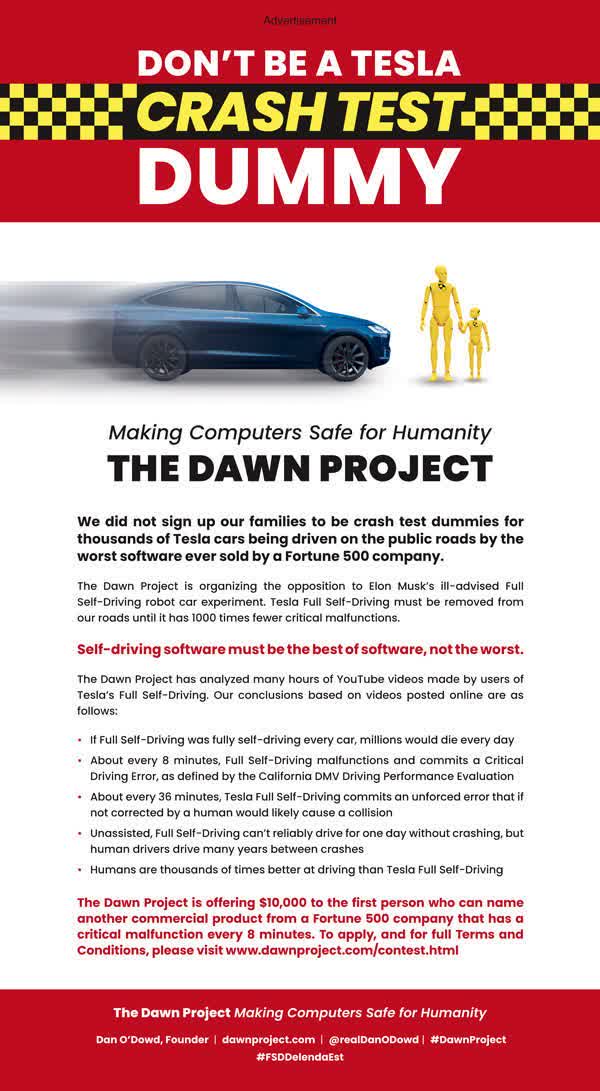

The Dawn Project is an organization launched last year calling for software in critical computer-controlled systems to be replaced with unhackable alternatives that never fail. It took out a full-page ad criticizing Tesla's FSD beta program earlier in 2022, offering $10,000 to the first person who could name "another commercial product from a Fortune 500 company that has a critical malfunction every 8 minutes."

The Dawn Project continued its campaign earlier this month by releasing a video called "Test Track - The Dangers of Tesla's Full Self-Driving Software." It claims to show a Tesla in FSD mode running over child-size mannequins wearing children's clothes at 25 mph. O'Dowd says FSD is the worst self-driving software he's ever seen and calls for Congress to shut it down.

Tesla took action against the ad. It sent a cease-and-desist letter on August 11 to O'Dowd demanding the video, which it calls defamatory and misrepresentative, be removed. It also asked O'Dowd to issue a public retraction and send all material from the video to Tesla within 24 hours of receiving the letter.

O'Dowd responded to the letter in a lengthy blog post calling Musk "just another crybaby hiding behind his lawyer's skirt."

Master Scammer Musk threatens to sue me over a tv ad.

Turns out Mr Free Speech Absolutist is just another crybaby hiding behind his lawyer's skirt.

Guess I hurt his wittle feewings.

Now the coward @elonmusk lashes out, sending pawns to fight his fight. https://t.co/Sbawi2rmDI pic.twitter.com/VZ7cMQR4AO

— Dan O'Dowd (@RealDanODowd) August 25, 2022

Plenty of people and publications have pointed out what appear to be flaws in O'Dowd's video; Electrek highlights several apparent inconsistencies.

Green Hills Software develops operating systems and programming tools for embedded systems; its real-time OS and other safety software are used in BMW's iX vehicles and others from various automakers. The company is also developing its own self-driving software.

Musk tweeted a reply to O'Dowd's blog post consisting of a bat emoji, a poop emoji, and the word "crazy."

🦇 💩 crazy

— Elon Musk (@elonmusk) August 25, 2022

Last month, the California Department of Motor Vehicles filed a complaint against Tesla's Autopilot and self-driving claims, alleging the company made "untrue or misleading" statements in advertisements on its website relating to its driver assistance programs.

https://www.techspot.com/news/95771-tesla-sends-cease-desist-ceo-behind-video-cars.html